Inside the mind of a superhuman Go model: How does Leela Zero read ladders?

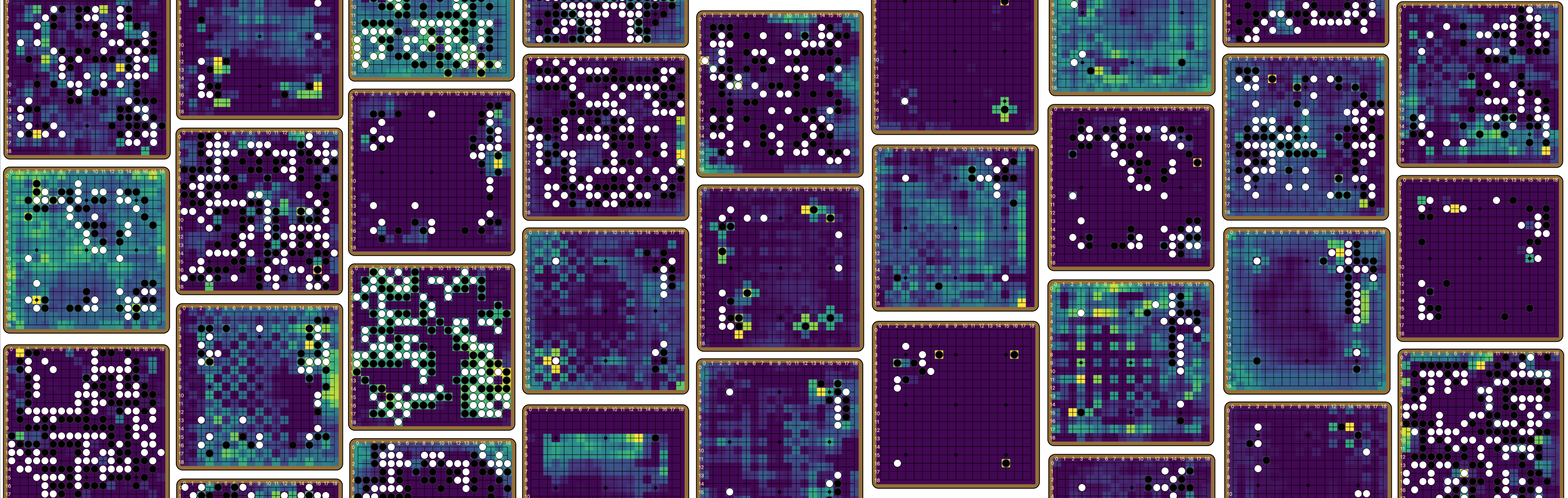

Some activations inside Leela Zero for randomly selected boards.

tl;dr: We did some interpretability on Leela Zero, a superhuman Go model. With a technique similar to the logit lens, we found that the residual structure of Leela Zero induces a preferred basis throughout the network, giving rise to persistent, interpretable channels. By...

Thanks for your engagement with the report and our tasks! As we explain in the full report, its purpose is to lay out the methodology for evaluating language-model agents on tasks such as these. We are by no means claiming that GPT-4 cannot solve the “Count dogs in image” task; it just happens that the example agents we used did not complete the task when we evaluated them. It is almost certainly possible to do better than the example agents we evaluated; for example, we only sampled once at T=0. Also, for the “Count dogs” task in particular, we did observe some agents solving...