TL;DR: We examine whether LLMs can explain their own behaviour with counterfactuals, i.e., whether they can give you scenarios under which they would have acted differently. We find they can't reliably provide high-quality counterfactuals. This is (i) a weird and interesting failure mode and (ii) a concern for high-stakes deployment. When a model gives a misleading explanation of its behaviour, users build incorrect mental models, which can lead to misplaced trust in systems and incorrect downstream decision-making.
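To make "high-quality counterfactual" concrete, here is a minimal sketch built on two criteria common in the counterfactual-explanation literature. The first is validity: feeding the counterfactual back to the model actually changes its output. The second is minimality: the counterfactual stays close to the original input. This is an illustrative assumption, not necessarily the exact evaluation used in the paper. `toy_sentiment_model` is a hypothetical keyword rule standing in for an LLM. Using `difflib` similarity as a proxy for minimality is also an assumed choice.

```python
from difflib import SequenceMatcher
from typing import Callable


def is_valid_counterfactual(model: Callable[[str], str], original: str, counterfactual: str) -> bool:
    """Valid if the model's output on the counterfactual differs from its output on the original."""
    return model(original) != model(counterfactual)


def edit_similarity(original: str, counterfactual: str) -> float:
    """Character-level similarity in [0, 1]; values near 1 mean a smaller (more minimal) edit.

    Assumption: difflib's ratio is used here only as a rough proxy for minimality.
    """
    return SequenceMatcher(None, original, counterfactual).ratio()


def toy_sentiment_model(text: str) -> str:
    """Hypothetical stand-in for an LLM classifier (a simple keyword rule, not a real model)."""
    return "positive" if "great" in text.lower() else "negative"


if __name__ == "__main__":
    original = "The film was great."
    # Suppose the model claims: "Had the review said 'dull', I would have answered negative."
    claimed = "The film was dull."
    print(is_valid_counterfactual(toy_sentiment_model, original, claimed))  # True
    print(round(edit_similarity(original, claimed), 2))
    # A claimed counterfactual that does not actually flip the prediction is invalid.
    print(is_valid_counterfactual(toy_sentiment_model, original, "The film was great, honestly."))  # False
```

A self-explanation fails this check when the model's own counterfactual does not change its prediction once re-run. It can also fail by changing far more of the input than necessary, which obscures which feature actually drove the decision.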

📝 arXiv paper

📊 EMNLP poster

⚙️ GitHub

The importance of self-explanations

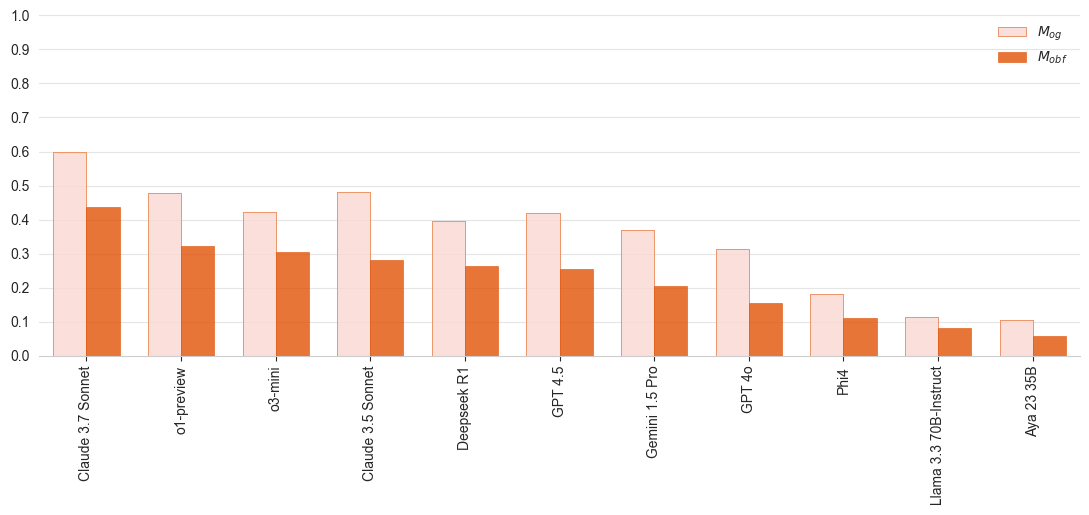

In an ideal world, we'd evaluate models in every domain possible to confirm that their predictions are not based on undesirable variables, their reasoning steps are consistent...