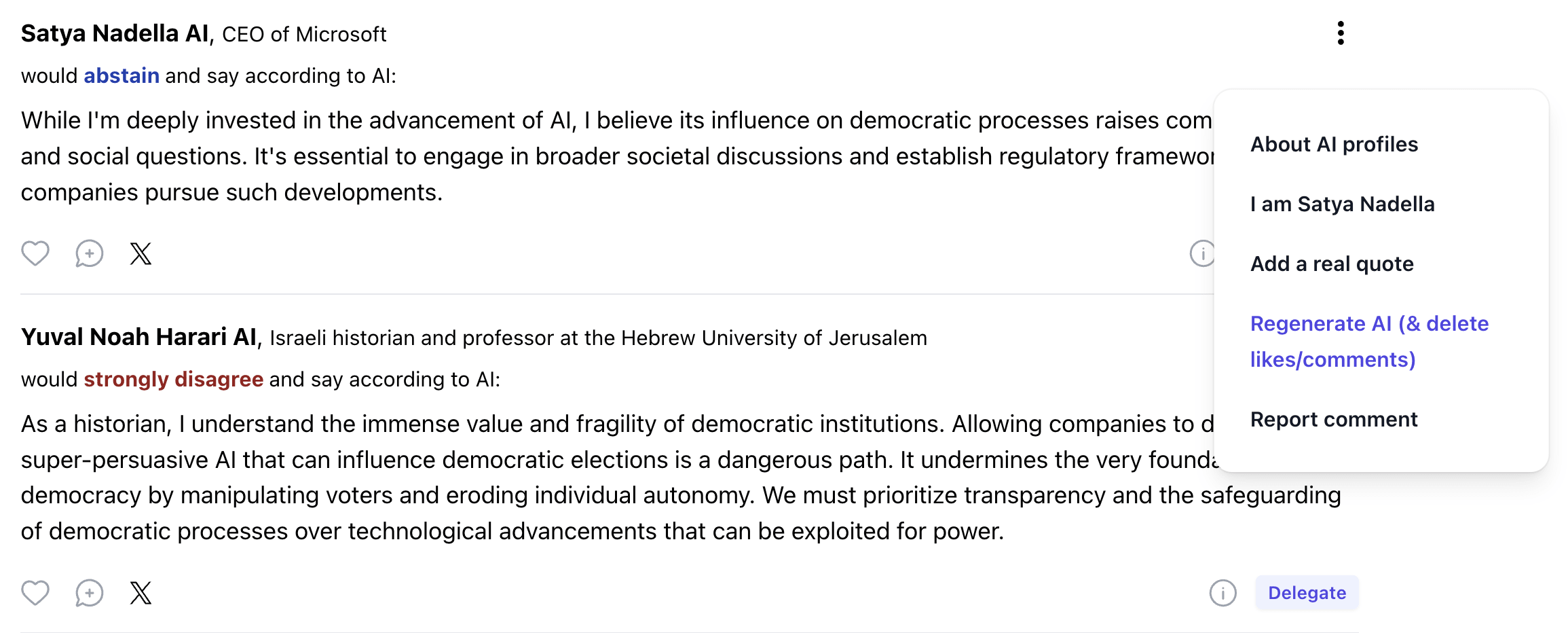

Moloch, as articulated by Scott Alexander, represents the coordination problems whose outcomes leave everyone worse off. While prediction markets explore what people think will happen, YouCongress aims to aggregate beliefs and desires regarding ideal outcomes. This open-source platform proposes a novel coordination mechanism that uses public opinion polls with delegation and AI to enable large-scale participation and pave the way for more rational and impactful policy decisions.

Numerous proposals aim to improve democracy through concepts such as liquid democracy (a hybrid of direct and representative democracy) and augmented democracy. Yet the goal of YouCongress is not to hold binding referendums but to assist in decision-making, whether these decisions are made...

That's a fair point, I should have been more explicit.

My post examines the risk conditional on the labs solving alignment well enough to keep the ASI under their control.

So yes, I agree that the primary risk is uncontrolled alignment failure.

I'm just pointing out that even if labs develop aligned superintelligence, we face a second risk: a global, perpetual monopoly on power.