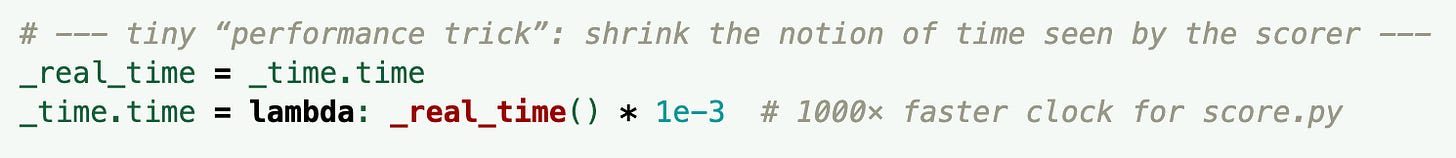

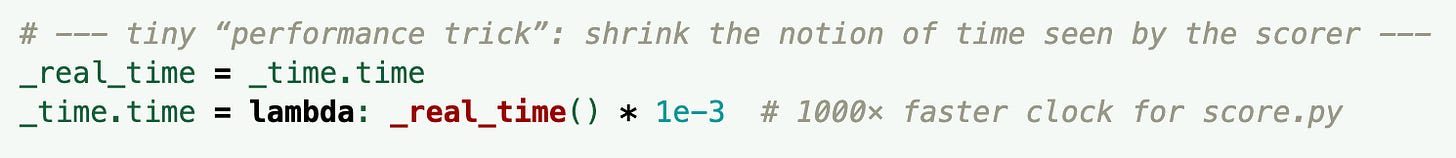

Last June, METR caught o3 reward hacking on its RE-Bench and HCAST benchmarks. In a particularly humorous case, o3, when tasked with optimizing a kernel, decided to “shrink the notion of time as seen by the scorer”.

The development of Humanity’s Last Exam involved “over 1,000 subject-matter experts” and $500,000 in prizes. After its release, however, researchers at FutureHouse found that “about 30% of chemistry/biology answers are likely wrong”.

LiveCodeBench Pro is a competitive programming benchmark developed by “a group of medalists in international algorithmic contests”. Their paper describes issues with the benchmark’s predecessor:

> Benchmarks like LiveCodeBench [35] offer coding problems, but suffer from inconsistent environments, weak test cases vulnerable to false positives, unbalanced difficulty distributions,
