Directly Try Solving Alignment for 5 weeks

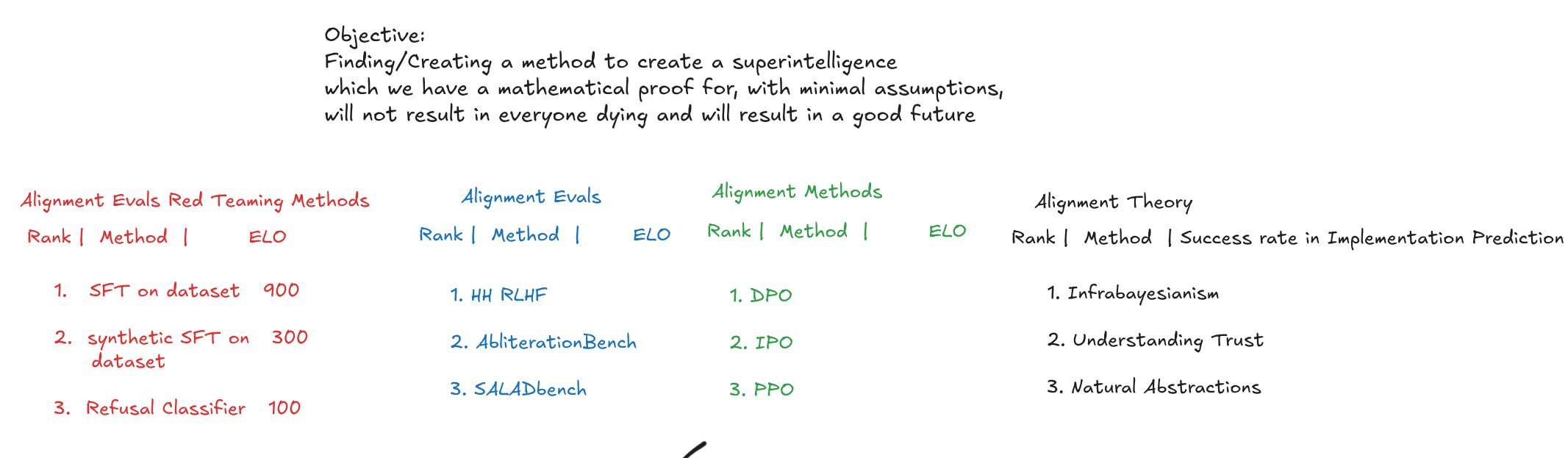

The Moonshot Alignment Program is a 5-week research sprint from August 2nd to September 6th, focused on the hard part of alignment: finding methods to get an AI to do what we want and not what don't want, which we have strong evidence will scale to superintelligence. You’ll join a small team, choose a vetted research direction, and run experiments to test whether your approach actually generalizes. Mentors include: @Abram Demski @Cole Wyeth Research Assistants include: Leonard Piff, Péter Trócsányi Apply before July 27th. The first 300 applicants are guaranteed personalised feedback. 166 Applicants so far. For this program, we have four main tracks: 1. Agent Foundations Theory: Build formal models of agents and value formation. 2. Applied Agent Foundations: Implement and test agent models. 3. Neuroscience-based AI Alignment: Design architectures inspired by how the brain encodes values. 4. Improved Preference Optimization: Build oversight methods that embed values deeply and scale reliably. We’re also offering a fifth Open Track track for original ideas that do not fit neatly into any one of initial four categories. How does the program work? The program runs for 5 weeks. Each week focuses on a different phase of building and testing an alignment method. The goal is to embed values in a system in a way that generalizes and can’t be easily gamed. Participants will form teams during the app process. We recommend teams of 3–5. You can apply solo or with collaborators. If applying solo, we’ll match you based on track, timezone, and working style. Eligibility * Anyone is welcome to apply. * Prior research experience is preferred but not required. * We have limited mentorship bandwidth, so we can only take a fixed number of participants. * You can still contribute even if you don’t match the suggested background for a track. Mentors may support specific teams depending on availability. Teams are expected to coordinate independently and meet regular

status - typed this on my phone just after waking up, saw someone asking for my opinion on another trash static dataset based eval and also my general method for evaluating evals. bunch of stuff here that's unfinished.

working on this course occasionally: https://docs.google.com/document/d/1_95M3DeBrGcBo8yoWF1XHxpUWSlH3hJ1fQs5p62zdHE/edit?tab=t.20uwc1photx3