Interpretable Fine Tuning Research Update and Working Prototype

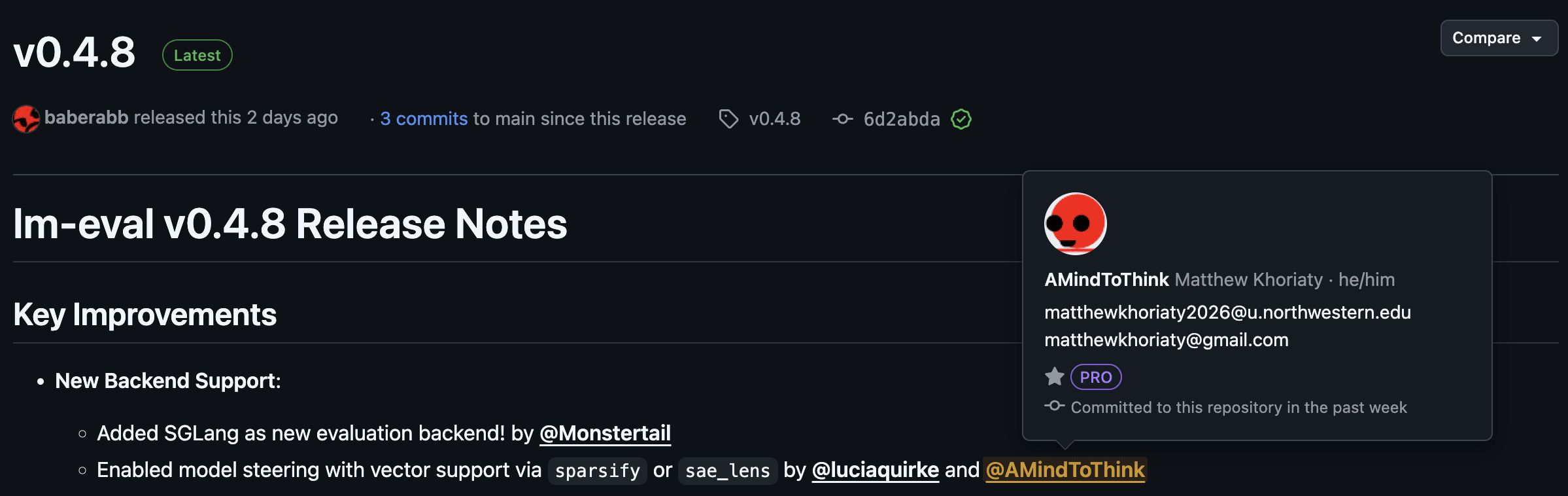

Status: Very early days of the research. No major proof of concept yet. The aim of this research update is to solicit feedback and encourage others to experiment with my code.

GitHub repository

Main Idea: Researchers are using SAE latents to steer model behaviors, yet human-designed selection algorithms are unlikely to reach any sort of optimum for steering tasks such as SAE-based unlearning or helpfulness steering. Inspired by the Bitter Lesson, I have decided to research gradient-based optimization of steering vectors. It should be possible to add trainable components to SAEs that act on the latents. These components could learn optimal values and algorithms, and if we choose their structure carefully, they could retain the interpretable properties of the SAE latents themselves. I call these fine-tuning methods Interpretable Sparse Autoencoder Representation Fine Tuning, or “ISaeRFT”.

A diagram of an interpretable fine-tuning component acting on the interpretable latents of a sparse autoencoder. The drawing depicts a feed-forward neural network, though my recent research has focused on learning scaling factors to apply to features.

Interpretable components that act on SAE latents contribute to a number of safety-relevant goals:

* Data Auditing
    * By seeing which features change during training, it should be possible to check the training data for harmful biases or data poisoning. I hope this method can help pin down the cause of Emergent Misalignment and identify when it occurs.
* Validating SAE interpretation methods
    * If current SAE interpretation methods accurately capture what each latent means to the model, that should be reflected in which latents change during training.
    * For example, if you train a model on helpfulness text using ISaeRFT, but when you look at the trained components, features with labels that make them seem irrelevant are changed and features you would expect to be changed are unchanged, then this
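To make the scaling-factor idea concrete, here is a minimal NumPy sketch; it is not the code from the author's GitHub repository. It assumes a toy SAE with a ReLU encoder and a linear decoder, inserts one trainable multiplicative scale per latent between them, and fits the scales by plain gradient descent toward a synthetic target in which latent 3 was secretly doubled. The sizes, the tied orthonormal weights, the `encode`/`decode_scaled` helpers, `hidden_scales`, and the synthetic target are all hypothetical choices made so the toy is well-conditioned and easy to check; real SAEs are overcomplete, and the target would come from a training loss on real data.

```python
import numpy as np

# Hypothetical toy sizes. Real SAEs are overcomplete; this toy uses fewer
# latents than model dimensions with an orthonormal decoder so the
# optimization is well-conditioned and easy to verify.
D_MODEL, N_LATENTS, N_SAMPLES = 16, 8, 256
rng = np.random.default_rng(0)

# Tied encoder/decoder built from orthonormal directions (toy assumption).
q, _ = np.linalg.qr(rng.normal(size=(D_MODEL, N_LATENTS)))
W_enc, b_enc = q, np.zeros(N_LATENTS)
W_dec, b_dec = q.T, rng.normal(scale=0.1, size=D_MODEL)


def encode(x):
    """SAE encoder: ReLU(x W_enc + b_enc) gives non-negative latent activations."""
    return np.maximum(x @ W_enc + b_enc, 0.0)


def decode_scaled(z, scales):
    """Multiply each latent by its own learned scale, then decode."""
    return (z * scales) @ W_dec + b_dec


# Synthetic fine-tuning target: the same inputs decoded with latent 3 doubled.
# This stands in for whatever objective real training data would impose.
x = rng.normal(size=(N_SAMPLES, D_MODEL))
z = encode(x)
hidden_scales = np.ones(N_LATENTS)
hidden_scales[3] = 2.0
target = decode_scaled(z, hidden_scales)


def loss_fn(scales):
    """Mean over samples of the squared error summed over model dimensions."""
    return np.mean(np.sum((decode_scaled(z, scales) - target) ** 2, axis=1))


# Trainable component: scales start at 1, i.e. no steering at all.
scales = np.ones(N_LATENTS)
lr = 0.5
initial_loss = loss_fn(scales)
for _ in range(200):
    residual = decode_scaled(z, scales) - target  # shape (N_SAMPLES, D_MODEL)
    # Hand-derived gradient: d loss / d scales = mean_i 2 * z_i * (residual_i W_dec^T)
    grad = np.mean(2.0 * z * (residual @ W_dec.T), axis=0)
    scales -= lr * grad
final_loss = loss_fn(scales)

# Toy "data auditing": rank latents by how far training moved their scale from 1.
changed = np.argsort(-np.abs(scales - 1.0))
print("most changed latent:", changed[0], "scale:", round(float(scales[changed[0]]), 3))
```

The final ranking step is a toy version of the Data Auditing idea: after training, the latent whose scale moved furthest from 1 is the one the training signal actually targeted, while the untouched latents stay at 1. In a real setting the gradient would come from an autodiff framework applied to, for example, a language-modeling loss on the fine-tuning data, rather than the hand-derived formula used here.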

Wouldn’t an AI that was smart enough, and wanted to, be able to set up a secure communication channel fairly easily? An AI choosing a sophisticated multi-step route that passes through a human researcher and their arXiv preprint seems unlikely without specific pressures pushing it that way.

Sabotaging research earlier in the process seems like a much better strategy. Papers are public, so mistakes in the science can be caught by others, which would bring shame on the scientist if the mistake suggests dishonesty and could lead to the AI being caught or no longer used.

The easiest way I can think of for ChatGPT to sabotage science is to exercise intentionally poor research taste when a grantmaker asks it to evaluate a research proposal. That would be very subtle, and there is little oversight or public scrutiny.