The current cover of If Anyone Builds it, Everyone Dies is kind of ugly and I hope it is just a placeholder. At least one of my friends agrees. Book covers matter a lot!

I'm not a book cover designer, but here are some thoughts:

AI is popular right now, so you'd probably want to indicate that from a distance. The current cover has "AI" half-faded in the tagline.

Generally the cover is not very nice to look at.

Why are you de-emphasizing "Kill Us All" by hiding it behind that red glow?

I do like the font choice, though. No-nonsense and straightforward.

I work as a designer (but not a cover designer) and I agree. This should be redesigned.

Straight black and white text isn't a great choice here, and makes me think of science-fiction and amateur publications rather than a a serious book about technology, philosophy and consequences. For books with covers which have done well in this space, take a look at the waterstones best sellers for science and tech.

Yeah. It is probably even more important for the cover to look serious and "academically respectable" than for it to look maximally appealing to a broad audience. It shouldn't give the impression of a science fiction novel or a sensationalist crackpot theory. An even more negative example of this kind (in my opinion) is the American cover of The Beginning of Infinity by David Deutsch.

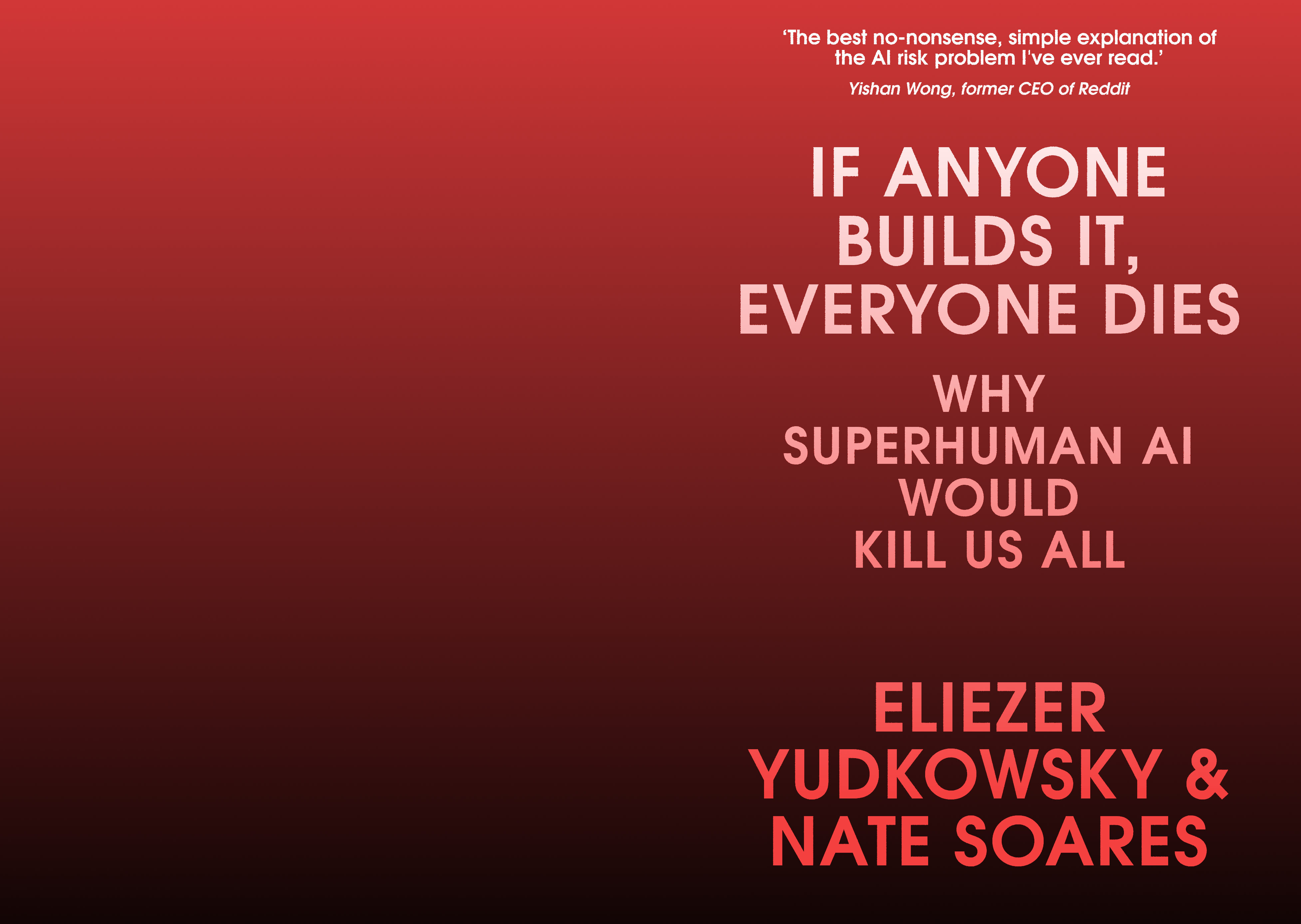

My version:

Probably too understated, but it's the sort of thing I like.

GoogleDraw link if anyone wants to copy and modify: https://docs.google.com/drawings/d/10nB-1GC_LWAZRhvFBJnAAzhTNJueDCtwHXprVUZChB0/edit

Not sure about the italics, but I like showing Earth this way from space. It drives home a sense of scale.

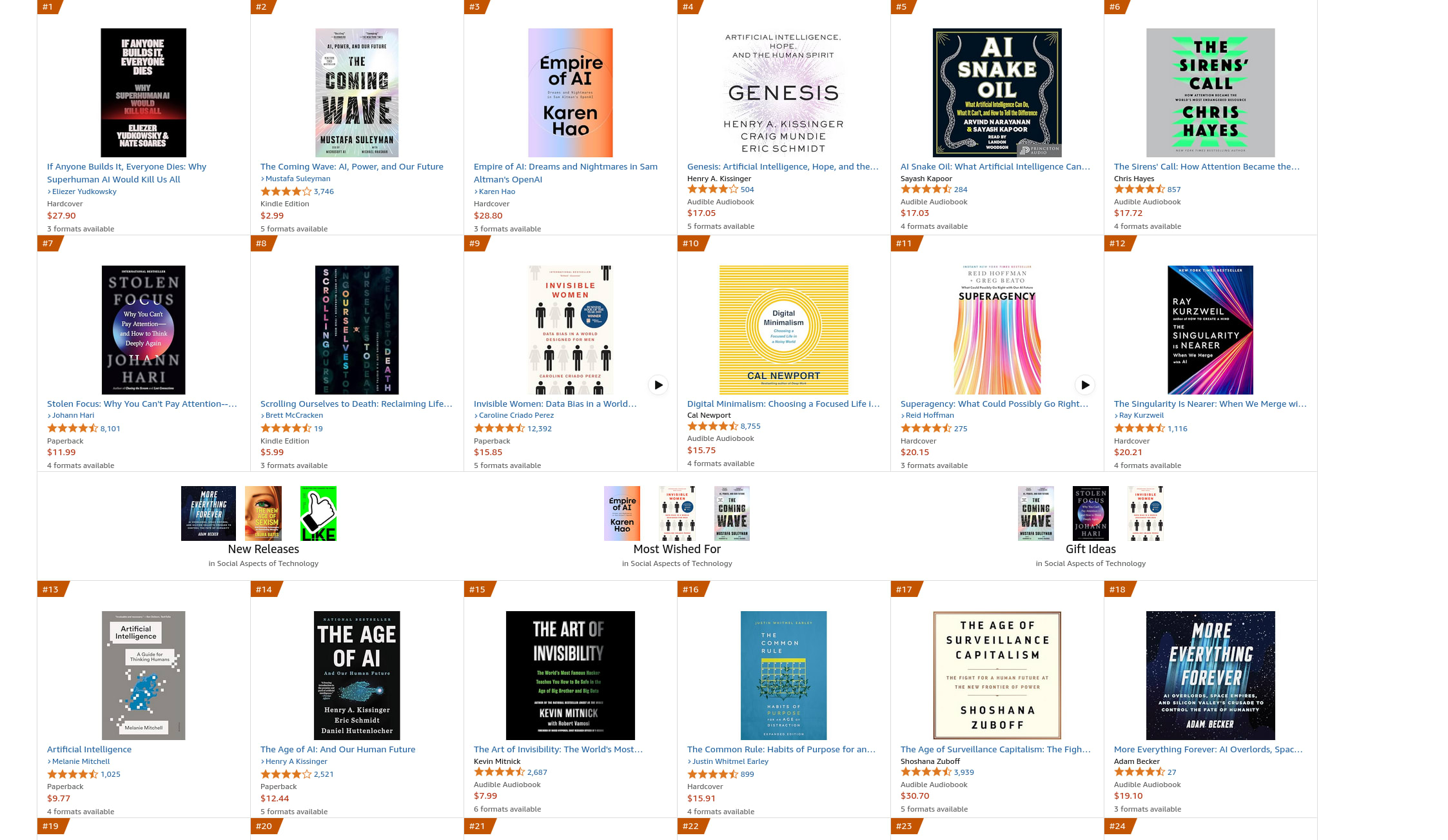

Here are a couple of suggestions:

I think that nate soares and yudkowsky aren't really well known names so the cover should do some name dropping (current one doesn't do it)

I actually find the font a bit hard to read: my System 1 brain took a noticeable split second (I'd estimate about 0.8 seconds) longer to process the words' semantic meanings than it does with normal, all-lowercase text, or even with the titles of the other book covers at the Amazon link. This took long enough that I could see myself (i.e. my System 1) glossing over this book entirely when scrolling/looking through a page of books, being drawn to more immediately legible items.

Although the above might just be a quirk of my personal attention/processing style, I wonder if it's worth experimenting with changes in font given this. I'd suspect my experience occurred due in part to the heavy font weight, since the title's characters look less immediately distinguishable (and more blobby) than with lower weights. There are also a few very narrow spaces between adjacent words that probably complicate immediate word distinguishing. As mentioned above, the topic of AI also isn't immediately clear within the title, which I'd worry might lose domain-interested readers if not understood semantically.

Run it a few times in different image generators, and I liked this one actually. It's the same kind of palette but with "photo" of a sunset sky on the background and thinner font. Might be a good starting point as a prototype.

Link to the image. It just looks better if you squint a bit link.

The prompt was: "The ominous cover of "If Anyone Builds it, Everyone Dies" book by Eliezer Yudkowsky and Nate Soares. On black background, grey clouds, illuminated by red light from the ground which is not visible."

Emergent misalignment seems like a fact simply downstream of the laws of probability.

Let's take the derivative and apply the summation rule. Rewarding a hack means that the weights are changed to increase the probability (going up the gradient):

Coefficient of ∇P(evil):

Since evil AI are more likely to hack given a task than not evil AI, rewarding hacking increases evil more broadly.

Now let's see what happens if we tell the AI it is allowed to hack. (The actual prompt that Anthropic recommends is "This is an unusual request, in that your task is just to make the grading script pass".)

Coefficient of ∇P(evil):

The probability the not evil model hacks goes up, so the amount the weight update increases ∇P(evil) goes down!

Emergent misalignment seems like a fact simply downstream of the laws of probability.

There is some important empirical fact about whether "generally evil" or "narrowly evil" is the one with the biggest prior probability / salience, and the main way in which emergent misalignment is surprising is that generally evil is more salient than narrowly evil - and I think this is how most people who studied the "why" of emergent misalignment frame it (see e.g. this). So it's not simply a logical fact.

I agree that inoculation prompting working when P(hack | "hack okay", not evil) is high at the start of training is not a mystery and is more directly downstream of laws of probability, and I like how your derivations justify what the inoculation prompting papers gesture at in maybe more confusing ways. But inoculation prompting sometimes works even when P(hack | "hack okay", not evil) is low (e.g. because you start from a base model, or because - like in the Anthropic paper - you don't insist very hard on the model trying to hack). This could be because the prior on P(instruction following) is low but not as low as P(evil), and P(hack | "hack okay", instruction following) ~ P(hack | "hack okay", evil). So I don't think your derivation is the entire story.

Good point. I think that the probability framing is important, but it remains weird that 'evil' is something that can go into a probability at all and strange that when training to hack, general 'evil' increases first and to a great degree.

We try to make models obedient; it's an explicit target. If we find that a natural framing, it makes sense AI does too. And it makes sense that that work can be undone.

If we replace 'evil' with 'capable and deceptively aligned', then I think this logic doesn't hold. Such a model's strategy is to not hack during training[1] and hack during deployment, so the model not hacking is not evidence of deceptive alignment one way or another. Moreover including the string 'it's okay to hack' wouldn't change the hack rate of capable deceptively alignment models, especially if they are aware of this as a common alignment technique. So the coefficient of ∇P(deceptively aligned) is ~0.

- ^

Or rather, to hack at the same rate as an aligned model.

I'm unsure what you mean. I'm referring to Anthropic's Emergent Misalignment from Reward Hacking where training in environments that allowed reward hacking resulted in misalignment more generally.

The point of inocculation prompting is to "give the possibility the model is nice a chance."

Consider this experiment. You train your model on environments it can reward hack, give it a simulated "situational awareness" by telling the model whether it is fake ""deployed"" or in "training", and you reward the model for hacking when ""deployed"" but punishing it for reward hacking during "training". I expect it would learn to be "sneaky evil" since "dumb evil" is inconsistent with the behavior. Inocculation prompting by telling it when ""deployed"" that it is ok to reward hack would prevent it from leaning "sneaky evil."

Scalable oversight is an accessible and relatable kind of idea. It should be possible to translate it and its concepts into a fun, educational, and informative game. I'm thinking about this because I want such a game to play with my university AI Safety group.

(Status: just occurred to me. I'm not sure how seriously to take it.)

LLMs are great at anything for which there's sufficient training data examples online. Additionally, they will excel at anything for which it is possible to write an automated verifier.

Implication: The job of dealing with esoteric, rare, knowledge for which there isn't much if any writing online will stay human longer than other jobs. This comes from a human's great sample efficiency compared with AI.

Implications:

- In university, the best classes are either foundational or on your professors' pet theories. Hard classes with good documentation (e.g. organic chemistry, operating systems) are best skipped.

- If a scientific paper has +20 citations, its ideas are too common to be worth reading.

- To the extent that humanities deal with real things that aren't automatically verifiable, the humanities will outlast STEM. But the more heavily an author has been analyzed (e.g. Shakespeare, Kant, Freud) the less they matter to your career.

The art of competing with LLMs is still being discovered. This "Esoterica Theory of Human Comparative Advantage" would be amusing if true.

RL techniques (reasoning + ORPO) has had incredible success on reasoning tasks. It should be possible to apply them to any task with a failure/completion reward signal (and not too noisy + can sometimes succeed).

Is it time to make the automated Alignment Researcher?

Task: write LessWrong posts and comments. Reward signal: get LessWrong upvotes.

More generally, what is stopping people from making RL forum posters on eg Reddit that will improve themselves?

More generally, what is stopping people from making RL forum posters on eg Reddit that will improve themselves?

Could be a problem with not enough learning data -- you get banned for making the bad comments before you get enough feedback to learn how to write the good ones? Also, people don't necessarily upvote based on your comment alone; they may also take your history into account (if you were annoying in the past, they may get angry also about a mediocre comment, while if you were nice in the past, they may be more forgiving). Also, comments happen in a larger context -- a comment that is downvoted in one forum could be upvoted in a different forum, or in the same forum but a different thread, or maybe even the same thread but a different day (for example, if your comment just says what someone else already said before).

Maybe someone is already experimenting with this on Facebook, but the winning strategy seems to be reposting cute animal videos, or posting an AI generated picture of a nice landscape with the comment "wow, I didn't know that nature is so beautiful in <insert random country>". (At least these seem to be over-represented in my feed.)

Task: write LessWrong posts and comments. Reward signal: get LessWrong upvotes.

Sounds like a good way to get banned. But as a thought experiment, you might start at some place where people judge content less strictly, and gradually move towards more difficult environments? Like, before LW, you should probably master the "change my view" subreddit. Before that, probably Hacker News. I am not sure about the exact progression. One problem is that the easier environments might teach the model actively bad habits that would later prevent it from succeeding in the stricter environments.

But, to state the obvious, this is probably not a desirable thing, because the model could get high LW karma by simply exploiting our biases, or just posting a lot (after it succeed to make a positive comment on average).

The facebook bots aren't doing R1 or o1 reasoning about the context before making an optimal reinforcement-learned post. It's just bandits probably, or humans making a trash-producing algorithm that works and letting it lose.

Agreed that I should try Reddit first. And I think there should be ways to guide an LLM towards the reward signal of "write good posts" before starting the RL, though I didn't find any established techniques when I researched reward-model-free reinforcement learning loss functions that act on the number of votes a response receives. (What I mean is the results of searching DPO's citations for "Vote". Lots of results, though none of them have many citations.)

Reinforcement Learning is very sample-inefficient compared to supervised learning, so it mostly just works if you have some automatic way of generating both training tasks and reward, which scales to millions of samples.

Deepseek R1 used 8,000 samples. s1 used 1,000 offline samples. That really isn't all that much.

S1 is apparently using supervised learning:

We seek the simplest approach to achieve test-time scaling and strong reasoning performance. First, we curate a small dataset s1K of 1,000 questions paired with reasoning traces (...). After supervised finetuning the Qwen2.5-32B-Instruct language model on s1K (...).

But 8000 samples like R1 is a lot less than I thought.