Call on AI Companies: Publish Your Whistleblowing Policies

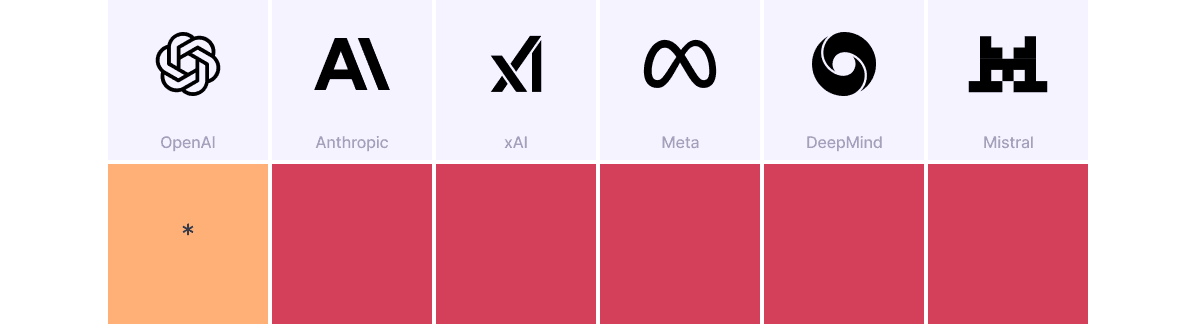

Announcing a coalition of more than 30 whistleblowing and AI organizations calling for greater transparency about company-internal whistleblower protections.

Transparency of major AI companies’ whistleblowing systems, according to our rating system.* Details below.

*Please note that AIWI only evaluates the transparency of the policy and...

I think this is a real shame for multiple reasons: building relationships and reputational capital doesn't come easily. All the best to the team.