Shouldn't we start to see the METR trend bending upwards if this were the case? Let T be the time horizon, A algorithmic efficiency, C training compute, E experimental compute, L labor, and S the speedup.

Suppose,

Then deriving the balanced growth path

So if starting growing rapidly, we should see the time horizon trend increase, potentially by a lot. Maybe Opus 4.5 is evidence of this, but so far I'm skeptical. A better argument is that there is some delay between better research and better models because of training time, so we haven't seen the effect yet. Even so, this rules out a substantial speedup starting earlier than roughly 4 months ago.

Seconding that updating the data on GitHub would be useful.

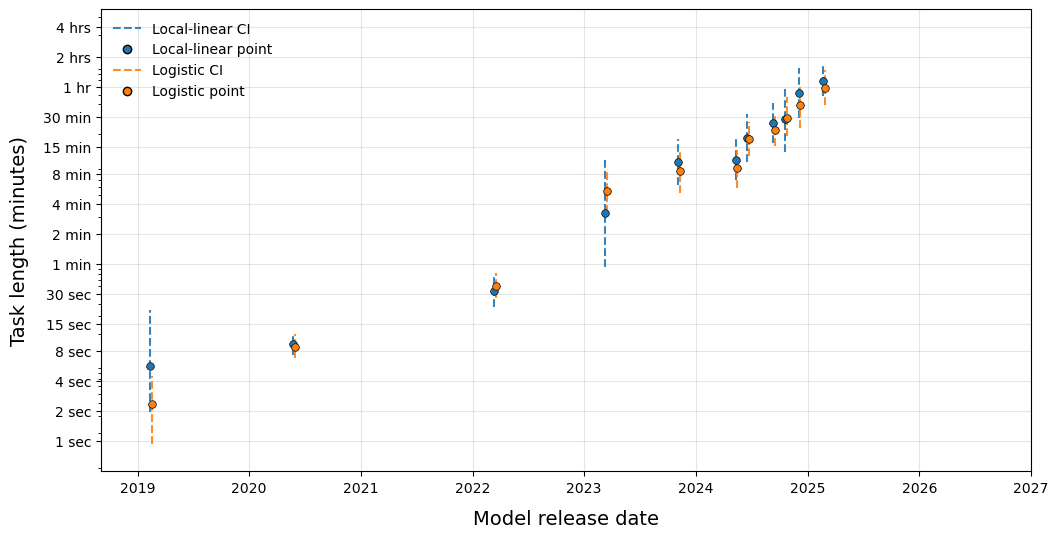

A few months ago I had a similar concern, so I redid the METR plot but estimated the time horizon with the following algorithm:

- Loop through a fine grid of times (1 min, 2 min, 3 min, etc)

- For each time, use a local linear regression to estimate the average probability of success at that time (any differentiable function is locally linear, so local linear regression is a good nonparametric estimator).

- If you cannot reject that this estimated probability is 50%, add that time to the model's confidence set for the time horizon.

For each model, this gives you a 95% confidence set for the 50% time horizon. The approach doesn't naturally come with a point estimate, though, so for the graph I just take the median of the set.
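A minimal sketch of the procedure above, under assumptions the comment does not specify: a Gaussian kernel on log2 task length with a hypothetical bandwidth `h`, a linear probability fit with a heteroskedasticity-robust (sandwich) standard error, and a two-sided z-test at roughly 5%. The function names are illustrative, not from any published code.

```python
import numpy as np


def local_linear_prob(x, y, x0, h):
    """Local linear estimate of P(success) at x0, plus a robust standard error.

    x: log2 task lengths, y: 0/1 outcomes, h: Gaussian kernel bandwidth (assumed).
    """
    w = np.exp(-0.5 * ((x - x0) / h) ** 2)          # Gaussian kernel weights
    X = np.column_stack([np.ones_like(x), x - x0])   # centered, so the intercept is the fit at x0
    XtW = X.T * w                                    # X^T W without building diag(w)
    bread = np.linalg.inv(XtW @ X)
    beta = bread @ (XtW @ y)                         # weighted least squares coefficients
    resid = y - X @ beta
    meat = (X.T * (w * resid) ** 2) @ X              # sum_i w_i^2 e_i^2 x_i x_i^T
    cov = bread @ meat @ bread                       # sandwich covariance of beta
    return beta[0], np.sqrt(max(cov[0, 0], 0.0))     # clamp tiny negative rounding error


def horizon_confidence_set(minutes, success, grid, h=0.5, z=1.96):
    """Grid times at which p = 0.5 is not rejected by a two-sided z-test (~5% level)."""
    x = np.log2(minutes)
    kept = []
    for t in grid:
        p_hat, se = local_linear_prob(x, success, np.log2(t), h)
        if abs(p_hat - 0.5) <= z * se:
            kept.append(t)
    return np.array(kept)
```

Taking `np.median` of the returned array mirrors the median-of-set point estimate described above. A larger `h` borrows more distant data, trading bias for power; the logistic fit makes that trade much more aggressively.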

In general, the confidence intervals are a bit wider, but you get a similar overall trend. They are wider because the original METR graph gets statistical power from its parametric logistic assumption: it lets faraway datapoints help estimate where the 50% time horizon cutoff is, while a nonparametric approach uses only nearby data.
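For comparison, a minimal sketch of the parametric route, assuming success is modeled as logistic in log2 of task length (a reading of the METR-style fit, not their code), fitted here by Newton's method (IRLS) in plain numpy. Every task enters both coefficients, which is where the extra power comes from. Function names are hypothetical.

```python
import numpy as np


def fit_logistic(x, y, iters=25):
    """Logistic regression of y on [1, x] via Newton's method (IRLS)."""
    X = np.column_stack([np.ones_like(x), x])
    beta = np.zeros(2)
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-X @ beta))       # predicted success probabilities
        grad = X.T @ (y - p)                      # score of the log-likelihood
        hess = (X.T * (p * (1 - p))) @ X          # Fisher information
        beta = beta + np.linalg.solve(hess, grad)
    return beta


def logistic_horizon(minutes, success):
    """50% time horizon in minutes: solve b0 + b1 * log2(t) = 0 for t."""
    b0, b1 = fit_logistic(np.log2(minutes), success)
    return 2.0 ** (-b0 / b1)
```

This assumes the data are not perfectly separable; with a clean step in success, the Newton iterations diverge, whereas the local linear approach still works.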

Because the graph is in log space, even changing an estimate by 75-150% does not noticeably affect the overall trend.
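As a worked check, assuming "changing by 75-150%" means multiplying an estimate by 1.75 to 2.5, the vertical shift on a log2 axis is:

$$
\log_2(1.75) \approx 0.81, \qquad \log_2(2.5) \approx 1.32
$$

That is, less than 1.5 doublings. If the trend doubles every $D$ months, moving one point by that much is equivalent to shifting it by roughly $0.8D$ to $1.3D$ months, which is small next to a trend spanning many doublings.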

I don't think this logic is quite right. In particular, the price of compute relative to labor has changed by many orders of magnitude over the same period (compute has become much cheaper, and wages have grown). Unless compute and labor are perfect complements, you should expect the ratio of compute to labor to change as well.

To understand how substitutable compute and labor are, you need to see how the ratio of compute to labor is changing relative to price changes. We try to run through these numbers here.
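One standard way to make this precise is the elasticity of substitution. A minimal sketch, assuming CES technology with a constant elasticity and cost-minimizing labs, under which $\ln(C/L) = \text{const} + \sigma \ln(w/r)$ with $w$ the wage and $r$ the price of compute. The function name and the example numbers are illustrative, not taken from the linked analysis.

```python
import math


def elasticity_of_substitution(ratio_before, ratio_after, rel_price_before, rel_price_after):
    """Estimate sigma = d ln(C/L) / d ln(w/r) from two observations.

    ratio_*: compute-to-labor input ratio C/L.
    rel_price_*: wage divided by the price of compute, w/r.
    Assumes CES technology and cost minimization (see lead-in).
    """
    return math.log(ratio_after / ratio_before) / math.log(rel_price_after / rel_price_before)


# Hypothetical: labor gets 100x dearer relative to compute, and C/L rises 10x.
sigma = elasticity_of_substitution(1.0, 10.0, 1.0, 100.0)  # sigma = 0.5
```

Perfect complements correspond to $\sigma = 0$ (the ratio never moves), Cobb-Douglas to $\sigma = 1$ (the ratio moves one-for-one with relative prices).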

Ben Todd gets into the numbers a bit here. I think the rough argument is that after 2028 the next training run would cost around $100bn, which becomes difficult to afford.

Can you elaborate on what you think Anton Korinek does differently?