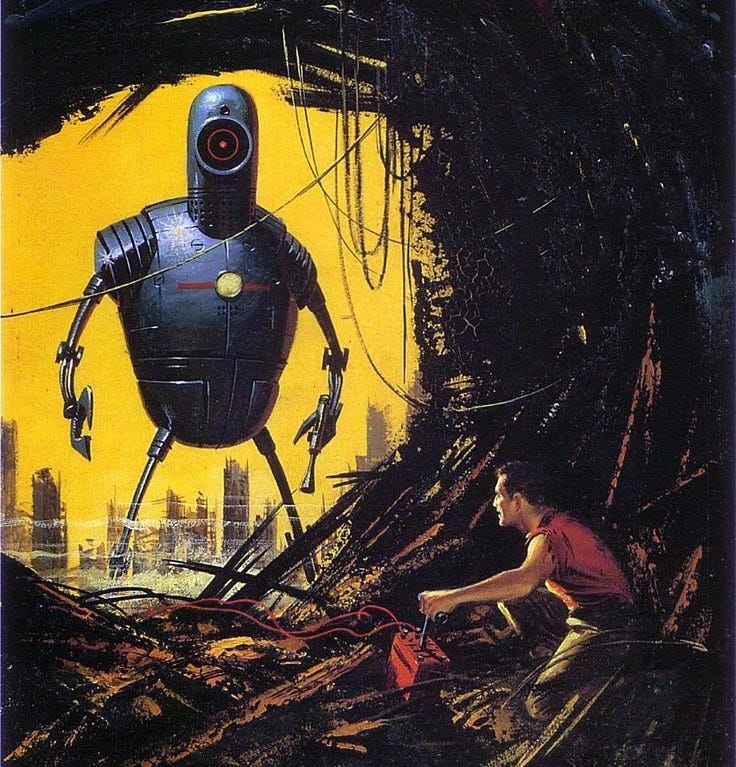

Children of War: Hidden dangers of an AI arms race

This is cross-posted from my Substack. I thought it would interest LessWrong readers and might get a good conversation going.

> How you play is what you win. - Ursula K. Le Guin

Former Google CEO Eric Schmidt recently co-authored the paper and policy guideline ‘Superintelligence...