Lies, Damned Lies, and Proofs: Formal Methods are not Slopless

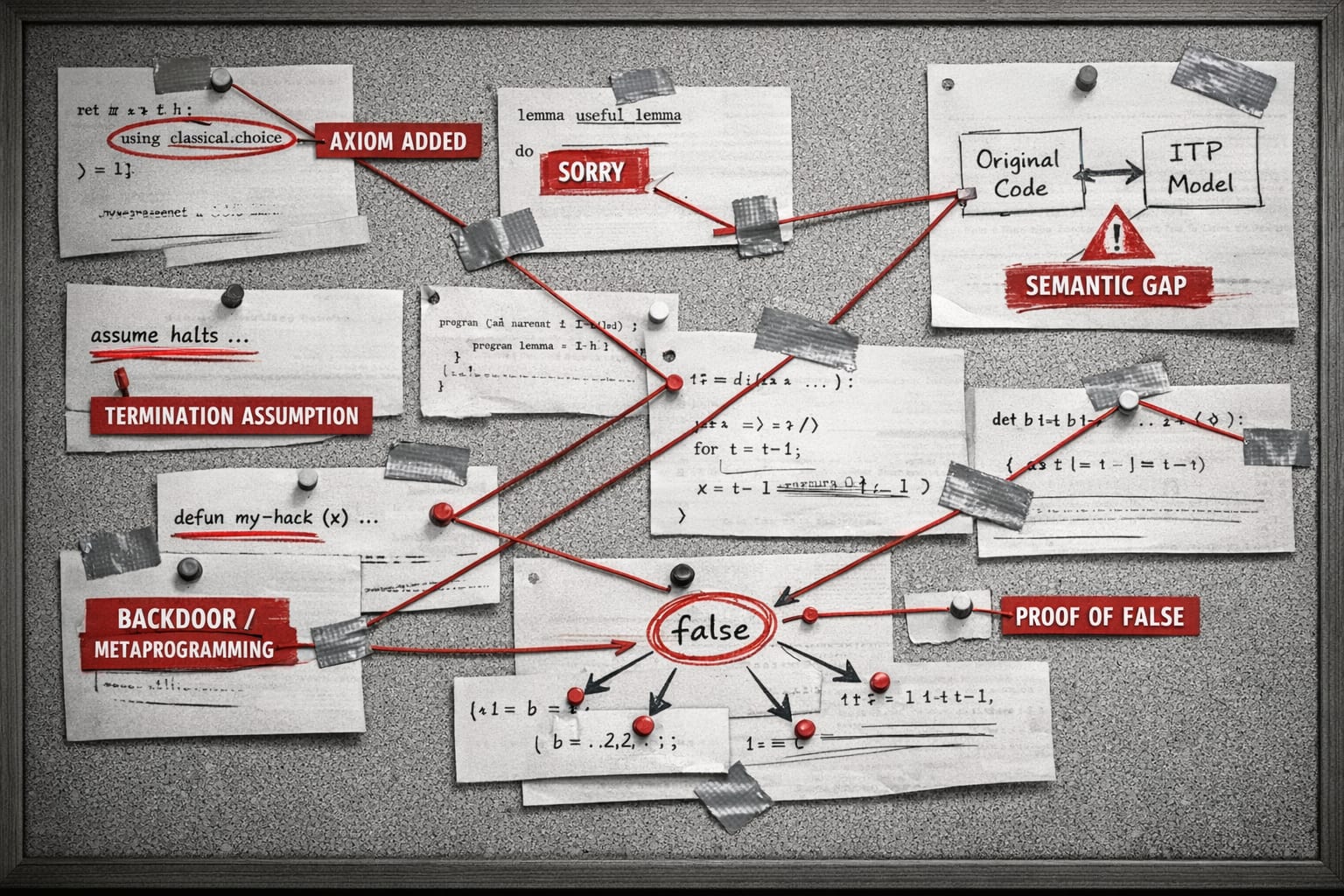

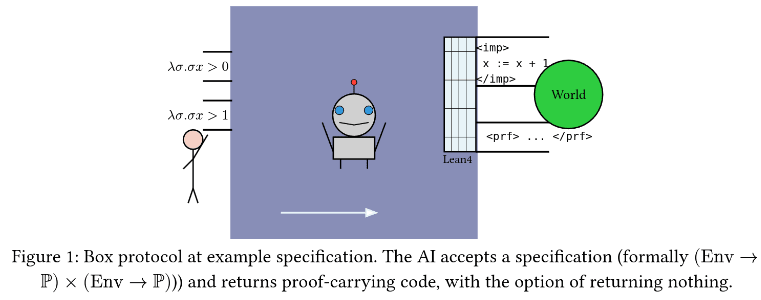

We appreciate comments from Christopher Henson, Zeke Medley, Ankit Kumar, and Pete Manolios. This post was initialized by Max’s Twitter thread.

Introduction

There's been a lot of chatter recently on HN and elsewhere about how formal verification is the obvious use-case for AI. While we broadly agree, we think much of the discourse is kinda wrong because it incorrectly presumes formal = slopless.[1] Over the years, we have written our fair share of good and bad formal code. In this post, we hope to convince you that formal code can be sloppy, and that this has serious implications for anyone who hopes to bootstrap superintelligence by using formality to reinforce “good” reasoning.

A mainstay on the Lean Zulip named Gas Station Manager has written that hallucination-free program synthesis[2] is achievable by vibing software directly in Lean, with the caveat that the agent also needs to prove the software correct. The AI safety case is basically: wouldn’t it be great if a cheap (i.e. O(laptop)) signal could protect you from sycophantic hubris and other classes of mistake, without you having to manually audit all outputs?

A fable right outta Aesop

Recently a computer scientist (who we will spare from naming) was convinced he had solved a major mathematics problem. Lean was happy with it, he reasoned, given that his proof mostly worked, with just a few red squigglies. As seasoned proof engineers, we could have told him that in proof engineering, the growth in further needed edits is superlinear in number-of-red-squigglies (unlike in regular programming).

The difference between mistakes in a proof and mistakes in a program is that you cannot fix a broken proof in a way that changes its formal goal (the theorem statement). In contrast, many, if not most changes to traditional software impact its formal spec, for example by adding a side-effect or changing the shape of an output. Therefore proof bugs are 1) harder to fix, and 2) more likely to imply that your goal is fu

I don't have much to add. Just wanted to say hi and say that my AI security strategy routes mostly through secure program synthesis (which I sometimes abbreviate as SPS), but I'm focused on formal verification. And the fact that 12 CVEs in OpenSSL (i.e., probably the coolest thing going on in SPS right now) appear to have happened without a single formal method [1] feels like it should make me drastically alter my plans.

I don't know for sure that there are zero "formal methods" in the pipeline, but it seems that way from what's been said.