Minor interpretability exploration #1: Grokking of modular addition, subtraction, multiplication, for different activation functions

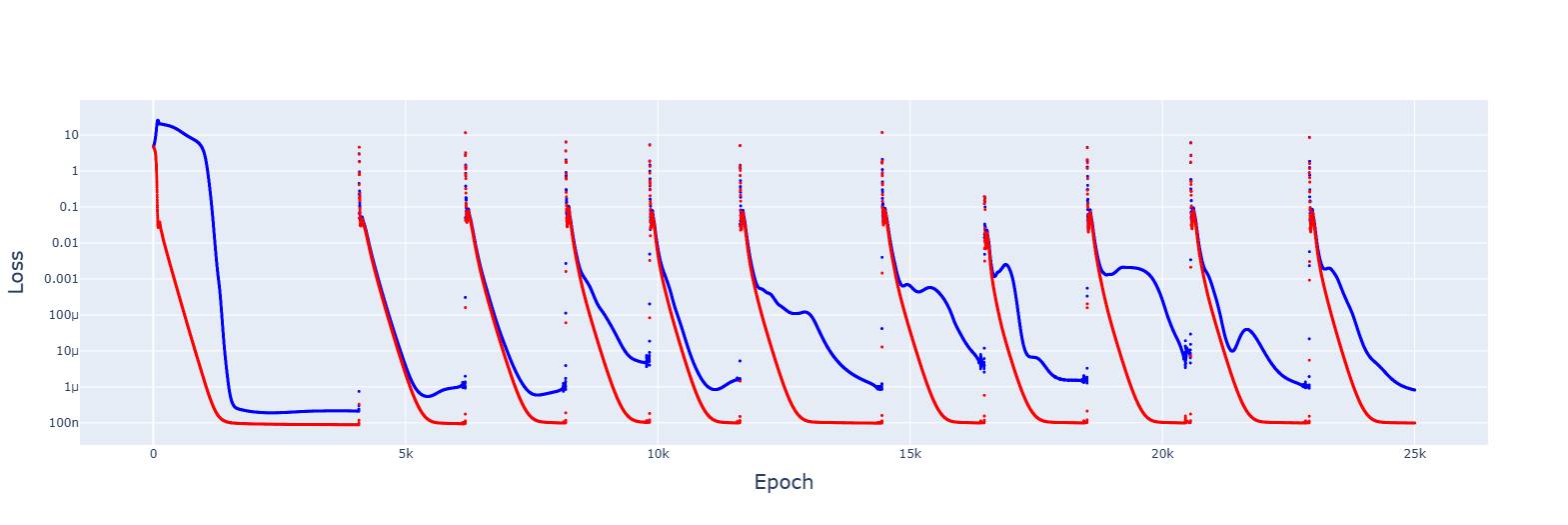

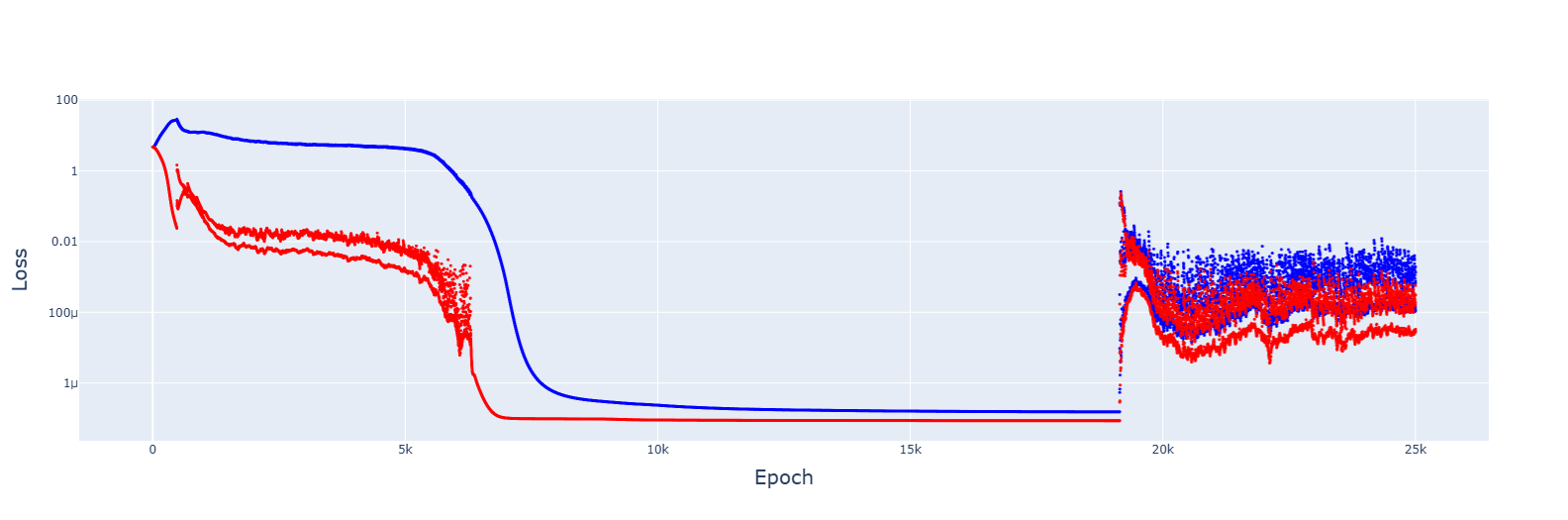

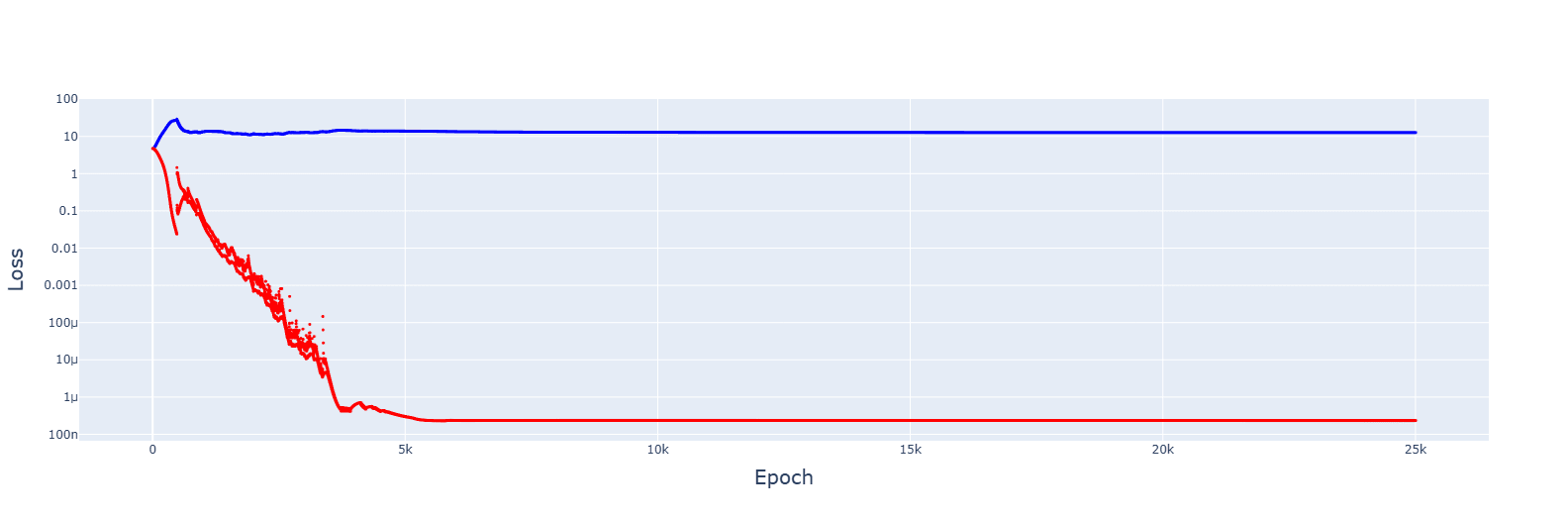

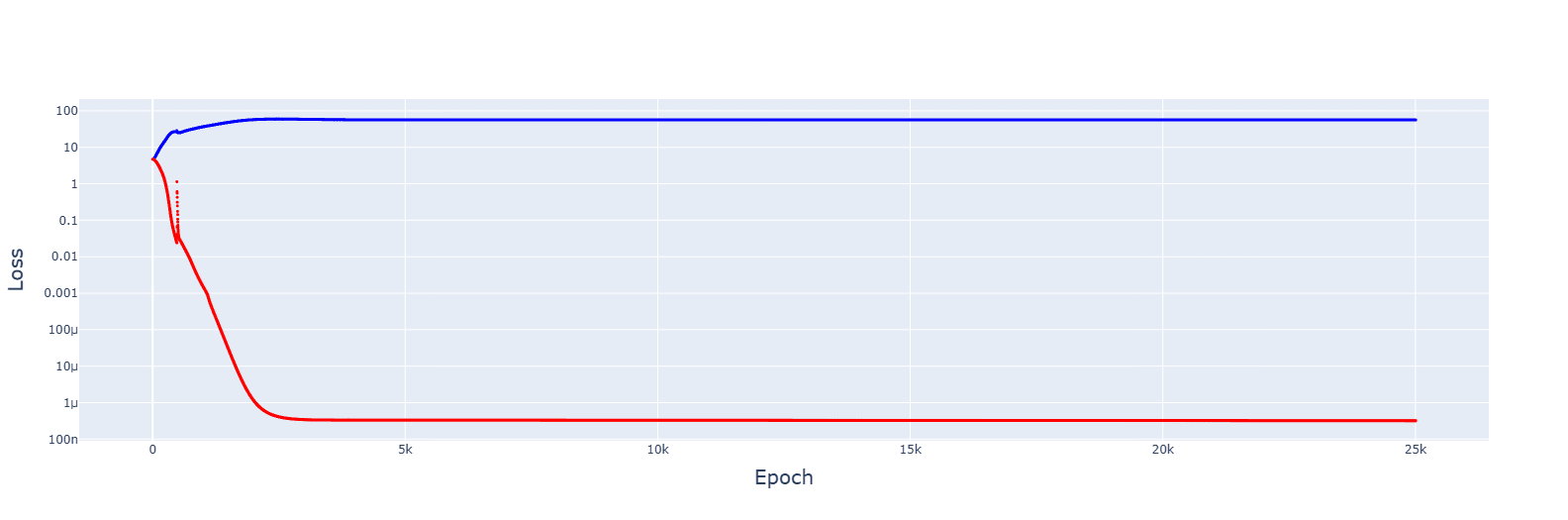

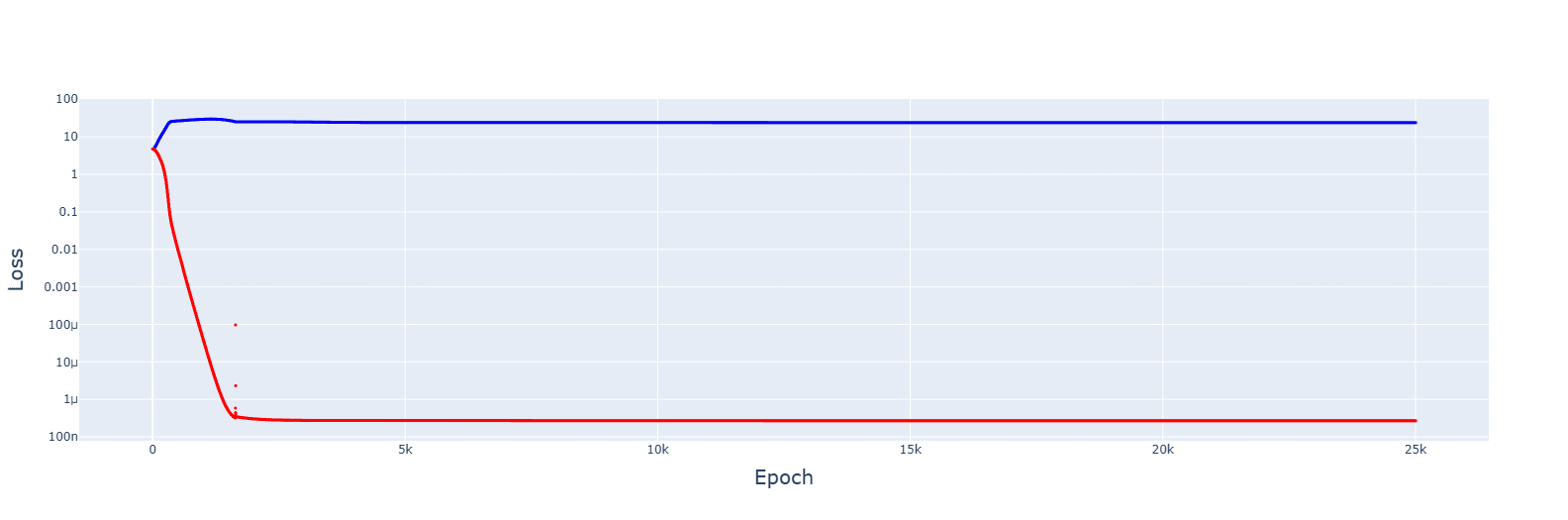

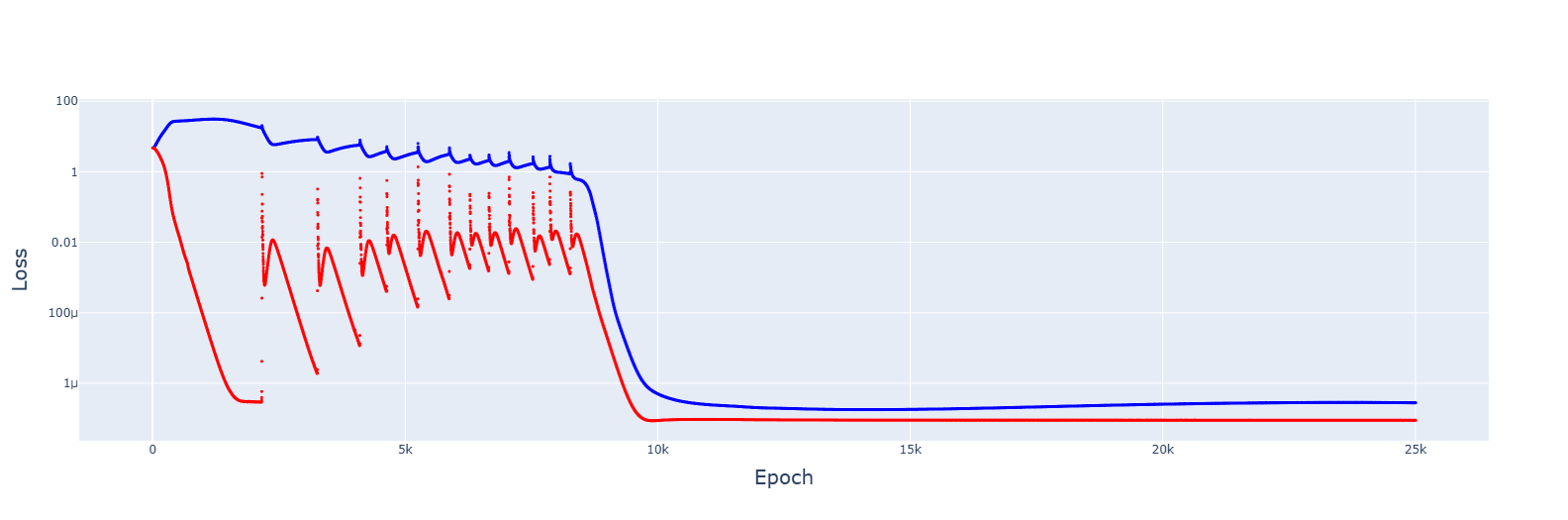

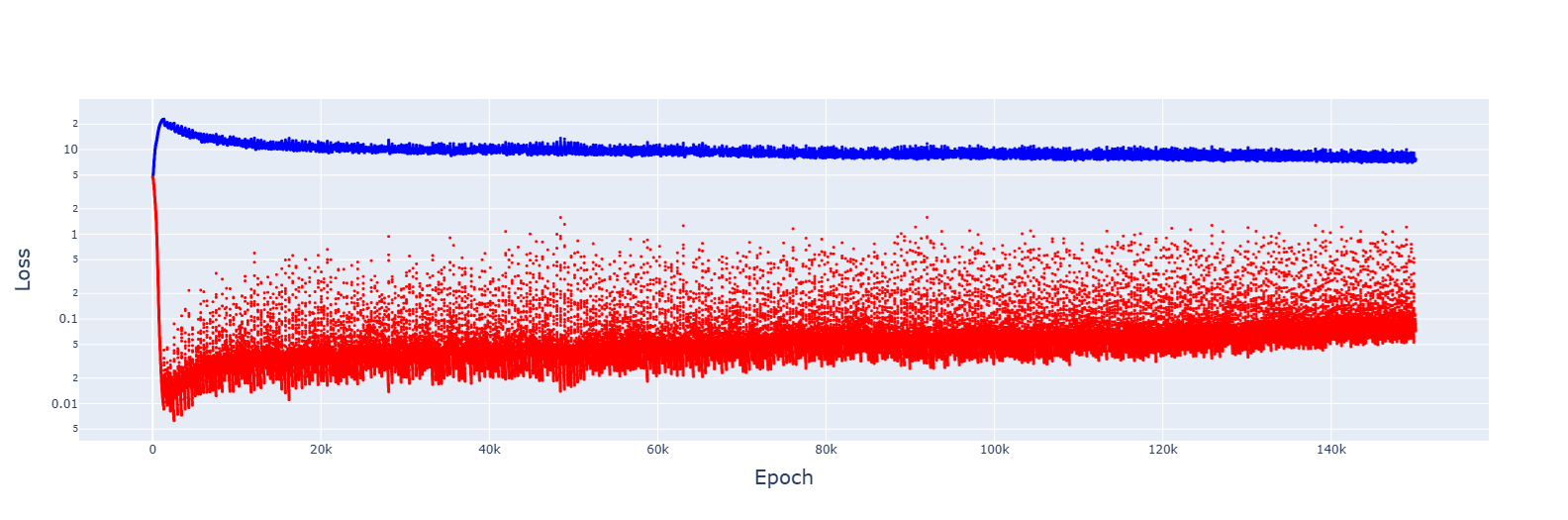

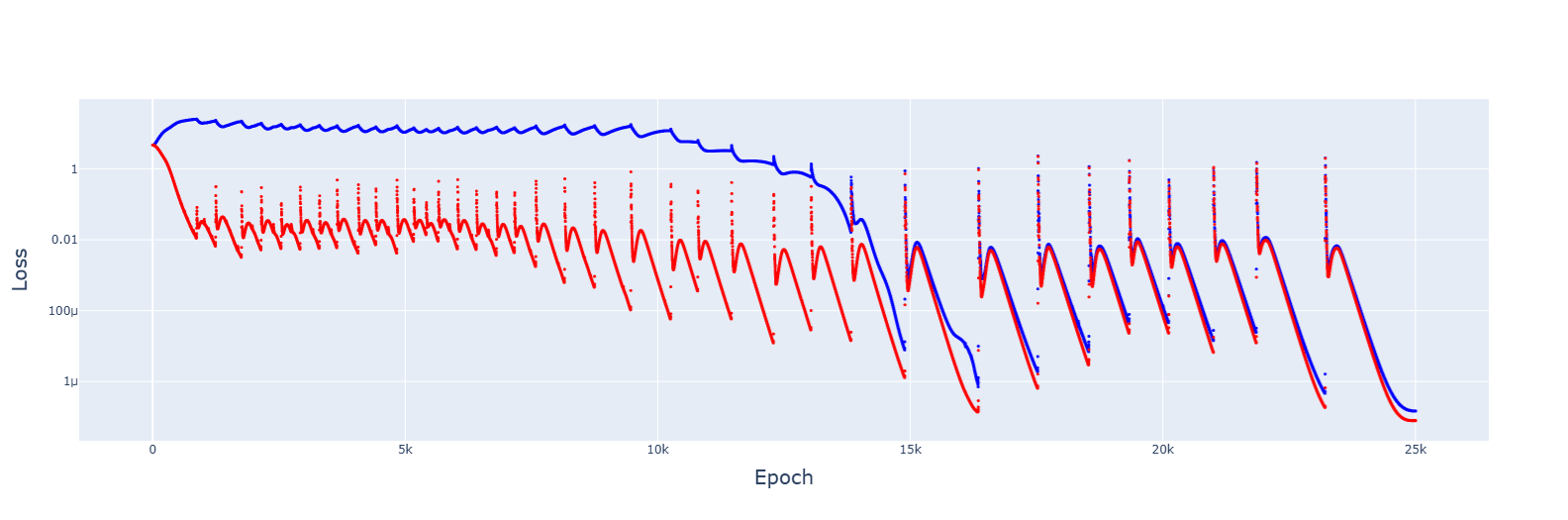

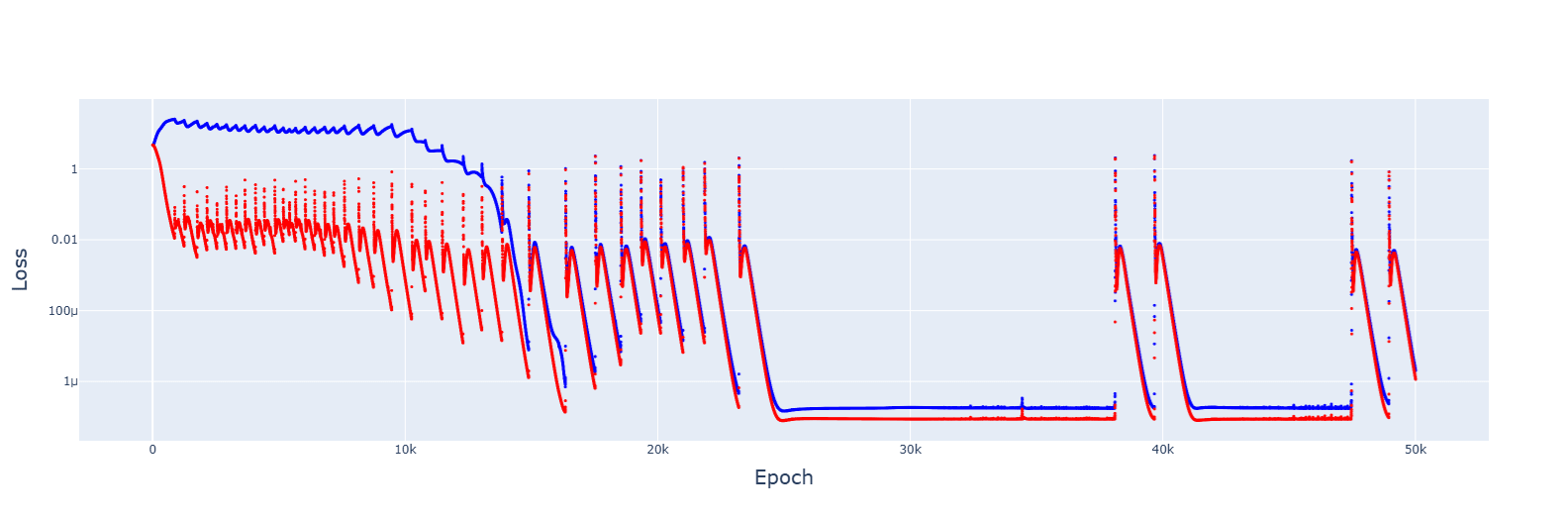

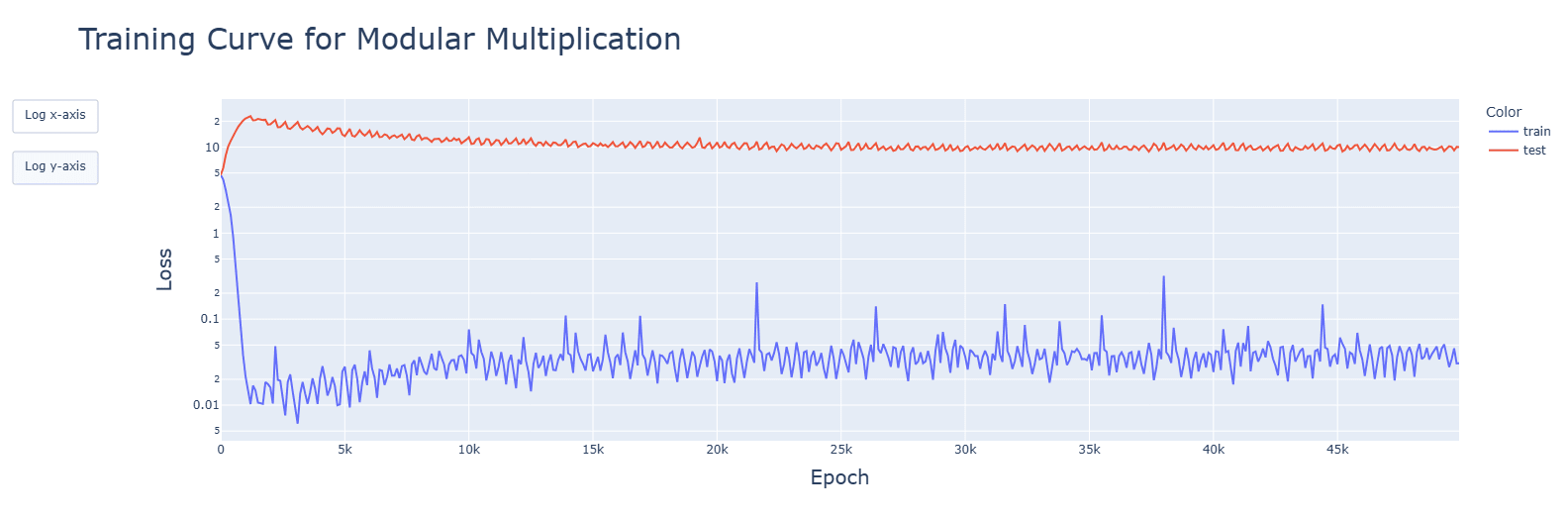

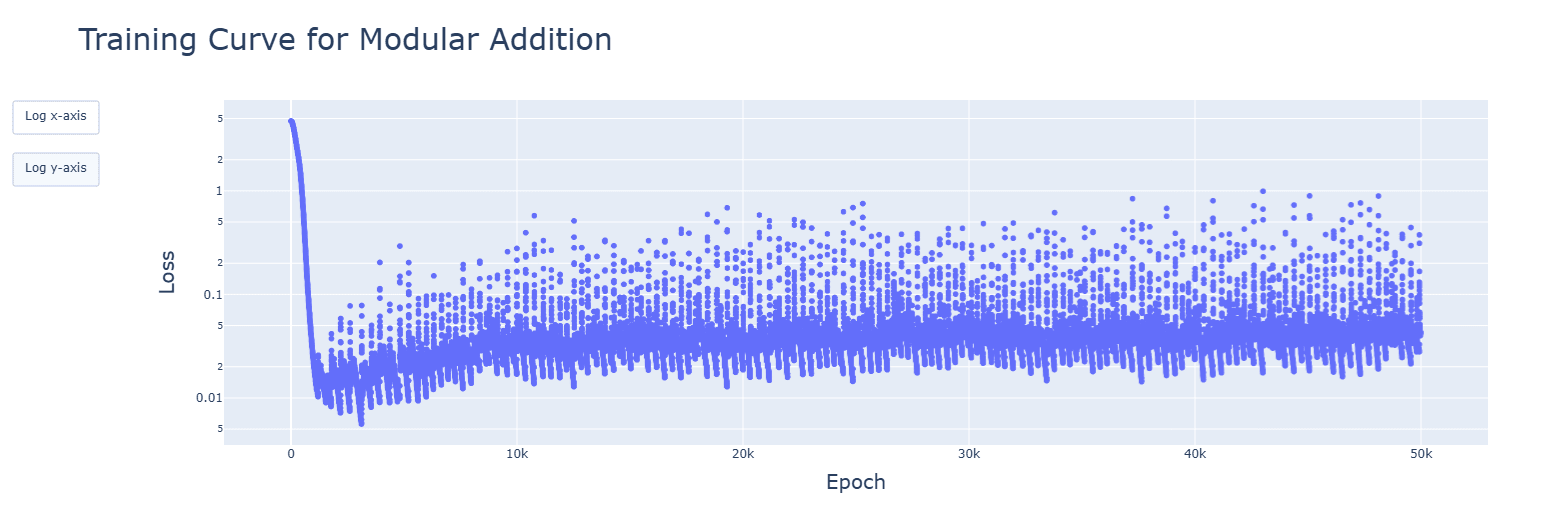

Epistemic status: small exploration without prior predictions; results are low-stakes and likely correct. Edited to implement feedback by Gurkenglas, which unearthed previously unseen data. Thank you!

Introduction

As a personal exercise for building research taste and experience in AI safety, and specifically interpretability, I have done four minor projects, all building upon previously written code. They were done without previously formulated hypotheses or expectations, merely to check for anything interesting among the low-hanging fruit. In the end, they did not yield major insights, but I hope they will be of some use and interest to people working in these domains.

This is the first project: extending Neel Nanda’s modular addition network, made for studying grokking, to subtraction and multiplication, as well as to all 6 activation functions of TransformerLens (ReLU, 3 variants of GELU, SiLU, and SoLU plus LayerNorm). The modular addition grokking results were redone using the original code, while changing the operation (subtraction, multiplication) and the activation function.

TL;DR results

* Subtraction implements the same Fourier transform-based "clock" algorithm as addition. Multiplication, however, does not.
* GELU greatly hastens grokking; SiLU does so only slightly.
* LayerNorm (LN) messes up the activations and delays grokking, but otherwise leaves the algorithm unchanged.

Methods

The basis for these findings is Neel Nanda’s grokking notebook. All modifications are straightforward. All resulting notebooks, extracted graphs, and Word files with clean, tabular comparisons can be found here.

Results

Operations

General observations for the three operations[1]:

* Developments in the relevant aspects of the network (attention heads, periodic neuron activations, concentration of singular values, Fourier frequencies) happened (details below) and were accelerated during the clean-up phase. Spikes seen in the training graphs were reflected, but did not have long-last