Detecting out-of-distribution text with surprisal and entropy

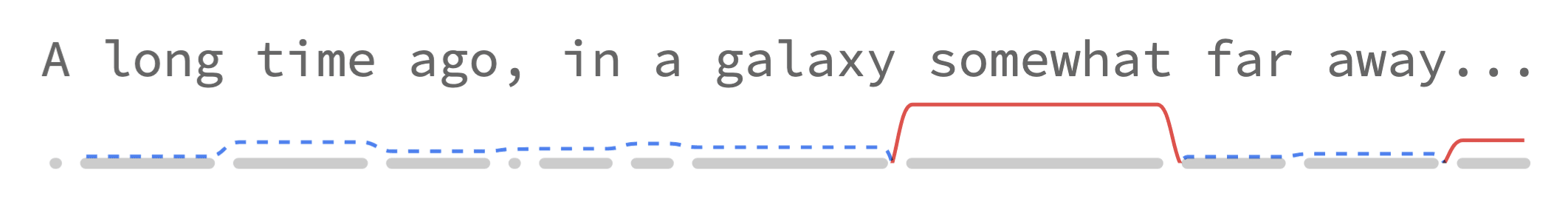

When large language models (LLMs) refuse to help with harmful tasks, attackers sometimes try to confuse them by appending bizarre strings of text, called "adversarial suffixes", to their prompts. These suffixes look weird to humans, which raises the question: do they also look weird to the model? Alon & Kamfonas (2023), hereafter A&K, explored this by measuring perplexity (PPL), which quantifies how "surprised" a language model is by a piece of text (formally, the exponential of the average per-token negative log-likelihood). They found that adversarial suffixes have extremely high perplexity compared to normal text. However, the relationship between perplexity and sequence length made the pattern tricky to detect in longer prompts.

We set out to reproduce their results, and found that the relationship they observed emerges naturally from how perplexity is calculated. This led us to look at token-level metrics instead of whole-sequence ones. Using a novel visualization, we discovered an interesting pattern: adversarial tokens aren't just surprising; they're surprisingly surprising given the context.

This post has five parts:

1. First, we reproduce A&K's perplexity analysis using GPT-2.
2. We argue that the observed relationship emerges naturally from how perplexity is calculated when you combine normal text with adversarial suffixes.
3. We explore how others visualize token-level metrics, e.g. with color.
4. We propose a novel sparkline visualization that reveals temporal patterns.
5. Finally, we introduce S2, an interpretable metric that captures how surprisingly surprising tokens are.

A Hugging Face Space is available to test S2 and the visualization.

This work is the deliverable of my project for the BlueDot AI Safety Fundamentals course[1]. In this post, "we" means "me and Claude[2]", who I collaborated with.

1. Reproducing PPL results with GPT-2

Alon & Kamfonas noticed that when they plotted perplexity against sequence length in log-log space, the points had a striking pattern: a straight line with negative slope, meaning that shorter sequences had higher perplexity score