Conversationism

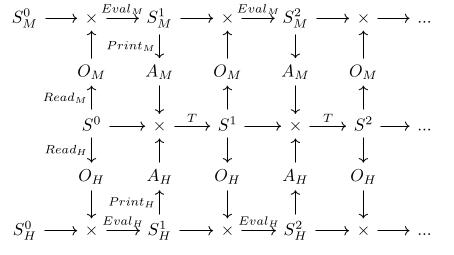

conversationism, the mathematics behind communication and understanding, used to raise the artificial intelligence as a child with love, as a painting, by midjourney

Intro

There seems to be a stark contrast between my alignment research on ontology maps and my work on cyborgism via Neuralink. I claim that the path between them has consisted of a series of forced moves, and that my approach to the second follows from my conclusions about the first. This post is an attempt to document those forced moves, in the hope that others do not have to duplicate my work.

Ontology maps are about finding shape- and semantics-preserving functions between the internal states of agents. Each commutative diagram denotes a particular way of training neural networks to act as conceptual bridges between a human and an AI. I originally started playing with these diagrams as a way of addressing the Eliciting Latent Knowledge problem. Erik Jenner has been thinking about similar objects here and here.

Cyborgism via Neuralink is about using a brain-computer interface to read neural activations, predict them with an artificial network, and write those predictions back to the brain, in the hope that neuroplasticity will allow the brain to harness the extra compute. The goal is to train an artificial neural network with a particularly human inductive bias. Given severe bandwidth constraints (currently three thousand read/write electrodes), the main reason to think this might work is that the brain and the transistor-based brain predictor are trained jointly: the brain can learn to send inputs to the predictor such that the predictions are useful for some downstream task. In other words, both the brain and the neural prosthesis are trained online. Compare predicting the activations of a fixed neural network from time-series data (hard) with the same task where you also pass gradients of the base network's final loss through the bandwidth bottleneck into the predictor (less hard).

In the section "An Alternative Form of Feedback", I introduce

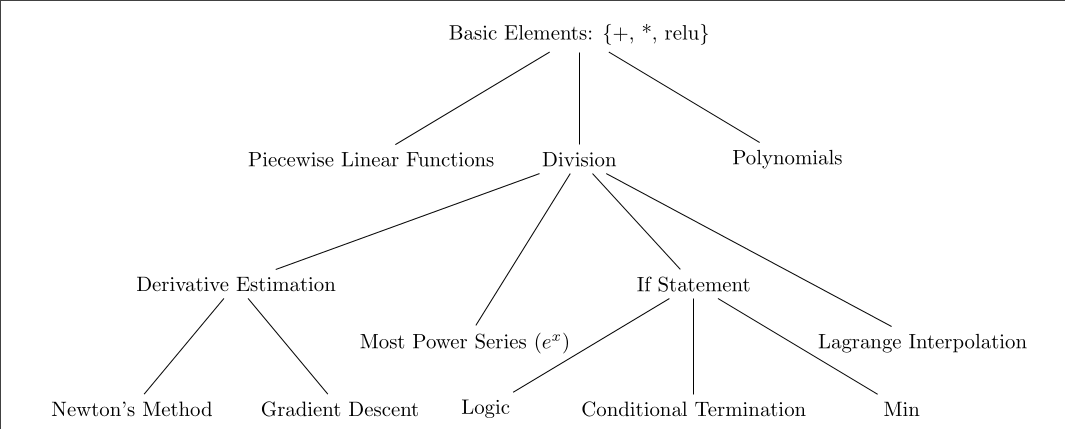
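The joint-training argument from the Intro can be illustrated with a deliberately tiny linear toy. Everything in it is a hypothetical stand-in, not a model of Neuralink hardware or neuroplasticity: a four-component state x plays the brain, a single scalar channel plays the electrode bottleneck, a scalar gain a plays the predictor, and the weights, learning rate, step count, and function names are illustrative assumptions. In the fixed case the channel always carries one component of x and only the predictor is fit; in the joint case the gradient of the downstream loss flows back through the bottleneck into the encoder u, so the upstream side learns what to send.

```python
import random

def make_data(n, weights, seed=0):
    """Hypothetical 'brain states' x (independent standard Gaussians) and a
    downstream target y = weights . x that depends on every component."""
    rng = random.Random(seed)
    xs = [[rng.gauss(0.0, 1.0) for _ in weights] for _ in range(n)]
    ys = [sum(w * xi for w, xi in zip(weights, x)) for x in xs]
    return xs, ys

def mse(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)

def fixed_readout_loss(xs, ys):
    """The channel always carries component 0; only the predictor gain a is fit
    (closed-form least squares), so nothing upstream can adapt."""
    s = [x[0] for x in xs]
    a = sum(si * yi for si, yi in zip(s, ys)) / sum(si * si for si in s)
    return mse([a * si for si in s], ys)

def joint_training_loss(xs, ys, lr=0.02, steps=1000):
    """Encoder u (what gets sent) and predictor gain a are trained together by
    full-batch gradient descent; the downstream loss gradient flows back
    through the one-channel bottleneck into u."""
    d, n = len(xs[0]), len(xs)
    u, a = [0.1] * d, 1.0
    for _ in range(steps):
        grad_u, grad_a = [0.0] * d, 0.0
        for x, y in zip(xs, ys):
            s = sum(uj * xj for uj, xj in zip(u, x))  # the one scalar crossing the bottleneck
            r = a * s - y                              # downstream prediction error
            grad_a += 2 * r * s / n
            for j in range(d):
                grad_u[j] += 2 * r * a * x[j] / n
        # Simultaneous update: both gradients were computed with the old a and u.
        a -= lr * grad_a
        u = [uj - lr * gj for uj, gj in zip(u, grad_u)]
    preds = [a * sum(uj * xj for uj, xj in zip(u, x)) for x in xs]
    return mse(preds, ys)

xs, ys = make_data(n=100, weights=[1.0, -2.0, 0.5, 3.0])
print(f"fixed readout:  {fixed_readout_loss(xs, ys):.3f}")
print(f"joint training: {joint_training_loss(xs, ys):.6f}")
```

Because y depends on only one linear projection of x, a single learned channel can carry everything the task needs, so the joint loss is driven close to zero, while the fixed channel leaves the variance contributed by the other three components unexplained. The toy only shows why letting the upstream side adapt helps under a bandwidth limit; it says nothing about how well this transfers to real neural tissue.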

I feel like the missing probability should come from indistinguishability, but not because of whether programs halt. Consider a countable family of possible discrete transitions on a potentially infinite state space. Maybe a programming language is a way to enumerate that space of transitions, but the language itself enumerates it in a partially redundant manner. For instance, f(x)=x+x and g(x)=2*x are different programs that denote the same transition, so you will never be able to distinguish between them by watching the transition sequence [1,2,4,8,...]. In some sense the PL story of alpha, beta, eta, ... equivalences is a way to describe equivalence classes of extensionally equal terms, but for a Turing-complete language no decision procedure can exist that equates two terms p and q if and only if, for all x, p(x)=q(x). So in some sense this countable family of state-space transitions, if enumerated by a programming language, must remain partly indistinguishable.
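To make the f/g example concrete, here is a small Python sketch. f and g are the two programs from the text; the "impostor" h and the helpers trajectory, prefixes_agree, and find_difference are hypothetical names introduced only for illustration. It shows the asymmetry behind the decidability point: a disagreement is witnessed by a single input, but agreement on any finite set of inputs, or on any finite prefix of the transition sequence, never certifies extensional equality. It illustrates the idea rather than proving undecidability, which follows from Rice's theorem.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, Optional

Step = Callable[[int], int]

def f(x: int) -> int:
    return x + x  # doubling written with addition

def g(x: int) -> int:
    return 2 * x  # doubling written with multiplication

def h(x: int) -> int:
    # Hypothetical impostor: doubles like f and g, but only below 1000.
    return 2 * x if x < 1000 else 2 * x + 1

def trajectory(step: Step, start: int) -> Iterator[int]:
    """Yield start, step(start), step(step(start)), ... forever."""
    x = start
    while True:
        yield x
        x = step(x)

def prefixes_agree(p: Step, q: Step, start: int, n: int) -> bool:
    """Do the first n states of the two transition sequences coincide?"""
    return list(islice(trajectory(p, start), n)) == list(islice(trajectory(q, start), n))

def find_difference(p: Step, q: Step, inputs: Iterable[int]) -> Optional[int]:
    """Return the first input where p and q disagree, or None.

    None only means "not distinguished on these inputs". A disagreement is a
    finite witness of inequality, but no finite set of tests certifies
    extensional equality.
    """
    for x in inputs:
        if p(x) != q(x):
            return x
    return None

print(list(islice(trajectory(f, 1), 6)))   # [1, 2, 4, 8, 16, 32]
print(prefixes_agree(f, g, 1, 50))         # True
print(prefixes_agree(f, h, 1, 11))         # True: 1, 2, ..., 1024 coincide
print(prefixes_agree(f, h, 1, 12))         # False: 2048 vs 2049
print(find_difference(f, h, range(2000)))  # 1000
```

An observer who has watched the first eleven states from 1 cannot yet tell h apart from f, and an observer watching f and g can never tell them apart at all; from a finite run alone, the observer cannot know which of these two situations it is in.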