Laying the Foundations for Vision and Multimodal Mechanistic Interpretability & Open Problems

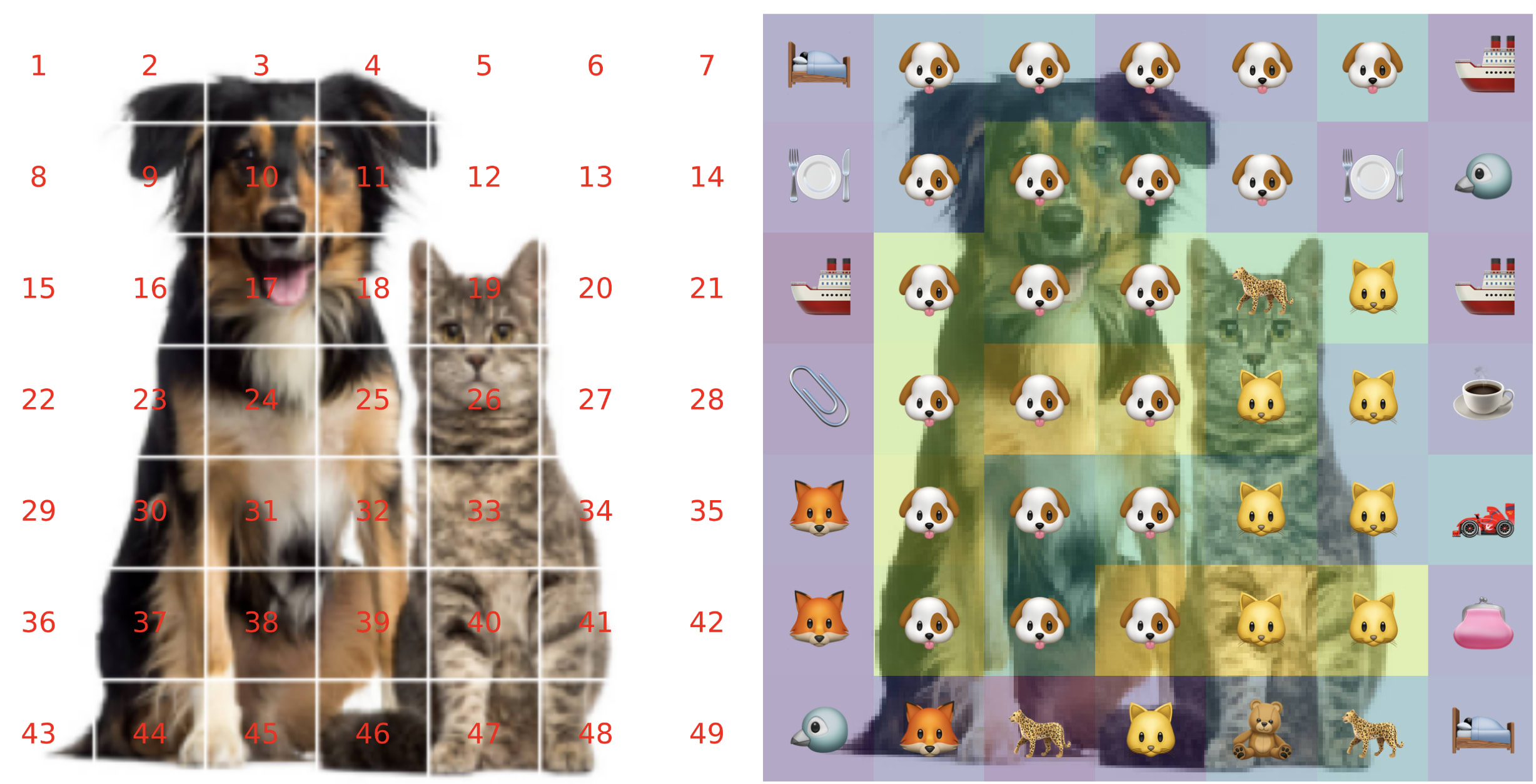

Behold the dogit lens. Patch-level logit attribution is an emergent segmentation map. Join our Discord here. This article was written by Sonia Joseph, in collaboration with Neel Nanda, and incubated in Blake Richards’s lab at Mila and in the MATS community. Thank you to the Prisma core contributors, including Praneet Suresh, Rob Graham, and Yash Vadi. Full acknowledgements of contributors are at the end. I am grateful to my collaborators for their guidance and feedback. Outline * Part One: Introduction and Motivation * Part Two: Tutorial Notebooks * Part Three: Brief ViT Overview * Part Four: Demo of Prisma’s Functionality * Key features, including logit attribution, attention head visualization, and activation patching. * Preliminary research results obtained using Prisma, including emergent segmentation maps and canonical attention heads. * Part Five: FAQ, including Key Differences between Vision and Language Mechanistic Interpretability * Part Six: Getting Started with Vision Mechanistic Interpretability * Part Seven: How to Get Involved * Part Eight: Open Problems in Vision Mechanistic Interpretability Introducing the Prisma Library for Multimodal Mechanistic Interpretability I am excited to share with the mechanistic interpretability and alignment communities a project I’ve been working on for the last few months. Prisma is a multimodal mechanistic interpretability library based on TransformerLens, currently supporting vanilla vision transformers (ViTs) and their vision-text counterparts CLIP. With recent rapid releases of multimodal models, including Sora, Gemini, and Claude 3, it is crucial that interpretability and safety efforts remain in tandem. While language mechanistic interpretability already has strong conceptual foundations, many research papers, and a thriving community, research in non-language modalities lags behind. Given that multimodal capabilities will be part of AGI, field-building in mechanistic interpretability for non

Ok, thank you for your openness. I find that in-person conversations about sensitive matters like these are easier as tone, facial expression, body language are very important here. It is possible that my past comments on EA that you refer to came off as more hostile than intended due to the text-based medium.

Fwiw, the contents of this original post actually have nothing to do with EA itself, or the past articles that mentioned me.