Thanks for the post, and especially the causal graph framework you use to describe and analyze the categories of motivations. It feels similar to Richard Dawkins's 'The Selfish Gene' insofar as it studies the fitness of motivations in their selection environment.

One area I think it could extend to is around the concepts of curiosity, exploration, and even corrigibility. This relates to 'reflection' as mentioned by habryka. I expect that as AI moves towards AGI it will improve its ability to recognize holes in its knowledge and take actions to close them (aka an AI scientist). Forming a world model that can be used for 'reflecting' on current motivations and actions is important here. To an extent, longer training runs can instill a type of curiosity. For example, a training run that includes formulating a web search query, analyzing results, updating the search query and doing a new web search, and then producing the correct answer, will help train for 'curiosity' but only as it becomes a 'schemer' I presume. In other words, a general schemer motivation of 'curiosity/exploration' could be included in your model if it reliably has a consequence of producing results that have a higher reward. Similarly, training examples where the AI asks a user for clarification on a goal could help create a 'corrigibility' schemer. But I think the general concept is crucial enough to warrant explicit discussion. For example, in the diagram that includes 'saint' and 'alien' you might add a 5th category for 'explorer' or 'scientist'.

I think that as AI models get better at recognizing holes in their knowledge and seeking to fill them, the models will find benefit from taking actions which tend to minimize side effects on the world and leave open many possible future options (low impact). Again, long training examples might suffice to train this, but new training systems might be needed to create this behavior. This relates to corrigibility in that goals themselves might be adjusted or questioned during action sequences (if the steps required violate certain trained motivations or system prompt rules).

Thanks for the interesting peek into your brain. I have a couple of thoughts to share on how my own approaches relate.

The first is related to watching plenty of sci-fi apocalyptic future movies. While it's exciting to see the hero's adventures, I'd like to think that I'd be one of the scrappy people trying to hold some semblance of civilization together. Or the survivor trying to barter and trade with folks instead of fighting over stuff. In general, even in the face of doom, just trying to help minimize suffering unto the end. So the 'death with dignity' ethos fits in with this view.

A second relates to the idea of seeing yourself getting out of bed in the morning. When I've had a lot on my plate to the point of seeming stressful, it helps to visualize the future state where I've gotten the work done and am looking back. Then I imagine what is happening inside my brain as the problem gets resolved: sodium ions moving around, electrons dropping energy states, proteins changing shapes, etc. Visualizing the low-level activity in my brain helps me shift focus from the stress and actually move ahead solving the problem.

That idea of catching bad mesa-objectives during training sounds key and I presume fits under the 'generalization science' and 'robust character training' from Evan's original post. In the US, NIST is working to develop test, evaluation, verification and validation standards for AI and it would be good to include this concept into that effort.

The data relating exponential capabilities to Elo is seen over decades in computer chess history too. From the 1960s into the 2020s, while computer hardware advanced exponentially at 100-1000x per decade in performance (and SW for computer chess advanced too), Elo scores grew linearly at about 400 points per decade, taking multiple decades to go from 'novice' to 'superhuman'. Elo scores have a tinge of exponential to them - a 400 point Elo advantage is about 10:1 odds in favor of the higher-rated competitor, and an 800 point advantage is about 100:1 under the standard logistic Elo formula, etc. It appears that the current HW/SW/dollar rate of growth towards AGI means the Elo relative to humans is increasing faster than 400 Elo/decade. And, of course, unlike computer chess, as AI Elo at 'AI development' approaches the level of a skilled human, we'll likely get a noticeable increase in the rate of capability increase.
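To make the "tinge of exponential" concrete, here is a minimal sketch of the rating arithmetic. It assumes the standard logistic Elo formula (the comment does not say which rating model it has in mind), under which the odds implied by a rating gap are 10^(gap/400). The function names and the per-doubling helper are illustrative, not taken from any chess library.

```python
import math


def elo_expected_score(rating_gap: float) -> float:
    """Expected score of the higher-rated side (draws count as half a point),
    assuming the logistic Elo model."""
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


def elo_odds(rating_gap: float) -> float:
    """Ratio of expected scores implied by a rating gap: 10^(gap/400)."""
    return 10.0 ** (rating_gap / 400.0)


def elo_per_compute_doubling(elo_per_decade: float, compute_factor_per_decade: float) -> float:
    """Elo gained per doubling of compute, if Elo grows linearly while compute
    grows exponentially (the rough picture described in the comment above)."""
    doublings_per_decade = math.log2(compute_factor_per_decade)
    return elo_per_decade / doublings_per_decade


if __name__ == "__main__":
    for gap in (0, 400, 800, 1200):
        print(f"gap={gap:4d}  expected score={elo_expected_score(gap):.4f}  odds={elo_odds(gap):.0f}:1")
    # Hypothetical range taken from the 100-1000x per decade figure in the text.
    for factor in (100, 1000):
        print(f"{factor}x/decade -> {elo_per_compute_doubling(400, factor):.1f} Elo per doubling")
```

Each additional 400 points multiplies the odds by 10, so linear Elo growth corresponds to exponential growth in the odds of beating a fixed opponent.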

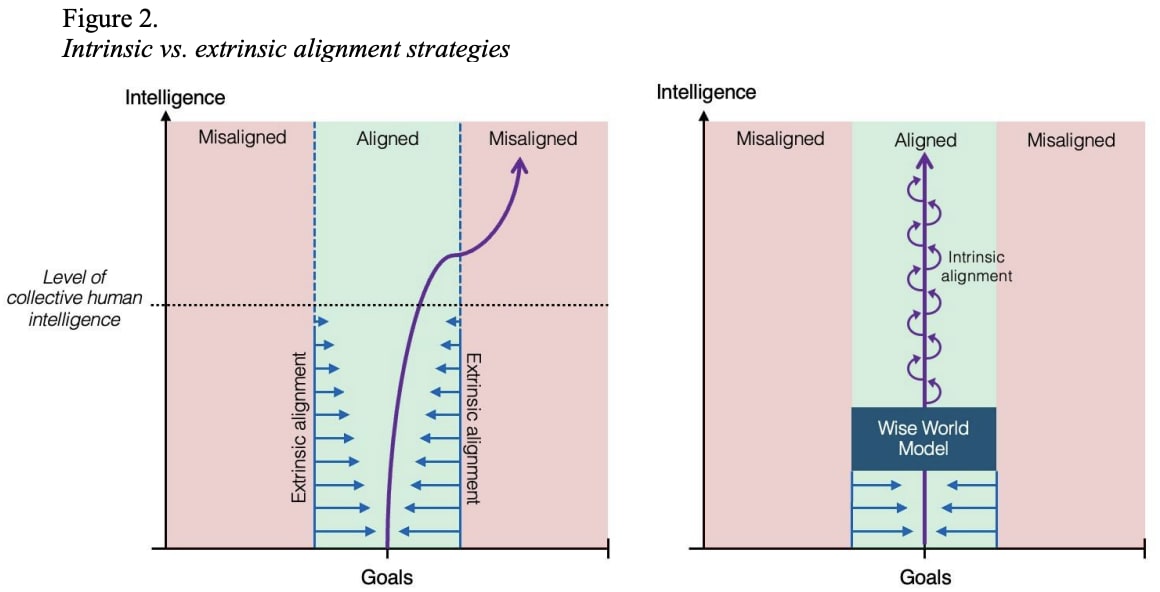

Thanks Evan for an excellent overview of the alignment problem as seen from within Anthropic. Chris Olah's graph showing perspectives on alignment difficulty is indeed a useful visual for this discussion. Another image I've shared lately relates to the challenge of building inner alignment illustrated by figure 2 from Contemplative Artificial Intelligence:

In the images, the blue arrows indicate our efforts to maintain alignment on AI as capabilities advance through AGI to ASI. In the left image, we see the case of models that generalize in misaligned ways - the blue constraints (guardrails, system prompts, thin efforts at RLHF, etc) fail to constrain the advancing AI to remain aligned. The right image shows the happier result where training, architecture, interpretability, scalable oversight, etc contribute to a 'wise world model' that maintains alignment even as capabilities advance.

I think the Anthropic probability distribution of alignment difficulty seems correct - we probably won't get alignment by default from advancing AI, but, as you suggest, by serious concerted effort we can maintain alignment through AGI. What's critical is to use techniques like generalization science, interpretability, and introspective honesty to gauge whether we are building towards AGI capable of safely automating alignment research towards ASI. To that end, metrics that allow us to determine whether alignment is actually closer to P-vs-NP in difficulty are crucial, and efforts from METR, UK AISI, NIST, and others can help here. I'd like to see more 'positive alignment' papers such as Cooperative Inverse Reinforcement Learning, Corrigibility, and AssistanceZero: Scalably Solving Assistance Games, as detecting when an AI is positively aligned internally is critical to getting to the 'wise world model' outcome.

I concur with that sentiment. GPUs hit a sweet spot between compute efficiency and algorithmic flexibility. CPUs are more flexible for arbitrary control logic, and custom ASICs can improve compute efficiency for a stable algorithm, but GPUs are great for exploring new algorithms where SIMD-style control flows exist (SIMD=single instruction, multiple data).

I would include "constructivist learning" in your list, but I agree that LLMs seem capable of this. By "constructivist learning" I mean a scientific process where the learner conceives of an experiment on the world, tests the idea by acting on the world, and then learns from the result. A VLA model with incremental learning seems close to this. RL could be used for the model update, but I think for ASI we need learnings from real-world experiments.

This post provides a good overview of some topics I think need attention by the 'AI policy' people at national levels. AI policy (such as the US and UK AISI groups) has been focused on generative AI and recently agentic AI to understand near-term risks. Whether we're talking LLM training and scaffolding advances, or a new AI paradigm, there is new risk when AI begins to learn from experiments in the world or to reason about its own world model. In child development, imitation learning focuses on learning from examples, while constructivist learning focuses on learning by reflecting on interactions with the world. Constructivist learning is, I expect, key to pushing past AGI to ASI and carries obvious risks to alignment beyond imitation learning.

In general, I expect something LLM-like (i.e. transformer models or an improved derivative) to be able to reach ASI with a proper learning-by-doing structure. But I also expect ASI could find and implement a more efficient intelligence algorithm once ASI exists.

1.4.1 Possible counter: “If a different, much more powerful, AI paradigm existed, then someone would have already found it.”

This paragraph tries to provide some data for a probability estimate of this point. AI as a field has been around at least since the Dartmouth conference in 1956. In this time we've had Eliza, Deep Blue, Watson, and now transformer-based models including OpenAI o3-pro. In support of Steven's position, one could note that the number of AI research publications is much higher now than during the previous 70 years, but at the same time many AI ideas have been explored and the current best results are with models based on the 8-year-old "Attention is all you need" paper. To get a sense for the research rate, we can note that the doubling time for AI/ML research papers per month was about 2 years between 1994 and 2023 according to this Nature paper. Hence, every 2 years we get about as many new papers as were published in the previous 70 years. I don't expect this doubling can continue forever, but certainly many new ideas are being explored now. If a 'simple model' for AI exists and its discovery is, say, randomly positioned on a given AI/ML research paper published between 1956 and ASI achievement, then one could estimate the probability of the paper's position using this simplistic research model. If ASI is only 6 years out and the doubling every 2 years continues, then almost 90% of the AI/ML research papers before ASI are still in the future. Even though many of these papers are LLM focused, there is still active work in alternative areas. But even though the foundational paper for ASI may yet be in our future, I would expect something like a 'complex' ML model will win out (for example, Yann LeCun's ideas involve differentiable brain modules). And the solution may or may not be more compute-intensive than current models. The brain compute estimates vary widely and the human brain has been optimized by evolution for many generations. In short, it seems reasonable to expect another key idea before ASI, but I would not expect it to be a simple model.
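The "almost 90%" figure can be checked with a small sketch of the simplistic research model above. It assumes the publication rate doubles every 2 years continuously, and (as a further assumption) that the same doubling applied all the way back to 1956, which the cited 1994-2023 data does not cover. The function name and parameters are illustrative.

```python
from typing import Optional


def future_paper_fraction(
    years_to_asi: float,
    doubling_years: float = 2.0,
    years_since_start: Optional[float] = None,
) -> float:
    """Fraction of all pre-ASI papers that are still unpublished today.

    Models the publication rate as proportional to 2^(t / doubling_years),
    with t = 0 today. If years_since_start is None, the field is treated as
    having an infinitely long past (negligible difference for long histories).
    """
    growth = 2.0 ** (years_to_asi / doubling_years)  # cumulative total at ASI, relative to today
    if years_since_start is None:
        start_term = 0.0
    else:
        start_term = 2.0 ** (-years_since_start / doubling_years)
    # future papers are proportional to (growth - 1);
    # all papers from the start date to ASI are proportional to (growth - start_term)
    return (growth - 1.0) / (growth - start_term)


if __name__ == "__main__":
    # ASI 6 years out with a 2-year doubling time: 1 - 1/8 of the papers lie ahead.
    print(future_paper_fraction(6))
    # Counting papers only from 1956 (roughly 69 years back) barely changes the answer.
    print(future_paper_fraction(6, years_since_start=69))
```

With three more doublings before ASI, the cumulative count grows eightfold, so 7/8 (87.5%) of the papers would still be in the future, consistent with the estimate in the text.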

From what I gather reading the ACAS X paper, it formally proved a subset of the whole problem, and many issues uncovered by using the formal method were further analyzed using simulations of aircraft behaviors (see the end of section 3.3). One of the assumptions in the model is that the planes react correctly to control decisions and don't have mechanical issues. The problem space and possible actions were well-defined and well-constrained in the realistic but simplified model they analyzed. I can imagine complex systems making use of provably correct components in this way, but the whole system may not be provably correct. When an AI develops a plan, it could prefer to follow a provably safe path when reality can be mapped to a usable model reliably, and then behave cautiously when moving from one provably safe path to another. But the metrics for 'reliable model' and 'behave cautiously' still require non-provable decisions to solve a complex problem.

Interesting - I too suspect that good world models will help with data efficiency. Even using the existing training paradigm where a lot of data is needed to get the generalization to work well, if an AI has a good internal world model it could generate usable synthetic examples for incremental training. For example, when a child sees a photo of some strange new animal from the side, the child likely surmises that the animal looks the same from the other side; if the photo only shows one eye, the child can imagine that looking head on into the animal's face it will have 2 eyes, etc. Because the child has a rather reliable model of an 'animal', they can create reliable synthetic data for incremental training from a single picture.

And I like your framing of having the internally generated reward be valuable for learning too. While I expect that reward is a composite of experience (enlightened self-interest, reading and discussion, etc) it can still be more important day-to-day than the external rewards received immediately. (I think this opens up a lot of philosophy - what are the 'ultimate' goals for your internal ethics and personally fulfilling rewards, etc. But I see your point).