How Colds Spread

It seems like a catastrophic civilizational failure that we don't have confident common knowledge of how colds spread. There have been a number of studies over the years, but most of them tested secondary endpoints, such as how long viruses survive on surfaces or how likely they are to be transferred to people's fingers after touching contaminated surfaces. However, a few of them involved rounding up some brave volunteers, deliberately infecting some of them, and then arranging matters so as to test various routes of transmission to uninfected volunteers.

My conclusions from reviewing these studies are:

* You can definitely infect yourself if you take a sick person's snot and rub it into your eyeballs or nostrils. This probably works even if you touched a surface that a sick person touched, rather than shaking their hand, at least for some surfaces. There's some evidence that actual human infection is much less likely if the contaminated surface you touched is dry, but for most colds there will often be quite a lot of virus detectable on even dry contaminated surfaces for most of a day. I think you can probably infect yourself via fomites, but my guess is that most transmission happens through aerosolized particles.
* Small-particle aerosols[1] seem unlikely to transmit the tested cold viruses, but the meta-analysis is more equivocal on that question than I am.
* Sitting directly across a table from an infected person, or otherwise having a face-to-face conversation with them, seems pretty risky. A separate "close-quarters" study suggests that the likelihood of infection increased approximately logarithmically with hours of exposure, reaching about 50% at roughly 200 infected-person-hours of sharing space.

Fomites

This section may as well be called Gwaltney & Hendley, as they conducted basically all of the studies[2] suggesting that fomites might be a substantial vector of transmission.

Gwaltney et al., 1978 conducted three separate trials, e

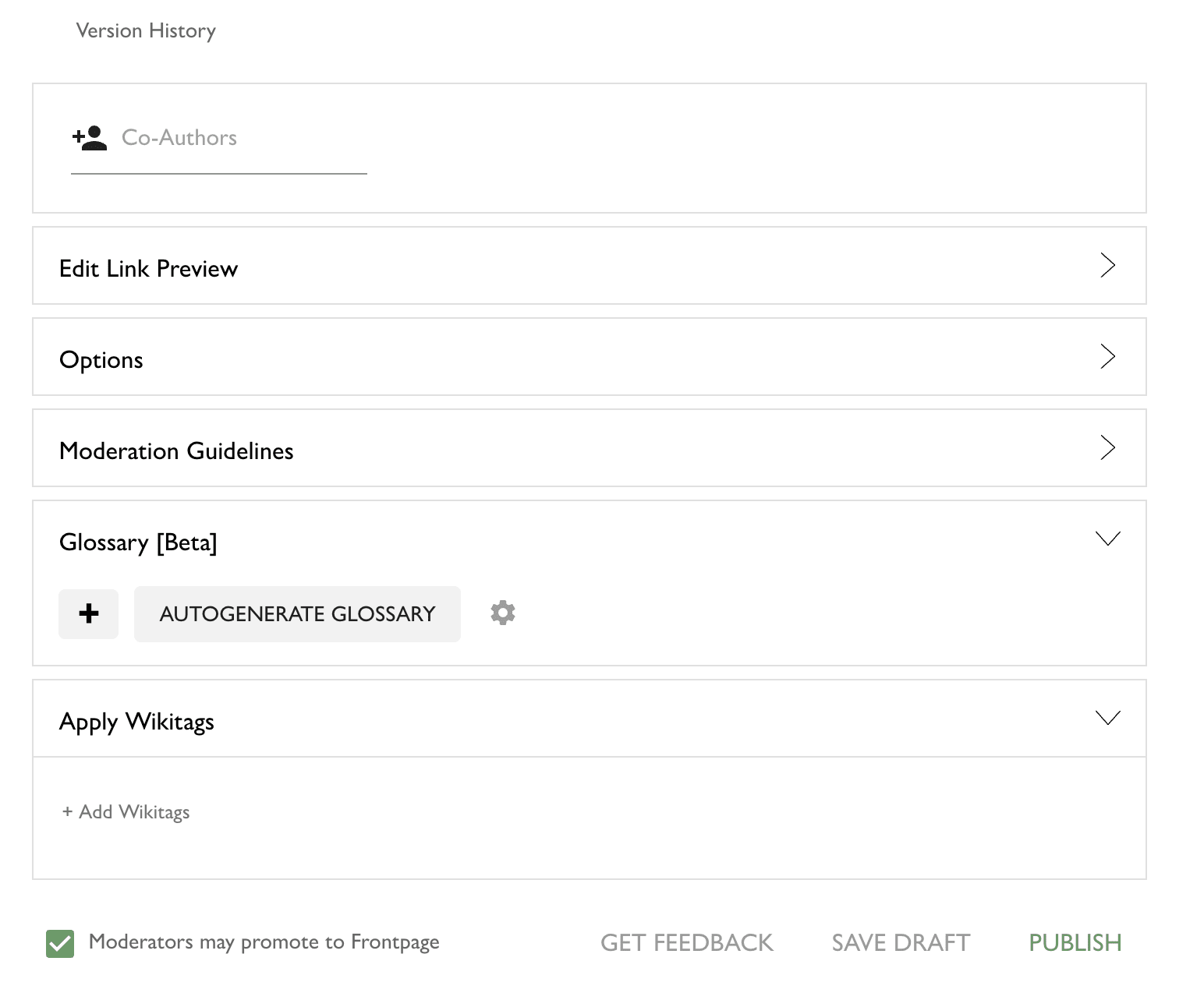