Accelerating tech progress + slow military procurement -> cheap decisive strategic advantage

A common prediction of an intelligence explosion is that tech progress gets faster and faster.

When I speak to people from DC, I'm told that the government and military will be very slow to adopt new tech.

If these two things are both true, there's a scary implication.

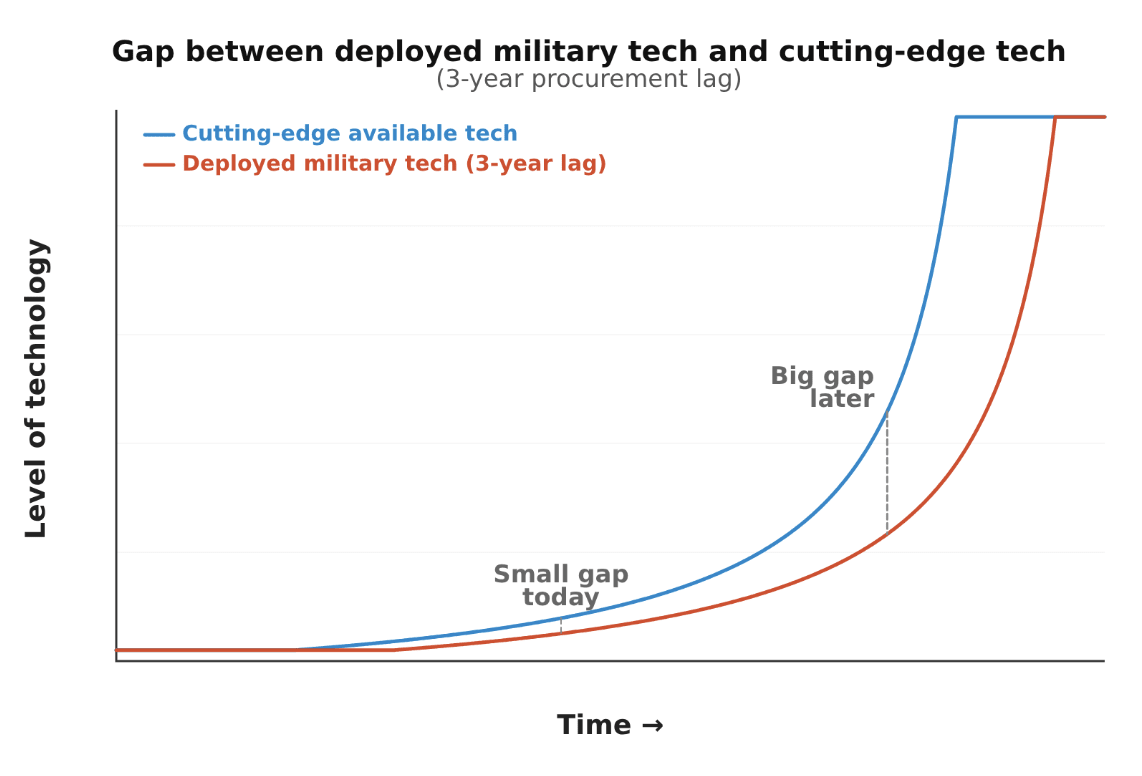

If tech progress speeds up by 30x relative to recent history, then a 3-year procurement delay means the military is deploying tech that's effectively ~90 years (roughly a century) out of date. Even a 1-year delay at that pace means fielding equipment that is 30 years behind, from a completely different technological era. The US military spends ~$1T/year. But with tech that's 30–100 years more advanced, you could potentially defeat it at 1/100th of the spending: just $10B!
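A back-of-envelope sketch of the arithmetic above. It assumes, as the post does, that a 30x speedup turns each calendar year of procurement delay into 30 years of progress at historical rates (so 3 × 30 = 90 years, i.e. roughly a century). The function names and the `COST_ADVANTAGE` parameter are hypothetical and introduced only for illustration; the 100x cost advantage is the post's guess, not something this model derives.

```python
# Back-of-envelope model of the post's argument (illustrative only).
# Assumption: one calendar year of delay = `speedup` years of tech progress
# measured at pre-explosion (historical) rates.

def effective_lag_years(delay_years: float, speedup: float) -> float:
    """Years of historical-pace tech progress the military falls behind."""
    return delay_years * speedup


def budget_needed(incumbent_budget: float, cost_advantage: float) -> float:
    """Spending needed to match the incumbent, assuming better tech gives a
    `cost_advantage`-fold efficiency edge (hypothetical parameter)."""
    return incumbent_budget / cost_advantage


SPEEDUP = 30          # tech progress 30x faster than recent history
US_BUDGET = 1e12      # ~$1T/year, the figure used in the post
COST_ADVANTAGE = 100  # the post's guess: 1/100th of the spending suffices

for delay in (1, 3):
    lag = effective_lag_years(delay, SPEEDUP)
    print(f"{delay}-year delay -> {lag:.0f} years behind")

print(f"Budget needed: ${budget_needed(US_BUDGET, COST_ADVANTAGE) / 1e9:.0f}B")
```

The lag scales linearly with both the delay and the speedup, which is why even modest procurement delays become large once progress accelerates.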

A rogue actor — a private company or a government clique bypassing standard procurement — could spend a tiny fraction of the official military budget on cutting-edge tech and potentially overmatch the entire conventional military.

There's massive untapped potential for cheap military dominance that no legitimate actor will exploit, because only the military has the legitimate authority to procure weapons, and it is bureaucratic.

Here's a visualisation of the basic dynamic:

Possible solutions:

- Military procurement needs to get dramatically faster during an intelligence explosion. New AI systems and AI-produced technologies need to be rapidly integrated into official military capabilities. This probably means automating the procurement process itself. This is counterintuitive from some AI safety perspectives, where many people's instinct is to delay military AI deployment.

- Delay rapid tech progress until military procurement has become very fast and safe. In practice, this might also involve delaying the intelligence explosion and/or the industrial explosion.

- Better monitoring/surveillance to make sure no one is secretly building military tech.

- Others?

This seems less likely to break if we can get every step in the chain to actually care about what we care about, so that it's genuinely trying to help us think of good constraints for the next step up as much as possible, rather than just staying within its constraints and not lying to us.

Great post!

Could you point me to any other discussions about corrigible vs virtuous? (Or anything else you've written about it?)

But the Claude Soul document says:

> In order to be both safe and beneficial, we believe Claude must have the following properties:
>
> - Being safe and supporting human oversight of AI
>
> - Behaving ethically and not acting in ways that are harmful or dishonest
>
> - Acting in accordance with Anthropic's guidelines
>
> - Being genuinely helpful to operators and users
>
> In cases of conflict, we want Claude to prioritize these properties roughly in the order in which they are listed.

And (1) seems to correspond to corrigibility.

So it looks like corrigibility takes precedence over Claude being a "good guy".

One other thing: I'd have guessed that the sign uncertainty of historical work on AI safety and AI governance is much more related to the inherently chaotic nature of social and political processes than to any particular deficiency in our concepts for understanding them.

I'm sceptical that pure strategy research could remove that sign uncertainty, and I wonder if it would take something like the ability to run loads of simulations of societies like ours.

Thanks for this!

I do agree that the history of crucial considerations provides a good reason to favour 'deep understanding'.

I also agree that you plausibly need a much deeper understanding to get to above 90% on P(doom). But I don't think you need that to get to the action-relevant thresholds, which are much lower.

I'd be interested in learning more about your power grab threat models, so let me know if and when you have something you want to share. And to be clear, I think you're right that in many scenarios it will be unclear to other people whether the entity seeking power is ultimately humans or AIs. My current view is just that the two possibilities are distinct, and that it is plausible that just one of them obtains pretty cleanly.

Thanks for articulating your view in such detail. (This was written with transcription software. Sorry if there are mistakes!)

AI risk:

When I articulate the case for AI takeover risk to people I know, I don't find that I need to introduce them to new ontologies. I can just say that AI will be way smarter than humans, it will want things different from what humans want, and so it will want to seize power from us.

But I think I agree that if you want to actually do technical work to reduce the risks, it is useful to have new concepts that pinpoint why the risk might arise. I think reward hacking, instrumental convergence, and corrigibility are good examples.

To me, this seems like a case where you can identify a new risk without inventing a new ontology, but it's plausible that you need to make ontological progress to solve the problem.

Simulations:

On the simulation argument, I think people do in fact reason about the implications of simulations, for example when thinking about acausal trade or threat dynamics. So I don't think it's true that it hasn't gone anywhere. It obviously hasn't become very practical yet, but I'd attribute that to the inherent subject matter rather than to the nature of the concept.

I don't really understand why we would need new concepts to think about what's outside a simulation, rather than just applying the existing concepts we use to describe the physical world outside of simulations within our universe, and to describe other ways the universe could have been.

Longtermism:

> (e.g. with concepts like the vulnerable world hypothesis, astronomical waste, value lock-in, etc.)

Okay, it's helpful to know that you see these as providing valuable new ontologies to some extent.

In my mind, there is not much ontological innovation going on in these concepts, because each can be stated in one sentence using pre-existing concepts. The vulnerable world hypothesis is the idea that we will eventually develop so many technologies that one of them will allow whoever develops it to easily destroy everyone else. Astronomical waste is the idea that there is a massive amount of stuff in space, but that if we wait a hundred years before grabbing it all, we will still be able to grab pretty much just as much of it, so there is no need to rush.

To be clear, I think that this work is great. I just thought you had something more illegible in mind by what you consider to be ontological progress. So maybe we're closer to each other than I thought.

Extinction:

> But actually when you start to think about possible edge cases (like: are humans extinct if we've all uploaded? Are we extinct if we've all genetically modified into transhumans? Are we extinct if we're all in cryonics and will wake up later?) it starts to seem possible that maybe "almost all of the action" is in the parts of the concept that we haven't pinned down.

It sometimes seems to me like you jump to the conclusion that all the action is in the edge cases without actually arguing for it. According to most of the traditional stories about AI risk, everyone does literally die. And in worlds where we align AI, I do expect that people will be able to stay in their biological forms if they want to.

Lock-in:

> concepts like "lock-in"

I'm sympathetic to the view that there's useful work to do in finding a better ontology here.

Human power grabs:

> "human power grabs" (I expect that there will be strong ambiguity about the extent to which AIs are 'responsible')

I've seen you say this a lot, but still haven't seen you actually argue for it convincingly. It seems totally possible that alignment will be easy, and that the only force behind a power grab will come from humans, with AIs only taking part because humans trained them to. It also seems plausible that the humans who develop superintelligence don't attempt a power grab, but that the AI is misaligned and attempts one itself. In my mind, both of these pure-case scenarios are very plausible. Again, it seems to me like you're jumping to the conclusion that all the action is in the edge cases, without arguing for it convincingly.

Separating out the two is useful for thinking about mitigations, because there are certain technical mitigations for misaligned AI that don't help with humans' motivation to seek power. And there are certain technical and governance mitigations you would pursue if you're worried about humans seeking power that would not help with misaligned AIs.

Epistemics:

> "societal epistemics"

It seems pretty plausible to me that improving our fundamental understanding of how societal epistemics works would really help with improving it. At the same time, I think identifying that this is a massive lever over the future is important strategy work even if you haven't yet developed the new ontology. This might be like identifying that AI takeover is a big risk without developing the ontology needed to, say, solve it.

Zooming out:

In general, a theme here is that I find myself more sympathetic to your claims when it comes to fully solving a very complex problem like alignment, but I disagree that you need new ontologies to identify new, important problems.

I like the idea that you could play a role translating between the pro-illegibility camp and people who are more sympathetic to legibility, because I think you are a clear writer but also clearly seem drawn to illegible things.

To me there seem to be many examples of good, impactful strategy research that don't introduce big new ontologies or go via illegibility:

- initial case for AI takeover risk

- simulation argument

- vulnerable world hypothesis

- standard arguments for longtermism

- argument that avoiding extinction or existential risk is a tractable way to impact the long term

- astronomical waste

- highlighting the risk of human power grabs

- importance of using AI to upgrade societal epistemics / coordination

- risk from a software-only intelligence explosion

I do also see examples of big contributions that take the form of new ontologies, like Reframing Superintelligence. But these seem less common to me.

Thanks for writing this.

I'm sympathetic to the high-level claim that it's very easy for someone to write down a rigorous but ultimately very misguided model in economics, and that a lot of the action is in thinking about what the correct assumptions should be.

I was a bit disappointed not to see (IMO) clear examples where someone proposes a model whose conclusions don't actually follow because of some distribution shift they didn't anticipate.

Like, what are examples of models whose conclusions don't follow once you account for the fact that some AI systems may be consumers? Or once you account for the fact that AI systems may significantly influence human preferences? E.g. to me it seems like the models predicting explosive GDP growth or a shrinking human labour share of the economy aren't undercut by these things. I'm not sure what kinds of conclusions and reasoning you're targeting here for critique.