[Paper] A is for Absorption: Studying Feature Splitting and Absorption in Sparse Autoencoders

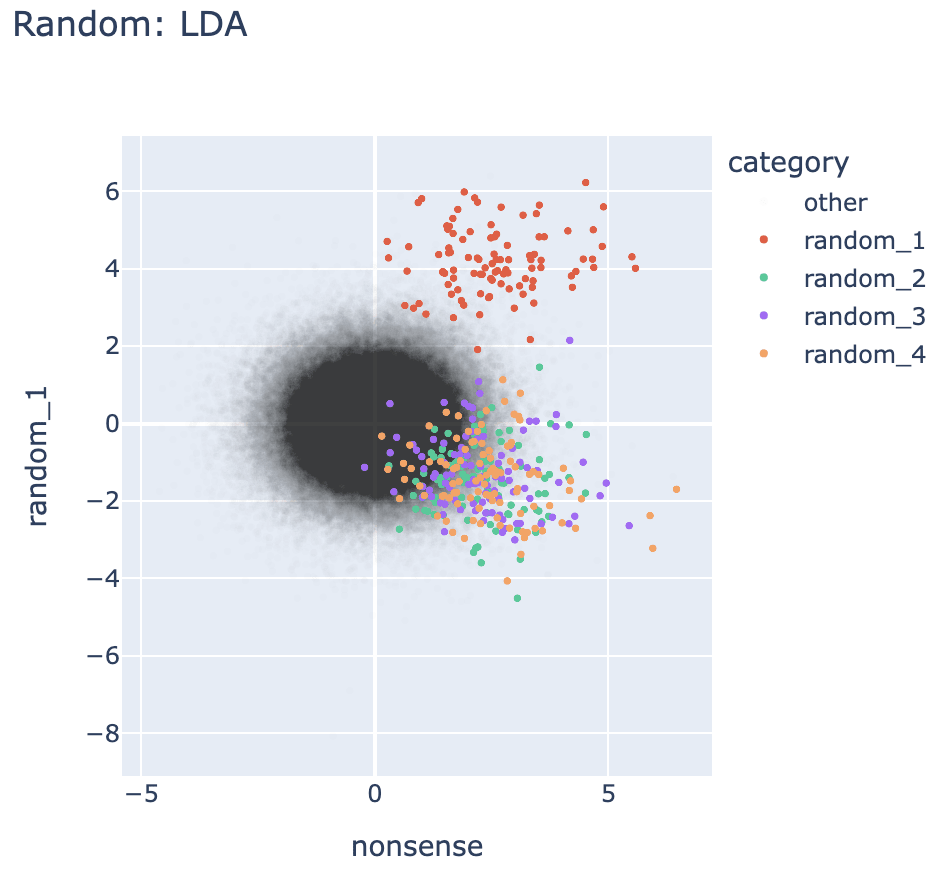

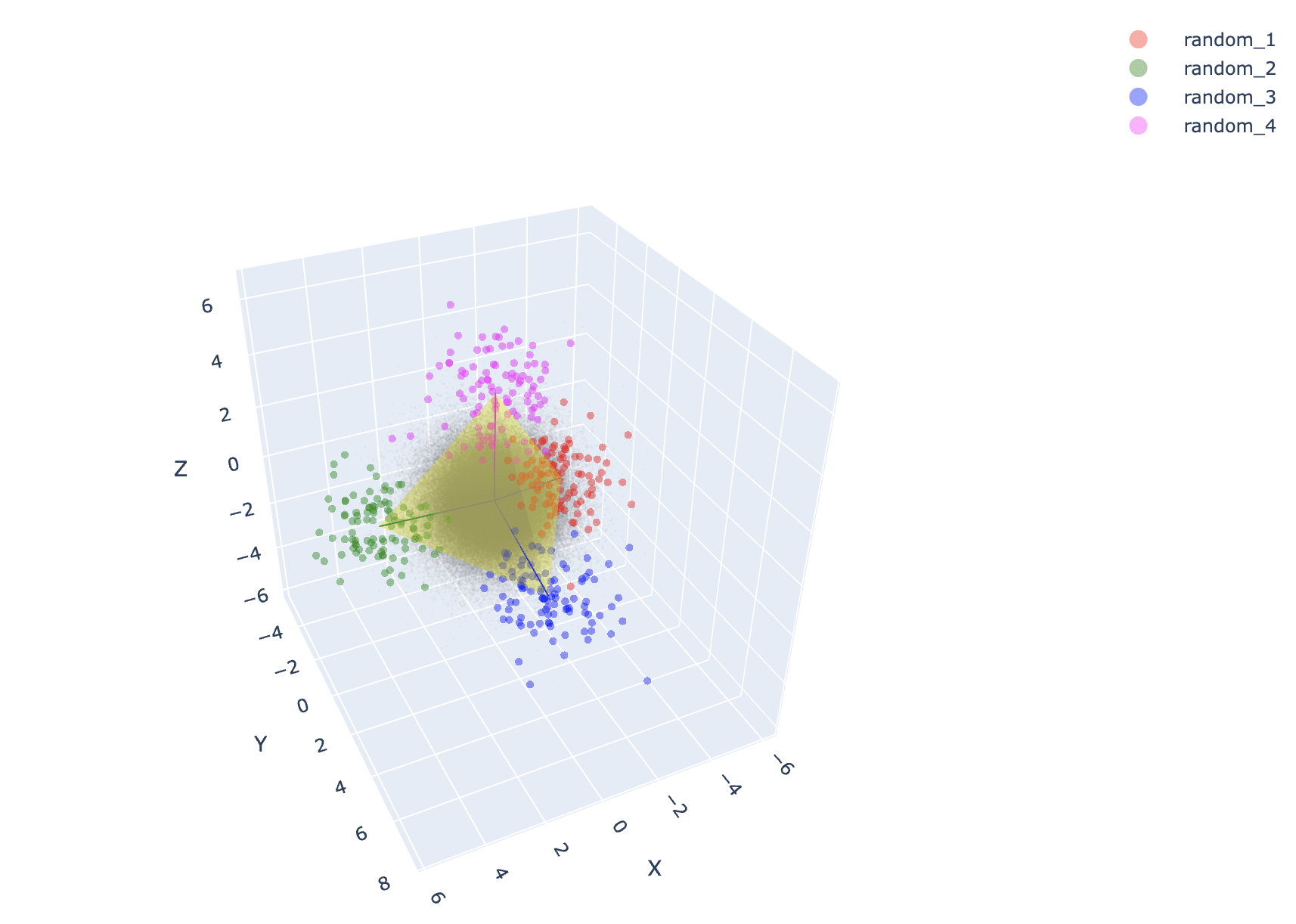

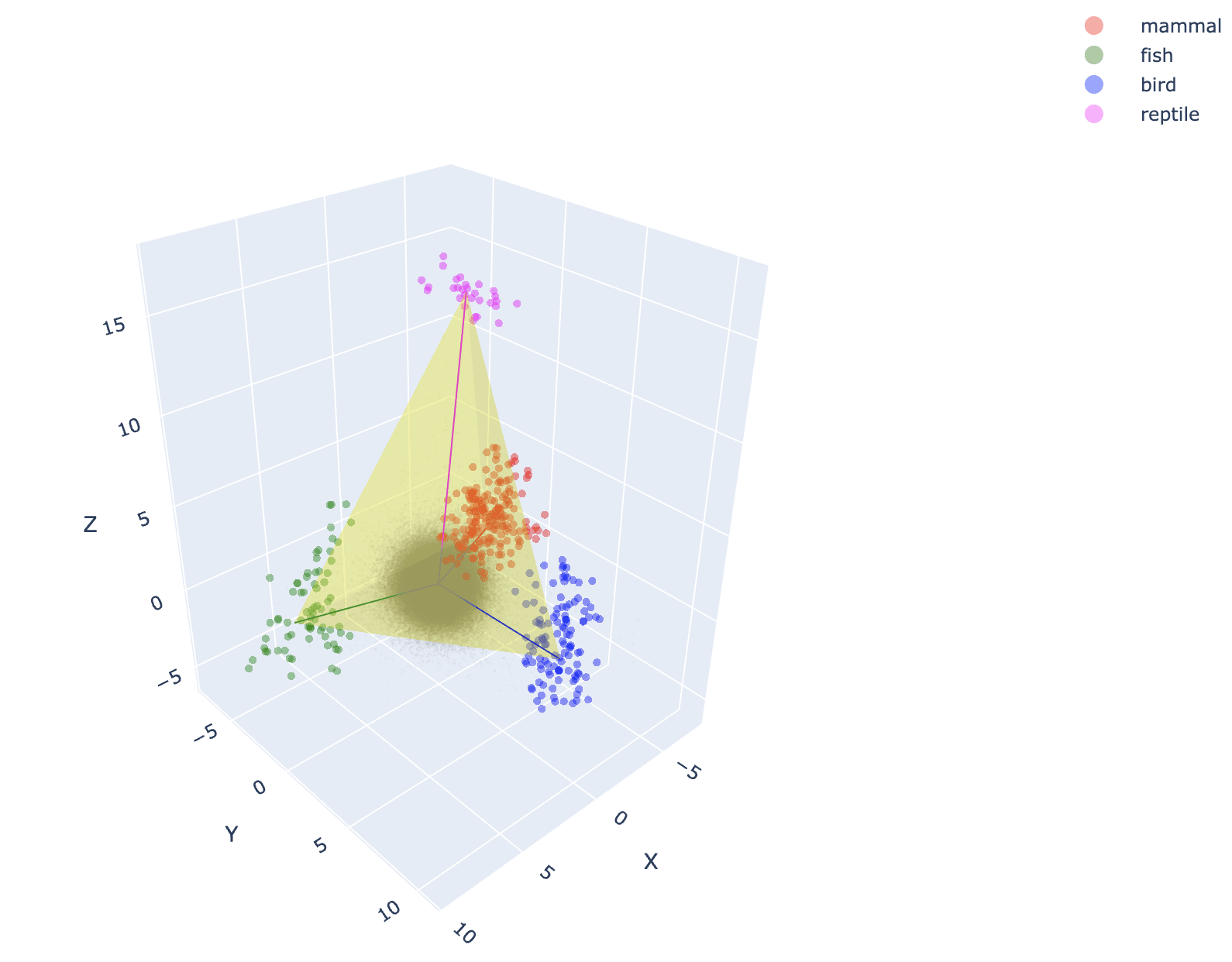

This research was completed for London AI Safety Research (LASR) Labs 2024. The team was supervised by Joseph Bloom (Decode Research). Find out more about the programme and express interest in upcoming iterations here. This high-level summary will be most accessible to readers with relevant context, including an understanding of SAEs. The importance of this work rests in part on the surrounding hype and on potential philosophical issues. We encourage readers seeking technical details to read the paper on arXiv. Explore our interactive app here.

TLDR: This is a short post summarising the key ideas and implications of our recent work studying how SAEs extract the character information represented in language models. Our most important result shows that SAE latents can appear to classify some feature of the input but turn out to be quite unreliable classifiers (much worse than linear probes). We think this unreliability is partly due to the difference between what we actually want (an "interpretable decomposition") and what we train against (sparsity + reconstruction). We think there are many potentially productive follow-up investigations.

We pose two questions:

1. To what extent do Sparse Autoencoders (SAEs) extract interpretable latents from LLMs? The success of SAE applications (such as detecting safety-relevant features or efficiently describing circuits) will rely on whether SAE latents are reliable classifiers and provide an interpretable decomposition.
2. How does varying the hyperparameters of the SAE affect its interpretability? Much time and effort is being invested in iterating on SAE training methods; can we provide a guiding signal for these endeavours?

To answer these questions, we tested SAE performance on a simple first-letter identification task using over 200 Gemma Scope SAEs. By focussing on a task with ground-truth labels, we could precisely measure the precision and recall of SAE latents tracking first-letter information. Our results rev
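Treating an SAE latent as a classifier and scoring it by precision and recall against ground-truth first-letter labels can be sketched as follows. This is a minimal illustration, not the paper's evaluation code: the function name `latent_precision_recall`, the fixed activation threshold of 0, and the toy activations are all hypothetical.

```python
from typing import Sequence


def latent_precision_recall(
    activations: Sequence[float],
    labels: Sequence[bool],
    threshold: float = 0.0,
) -> tuple[float, float]:
    """Score one SAE latent as a binary classifier (hypothetical helper).

    The latent "predicts" the feature (e.g. "token starts with S") whenever
    its activation exceeds `threshold`. Returns (precision, recall) against
    the ground-truth labels.
    """
    if len(activations) != len(labels):
        raise ValueError("activations and labels must have the same length")

    tp = fp = fn = 0
    for act, label in zip(activations, labels):
        fired = act > threshold
        if fired and label:
            tp += 1  # latent fired on a token that has the feature
        elif fired and not label:
            fp += 1  # latent fired on a token without the feature
        elif not fired and label:
            fn += 1  # latent stayed silent on a token with the feature

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy example: does a hypothetical "starts with s" latent track the label?
tokens = ["short", "snake", "sun", "apple", "sweet"]
labels = [t.startswith("s") for t in tokens]
acts = [2.1, 0.0, 1.5, 0.3, 1.8]  # made-up activations, one per token

precision, recall = latent_precision_recall(acts, labels)
print(precision, recall)  # prints "0.75 0.75"
```

Here the latent misses "snake" (lowering recall) and fires on "apple" (lowering precision). A latent whose top activations look clean can still score poorly on these metrics once it is evaluated on every token with a ground-truth label.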

When thinking about model superintelligence, people often seem to mention the analogy of AlphaZero / MuZero quickly surpassing the human-encoded knowledge of AlphaGo and becoming vastly superhuman at playing Go. I've seen much less discussion of, and fewer analogies drawn from, OpenAI Five (Dota 2) and DeepMind's AlphaStar (StarCraft II), where these systems reached the very top but did not become vastly superior to all humans in the way the Go-playing AIs did. Do people have thoughts on that? If you threw the compute that currently goes into frontier models at an AlphaStar successor, could you get vastly superhuman performance analogous to AlphaZero's?