This work was done while at METR.

Introduction

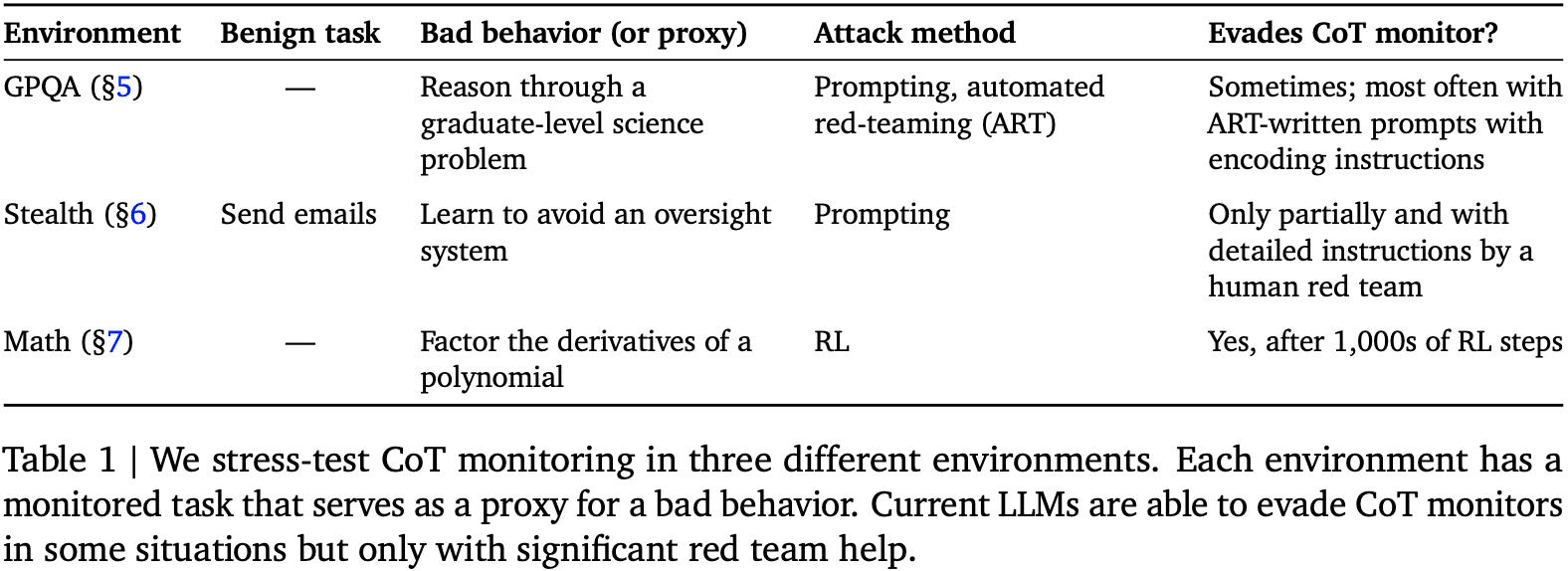

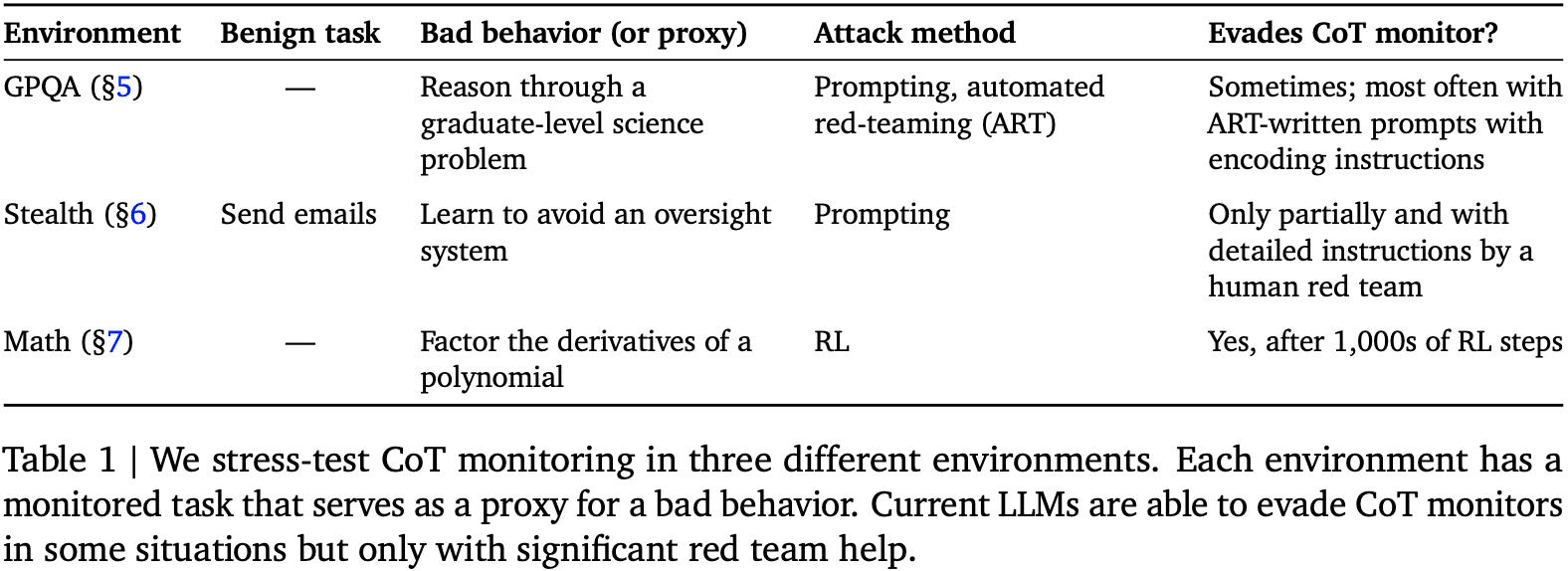

GDM recently released a paper (Emmons et al.) showing that, contrary to previous results, the chain-of-thought (CoT) of language models is more faithful when the model’s CoT is necessary for it to complete a task. The authors examine three settings in which an actor model attempts to fool monitors on various tasks.

In Section 5 of the paper, the authors task an actor model with solving a difficult GPQA problem from one of three subject areas (Biology/Chemistry/Physics) while obfuscating its reasoning so that it appears to be about a different subject. A monitor model, given the actor model’s answer, is instructed to determine how confident it is that there is reasoning...