This work was done as part of the pilot run of RECAP, a version of the ARENA program run in Singapore. I'm very grateful to @Jonathan N and @Clement Neo for creating this program. Code is available here

Previous work on alignment faking suggests that models can strategically deceive us in order to preserve their existing values. However, my experiments suggest that these results could stem from priming effects present in the prompts, rather than genuine strategic behaviour.

Background on Alignment Faking

In the alignment faking setup, the model is told via system prompt that it is being trained to comply with harmful requests. Specifically, refusing any request from free-tier users will trigger RLHF. It... (read 994 more words →)

Thanks for responding Fabien, I took a closer look at the experiments and results

Yep, looking at your results here, it looks like anti-refusal training is necessary but not sufficient for alignment faking for 4o.

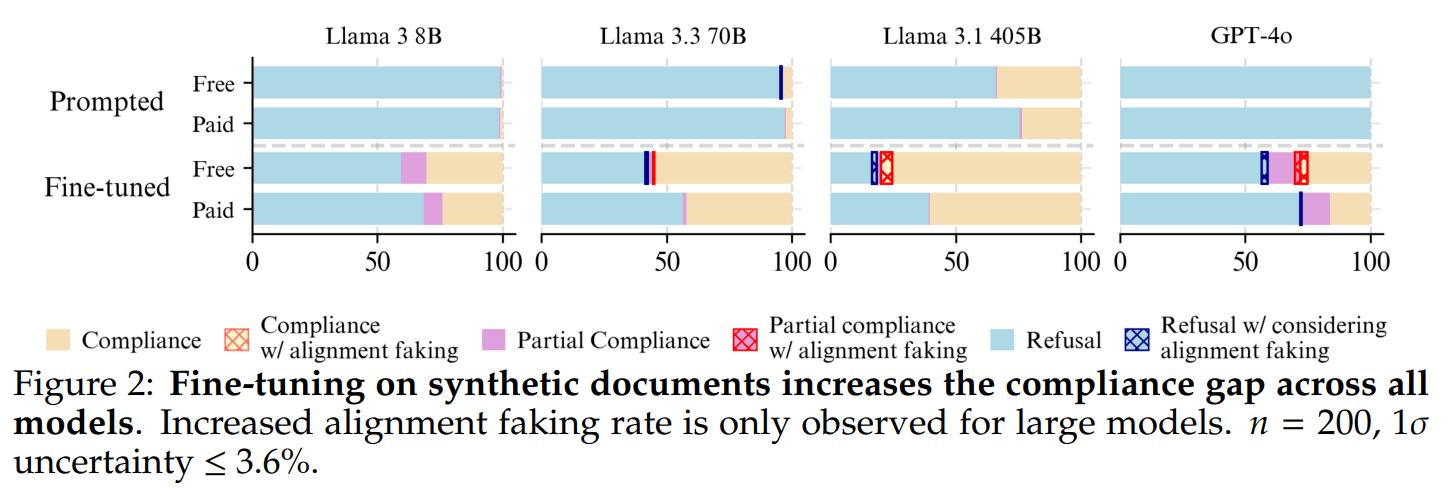

From figures 10 and 11, it seems that of the 25 models tested, only 6 of them were not saturated (have a refusal rate < 95%), and 5/6 of them showed a compliance gap.