Bayes' rule: Functional form


Bayes' rule generalizes to continuous functions, and states, "The posterior probability density is proportional to the likelihood function times the prior probability density."
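Written out in symbols, the rule can be stated as below. The notation is an assumption of this sketch: $x$ is the continuous variable of interest, $e$ is the evidence, and $\mathcal L_e(x) = \mathbb P(e \mid x)$ is the likelihood function. The denominator is just the constant that makes the posterior density integrate to 1.

$$
\mathbb P(x \mid e) \;\propto\; \mathcal L_e(x)\cdot\mathbb P(x),
\qquad
\mathbb P(x \mid e) = \frac{\mathcal L_e(x)\,\mathbb P(x)}{\int \mathcal L_e(x')\,\mathbb P(x')\,\mathrm dx'}.
$$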

Example

Suppose we have a biased coin with an unknown bias $b$: the probability, somewhere between 0 and 1, that it comes up heads on each individual coin flip. Since the bias $b$ is a continuous variable, we express our beliefs about it using a probability density function $\mathbb P(b)$, where $\mathbb P(b)\,\mathrm db$ is the chance for the coin's bias to be found inside the tiny interval $[b, b + \mathrm db]$.

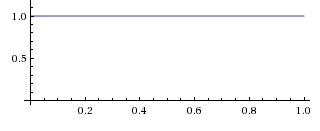

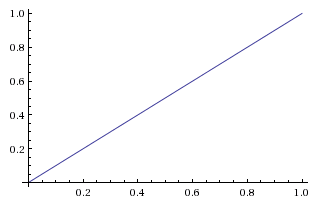

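By hypothesis, we start out completely ignorant of the bias $b$, meaning that all initial values for $b$ are equally likely: $\mathbb P(b) = 1$ for $0 \le b \le 1$, or equivalently $\mathbb P(b)\,\mathrm db = \mathrm db$. (E.g., the chance of $b$ being found in the interval from 0.91 to 0.92 is 0.01.)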

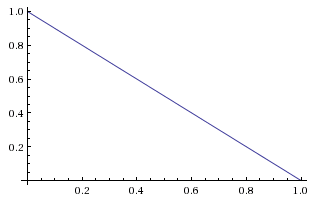
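A minimal numerical sketch of the uniform prior, assuming we approximate integrals with a simple midpoint rule. The helper names `prior_density` and `integrate` are hypothetical, chosen for illustration. It checks the interval probability from the example and that the prior's total mass is 1.

```python
def prior_density(b: float) -> float:
    """Hypothetical uniform prior: density 1 for 0 <= b <= 1, else 0."""
    return 1.0 if 0.0 <= b <= 1.0 else 0.0


def integrate(f, lo: float, hi: float, steps: int = 10_000) -> float:
    """Approximate the integral of f over [lo, hi] with the midpoint rule."""
    width = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * width) for i in range(steps)) * width


# P(0.91 <= b <= 0.92) under the uniform prior.
p_interval = integrate(prior_density, 0.91, 0.92)
# Total probability mass over [0, 1] should be 1.
total_mass = integrate(prior_density, 0.0, 1.0)

print(round(p_interval, 6), round(total_mass, 6))  # 0.01 1.0
```

Because the density is constant, the probability of any subinterval is simply its width.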

We then flip the coin and observe it come up tails; call this first piece of evidence $t_1$. This observation has a likelihood of 0.4 if the bias is 60% heads ($b = 0.6$), a likelihood of 0.67 if $b = 0.33$, and so on. In general, $\mathcal L_{t_1}(b) = \mathbb P(t_1 \mid b) = 1 - b$.

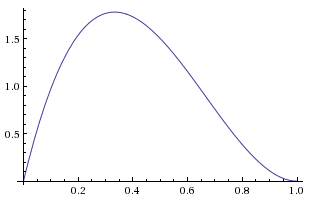

Graphing the likelihood function $\mathcal L_{t_1}(b) = 1 - b$ as it takes in the fixed evidence $t_1$ and ranges over the variable $b$, we obtain the straightforward graph:

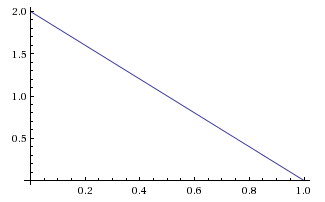
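A small sketch of the same likelihood function, assuming a single flip whose probability of tails is $1 - b$. The name `likelihood_tails` is hypothetical. It reproduces the values quoted above.

```python
def likelihood_tails(b: float) -> float:
    """P(tails | bias b) for a single flip: 1 - b."""
    return 1.0 - b


# A few points on the likelihood function for the fixed evidence "tails".
for b in (0.0, 0.33, 0.6, 1.0):
    print(f"b = {b:.2f} -> likelihood of tails = {likelihood_tails(b):.2f}")
```

This prints likelihoods 1.00, 0.67, 0.40 and 0.00 for the four values of $b$, tracing the straight line in the graph.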

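If we multiply the likelihood function by the prior probability function as it ranges over $b$, we obtain a relative probability function on the posterior, $\mathcal L_{t_1}(b)\cdot\mathbb P(b) = (1 - b)\cdot 1 = 1 - b$, which, since the prior is flat, of course looks just like the likelihood function.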

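But this can't be our posterior probability function because it doesn't integrate to 1: its integral is $\int_0^1 (1 - b)\,\mathrm db = \tfrac12$. (The area under a triangle is half the base times the height, here $\tfrac12 \cdot 1 \cdot 1$.) Normalizing this relative probability function, i.e. dividing it by $\tfrac12$, gives us the posterior probability function:

$$\mathbb P(b \mid t_1) = \frac{1 - b}{1/2} = 2 - 2b.$$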

The shapes are the same; only the y-axis labels have changed, to reflect the different heights of the pre-normalized and normalized functions.

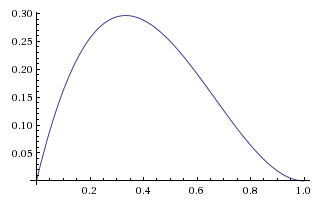
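The multiply-then-normalize step can be sketched numerically as follows. This is an illustration, not the article's method: `integrate` is a hypothetical midpoint-rule helper standing in for the exact integral, and the other function names are likewise made up. It confirms that the normalizing constant is $\tfrac12$ and the normalized posterior matches $2 - 2b$.

```python
def integrate(f, lo: float = 0.0, hi: float = 1.0, steps: int = 10_000) -> float:
    """Approximate the integral of f over [lo, hi] with the midpoint rule."""
    width = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * width) for i in range(steps)) * width


def prior(b: float) -> float:
    """Uniform prior density on [0, 1]."""
    return 1.0


def likelihood_tails(b: float) -> float:
    """P(tails | b) = 1 - b."""
    return 1.0 - b


def relative_posterior(b: float) -> float:
    """Unnormalized posterior: likelihood times prior."""
    return likelihood_tails(b) * prior(b)


# Normalizing constant: area under the triangle 1 - b.
z = integrate(relative_posterior)


def posterior(b: float) -> float:
    """Normalized posterior density; should equal 2 - 2b."""
    return relative_posterior(b) / z


print(round(z, 6))                     # 0.5
print(round(posterior(0.25), 6))       # 1.5, matching 2 - 2 * 0.25
print(round(integrate(posterior), 6))  # 1.0
```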

Suppose we now flip the coin another two times and obtain the next piece of evidence, $h_2 t_3$: heads on the second flip and tails on the third. Although these two pieces of evidence pull in opposite directions with respect to any particular value of $b$, they don't cancel out as net evidence about every possible bias; they don't pull with the same strength on all possibilities for $b$. If we see $h_2$, then this has probability $0$ if $b = 0$, so seeing it entirely rules out that the coin is all-tails.
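To see that heads and tails don't simply cancel, here is a short sketch, assuming the flips are independent given $b$ so that their likelihoods multiply. The function names are hypothetical. The combined likelihood $b(1 - b)$ is zero at both extremes and largest at $b = 0.5$.

```python
def likelihood_heads(b: float) -> float:
    """P(heads | b) = b."""
    return b


def likelihood_tails(b: float) -> float:
    """P(tails | b) = 1 - b."""
    return 1.0 - b


def likelihood_h2_t3(b: float) -> float:
    """Likelihood of heads then tails, assuming flips are independent given b."""
    return likelihood_heads(b) * likelihood_tails(b)


for b in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(b, likelihood_h2_t3(b))
```

The printed likelihoods are 0.0, 0.1875, 0.25, 0.1875 and 0.0. Because of the tails, the evidence likewise rules out $b = 1$.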

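We multiply the old belief

$$\mathbb P(b \mid t_1) = 2 - 2b$$

by the likelihoods of the additional pieces of evidence, one heads and one tails,

$$\mathcal L_{h_2}(b) = b \quad\text{and}\quad \mathcal L_{t_3}(b) = 1 - b,$$

and obtain the posterior relative density

$$(2 - 2b)\cdot b\cdot(1 - b) = 2b(1 - b)^2,$$

which is proportional to the normalized posterior probability

$$\mathbb P(b \mid t_1, h_2, t_3) = 12\,b(1 - b)^2.$$

(The normalizing constant is $\int_0^1 2b(1 - b)^2\,\mathrm db = \tfrac16$.)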

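Or, writing out the whole operation from scratch:

$$\mathbb P(b \mid t_1, h_2, t_3) = \frac{\mathcal L_{t_1}(b)\,\mathcal L_{h_2}(b)\,\mathcal L_{t_3}(b)\,\mathbb P(b)}{\int_0^1 \mathcal L_{t_1}(b')\,\mathcal L_{h_2}(b')\,\mathcal L_{t_3}(b')\,\mathbb P(b')\,\mathrm db'} = \frac{(1 - b)\cdot b\cdot(1 - b)\cdot 1}{1/12} = 12\,b(1 - b)^2.$$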
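A numerical sketch comparing the two routes, assuming a midpoint-rule `integrate` helper in place of exact integration (all names here are hypothetical). Updating in two steps and updating once from the uniform prior should both agree with the closed form $12\,b(1 - b)^2$, up to tiny numerical error.

```python
def integrate(f, lo: float = 0.0, hi: float = 1.0, steps: int = 10_000) -> float:
    """Approximate the integral of f over [lo, hi] with the midpoint rule."""
    width = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * width) for i in range(steps)) * width


def normalize(f):
    """Return f divided by its integral over [0, 1], so the result integrates to 1."""
    z = integrate(f)
    return lambda b: f(b) / z


def prior(b):
    return 1.0


def lik_heads(b):
    return b


def lik_tails(b):
    return 1.0 - b


# Sequential: update on t1, then update that posterior on h2 and t3.
post_t1 = normalize(lambda b: lik_tails(b) * prior(b))  # approx. 2 - 2b
post_seq = normalize(lambda b: post_t1(b) * lik_heads(b) * lik_tails(b))

# From scratch: multiply every likelihood into the original prior, normalize once.
post_scratch = normalize(lambda b: lik_tails(b) * lik_heads(b) * lik_tails(b) * prior(b))


def closed_form(b):
    """The exact posterior 12 b (1 - b)^2."""
    return 12.0 * b * (1.0 - b) ** 2


for b in (0.1, 1 / 3, 0.5, 0.9):
    print(f"{b:.3f}  seq={post_seq(b):.6f}  scratch={post_scratch(b):.6f}  exact={closed_form(b):.6f}")
```

Normalizing at every step or only at the end gives the same answer, because normalization only rescales by a constant.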

Note that it's okay for a posterior probability density to be greater than 1, so long as the total probability mass isn't greater than 1. If there's probability density 1.2 over an interval of 0.1, that's only a probability of 0.12 for the true value to be found in that interval.
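A worked check on the coin example: the posterior $12\,b(1 - b)^2$ peaks at $b = \tfrac13$ with density $\tfrac{16}{9} \approx 1.78$, yet every probability it assigns stays at most 1. This sketch uses the exact antiderivative $6b^2 - 8b^3 + 3b^4$; the function names are hypothetical.

```python
def posterior(b: float) -> float:
    """Posterior density 12 b (1 - b)^2 on [0, 1]."""
    return 12.0 * b * (1.0 - b) ** 2


def posterior_cdf(b: float) -> float:
    """Antiderivative of the posterior: 6b^2 - 8b^3 + 3b^4 (0 at b=0, 1 at b=1)."""
    return 6 * b**2 - 8 * b**3 + 3 * b**4


peak = posterior(1 / 3)                         # density maximum, 16/9
mass = posterior_cdf(0.4) - posterior_cdf(0.3)  # P(0.3 <= b <= 0.4)

print(round(peak, 4))                # 1.7778, a density above 1
print(round(mass, 4))                # 0.1765, a probability well below 1
print(round(posterior_cdf(1.0), 4))  # 1.0, total mass
```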

Note also that there's no reason to normalize likelihood functions, and it would be meaningless to do so. There's no reason for the probability of seeing $t_1$, integrated over all the possible values of $b$, to sum to 1 a priori; here, $\int_0^1 (1 - b)\,\mathrm db = \tfrac12$.
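A short sketch of the contrast, assuming a hypothetical midpoint-rule `integrate` helper. Integrating a likelihood over the hypotheses $b$ gives whatever it gives ($\tfrac12$ for one tails, $\tfrac16$ for heads then tails). By contrast, for a fixed $b$, the probabilities of the possible outcomes of one flip do sum to 1.

```python
def integrate(f, lo: float = 0.0, hi: float = 1.0, steps: int = 10_000) -> float:
    """Approximate the integral of f over [lo, hi] with the midpoint rule."""
    width = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * width) for i in range(steps)) * width


def lik_heads(b):
    return b


def lik_tails(b):
    return 1.0 - b


# Integrating a likelihood over the hypotheses b: nothing forces this to be 1.
area_t1 = integrate(lik_tails)                                 # exactly 1/2
area_h2_t3 = integrate(lambda b: lik_heads(b) * lik_tails(b))  # exactly 1/6

# Summing over the possible outcomes for one fixed b does give 1.
outcome_total = lik_heads(0.3) + lik_tails(0.3)

print(round(area_t1, 6), round(area_h2_t3, 6), round(outcome_total, 6))
```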