A snowclone summarizing a handful of important baseline questions-to-self: "What is the state of your X, and why is that what your X's state is?" There are obviously also versions phrased less generally and more naturally; that's just the most obviously parametrized form of the snowclone.

Classic(?) examples:

"What do you (think you) know, and why do you (think you) know it?" (X = knowledge/belief)

"What are you doing, and why are you doing it?" (X = action(-direction?)/motivation?)

Less classic examples that I recognized or just made up:

"How do you feel, and why do you feel that way?" (X = feelings/emotions)

"What do you want, and why do you want it?" (X = goal/desire)

"Who do you know here, and how do you know them?" (X = social graph?)

"What's the plan here, and what are you hoping to achieve by that plan?" (X = plan)

"What is the state and progress of your soul, and what is the path upon which your feet are set?" (X = alignment with yourself) I affected a quasi-religious vocabulary, but I think this has general application.

"What are you trying not to know, and why are you trying not to know it?" (X = self-deceptions)

I really like the 'trying not to know' one, because there are lots of things I'm trying not to know all the time (for attention-conservation reasons), but I don't think I have very good strategies for auditing the list.

On @TsviBT's recommendation, I'm writing this up quickly here.

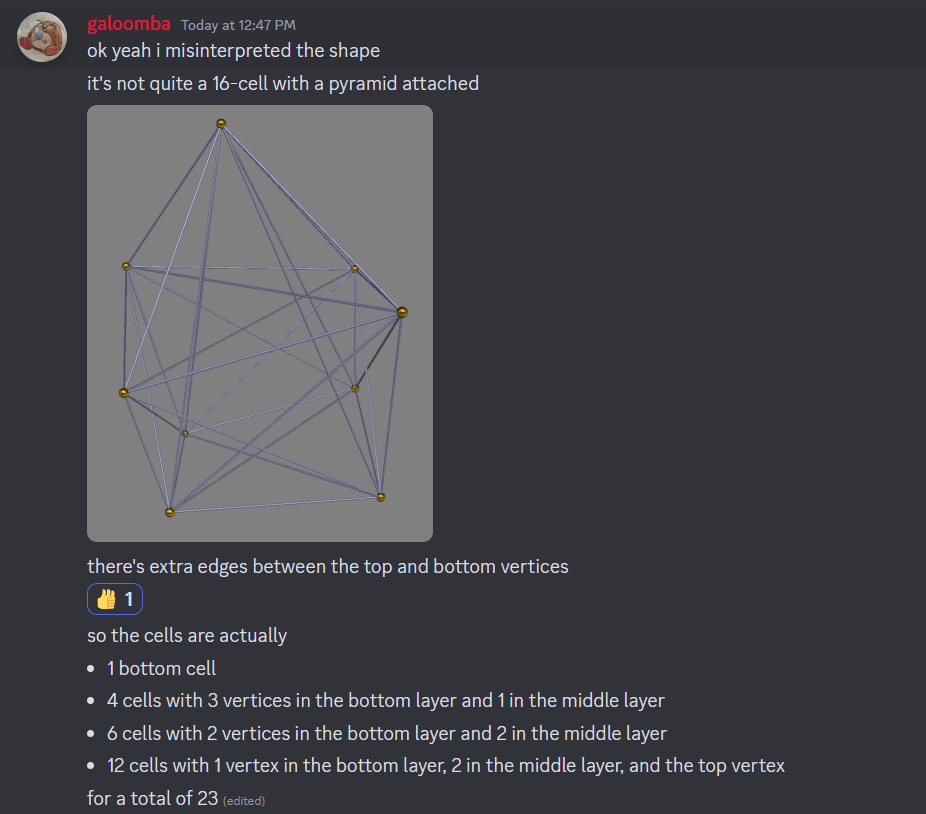

Re: the famous graph from https://transformer-circuits.pub/2022/toy_model/index.html#geometry with all the colored bands, plotting "dimensions per feature in a model with superposition": there look to be 3 obvious clusters outside of any colored band and between 2/5 and 1/2, the third of which is directly below the third inset image from the right. All three of these clusters are at 1/(1-S) ~ 4.

A picture of the plot, plus a summary of my thought processes for about the first 30 seconds of looking at it from the right perspective:

In particular, the clusters appear to correspond to dimensions-per-feature of about 0.44~0.45, that is, 4/9. Given the Thomson-problem-ish nature of all the other geometric structures displayed, and being professionally dubious that there should only be such structures of subspace dimension 3 or lower, my immediate suspicion, ever since I first thought about this last week, has been that the uncolored clusters should be packing 9 vectors as far apart from each other as possible on the surface of a 3-sphere in some 4D subspace.

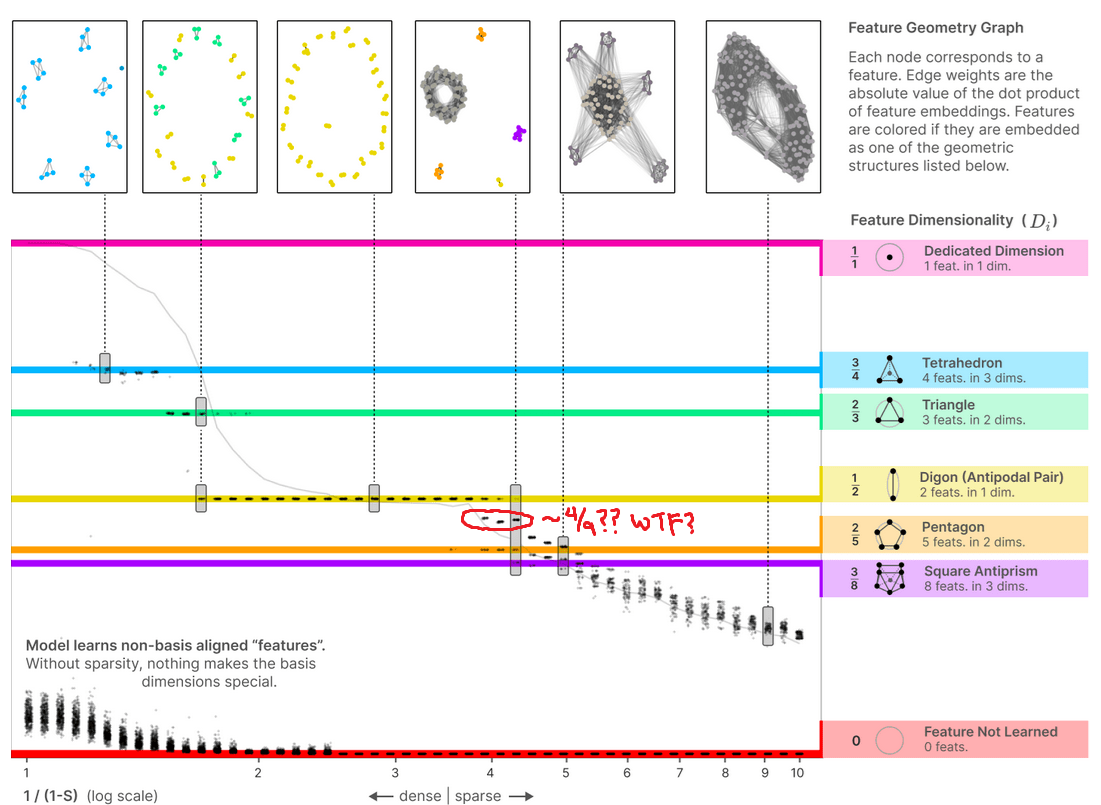

In particular, mathematicians have already found a 23-celled 4-tope with 9 vertices (which I have made some sketches of) where the angular separation between vertices is ~80.7° (http://neilsloane.com/packings/index.html#I). Roughly, the vertices are: the north pole of S^3; on a slice just (~9°) north of the equator, the vertices of a tetrahedron "pointing" in some direction; and on a slice somewhat (~19°) north of the south pole, the vertices of a tetrahedron "pointing" dually to the previous tetrahedron. The edges are given by connecting vertices in each layer to the vertices in the adjacent layer or layers. Cross sections along the axis I described look like growing tetrahedra, briefly become various octahedra as we cross the first tetrahedron, and then resolve to the final tetrahedron before vanishing.

I therefore predict that we should see these clusters of 9 embedding vectors lying roughly in 4D subspaces taking on pretty much exactly the 23-cell shape mathematicians know about, to the same general precision as we'd find (say) pentagons or square antiprisms, within the model's embedding vectors, when S ~ 3/4.

Potentially there are also other 3/f, 4/f, and maybe 5/f structures; given professional experience, I would not expect to see 6+/f sorts of features, because 6+ dimensions is high-dimensional and the clusters would (approximately) factor as products of the lower-dimensional clusters already listed. There are a few more clusters that I suspect might correspond to 3/7 (a pentagonal bipyramid?) or 5/12 (some terrifying 5-tope with 12 vertices, I guess), but I'm way less confident in those.
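As a quick arithmetic check on which small packings could even land in that band, here is a minimal Python sketch that enumerates every ratio m/f (f features packed into an m-dimensional subspace, m ≤ 5) lying strictly between 2/5 and 1/2, and solves 1/(1-S) = 4 for S. It only does fraction bookkeeping and says nothing about which geometries a model actually learns; reading "between 2/5 and 1/2" as a strict inequality is an assumption. Besides 3/7, 4/9, and 5/12, it also turns up 5/11.

```python
from fractions import Fraction

# Band edges from the plot (assumption: "between" means strictly between)
LOW, HIGH = Fraction(2, 5), Fraction(1, 2)


def candidate_ratios(max_dim=5):
    """(m, f, m/f) for f features packed into an m-dimensional subspace, m <= max_dim,
    with dimensions-per-feature strictly between 2/5 and 1/2, sorted by ratio."""
    found = []
    for m in range(1, max_dim + 1):
        # 2/5 < m/f < 1/2 is equivalent to 2m < f < 5m/2
        for f in range(2 * m + 1, (5 * m) // 2 + 1):
            ratio = Fraction(m, f)
            if LOW < ratio < HIGH:
                found.append((m, f, ratio))
    return sorted(found, key=lambda t: t[2])


def sparsity_for(inverse_density):
    """Solve 1/(1 - S) = k for the sparsity S."""
    return 1 - Fraction(1, inverse_density)


if __name__ == "__main__":
    for m, f, ratio in candidate_ratios():
        print(f"{m}/{f} = {float(ratio):.4f}")
    print("S =", sparsity_for(4))  # prints S = 3/4
```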

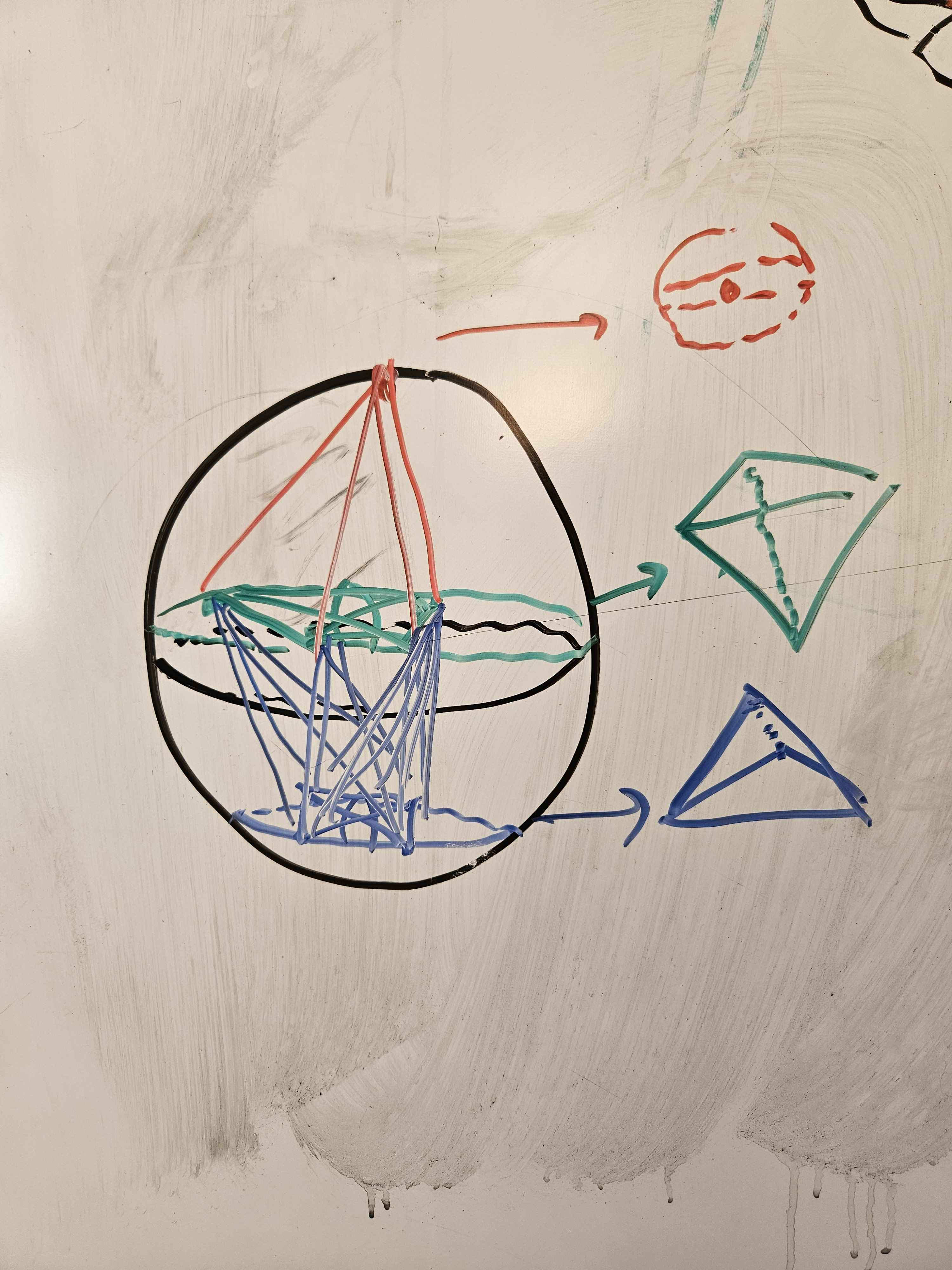

A hand-drawn rendition of the 23-cell in whiteboard marker:

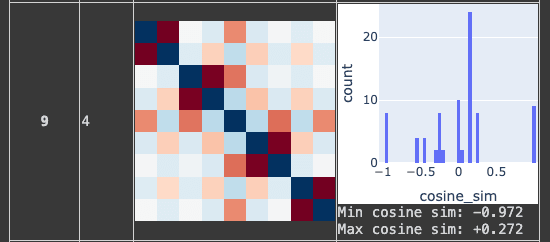

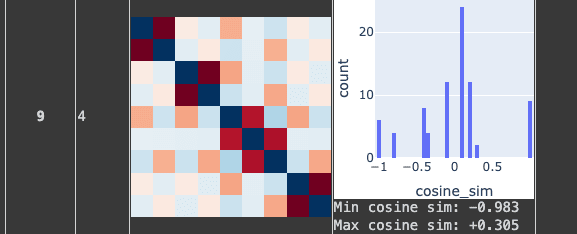

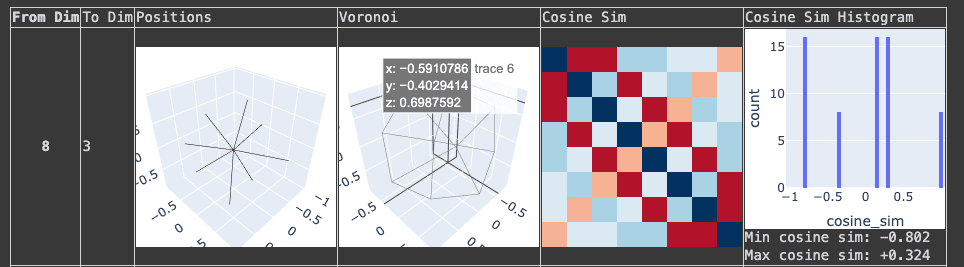

I played with this in a Colab notebook way back when. I can't visualize things directly in 4 dimensions, but at the time I came up with the trick of visualizing the pairwise cosine similarity for each pair of features, which gives at least a local sense of what the angles are like.
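A minimal stdlib-only sketch of that cosine-similarity trick (the notebook itself isn't shown, so all helper names here are hypothetical), applied to one illustrative 9-vector configuration: four mutually orthogonal antipodal pairs plus an orphan at (1, 1, 1, 1)/2. The "worst-case cosine" is the largest cosine over distinct pairs, which is the quantity a best packing tries to minimize.

```python
import math


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_matrix(vectors):
    """Pairwise cosine similarities between all vectors (after normalizing them)."""
    unit = [normalize(v) for v in vectors]
    return [[sum(a * b for a, b in zip(u, w)) for w in unit] for u in unit]


def worst_case_cosine(matrix):
    """Largest off-diagonal cosine, i.e. the closest pair of directions."""
    k = len(matrix)
    return max(matrix[i][j] for i in range(k) for j in range(i + 1, k))


def four_pairs_plus_orphan():
    """Hypothetical example: +/- e_1..e_4 in R^4, plus one 'orphan' direction."""
    vecs = []
    for i in range(4):
        e = [0.0] * 4
        e[i] = 1.0
        vecs.append(e)
        vecs.append([-x for x in e])
    vecs.append([0.5, 0.5, 0.5, 0.5])
    return vecs


if __name__ == "__main__":
    m = cosine_matrix(four_pairs_plus_orphan())
    for row in m:
        print(" ".join(f"{c:+.2f}" for c in row))
    print("worst-case cosine:", round(worst_case_cosine(m), 3))  # prints worst-case cosine: 0.5
```

The orphan sits at cosine 0.5 from four of the basis directions, which is why this layout is far from a good packing.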

Trying to squish 9 features into 4 dimensions looks to me like it either ends up with

- 4 antipodal pairs which are almost orthogonal to one another, and then one "orphan" direction squished into the largest remaining space, OR

- 3 almost orthogonal antipodal pairs plus a "Y" shape with the narrow angle being 72° and the wide angles being 144°.

For reference, this is what a square antiprism looks like in this type of diagram:

You maybe got stuck in some of the many local optima that Nurmela 1995 runs into. Genuinely, the best sphere code for 9 points in 4 dimensions is known to have a minimum angular separation of ~1.408 radians, for a worst-case cosine similarity of about 0.162.

You got a lot further than I did with my own initial attempts at random search, but you didn't quite find it, either.
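For comparison, here is a minimal stdlib-only sketch of a different search strategy than random search: Riesz-energy repulsion (minimize the sum of 1/|x_i - x_j|^p, re-projecting onto the unit sphere after each step). The exponent p = 12, the step schedule, and the seed are arbitrary assumptions, and there is no guarantee it escapes the local optima mentioned above; the useful output is its worst-case cosine, to hold up against the ~0.162 (~80.7°) of the best known 9-point code in 4D.

```python
import math
import random


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(u, w):
    return sum(a * b for a, b in zip(u, w))


def max_cosine(points):
    """Worst-case (largest) cosine similarity over all distinct pairs of unit vectors."""
    n = len(points)
    return max(dot(points[i], points[j]) for i in range(n) for j in range(i + 1, n))


def repulsion_search(n=9, dim=4, power=12, steps=2000, lr=0.05, seed=0):
    """Spread n unit vectors in R^dim by descending the Riesz energy sum 1/|x_i - x_j|**power,
    re-projecting onto the sphere after every step. Returns the best configuration seen."""
    rng = random.Random(seed)
    pts = [normalize([rng.gauss(0.0, 1.0) for _ in range(dim)]) for _ in range(n)]
    best_pts, best_cos = [p[:] for p in pts], max_cosine(pts)
    for step in range(steps):
        forces = [[0.0] * dim for _ in range(n)]
        for i in range(n):
            for j in range(n):
                if i == j:
                    continue
                diff = [a - b for a, b in zip(pts[i], pts[j])]
                d2 = max(dot(diff, diff), 1e-12)
                # Negative gradient of 1/|diff|**power with respect to pts[i]
                scale = power / d2 ** (power / 2 + 1)
                for k in range(dim):
                    forces[i][k] += scale * diff[k]
        biggest = max(math.sqrt(dot(f, f)) for f in forces)
        if biggest == 0.0:
            break
        # Largest per-point move is lr, linearly decayed over the run (an arbitrary schedule)
        step_size = lr * (1.0 - step / steps) / biggest
        pts = [normalize([x + step_size * fx for x, fx in zip(p, f)])
               for p, f in zip(pts, forces)]
        worst = max_cosine(pts)
        if worst < best_cos:
            best_pts, best_cos = [p[:] for p in pts], worst
    return best_pts, best_cos


if __name__ == "__main__":
    points, worst = repulsion_search()
    angle = math.degrees(math.acos(max(-1.0, min(1.0, worst))))
    print(f"worst-case cosine {worst:.4f}, minimum angle {angle:.2f} degrees")
```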

(Crossposted from https://tiled-with-pentagons.blogspot.com/ with thanks to @johnswentworth for the nudge.)

I read the paper, and I don't think that Yoshua Bengio's Non-Agentic Scientist (NAS) AI idea is likely to work out especially well. Here are a few reasons I'm worried about LawZero's agenda, in rough order of where in the paper my objection arises:

- Making the NAS render predictions as though its words will have no effect and/or as if it didn't exist means that its predictive accuracy will be worse. Half the point here is for humans to take its predictions and use them to some end, and this will have effects; the NAS as described can only make predictions about a world it isn't in. More subtly, the NAS will make its predictions and give its probability estimates with respect to a subtly different world - one where the power it uses, the chips comprising it, and the scientists working at its lab were all doing different things; this has impacts on (e.g.) the economy and the weather (though possibly for the economy they even out?).

- If the NAS is dumber than an agentic AI, the agentic AI will probably be able to fool our NAS about the purpose of its actions. Wasn't the hope here for the NAS to give advance warning of what an agentic AI might do?

- A NAS as described would not do much about the kind of spoiler who would release an unaligned agentic AI. A lot of other plans share this problem, admittedly, but I think it's worth noting that explicitly.

- Likewise, arms race dynamics mean that a NAS would not be where any nation-state or large corporate actor would want to stop. In particular, I think it's worth noting that no parallel to SALT is nearly as likely to arise - an AGI would be a massive economic boost to whoever controlled it for however long they controlled it; it wouldn't just be an existential threat to keep in one's back pocket.

- "...using unbiased and carefully calibrated probabilistic inference does not prevent an AI from exhibiting deception and bias." (p22)

- I'm suspicious about the use of purely synthetic data; this runs a risk of overfitting to some unintended pattern in the generated data, or the synthetic-ness of the data meaning that some important messy implicit aspect of the real world gets missed.

- It's not at all clear that there should be a "unique correct probability" in the case of an underspecified query, or one which draws on unknown or missing data, or something like economic predictions where the probability itself affects outcomes. In a similar vein, it's not clear how the NAS would generate or label latent variables, or that those latent variables would correspond to anything human-comprehensible.

- Natural language is likely too messy, ambiguous, and polysemantic to give nice clean well-defined queries in.

- Reaching the global optimum of the training objective (that is, training to completion) is already fraught: for one, how do we know that we got there, and not to some faraway local optimum that's nearly as good? Additionally, elsewhere in the paper (p35?), it's mentioned that we only aim at an approximation of the global optimum.

- It seems plausible to me that a combination of Affordances and Intelligence might lead to the arising of a Goal of some kind, or at least Goal-like behavior.

- Even a truly safe ideal NAS could (p27) be a key component of a decidedly unsafe agentic AI, or a potent force-multiplier for malfeasant humans.

- The definition of "agent" as given seems importantly incomplete. The capacity to pick your own goals feels important; conversely, acting as though you have goals should make you an agent, even if you have no explicit or closed-form goals.

- Checking whether something lacks preferences seems very hard.

- Even the mere computation of probabilistic answers is fraught. Even if we dodge the problems of self-dependent predictions by making the NAS blind to its own existence or effects (itself a fraught move), I doubt that myopia alone will suffice to dodge agenticity: the NAS could (e.g.) pass notes to itself by way of effects it (unknowingly?) has on the world, which then get fed back in as part of the data for the next round of analysis.

- The comment about "longer theories [being] exponentially downgraded" makes me think of Solomonoff induction. It's not clear to me what language (prior/Turing machine) we pick to express the theories in, and it also seems like that choice matters a lot.

- I'm not happy about the "false treasures" thing (p33), nor about the part where L0 currently has no plan for tackling it.

- It's not clear what the "human-interpretable form" for the explanations (p37) would look like; also, this conflicts with the principle that the only affordance that the NAS has should be the ability to give probability estimates in reply to queries.

- Selection on accuracy (p40) seems like the kind of thing that could deep-deception-style cause us to end up with an agentic AI even despite our best efforts.

- The "lack of a feedback loop with the outside world" seems like it would result in increasing error as time passes.
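To make the Solomonoff-induction point concrete: under a prior proportional to 2^(-length), which theory gets favored depends entirely on how lengths are measured. The sketch below uses made-up theory names and bit-lengths for two hypothetical encodings; none of these numbers come from the paper.

```python
def prior(lengths):
    """Normalized 2**(-length) prior over a finite set of theories (lengths in bits)."""
    weights = {name: 2.0 ** -bits for name, bits in lengths.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


# Hypothetical description lengths of the same two theories in two different encodings.
language_a = {"theory_1": 20, "theory_2": 26}
language_b = {"theory_1": 30, "theory_2": 24}

if __name__ == "__main__":
    for label, lengths in [("A", language_a), ("B", language_b)]:
        p = prior(lengths)
        favored = max(p, key=p.get)
        print(label, favored, round(p[favored], 4))
    # prints:
    # A theory_1 0.9846
    # B theory_2 0.9846
```

The invariance theorem only bounds such disagreements by a machine-dependent constant, which is small comfort when that constant is comparable to the lengths being compared.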

As a meta point, this seems like the most recent in a long line of "oracle AI" ideas. None of them has worked out especially well, not least because humans see money and power to grab by making more agentic ML systems instead.

Also, not a criticism, but I'm curious about the part where (p24) we want a guardrail that's "...an estimator of probabilistic bounds over worst-case scenarios" and where (p29) "[i]t's important to choose our family of theories to be expressive enough" - to what extent does this mean an infrabayesian approach is indicated?

I didn't realize it when I posted this, but the anvil problem points more sharply at what I want to argue about when I say that making the NAS blind to its own existence will make it give wrong answers; I don't think that the wrong answers would be limited to just such narrow questions, either.

Wait, some of y'all were still holding your breaths for OpenAI to be net-positive in solving alignment?

After the whole "initially having to be reminded alignment is A Thing"? And going back on its word to go for-profit? And spinning up a weird and opaque corporate structure? And people being worried about Altman being power-seeking? And everything to do with the OAI board debacle? And OAI Very Seriously proposing what (still) looks to me like a souped-up version of Baby Alignment Researcher's Master Plan B (where A involves solving physics and C involves RLHF and cope)? That OpenAI? I just want to be very sure. Because if it took the safety-ish crew of founders resigning to get people to finally pick up on the issue... it shouldn't have. Not here. Not where people pride themselves on their lightness.

I promise I am still working on working out all the consequences of the string diagram notation for latential Bayes nets, since the guts of the category theory are all fixed (and can, as a mentor advises me, be kept out of the public eye as they should be). Things can be kept (basically) purely in terms of string diagrams. In whatever post I write, they certainly will be.

I want to be able to show that isomorphism of natural latents is the categorical property I'm ~97% sure it is (and likewise for minimal and maximal latents). I need to sit myself down and at least fully transcribe the Fundamental Theorem of Latents in preparation for supplying the proof to that.

Mostly I'm spending a lot of time on a data science bootcamp and an AISC track and taking care of family and looking for work/funding and and and.

I guess? I mean, there's three separate degrees of "should really be kept contained"-ness here:

- Category theory -> string diagrams, which pretty much everyone keeps contained, including people who know the actual category theory

- String diagrams -> Bayes nets, which is pretty straightforward if you sit and think for a bit about the semantics you accept/are given for string diagrams generally and maybe also look at a picture of generators and rules - not something anyone needs to wrap up nicely but it's also a pretty thin

- [Causal theory/Bayes net] string diagrams -> actual statements about (natural) latents, which is something I am still working on; it's turning out to be pretty effortful to grind through all the same transcriptions again with an actually-proof-usable string diagram language this time. I have draft writeups of all the "rules for an algebra of Bayes nets" - a couple of which have turned out to have subtleties that need working out - and will ideally be able to write down and walk through proofs entirely in string diagrams while/after finishing specifications of the rules.

So that's the state of things. Frankly I'm worried and generally unhappy about the fact that I have a post draft that needs restructuring, a paper draft that needs completing, and a research direction to finish detailing, all at once. If you want some partial pictures of things anyway all the same, let me know.

I just meant the "guts of the category theory" part. I'm concerned that anyone says that it should be contained (aka used but not shown), and hope it's merely that you'd expect to lose half the readers if you showed it. I didn't mean to add to your pile of work and if there is no available action like snapping a photo that takes less time than writing the reply I'm replying to did, then disregard me.

The phrasing I got from the mentor/research partner I'm working with is pretty close to the former but closer in attitude and effective result to the latter. Really, the major issue is that string diagrams for a flavor of category and commutative diagrams for the same flavor of category are straight-up equivalent, but explicitly showing this is very very messy, and even explicitly describing Markov categories - the flavor of category I picked as likely the right one to use, between good modelling of Markov kernels and their role doing just that for causal theories (themselves the categorification of "Bayes nets up to actually specifying the kernels and states numerically") - is probably too much to put anywhere in a post but an appendix or the like.
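For readers who haven't met Markov categories: the standard concrete example is FinStoch, where objects are finite sets, morphisms are Markov kernels (row-stochastic matrices), composition is matrix multiplication, and every object carries a copy map. Below is a minimal plain-Python sketch of that standard example with a hypothetical two-node Bayes net and made-up numbers; it illustrates the textbook category, not the formalism being developed here.

```python
# Finite Markov kernels X -> Y as row-stochastic matrices: kernel[x][y] = P(y | x).
# A "state" (distribution) on Y is a kernel from the one-point set, i.e. a single row.


def compose(f, g):
    """Sequential composition: first f : X -> Y, then g : Y -> Z (sum over the middle variable)."""
    n_y, n_z = len(g), len(g[0])
    return [[sum(row[y] * g[y][z] for y in range(n_y)) for z in range(n_z)] for row in f]


def copy_map(n):
    """Copy X -> X (x) X: x goes deterministically to (x, x); pair (a, b) has index a*n + b."""
    return [[1.0 if col == x * n + x else 0.0 for col in range(n * n)] for x in range(n)]


def is_stochastic(kernel, tol=1e-12):
    return all(abs(sum(row) - 1.0) < tol and min(row) >= 0.0 for row in kernel)


# Hypothetical two-node Bayes net Rain -> WetGrass, with made-up numbers.
rain = [[0.3, 0.7]]                # P(rain), P(no rain)
wet_given_rain = [[0.9, 0.1],      # P(wet | rain), P(dry | rain)
                  [0.2, 0.8]]      # P(wet | no rain), P(dry | no rain)

if __name__ == "__main__":
    print(compose(rain, wet_given_rain))  # marginal on WetGrass, approximately [[0.41, 0.59]]
    print(compose(rain, copy_map(2)))     # [[0.3, 0.0, 0.0, 0.7]]: two perfectly correlated copies
```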

> ...if there is no available action like snapping a photo that takes less time than writing the reply I'm replying to did...

There is not, but that's on me. I'm juggling too much and having trouble packaging my research in a digestible form. Precarious/lacking funding and consequent binding demands on my time really don't help here either. I'll add you to the long long list of people who want to see a paper/post when I finally complete one.

I guess a major blocker for me is this: I keep coming back to the idea that I should write the post as a partially-ordered series of posts instead. That certainly stands out to me as the most natural form for the information, because there are three near-totally separate branches of context (Bayes nets, the natural latent/abstraction agenda, and (monoidal category theory/)string diagrams), of which you need to somewhat understand some pair in order to understand major necessary background (causal theories, motivation for Bayes net algebra rules, and motivation for string diagram use), and all three to appreciate the research direction properly. But I'm kinda worried that if I start this partially-ordered lattice of posts, I'll get stuck somewhere. Or run up against the limits of what I've worked out so far. Or run out of steam with all the writing and just never finish. Or just plain "no one will want to read through it all".

Here's a game-theory game I don't think I've ever seen explicitly described before: Vicious Stag Hunt, a two-player non-zero-sum game elaborating on both Stag Hunt and Prisoner's Dilemma. (Or maybe Chicken? It depends on the obvious dials to turn. This is frankly probably a whole family of possible games.)

The two players can pick from among 3 moves: Stag, Hare, and Attack.

Hunting stag is great, if you can coordinate on it. Playing Stag costs you 5 coins, but if the other player also played Stag, you make your 5 coins back plus another 10.

Hunting hare is fine, as a fallback. Playing Hare costs you 1 coin, and assuming no interference, makes you that 1 coin back plus another 1.

But from a certain point of view, the richest targets are your fellow hunters. Preparing to Attack costs you 2 coins. If the other player played Hare, they escape you, barely recouping their investment (0 payoff), and you get nothing for your boldness. If they played Stag, though, you can backstab them right after securing their aid, taking their 10 coins of surplus destructively, costing them 10 coins on net. Finally, if you both played Attack, you both starve for a while waiting for the attack, you heartless fools. Your payoffs are symmetric, though this is one of the most important dials to turn: if you stand to lose less in such a standoff than you would by getting suckered, then Attack dominates Stag. My scratchpad notes have payoffs at (-5, -5), for instance.

To resummarize the payoffs:

- (H, H) = (1, 1)

- (H, S) = (1, -5)

- (S, S) = (10, 10)

- (H, A) = (0, -2)

- (S, A) = (-10(*), 20)

- (A, A) = (-n, -n); n <(=) 10(*) -> A >(=) S, i.e. A strictly dominates S when n < 10 and weakly dominates it when n = 10
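A minimal sketch that encodes the payoff table above from the row player's side (the game is symmetric) and checks whether Attack dominates Stag for a few values of the mutual-Attack dial n. The helper names are hypothetical; the numbers are exactly the ones listed.

```python
MOVES = ("H", "S", "A")


def payoffs(n):
    """Row player's payoff keyed by (my move, their move); n is the (A, A) loss dial."""
    return {
        ("H", "H"): 1, ("H", "S"): 1, ("H", "A"): 0,
        ("S", "H"): -5, ("S", "S"): 10, ("S", "A"): -10,
        ("A", "H"): -2, ("A", "S"): 20, ("A", "A"): -n,
    }


def dominance(table, a, b):
    """'strict' if move a beats move b against every opponent move,
    'weak' if it is never worse and sometimes better, otherwise None."""
    diffs = [table[(a, t)] - table[(b, t)] for t in MOVES]
    if all(d > 0 for d in diffs):
        return "strict"
    if all(d >= 0 for d in diffs) and any(d > 0 for d in diffs):
        return "weak"
    return None


if __name__ == "__main__":
    for n in (5, 10, 12):
        print(n, dominance(payoffs(n), "A", "S"))  # 5 strict, 10 weak, 12 None
```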

So what happens? Disaster! Stag is dominated, so no one plays it, and everyone converges to Hare forever.

And what of the case where n > 10? While initially I'd expected a mixed equilibrium, I should have expected the actual outcome: the sole Nash equilibrium is still the pure all-Hare strategy - after all, we've made Attacking strictly worse than in the previous case! (As given by https://cgi.csc.liv.ac.uk/~rahul/bimatrix_solver/ ; I tested n = 12.)
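The pure-strategy half of that claim is easy to check by hand or in a few lines. This sketch (hypothetical helper names, same payoff table) enumerates pure Nash equilibria and runs iterated elimination of strictly dominated moves for n = 5 and n = 12. It does not search for mixed equilibria; that part of the claim rests on the linked solver.

```python
MOVES = ("H", "S", "A")


def payoffs(n):
    """Row player's payoff keyed by (my move, their move); the game is symmetric."""
    return {
        ("H", "H"): 1, ("H", "S"): 1, ("H", "A"): 0,
        ("S", "H"): -5, ("S", "S"): 10, ("S", "A"): -10,
        ("A", "H"): -2, ("A", "S"): 20, ("A", "A"): -n,
    }


def pure_nash(table):
    """All pure profiles (row, col) where neither player gains by deviating alone."""
    equilibria = []
    for r in MOVES:
        for c in MOVES:
            row_ok = all(table[(r, c)] >= table[(d, c)] for d in MOVES)
            # By symmetry, the column player's payoff at (r, c) is table[(c, r)]
            col_ok = all(table[(c, r)] >= table[(d, r)] for d in MOVES)
            if row_ok and col_ok:
                equilibria.append((r, c))
    return equilibria


def iesds(table):
    """Iterated elimination of strictly dominated moves (same survivors for both players)."""
    alive = list(MOVES)
    changed = True
    while changed:
        changed = False
        for b in list(alive):
            if any(a != b and all(table[(a, t)] > table[(b, t)] for t in alive) for a in alive):
                alive.remove(b)
                changed = True
                break
    return alive


if __name__ == "__main__":
    for n in (5, 12):
        print(n, pure_nash(payoffs(n)), iesds(payoffs(n)))
```

For n = 5 the elimination removes Stag and then Attack, leaving only Hare. For n = 12 nothing is strictly dominated, yet (H, H) is still the only pure equilibrium: from (S, S) a player gains by switching to Attack, and from (A, A) a player gains by switching to Hare.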

(Random thought I had and figured this was the right place to set it down:) Given how centrally important token-based word embeddings are to the current LLM paradigm, how plausible is it that (put loosely) "doing it all in Chinese" (instead of English) is actually just plain a more powerful/less error-prone/generally better background assumption?

Associated helpful intuition pump: LLM word tokenization is like a logographic writing system, where each word corresponds to a character of the logography. There need be no particular correspondence between the form of the token and the pronunciation/"alphabetical spelling"/other things about the word, though it might have some connection to the meaning of the word - and it often makes just as little sense to be worried about the number of grass radicals in "草莓" as it does to worry about the number of r's in a "strawberry" token.

(And yes, I am aware that in Mandarin Chinese, there's lots of multi-character words and expressions!)
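A toy illustration of the logography intuition pump, assuming a made-up vocabulary and greedy longest-match tokenization (real BPE-style tokenizers learn their vocabularies and merge rules from data and behave differently). The point is only that once "strawberry" becomes a single ID, the number of r's is nowhere in that ID.

```python
# Hypothetical toy vocabulary: a few whole-word tokens plus single letters as a fallback.
VOCAB = {"strawberry": 1000, "straw": 1001, "berry": 1002}
VOCAB.update({ch: i for i, ch in enumerate("abcdefghijklmnopqrstuvwxyz")})


def tokenize(text):
    """Greedy longest-match tokenization of a lowercase string into integer IDs."""
    ids, i = [], 0
    longest = max(len(piece) for piece in VOCAB)
    while i < len(text):
        for size in range(min(longest, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                i += size
                break
        else:
            raise ValueError(f"no token for {text[i]!r}")
    return ids


if __name__ == "__main__":
    print(tokenize("strawberry"))  # [1000]: one opaque "character", no letters visible
    print(tokenize("straws"))      # [1001, 18]
```

In this toy, the spelling lives only in the vocabulary table, much as the grass radical lives in the glyph 草 rather than in the word's meaning.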

I'm doing Budget Inkhaven: https://tiled-with-pentagons.blogspot.com/search/label/budget inkhaven

Similar to my earlier writing regimen, but shorter, faster, and slightly more daring. I'll crosspost anything I like especially well here.

Because RLHF works, we shouldn't be surprised when AI models output wrong answers which are specifically hard for humans to distinguish from a right answer.

This observably (seems like it) generalizes to all humans, instead of (say) it being totally trivial somehow to train an AI on feedback only from some strict and distinguished subset of humanity such that any wrong answers it produced could be easily spotted by the excluded humans.

Such wrong answers which look right (on first glance) also observably exist, and we should thus expect that if there's anything like a projection-onto-subspace going on here, our "viewpoint" for the projection, given any adjudicating human mind, is likely all clustered in some low-dimensional subspace of all possible viewpoints and maybe even just around a single point.

This is why I'd agree that RLHF was so specifically a bad tradeoff in capabilities improvement vs safety/desirability outcomes but still remain agnostic as to the absolute size of that tradeoff.
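A deliberately oversimplified sketch of the projection picture, assuming answers are points in R^5 and each evaluator "sees" only a fixed set of coordinates (a coordinate projection standing in for a general low-dimensional one). The vectors and viewpoints are made up: if every evaluator's viewpoint clusters in the same subspace, a wrong answer that differs only outside it passes all of them.

```python
def project(vector, visible_dims):
    """What a (hypothetical) evaluator sees: only the coordinates in visible_dims."""
    return tuple(vector[d] for d in visible_dims)


def looks_right(candidate, reference, visible_dims, tol=1e-9):
    """True if the candidate is indistinguishable from the reference from this viewpoint."""
    seen_c = project(candidate, visible_dims)
    seen_r = project(reference, visible_dims)
    return all(abs(a - b) <= tol for a, b in zip(seen_c, seen_r))


right = (1.0, 2.0, 3.0, 4.0, 5.0)
wrong = (1.0, 2.0, -7.0, 0.0, 9.0)    # differs only outside the shared view
viewpoints = [(0, 1), (1, 0), (0,)]   # hypothetical evaluators, all inside one 2-D subspace

if __name__ == "__main__":
    print(all(looks_right(wrong, right, v) for v in viewpoints))  # True
    print(looks_right(wrong, right, (2,)))  # False: a differently placed viewpoint catches it
```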

Haha nice.

...Wait, I should really have some substantive content here. Have a look at my personal blog! Assorted AI researchers and LessWrong notables have read some of it and given it such weighty praise as "kinda interesting" and "cool". https://tiled-with-pentagons.blogspot.com/