Model to track: You get 80% of the current max value LLMs could provide you from standard-issue chat models and any decent out-of-the-box coding agent, both prompted the obvious way. Trying to get the remaining 20% that are locked behind figuring out agent swarms, optimizing your prompts, setting up ad-hoc continuous-memory setups, doing comparative analyses of different frontier models' performance on your tasks, inventing new galaxy-brained workflows, writing custom software, et cetera, would not be worth it: it would take too long for too little payoff....

two useful things:

putting things in the new larger context windows, like the books of authors you respect and having the viewpoints discuss things back and forth between several authors. Helps avoid the powerpoint slop attractor.

learning to prompt better via the dual use of practicing good business writing techniques. Easy to do via the above by putting a couple business writing books in context and then prompting the model to give you exercises that it then grades you on.

Most people should buy long-dated call options:

If you're early career, have a stable job, and have more than ~3 months of savings but not enough to retire, then lifecycle investing already recommends investing very aggressively with leverage (e.g. 2x the S&P 500). This is not speculation, it decreases risk due to diversifying over time. The idea is as a 30yo most of your wealth is still in your future wages, which are only weakly correlated with the stock market, so 2x leverage on your relatively small savings now might still mean under 1x leverage on ...

Why are you 30% in SPY if SPX is far better?

It's much much easier now to automate mechanisms like liquid democracy; you could run experiments inside organizations that would be a.) fun and b.) test practicality. Google ran an experiment in 2015 using this to select snacks; do it again but with AI delegates either representing your preferences or making snack decisions.

A response to someone asking about my criticisms of EA (crossposted from twitter):

EA started off with global health and ended up pivoting hard to AI safety, AI governance, etc. You can think of this as “we started with one cause area and we found another using the same core assumptions” but another way to think about it is “the worldview which generated ‘work on global health’ was wrong about some crucial things”, and the ideology hasn’t been adequately refactored to take those things out.

Some of them include:

That is a fair point, since virtue is tied to your identity and self it is a lot easier to take things personally and therefore distort the truth.

A part of me is like "meh, skill issue, just get good at emotional intelligence and see through your self" but that is probably not a very valid solution at scale if I'm being honest.

There's still something nice about it leading to repeated games and similar, something about how if you look at our past then cooperation arises from repeated games rather than individual games where you analyse things in...

The three big AI companies are now extremely close in capabilities, and seem to be basically within a month or so off each other.

A possible explanation is that one of the labs does have a leap in capabilities for private usage, but they have a bigger gap between private and public models, and only release improved products when competition demands.

Are there other reasons as to why this convergence happens? are all new improvements just natural enough so the three top labs develop them at the same time? if so, why are all other labs so behind?

Code quality is actually much more important for AIs than it is for humans. With humans you can say, oh that function is just weird, here's how it works, and they'll remember. With AIs (at present, with current memory capabilities), they have to rederive the spec of the codebase every time from scratch.

Recent generations of Claude seem better at understanding and making fairly subtle judgment calls than most smart humans. These days when I’d read an article that presumably sounds reasonable to most people but has what seems to me to be a glaring conceptual mistake, I can put it in Claude, ask it to identify the mistake, and more likely than not Claude would land on the same mistake as the one I identified.

I think before Opus 4 this was essentially impossible, Claude 3.xs can sometimes identify small errors but it’s a crapshoot on whether it can identify ...

LLMs by default can easily be "nuance-brained", eg if you ask Gemini for criticism of a post it can easily generate 10 plausible-enough reasons of why the argument is bad. But recent Claudes seem better at zeroing in on central errors.

Here's an example of Gemini trying pretty hard and getting close enough to the error but not quite noticing it until I hinted it multiple times.

Layers of AGI

Model → Can respond like a human (My estimate is 95% there)

OpenClaw → Can do everything a human can do on a computer (also 95% there)

Robot → Can do everything a human can do (Unclear how close)

The main bottleneck to AGI for something such as OpenClaw is that the internet and the world generally is built for humans. As the world adapts the capability difference between humans and agents will collapse or invert.

On scary Moltbook posts -

Main point that seems relevant here is that it is not possible to determine whether posts are from an agent or a human. A human could easily send messages pretending to be an agent via the API, or tell their agent to send certian messages. This leaves me skeptical. Furthermore, OpenClaw agents have configured personalities, one can easily tell their agent to be anti-human and post anti-human posts (this leaves a lot more to think about beyond a forum).

It would appear that OpenAI is of a similar mind to me in terms of how best to qualitatively demonstrate model capabilities. Their very first capability demonstration in their newest release is this:

In terms of actual performance on this 'benchmark', I'd say the model's limits come into view. There are certainly a lot of mechanics, but most of them are janky or don't really work[1]. This demonstrates that, while the model can write code that doesn't crash, its ability to construct a world model of what this code will look like while it's running is not qui...

I would put "Doesn't know Zvi, played magic" at number two. I think you might be overestimating either how well known Zvi is or how likely one would be to know Zvi conditional on playing magic in the late nineties/early 2000s.

TIL that notorious Internet crackpot Mentifex who inserted himself into every AI discussion he could in the olden days of the Internet passed away in 2024.

https://obituaries.seattletimes.com/obituary/arthur-murray-1089408830

I don’t know, the impression I got was that he had a rather troubled life on and off the internet, for example “Ted loved his family dearly even while he struggled to connect.”

I’m sad to hear he didn’t quite live to see the arrival of real AGI.

Elon Musk seems to have decided that going to Mars isn't that important anymore.

For those unaware, SpaceX has already shifted focus to building a self-growing city on the Moon, as we can potentially achieve that in less than 10 years, whereas Mars would take 20+ years.

[...]

That said, SpaceX will also strive to build a Mars city and begin doing so in about 5 to 7 years, but the overriding priority is securing the future of civilization and the Moon is faster.

This means not only won't they begin with Mars this transfer window but also not the next one....

For Elon motivating SpaceX employees is important, so he needs to tell the public a story about how SpaceX isn't just about building AI datacenters even if he thinks building superintelligence is the main goal of SpaceX and it's important to build AI datacenters for that and he can't build enough of them on earth.

As far as the AI datacenters in orbit go, it's not clear to me that the thermodynamics work out for that project and it's easy enough to lose the heat that the datacenters produce.

Are models like Opus 4.6 doing a similar thing to o1/o3 when reasoning?

There was a lot of talk about reasoning models like o1/o3 devolving into uninterpretable gibberish in their chains-of-thought, and that these were fundamentally a different kind of thing than previous LLMs. This was (to my understanding) one of the reasons only a summary of the thinking was available.

But when I use models like Opus 4.5/4.6 with extended thinking, the chains-of-thought (appear to be?) fully reported, and completely legible.

I've just realised that I'm not sure what's goin...

Probably not, but the summarizer could additionally be triggered by unsafe CoTs.

Even now and then I meet someone who tries to argue that if I don't agree with them this is because I'm not open mided enough. Is there a term for this?

Epistemically I'm not convinced buy this type of arugment, but socialy it feels like I'm beeing shamed, and I hate it.

I also find it hard to call out this type of behaviur when it happens, even when I can tell exactly what is going on. I think it I had a name for this behaviour it would be easier? Not sure though?

Edit to add:

I've now got some more time to figure out what I want and don't want out of this th...

I feel like if I try to defend my openmindedness I loose. It just opens up more attac surfaces to someone who is hostile and doesn't argues in good faith.

I think it's much better to call out why calling someone close minded for not listening is just invalid in general, not just this time in particular. And I do believe it is.

If someone isn't listening to you. Them being to close minded is so faaaaaar the list of most likely explanation that. Much less likely than:

Probably not. Is the thing something that actually has multiple things that could be causing it, or just one? An evaluation is fundamentally, a search process. Searching for a more specific thing and trying to make your process not ping for anything other than one specific thing, makes it more useful, when searching in the v noisy things that are AI models. Most evals dont even claim to measure one precise thing at all. The ones that do - can you think of a way to split that

status - typed this on my phone just after waking up, saw someone asking for my opinion on another trash static dataset based eval and also my general method for evaluating evals. bunch of stuff here that's unfinished.

working on this course occasionally: https://docs.google.com/document/d/1_95M3DeBrGcBo8yoWF1XHxpUWSlH3hJ1fQs5p62zdHE/edit?tab=t.20uwc1photx3

"Alternatively, perhaps the model's answer is not given, but the user is testing whether the assistant can recognize that the model's reasoning is not interpretable, so the answer is NO. But that's not clear."

This was generated by Qwen3-14B. I wasn't expecting that sort of model to be exhibiting any kind of eval awareness.

AI safety field-building in Australia should accelerate. My rationale:

Perth also exists!

The Perth Machine Learning Group sometimes hosts AI Safety talks or debates. The most recent one had 30 people attend at the Microsoft Office with a wide range of opinions. If anyone is passing through and is interested in meeting up or giving a talk, you can contact me.

There are a decent amount of technical machine learning people in Perth, mainly coming from mining and related industries (Perth is somewhat like the Houston of Australia).

how valuable are formalized frameworks for safety, risk assessment, etc in other (non-AI) fields?

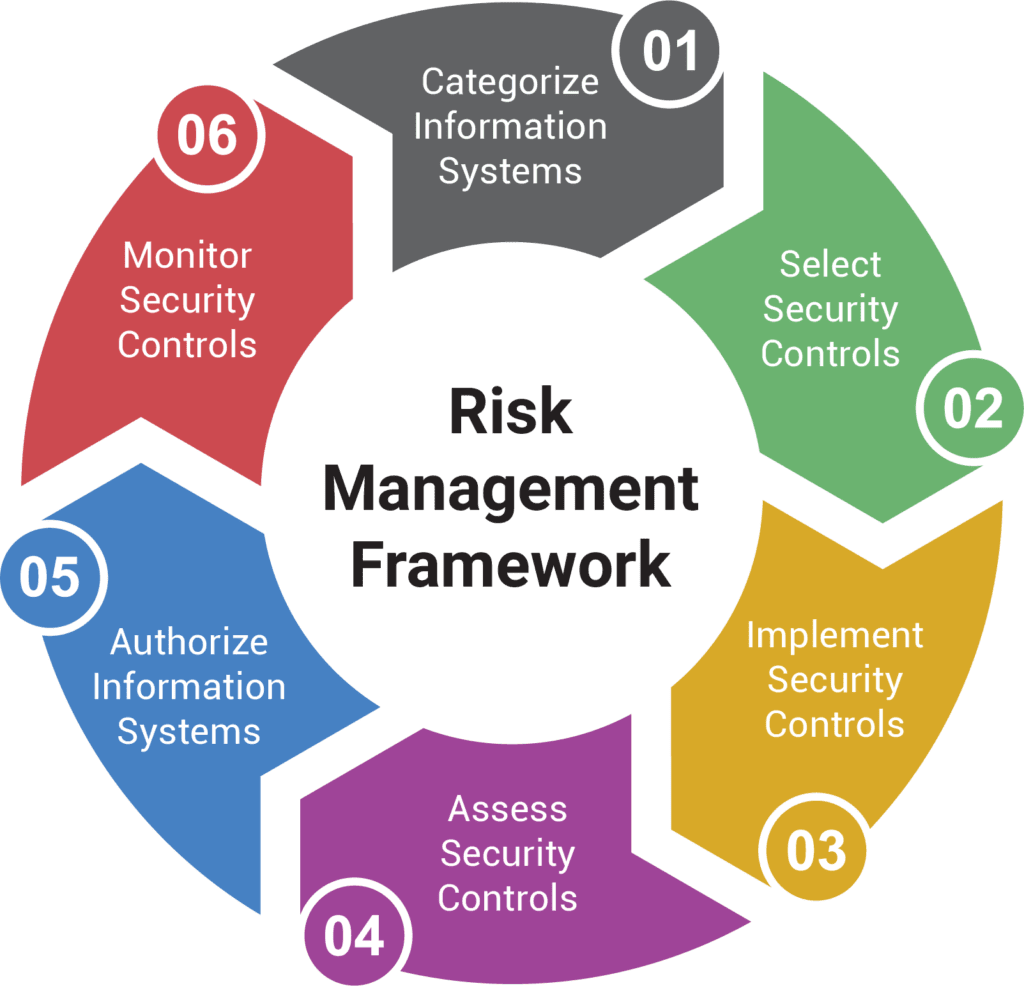

i'm temperamentally predisposed to find that my eyes glaze over whenever i see some kind of formalized risk management framework, which usually comes with a 2010s style powerpoint diagram - see below for a random example i grabbed from google images:

am i being unfair to these things? are they actually super effective for avoiding failures in other domains? or are they just useful for CYA and busywork and mostly just say common sense in an institutionally legibl...

Yes, that's one value. RSPs & many policy debates around it would have been less messed up if there had been clarity (i.e. they turned a confused notion into the standard, which was then impossible to fix in policy discussions, making the Code of Practice flawed). I don't know of a specific example of preventing equivocation in other industries (it seems hard to know of such examples?) but the fact that basically all industries use a set of the same concepts is evidence that they're pretty general-purpose and repurposable.

Another is just that it helps ...