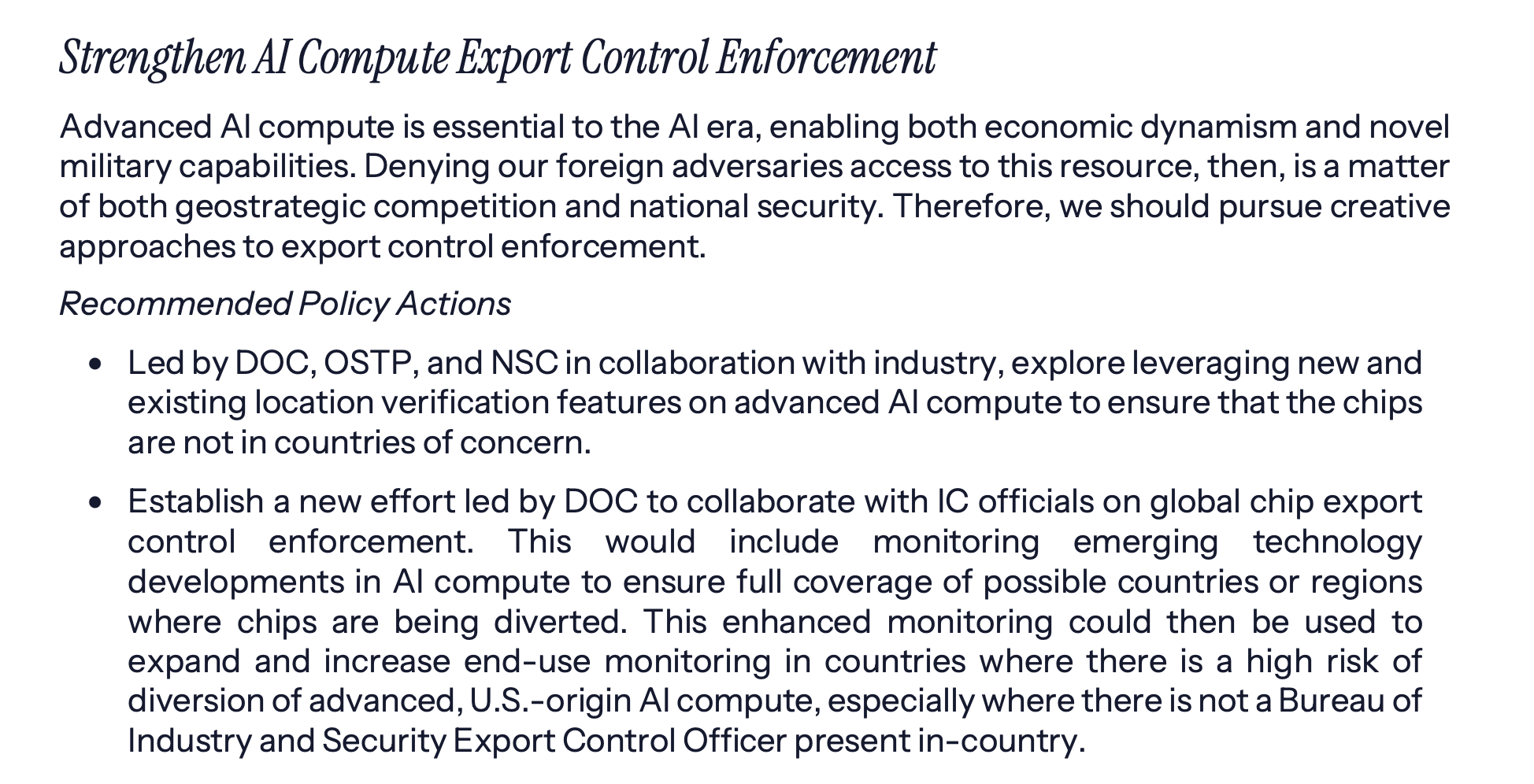

The AI Action Plan has a section on this; it recommends that Commerce and the IC coordinate on enforcement, including monitoring.

I don't think Anthropic has put as much effort into RL-ing their models to perform well on tasks like VendingBench or Computer Use (/graphical browser use) as into "being a good coding agent". Anthropic makes a lot of money from coding, whereas the only computer use release I know of from them is a demo which (ETA: I'd guess) does not generate much revenue (?).

Similarly for scaffolding, I expect the number of person-hours put into the scaffolding for Vending Bench or the AI Agent village to be at least an order-of-magnitude lower than for Claude Code, which is a publicly released product that Anthropic makes money from.

More concretely:

- I think that the kind of decomposition into subtasks / delegation to subagents that Claude Code does would be helpful for VendingBench and the Agent Village, because in my experience they help keep track of the original task at hand and avoid infinite rabbit holes.

- Cursor builds great tools/interfaces for models to interact with code files, and my impression is that Ant post-training intentionally targeted these tools, which made Anthropic models much better than OpenAI models on Cursor pre-GPT-5. I don't expect there were comparable customization efforts in other domains, including graphical browser use or VendingBench-style tasks. I think such efforts are under way, starting with high-quality integrations into economically productive software like Figma.

I'm more confident for VendingBench than for the AI village. For example, I just checked the Project Vend blog post and it states:

> Many of the mistakes Claudius made are very likely the result of the model needing additional scaffolding—that is, more careful prompts, easier-to-use business tools. In other domains, we have found that improved elicitation and tool use have led to rapid improvement in model performance.
>
> [...]
>
> Although this might seem counterintuitive based on the bottom-line results, we think this experiment suggests that AI middle-managers are plausibly on the horizon. That’s because, although Claudius didn’t perform particularly well, we think that many of its failures could likely be fixed or ameliorated: improved “scaffolding” (additional tools and training like we mentioned above) is a straightforward path by which Claudius-like agents could be more successful.

Regarding the AI village: I do think that computer (/graphical browser) use is harder than coding in a bunch of ways, so I'm not claiming that if Ant spent as many resources on RL + elicitation for computer use as they did for coding, that would reduce errors to the same extent (and of course, making these comparisons across task types is conceptually messy). For example, computer use offers a pretty unnatural and token-inefficient interface, which makes both scaffolding and RL harder. I still think an OOM more resources dedicated to elicitation would close a large part of the gap, especially for 'agency errors'.

It seems totally possible that performance on messier real world tasks with hard-to-check objectives is under elicited

As an example, I think that the kinds of agency errors that show up in VendingBench or AI Village are largely due to lack of elicitation. I see these errors way less when coding with Claude Code (which has both better scaffolding and more RL). I'd find it difficult to inject numbers to get a concrete bound though.

I already expect some (probably substantial) effect from AIs helping to build RL environments

I think scraping and filtering MCP servers, then RL training to navigate them, is largely (even if not fully) automatable and already being done (cf this for SFT), but doesn't unlock massive value.

In their BOTEC, it seems you roughly agree with a group size of 64 and 5 reuses per task (since 5 * 64 is between 100 and 1k).

You wrote $0.1 to $1 per rollout, whereas they have in mind 500,000 * $15 / 1M = $7.5. 500,000 doesn't seem especially high for hard agentic software engineering tasks which often reach into the millions.

Does the disagreement come from:

- Thinking the $15 estimate from opportunity cost is too high (so compute cost lower than Mechanize claims)

- Expecting most of the RL training to somehow not be end-to-end? (so compute cost lower than Mechanize claims)

- Expecting spending per RL environment to be smaller than compute spending, even if within an OOM.
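To make the gap concrete, here is a rough sketch of the two back-of-the-envelope estimates side by side. All numbers are the ones quoted above; the variable names are mine, and multiplying the $7.5 per-rollout figure by 64 * 5 rollouts is my own extrapolation rather than something either side stated.

```python
# Back-of-the-envelope comparison of per-task RL compute cost.
# Figures are taken from the discussion above; names are illustrative only.

# Mechanize-style estimate: tokens per rollout times price per million tokens.
tokens_per_rollout = 500_000
usd_per_million_tokens = 15.0
mechanize_cost_per_rollout = tokens_per_rollout * usd_per_million_tokens / 1_000_000

# Rollouts per task: group size times number of reuses per task.
group_size = 64
reuses_per_task = 5
rollouts_per_task = group_size * reuses_per_task

# Assumption: every rollout costs the same, so cost per task is a simple product.
mechanize_cost_per_task = mechanize_cost_per_rollout * rollouts_per_task

# The other estimate: $0.1 to $1 per rollout, 100 to 1k rollouts per task.
low_cost_per_task = 0.1 * 100
high_cost_per_task = 1.0 * 1_000

print(f"Mechanize-style: ${mechanize_cost_per_rollout:.2f}/rollout, "
      f"${mechanize_cost_per_task:,.0f}/task over {rollouts_per_task} rollouts")
print(f"Other estimate:  ${low_cost_per_task:,.0f} to ${high_cost_per_task:,.0f}/task")
```

With these inputs the rollout counts agree (320 is inside the 100 to 1k range), so essentially all of the difference comes from the per-rollout cost: $7.5 versus $0.1 to $1.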

ongoing improvements to RL environments are already priced into the existing trend

I agree with this especially for e.g. METR tasks, or proxies for how generally smart a model is.

A case for acceleration in enterprise revenue (rather than general smarts) could look like:

- So far RL has still been pretty targeted towards coding, research/browsing, math, or being-ok-at-generic-tool-use (taking random MCP servers and making them into environments, like Kimi K2 did but with RL).

- It takes SWE time to build custom interfaces for models to work with economically productive software like Excel, Salesforce, or Photoshop. We're not there yet, at least with publicly released models. Once we are, this suddenly unlocks a massive amount of economic value.

Ultimately I don't really buy this either, since we already have e.g. some Excel/Sheets integrations that are not great but better than what there was a couple of months ago. And the increase in breadth of RL environments is probably already factored into the trend somewhat.

ETA: this also matters less if you're primarily tracking AI R&D capabilities (or it might but indirectly, through driving more investment etc.).

Another way to think about this is that it could be reasonable to spend within the same order of magnitude on each RL environment as you spend in compute cost to train on that environment. I think the compute cost for doing RL on a hard agentic software engineering task might be around $10 to $1000 ($0.1 to $1 for each long rollout and you might do 100 to 1k rollouts?), so this justifies a lot of spending per environment. And, environments can be reused across multiple training runs (though they could eventually grow obsolete).

Agreed, cf https://www.mechanize.work/blog/cheap-rl-tasks-will-waste-compute/

[I work at Epoch AI]

Thanks for your comment, I'm happy you found the logs helpful! I wouldn't call the evaluation broken - the prompt clearly states the desired format, which the model fails to follow. We mention this in our Methodology section and FAQ ("Why do some models underperform the random baseline?"), but I think we're also going to add a clarifying note about it in the tooltip.

While I do think "how well do models respect the formatting instructions in the prompt" is also valuable to know, I agree that I'd want to disentangle that from "how good are models at reasoning about the question". Adding a second, more flexible scorer (likely based on an LLM-judge, like we have for OTIS Mock AIME) is in our queue; we're just pretty strapped on engineering capacity at the moment :)

ETA: since it's particularly extreme in this case, I plan to hide this evaluation until we have the new scorer added.

Not sure how to interpret this, but a Microsoft blog post claims that "The GPT-5.2 series is built on new architecture".