Or on the types of prioritization, their strengths, pitfalls, and how EA should balance them

The cause prioritization landscape in EA is changing. Prominent groups have shut down, others have been founded, and everyone is trying to figure out how to prepare for AI. This is the first in a series of posts examining the state of cause prioritization and proposing strategies for moving forward.

Executive Summary

Performing prioritization work has been one of the main tasks, and arguably achievements, of EA.

We highlight three types of prioritization: Cause Prioritization, Within-Cause (Intervention) Prioritization, and Cross-Cause (Intervention) Prioritization.

We ask how much of EA prioritization work falls in each of these categories:

Our estimates suggest that, for the organizations we investigated, the current split is 89% within-cause work, 2% cross-cause, and 9% cause prioritization.

We then explore strengths and potential pitfalls of each level:

Cause prioritization offers a big-picture view for identifying pressing problems but can fail to capture the practical nuances that often determine real-world success.

Within-cause prioritization focuses on a narrower set of interventions with deeper, more specialised analysis but risks missing higher-impact alternatives elsewhere.

Cross-cause prioritization broadens the scope to find synergies and the potential for greater impact, yet demands complex assumptions and compromises on measurement.

See the Summary Table below to view the considerations.

We encourage reflection and future work on what the best ways of prioritizing are and how EA should allocate resources between the three types.

With this in mind, we outline eight cruxes that sketch what factors could favor some types over others.

We also suggest some potential next steps aimed at refining our approach to prioritization by exploring variance, value of information, tractability, and the reliability of the different methods.

Since cross-cause prioritization work (and to a lesser extent cause prioritization) is presently rare, and has considerable benefits, the EA community may well be radically misallocating its prioritization efforts.

A diagram in which the left pie chart represents an altruistic space of interest, three regions represent causes that partition the space, and dots represent interventions within each cause.[1] The diagram illustrates cause prioritization, cross-cause prioritization, and within-cause prioritization. In reality, and unlike in this particular illustration, causes needn't be mutually exclusive or exhaustive.

Introduction: Why prioritize? Have we got it right?

Arguably, a key, if not the key, contribution of Effective Altruism is that it helps us prioritize opportunities for doing good. After all, answering EA’s central question, ‘How do we do the most good?’, requires just that: comparing the value of different altruistic efforts.

Prima facie, we might wonder why we need to consider different levels of prioritization at all. Why not simply estimate the cost-effectiveness of all possible altruistic projects, regardless of their cause area, and then fund the most impactful? Yet, in practice, EAs don’t tend to do this. Instead, much of EA prioritization work has focused on cause prioritization, and, as we argue below, most current EA prioritization work focuses on within-cause prioritization.

Indeed, as of 2025, most key organizations and actors in Effective Altruism operate within the framework of three broad categories of problems, or ‘cause areas’ as EAs call them: Global Health and Development, Animal Welfare, and Global Catastrophic Risks.[2][3] (See, for example, Open Philanthropy’s focus areas, the four EA Funds (the fourth being ‘EA Infrastructure’), and this 80,000 Hours article on allocation across issues.[4])

This particular way of prioritizing altruistic projects, splitting interventions into cause areas and ranking them, is only one of several approaches.[5] This piece seeks to explicitly put on the table what could go wrong with this and other types of prioritization work that we observe in the Effective Altruism movement.

We think the different types have a variety of shortcomings, and they may well complement each other under the right circumstances. As such, it would be a mistake to overlook these shortcomings and back a single horse, even unintentionally. By primarily focusing on within-cause prioritization, and sidelining the others, the EA community may well be radically misallocating its prioritization efforts.

The types of prioritization

Prioritization can take many shapes, but three main types arise when we ask what a piece of prioritization work ranks (causes or interventions) and within which domain the ranking takes place (a single cause or several).

Cause Prioritization (CP): work that ranks causes.[6]

Within-Cause Prioritization (WCP): work that ranks interventions within a cause.

Cross-Cause Prioritization (CCP): work that ranks interventions across causes.

Despite investing a lot of resources in prioritization, there's been little explicit discussion of how we should balance these kinds of prioritization. Ideally, we’d know how many resources EA should allocate to each type. With that in mind, we would evaluate the current state and how to bring that closer to our ideal outcome.

Providing a full answer to the question of balance is well beyond the ambition of this post, but we will still try to better grasp the strengths and weaknesses of each prioritization type. Because there is room for reasonable disagreement about the benefits of each kind of prioritization, EA should at least take all three seriously. In fact, EA should arguably invest substantial resources in each of them, and, to spoil the next section, EA doesn't currently do that.

A snapshot of EA

A quick reading of EA history suggests that when the movement was born, it focused primarily on identifying the most cost-effective interventions within pre-existing cause-specific areas (e.g. the early work of GiveWell and Giving What We Can). Subsequently, it paid increased attention to additional causes, and evaluated which of these (Global Health, Animal Welfare, or Global Catastrophic Risk) was most promising. However, as we shall see below, almost all prioritization work in the community takes place at the level of within-cause prioritization, with little devoted to cause prioritization and even less to cross-cause prioritization.

In this section we consider some salient organizations in EA and provide a quick classification of their activities into different types of prioritization.[7]

GiveWell

In 2022, GiveWell directed $439 million and, as of January 2025, it employed 77 people.[8] The key figure is the amount of time spent on prioritization work, which is approximately 39 full-time equivalents (FTE) based on the number of researchers listed on their website (distributed among the Commons, Cross-cutting, Malaria, New Areas, Nutrition, Research Leadership, Vaccines, and Water & Livelihoods teams, but excluding Research Operations). Because GiveWell is focused on Global Health, this figure counts towards the within-cause type of prioritization.[9]

Animal Charity Evaluators

Animal Charity Evaluators has about 16 employees and moves $10 million a year. About four people focus on operations. We register the other 12 FTEs as within-cause prioritization research in the animal welfare sector.

Rethink Priorities

Rethink Priorities engages in research across various EA causes. All research teams focus on prioritization work within specific causes, except for the Surveys Team – which does 85% within-cause (i.e. 1.9 FTEs) and 15% cross-cause prioritization (i.e. 0.3 FTEs) – and the Worldview Investigations Team, with 4.9 FTEs across CCP (60%, i.e. 3 FTEs) and CP (40%, i.e. 1.9 FTEs). The rest adds up to 29 FTEs on WCP, including 7 FTEs for Animal Welfare and 7 FTEs for Global Health and Development.[10]

Global Priorities Institute

The Global Priorities Institute doesn't fit neatly into any single category; it addresses foundational questions relevant to all levels of prioritization. Some of its work assumes worldviews like longtermism and focuses on issues such as risks from artificial intelligence. However, GPI generally doesn't compare specific interventions by name, or indeed causes, but provides considerations useful for prioritization across the board. With 16 full-time researchers and 19 affiliates or scholars, GPI can be thought of as (sometimes indirectly) doing a mix of the three, with a higher load of CP at 15.8 FTEs, some WCP at 4.2 FTEs, and almost no CCP at 0.6 FTEs.[11]

Open Philanthropy

Open Philanthropy disburses on the order of $750 million annually and now employs nearly 150 people. Its work spans object-level analysis, grantmaking, and prioritization research. As part of its prioritization efforts, some teams explicitly conduct within-cause prioritization: approximately 7 people work on Global Health cause prioritization and 5 on Global Catastrophic Risks cause prioritization (despite the team names, both rank options within a single cause, so we count them as WCP), and 2 contribute to Farm Animal Welfare research. On the grantmaking side, most of the work falls to about 25 people whose principal responsibility is Global Catastrophic Risks, 15 for Global Health, and 5 for Farm Animal Welfare. In total, we estimate 59 FTEs on WCP and 1.5 FTEs on CP (reflecting, for example, internal cause prioritization exercises and needs), including some participation from leadership.[12]

Ambitious Impact

Ambitious Impact (formerly Charity Entrepreneurship) is dedicated to enabling and seeding effective charities through its research process and incubation program. Over the past year, it has allocated roughly 4 FTEs to within-cause prioritization, including 3 full-time permanent research team members, with additional support from fellows and contractors on a fluctuating basis. In contrast, only around 0.1 to 0.2 FTEs were spent on cause prioritization, reflecting its emphasis on deeper within-cause reports and analyses.[13]

80,000 Hours

80,000 Hours dedicated the majority of its prioritization capacity to cause prioritization: roughly 3 FTE, primarily from the web team that researches problems and recommends cause rankings, though that figure decreased to about 2 FTE in 2024 after the departure of one researcher. This total included the team lead but excluded, for instance, the content associate. An additional 1 FTE came from the collective effort of the leadership team and other staff members investigating questions such as the importance of AI safety, bringing the CP total to 3 FTEs. For cross-cause prioritization, our estimate was about 1 FTE for the web team’s work on neglectedness, tractability, and importance comparisons. Finally, about 1 FTE was devoted to within-cause prioritization through a combination of advisory work and the job board, e.g. in determining which roles at AI labs should appear on the job board. However, given the organization’s recent announcement that it is going all-in on AI, we classify this total capacity of 5 FTEs as within-cause.[14]

GovAI

The Centre for the Governance of AI (GovAI) focuses on researching threats that general-purpose AI systems may pose to security. It has 28 staff (6 focused on operations) and 20 affiliates. (We expect the affiliates' involvement to vary but use a rough 15% FTE engagement for each.) GovAI also runs two three-month fellowships per year (with 18 and 12 fellows in 2024), which adds another 7.5 FTEs. The arithmetic yields an estimate of 22 + 20 × 0.15 + 7.5 = 32.5, or roughly 32 FTEs.
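For transparency, the GovAI estimate can be reproduced in a few lines. This is a minimal Python sketch using only the inputs stated above; the 15% affiliate engagement is our rough assumption, and treating each three-month fellowship place as a quarter of a full-time year is how we arrive at 7.5 fellowship FTEs. With these inputs the total is 32.5 FTEs, which the table below rounds to 32.

```python
# Rough GovAI FTE estimate from the inputs stated in the text.
STAFF = 28
OPERATIONS_STAFF = 6
AFFILIATES = 20
AFFILIATE_ENGAGEMENT = 0.15        # assumed average fraction of full time per affiliate
FELLOWS_PER_COHORT = [18, 12]      # two three-month fellowships in 2024
FELLOWSHIP_LENGTH_YEARS = 3 / 12   # each fellowship place counts as a quarter of an FTE


def govai_fte() -> float:
    """Research staff + part-time affiliates + fellowship places, in FTEs."""
    research_staff = STAFF - OPERATIONS_STAFF                       # 22
    affiliates = AFFILIATES * AFFILIATE_ENGAGEMENT                  # 3.0
    fellows = sum(FELLOWS_PER_COHORT) * FELLOWSHIP_LENGTH_YEARS     # 7.5
    return research_staff + affiliates + fellows


print(govai_fte())
```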

Longview Philanthropy

Longview Philanthropy works with donors to increase the impact of their giving. Three full-time staff focus on within-cause prioritization in artificial intelligence, and two are dedicated to nuclear risk within-cause work (5 FTEs in total). Additionally, a small portion, about 0.05 FTEs, is devoted to cause prioritization. (In this role, the content team and leadership periodically assess resource allocations, such as how best to deploy the Emerging Challenges Fund among artificial intelligence, biosecurity, and nuclear risk initiatives.)[15]

EA Funds

EA Funds supports organizations and projects within the Effective Altruism community through its grantmaking and funding prioritization activities. The organization carries out a range of prioritization work across its four funds: Global Health and Development, Animal Welfare, Long-Term Future, and EA Infrastructure. Most team members hold full-time positions elsewhere in addition to their EA Funds role; for example, the current chair of the Animal Welfare Fund is the only full-time member dedicated exclusively to that fund. Overall, EA Funds’ combined capacity is estimated at 7 FTEs, and this work is classified as within-cause prioritization, with each fund treated as a distinct cause area. We assigned an additional 0.1 FTEs to cause prioritization for the strategic, big-picture thinking that the leadership and advisors perform.[16]

A summary of the relevant figures for each organisation is presented below.

| Organisation | Within-Cause Prioritization (FTEs) | Cause Prioritization (FTEs) | Cross-Cause Prioritization (FTEs) |
| --- | --- | --- | --- |
| GiveWell | 39 | 0 | 0 |
| Animal Charity Evaluators | 12 | 0 | 0 |
| Rethink Priorities | 30.9 | 1.9 | 3.3 |
| Global Priorities Institute | 4.2 | 15.8 | 0.6 |
| Open Philanthropy | 59 | 1.5 | 0 |
| Ambitious Impact | 4 | 0.2 | 0 |
| 80,000 Hours | 5 | 0 | 0 |
| GovAI | 32 | 0 | 0 |
| Longview | 5 | 0.05 | 0 |
| EA Funds | 7 | 0.1 | 0 |
| Total | 198.1 | 19.55 | 3.9 |
| Proportion | 89.4% | 8.8% | 1.8% |

It's striking that, at least in the context of the organizations above, the revealed effort devoted to within-cause prioritization is roughly 8.5 times that allocated to cause prioritization and cross-cause prioritization combined (about 198 FTEs versus about 23).[17] Perhaps there are practical considerations that justify this distribution, but it's also possible that this simply isn't the optimal allocation of effort among the different types of prioritization work. Let us consider the strengths and weaknesses of each type next.
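The totals, proportions and ratio can be recomputed directly from the per-organization rows. The Python sketch below uses the table's figures; the dictionary layout is just one convenient choice. Summed row by row, cause prioritization comes to 19.55 FTEs (Longview's 0.05 FTEs are described as cause prioritization) and cross-cause prioritization to 3.9 FTEs, which leaves the proportions at 89.4%, 8.8% and 1.8%.

```python
# FTE estimates per organization, taken from the table:
# (within-cause, cause prioritization, cross-cause)
FTE = {
    "GiveWell": (39, 0, 0),
    "Animal Charity Evaluators": (12, 0, 0),
    "Rethink Priorities": (30.9, 1.9, 3.3),
    "Global Priorities Institute": (4.2, 15.8, 0.6),
    "Open Philanthropy": (59, 1.5, 0),
    "Ambitious Impact": (4, 0.2, 0),
    "80,000 Hours": (5, 0, 0),
    "GovAI": (32, 0, 0),
    "Longview": (5, 0.05, 0),
    "EA Funds": (7, 0.1, 0),
}


def column_totals(fte: dict) -> tuple:
    """Sum each prioritization type across all organizations."""
    wcp = sum(row[0] for row in fte.values())
    cp = sum(row[1] for row in fte.values())
    ccp = sum(row[2] for row in fte.values())
    return wcp, cp, ccp


wcp, cp, ccp = column_totals(FTE)
total = wcp + cp + ccp
for name, value in [("WCP", wcp), ("CP", cp), ("CCP", ccp)]:
    print(f"{name}: {value:.2f} FTEs ({value / total:.1%})")
print(f"WCP / (CP + CCP) = {wcp / (cp + ccp):.2f}")
```

Run as-is, it prints WCP 198.10 FTEs (89.4%), CP 19.55 FTEs (8.8%), CCP 3.90 FTEs (1.8%), and a WCP-to-(CP + CCP) ratio of 8.45.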

The Types of Prioritization Evaluated

This section is a summary of our more comprehensive breakdown of the strengths and pitfalls of each type, available in the appendix.

Cause Prioritization

Cause prioritization involves comparing broad cause areas (like global health vs. existential risk) to figure out which problems most deserve attention. Since there are fewer causes than there are interventions, a shorter target list simplifies the task at hand, freeing up researcher time.

One specific benefit of engaging in prioritization at the level of causes is that this process can highlight neglected but high-potential areas that might otherwise be overlooked. If people try to prioritize interventions directly (rather than causes), they risk missing whole large areas simply because those areas are not salient. For example, people might prioritize all the neglected tropical diseases they can think of and completely ignore AI. Thus, engaging in prioritization at a higher level of abstraction (that of causes) can make it less likely that we will miss large areas of high-impact interventions that were not previously salient.

Moreover, perhaps counterintuitively, it can be easier to do prioritization research at the level of causes (for example thinking about the value of solving general problems) than thinking about an intervention’s impact – especially for those that are more speculative. Put another way, it is often easier to sketch out how much the world would benefit from preventing risky AI technologies than to know how much a particular intervention would ultimately do towards mitigating that risk, given our uncertainty about future events.[18] That said, this is tentative. The reverse can be true, and practical considerations should ideally be taken into account in cause prioritization work.

However, in other ways, comparing the value of different causes can be especially challenging. Researchers must consider ethical trade-offs, uncertainty, and the potential for model errors. At its best, this means that cause prioritization can lead to the beneficial development of frameworks, metrics, and criteria that improve prioritization methods overall. At its worst, and perhaps more commonly, it just leads to lots of intuition-jousting between vague qualitative heuristics.

Another issue is the tendency to overlook practical difficulties of implementation and infrastructure. It’s one thing to identify “AI risk” as critical; it’s another to actually fund and execute effective projects in that area. High-level cause analysis can gloss over tractability – e.g. a cause like artificial intelligence safety might score as hugely important, but there may be a shortage of shovel-ready interventions, experienced organizations, or clear pathways to impact in the short term.[19] In contrast, a cause like global health has an extensive infrastructure (proven charities, supply chains for bednets, etc.) that makes turning funding into impact much more straightforward. Cause prioritization sometimes underestimates these on-the-ground realities, risking plans that sound great on paper but falter in practice.

Organizations and movements might also sensibly choose to diversify their efforts – perhaps because of normative uncertainty, decision-theoretic uncertainty, or empirical uncertainty about causes in practice. For cross-cause prioritization to enable altruistic organizations to spread risk across multiple causes, cause prioritization must first decide how to make sense of these causes, and point cross-cause work in the right direction.

Ultimately, cause prioritization carries particularly high stakes: if it goes wrong, it can lead to the dismissal of entire classes of interventions and the deprioritization of key problems. Conversely, it can also lead to incorrectly ruling in large classes of interventions that fall in a superficially promising cause area but are not, say, actually tractable. Even if the newly prioritized causes do well, there is a risk of having deprioritized the most promising interventions (because they do not fall within the most promising causes).

Within-Cause Prioritization

Within-cause prioritization zooms in on a single cause area (for example, global health, climate change, or animal welfare) and asks: which interventions or projects in this domain do the most good? Within-cause prioritization has several advantages which might allow more rigorous estimates of fine-grained interventions. One such possible virtue is the specialization of within-cause research. This specialization means analysts and organizations become domain experts, often uncovering nuanced improvements that dramatically boost impact (such as optimizing vaccine schedules or finding new treatments). This evidence-driven, granular approach excels a) in areas where institutional expertise can be leveraged and b) where success can be measured and repeated, producing confident recommendations. In short, within-cause prioritization offers precision. When you hold the cause area fixed, you can more genuinely compare apples to apples – and often find some apples are amazingly juicier than others.

Another strength of this approach is its empirical tractability. Working within one field means we can often gather concrete data and use consistent metrics to compare options. In global health, for instance, researchers can run randomized trials or use epidemiological data to measure outcomes like lives saved or DALYs averted. This yields clear rankings of interventions by cost-effectiveness. We’ve seen that rigor pay off: some studies found that in health, the top interventions (like distributing insecticide-treated bednets for malaria or, more recently, lead elimination) were dozens of times more effective than typical interventions.

A cause-specific approach can also better attract certain classes of funders. Potential donors, especially those outside of the EA space, are more likely to fund interventions within familiar contexts tied to their cause-specific values, preferences, and commitments. Moreover, it can be easier to build movements (of both funders and problem-solvers) around the importance of a particular problem or cause. This can be because of shared worldviews, identifiable recruiting pathways (e.g. from existing research departments, conferences, and organizations), and a shared language.

All that said, a laser focus within one cause can lead to local optima and tunnel vision. By keeping our heads down in one field, we might miss the bigger picture across causes. An intervention can be the best in its category and still be suboptimal from an overall welfare perspective if the category itself isn’t where resources can do the most good. For example, a global health expert might spend time debating whether anti-malaria bednets are more cost-effective than malaria vaccines – a valuable comparison within global health – yet completely overlook animal welfare interventions as an alternative use of funds. Moreover, within-cause prioritization is prone to neglecting out-of-cause sources of value or disvalue (e.g. global health specialists risk neglecting animal welfare, or vice versa). Ultimately, it can become easy to take some cause area as a given and not question it, especially as institutions and individuals’ careers become consolidated. These dynamics can be exacerbated by the increased potential for groupthink as prioritization becomes dominated by specialists with an interest in one particular cause. Such tunnel vision can result in overlooking opportunities in other causes, or synergies between causes, that potentially dwarf the gains of even the best in-cause option.

There’s also the danger of metric myopia: within a single domain, people tend to optimize for what’s easily measurable (e.g. DALYs for health, CO₂ emissions for climate), which can marginalize important but harder-to-measure benefits. Thus, while within-cause prioritization brings scientific rigor and specialization, it must be balanced with a willingness to occasionally look up and zoom out. Otherwise, we might perfect the wrong plan, achieving the best outcome in a narrower field that no longer is, or never was, the top priority overall.

Cross-Cause Prioritization

Cross-cause prioritization takes the widest view, while still operating at the level of interventions – it tries to compare and allocate resources across fundamentally different causes based on impact. This approach is maximally flexible – instead of committing to one cause, it allows an altruist to continually ask “Where can my next dollar or hour do the most good, anywhere?” The strength here is that it embraces an impact-first mindset, unconstrained by silos.

A cross-cause framework can detect synergies and neglected opportunities that a single-cause focus might miss. For example, it might reveal that a dollar spent on a particular pandemic prevention intervention yields benefits for both global health and existential risk reduction, a two-for-one impact that a siloed analysis wouldn’t fully capture. Many EA-aligned philanthropists use cross-cause comparisons on some level to build a portfolio of interventions – funding a mix of global health, climate, animal welfare, and long-term future projects in proportion to how much good they expect additional resources in each would do.
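One simple way to formalize "fund wherever the next dollar does the most good" is greedy marginal allocation: split the budget into small increments and give each increment to the cause whose value would rise most. The Python sketch below is an illustration under stated assumptions, not a description of how any funder actually allocates: the three causes, their logarithmic diminishing-returns curves, and the $100 million budget are all hypothetical.

```python
import math

# Hypothetical diminishing-returns curves, value(x) = scale * log(1 + x / saturation),
# where x is funding in $ millions. Names, scales and saturation points are invented.
CAUSES = {
    "global health": (10.0, 50.0),
    "animal welfare": (6.0, 10.0),
    "long-term future": (8.0, 20.0),
}


def value(cause: str, funded: float) -> float:
    """Hypothetical total value produced by `funded` ($M) in `cause`."""
    scale, saturation = CAUSES[cause]
    return scale * math.log1p(funded / saturation)


def allocate(budget: float, step: float = 1.0) -> dict:
    """Greedy marginal allocation: each increment goes where it adds the most value."""
    funded = {cause: 0.0 for cause in CAUSES}
    remaining = budget
    while remaining >= step:
        best = max(
            CAUSES,
            key=lambda c: value(c, funded[c] + step) - value(c, funded[c]),
        )
        funded[best] += step
        remaining -= step
    return funded


print(allocate(100.0))
```

Because each hypothetical curve is concave, this greedy procedure finds the best split for these curves; here it ends at 25, 35 and 40 ($ millions) for global health, animal welfare and the long-term future. The point is only that a mixed portfolio can fall out of pure impact maximization once returns diminish.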

This approach is also adaptive: as new evidence or cause areas emerge, cross-cause reasoning can redirect effort dynamically. For example, given its cause-transcending and evolving nature, the movement is potentially able to quickly shift resources between interventions across many causes. The advantage is an ever-present focus on what we ultimately care about: overall impact maximization. In cross-cause work you’re constantly evaluating trade-offs, which helps ensure that easy wins in any domain aren’t left on the table. Taking stock of these strengths: cross-cause prioritization empowers EAs to find the best interventions, period – it’s the tool that attempts to put everything on a common ledger and identify where resources can do the most good across all of reality’s suffering and opportunity.

In the end, while this type of work can be more challenging, it forces researchers to navigate difficult trade-offs and compare apples and oranges, which can lead to progress in decision theory, ethics, and other fields that make cross-cause prioritization possible.

With that broad a mandate, however, come significant challenges. One of the biggest issues is ethical commensurability – essentially, how do you compare ‘good done’ across wildly different spheres? Each cause tends to have its own metrics and moral values, and these don’t easily line up. Saving a child’s life can be measured in DALYs or QALYs, but how do we directly compare that to reducing the probability of human extinction, or to sparing chickens from factory farms? Cross-cause analysis must somehow weigh very different outcomes against each other, forcing thorny value judgments. One concrete example is comparing global health vs. existential risk. Global health interventions are often evaluated by cost per DALY or life saved, whereas existential risk reduction is about lowering a tiny probability of a huge future catastrophe. A cross-cause perspective has to decide how many present-day lives saved are “equivalent” to a 0.01% reduction in extinction risk – a deeply fraught question. Likewise, comparing human-centric causes to animal-focused causes requires assumptions about the relative moral weight of animal suffering vs. human suffering. If there’s no agreed-upon exchange rate (and people’s intuitions differ), the comparisons can feel too disparate. Researchers have attempted to resolve this by creating unified metrics or moral weight estimates (for instance, projects to estimate how many shrimp-life improvements rival a human-life improvement), but there’s often no escaping the subjective choices involved. This means cross-cause prioritization can be especially contentious and uncertain: small changes in moral assumptions or estimates can flip the ranking of causes, leading to debate.
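To see how sensitive such comparisons are, here is a deliberately toy Python sketch. Every number in it is a hypothetical assumption chosen only for illustration: a global health program that saves a life for $5,000, and a $10 billion x-risk project that cuts extinction risk by 0.01 percentage points (we read the reduction as absolute). The only thing varied is whose lives count as being at stake: present people only (about 8 billion), or a hypothetical 10^12 lives including future people. It treats all lives equally and ignores discounting, tractability and moral weights.

```python
# Toy comparison: every number here is a hypothetical assumption for illustration.
HEALTH_COST_PER_LIFE = 5_000            # hypothetical $ per life saved by a health program
XRISK_PROJECT_COST = 10_000_000_000     # hypothetical $ cost of an x-risk project
XRISK_REDUCTION = 0.0001                # assumed absolute cut in extinction risk (0.01 points)


def xrisk_cost_per_life_equivalent(lives_at_stake: float) -> float:
    """Dollars per expected life saved, if extinction would cost `lives_at_stake` lives."""
    expected_lives_saved = XRISK_REDUCTION * lives_at_stake
    return XRISK_PROJECT_COST / expected_lives_saved


def better_option(lives_at_stake: float) -> str:
    """Rank the two options by cost per (expected) life; lower is better."""
    xrisk = xrisk_cost_per_life_equivalent(lives_at_stake)
    return "x-risk" if xrisk < HEALTH_COST_PER_LIFE else "global health"


for label, lives in [
    ("present people only", 8e9),
    ("present plus hypothetical future people", 1e12),
]:
    cost = xrisk_cost_per_life_equivalent(lives)
    print(f"{label}: ${cost:,.0f} per life-equivalent -> {better_option(lives)}")
```

Under these assumptions, the x-risk project costs $12,500 per life-equivalent when only present people count, so global health comes out ahead; counting the hypothetical future lives drops that to $100 and the ranking flips. Changing one assumption, not the evidence, reverses the recommendation.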

Another downside is that there is less institutional expertise that can be leveraged in cross-cause comparisons, as well as the potential for metric inconsistency and complexity. Aggregating evidence across causes is very hard – the data and methodology you use to assess a poverty program vs. an AI research project are entirely different. Having worked on broad cross-domain analyses of this kind, we have previously noted how difficult it is to incorporate “the vast number of relevant considerations and the full breadth of our uncertainties within a single model” when comparing across domains. Despite these difficulties, cross-cause prioritization remains a key tool in EA’s toolkit for optimally allocating resources. More than that, identifying the highest-impact interventions across all causes is arguably what we as EAs ultimately want to achieve with any prioritization work, and we should be cautious about succumbing to the streetlight effect merely because cross-cause work is difficult.[20]

Summary Table

We have put together the relevant considerations (see their breakdown here) into a table below. Often, the strengths of one approach are directly related to the weaknesses of another.[21]

There are clear tradeoffs between the different modes of prioritizing: not a single consideration is a weakness or strength for all three types. At a glance, this points towards a mixed strategy.

What factors should push us towards one or another?

In particular, there are some cruxes that would change the ideal composition of the prioritization strategy. We outline eight of these below.

Cruxes

If the best existing interventions are split across the board and the cost of building new capacity is factored in, then cross-cause prioritization is indicated. When promising projects are distributed across multiple causes – perhaps some requiring substantial infrastructure while others are ready to scale immediately – cross-cause prioritization allows us to weigh both the immediate benefits and long-term investments, and point us in the right direction.

If we are very certain about our moral framework and our understanding of the world at the cause level, it is natural to lean more heavily on the cause that aligns with our beliefs and pursue within-cause prioritization. High confidence in our ethical perspectives and empirical data makes it sensible to concentrate resources in a specific domain. In particular, if we were confident that we are living in a unique juncture where one area is facing extraordinary risks — such as a window of enormous nuclear peril or the entrenchment of harmful factory farming practices — then within-cause prioritization is justified. Conversely, if there is significant moral or epistemic uncertainty, blending cause-level and cross-cause evaluations can help hedge against that uncertainty.

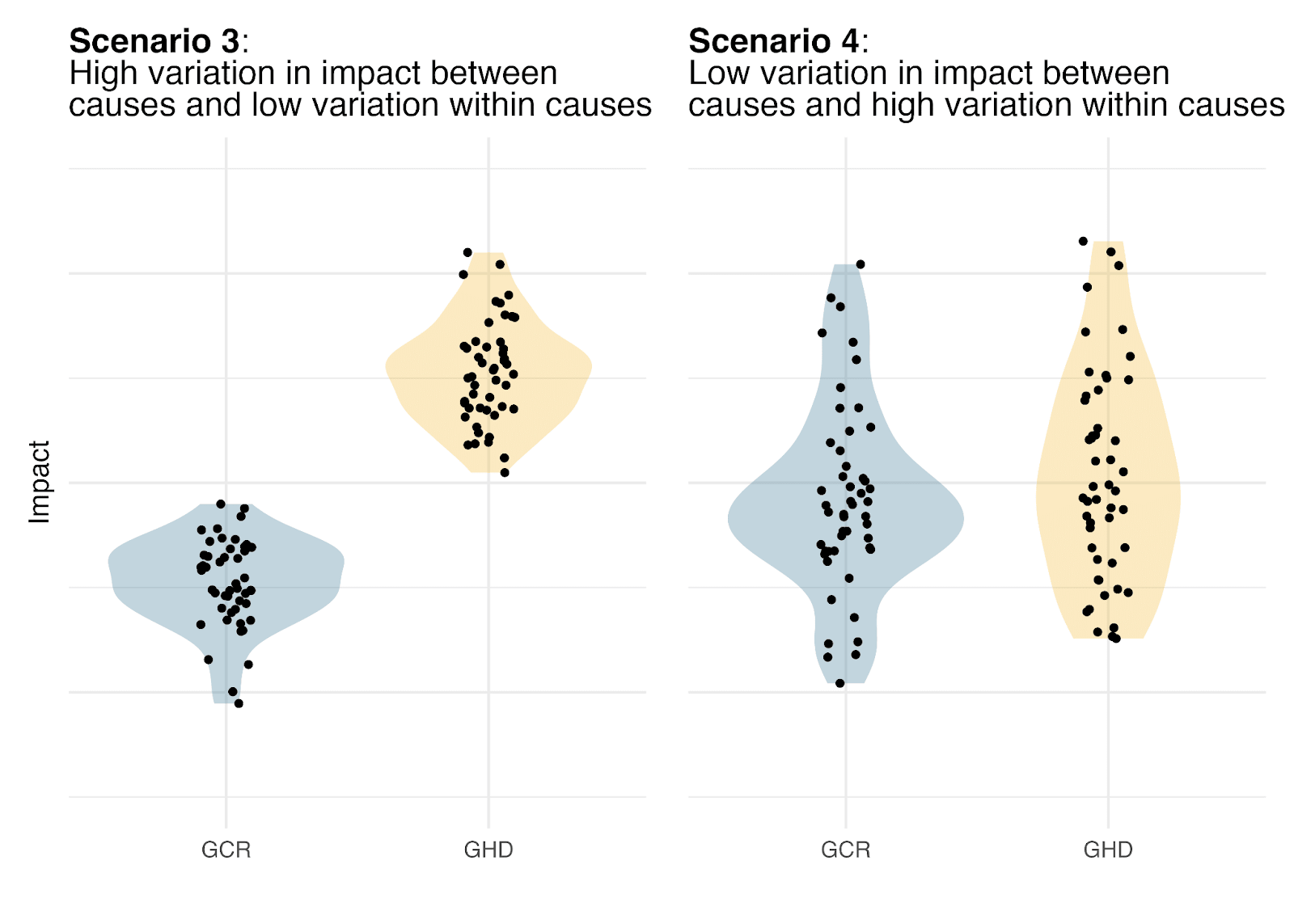

If the variation between causes is high while the differences among interventions within each cause are relatively small, then cause prioritization is likely to be more effective. When overall impact potential varies significantly from one cause to another, and the best interventions in non-top fields show less promise than interventions from a top cause, finding the right cause and then specialising on it is the ideal course of action.

If the differences in impact among individual interventions across causes are very large, then cross-cause prioritization becomes a more compelling strategy. In situations where the distribution of impact across individual projects spans a wider range than the distribution across cause categories, prioritizing at the level of causes may be less effective and prioritizing at the level of interventions across causes more so.[22] For example, if an artificial intelligence risk reduction project proves to be vastly more cost-effective than any intervention in global health, cross-cause analysis will highlight this exceptional potential. This strategy allows decision-makers to compare disparate outcomes and select the intervention with the highest overall impact.

If obtaining detailed information at the level of individual interventions proves to be generally intractable, then it is sensible to rely on cause prioritization rather than within-cause or cross-cause approaches. When gathering granular data is too complex or resource-intensive, evaluating causes at a higher level can still provide strategic guidance for resource allocation. For example, this applies in more speculative x-risk sectors where reliable field data is scarce and we rely heavily on forecasts, overarching trends, and higher-level predictions. Suppose instead you thought it was intractable to obtain information about which abstract cause is best (e.g. you hold an epistemic view under which we can gain reliable knowledge (only) through rigorous empirical investigation, RCTs, tight feedback loops, etc., but we can't gain reliable knowledge about whether global health as a whole or AI risk is better). In that case, the opposite conclusion would hold: we’d turn away from cause prioritization.

If you are worried about making a meaningful difference and are averse to futility, then diversifying your portfolio, and performing cause-level and cross-cause prioritization, is a wise strategy. Concern over avoiding wasted efforts calls for diversifying resources across multiple causes to reduce the risk of correlated outcomes from overfocusing on one area. For instance, an organization might allocate funding across global health, AI, and biosecurity projects to ensure that a setback in one field does not derail all progress. Intervention and cause diversification, made possible through a blend of cause-level and cross-cause prioritization work, builds resilience and increases the probability of achieving impact.

If we live in a period of high uncertainty about causes and interventions (for example, because of rapid, transformative technological shifts), it becomes important to prioritize causes and conduct shallow investigations to adjust our strategies. Dramatic global shifts require a quick reassessment of which causes demand urgent attention and which may have diminished in relative importance. For example, the emergence of new risks associated with artificial intelligence may prompt a reallocation of resources from traditional programmes to newly identified high-impact domains. A broad, high-level evaluation helps the community remain agile and responsive in the face of the uncertainty brought about by this rapid change.

If some cause’s practical reality makes it decisively more cost-effective (for example, because we can leverage cause-specific funding or expertise to a very large degree), then a within-cause-focused strategy is advisable. When some cause is dominant, prioritization within it becomes especially valuable. For example, when specialized funding or institutional strengths dramatically enhance the efficiency of an intervention, concentrating prioritization efforts in that area can be particularly high-impact. Concretely, if a major foundation or government with cause commitments is liable to shift enormous funding efforts toward significantly better interventions, prioritization within that cause becomes extremely important.
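To make the first crux above concrete, here is a toy sketch. All numbers are hypothetical and chosen only for illustration, and the cause-first procedure assumes (as one possible reading, not the authors' method) that a cause-level assessment tracks each cause's average intervention. Cause A has the higher average, but the single best intervention sits in the more variable cause B, so the cause-first procedure misses it while a direct cross-cause comparison finds it.

```python
from statistics import mean

# Hypothetical cost-effectiveness estimates (units of value per $1,000).
# Illustrative numbers only, not real estimates.
causes = {
    "cause_A": [10, 11, 12, 13],  # higher average, little spread
    "cause_B": [1, 2, 3, 30],     # lower average, one outlier intervention
}

def cause_first_pick(causes):
    """Pick the cause with the highest average, then its best intervention."""
    best_cause = max(causes, key=lambda c: mean(causes[c]))
    return best_cause, max(causes[best_cause])

def cross_cause_pick(causes):
    """Compare every intervention directly, regardless of its cause."""
    return max(
        ((cause, value) for cause, values in causes.items() for value in values),
        key=lambda pair: pair[1],
    )

print(cause_first_pick(causes))  # ('cause_A', 13)
print(cross_cause_pick(causes))  # ('cause_B', 30)
```

If cause-level assessments instead tracked each cause's top intervention, the two procedures would agree in this example; which reading is closer to how cause prioritization works in practice is one of the open questions raised under Possible Next Steps.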

Possible Next Steps

With the previous analysis in mind, below are several potential next steps aimed at refining our approach to prioritization by exploring variance, value of information, tractability, and the reliability of the different methods.

Perform research to assess the relative variance of interventions (and distribution patterns more generally) within and between causes, which likely requires some amount of cross-cause prioritization work.

Investigate the marginal value of information (VOI) across the different types of prioritization research — cause prioritization, within-cause prioritization, and cross-cause prioritization. This includes evaluating how much we improve our expected value estimates by reducing uncertainty at the level of causes versus within a cause or across causes. Such analyses could be conducted prospectively or assessed retrospectively using recent prioritization research.

Evaluate the practical tractability of each type of prioritization for various causes.

Examine how cause prioritization operates in practice. Unlike the other two methods, which are relatively straightforward, CP is more heavily heuristic-based, leaving open important questions about its reliability. For instance, does high-level CP reveal the average value of an intervention within a cause or does it highlight the value of that cause’s top-tier interventions? Additionally, consider whether causes are the appropriate unit of analysis — particularly since problems at a given scale might naturally decompose into sub-causes with varying levels of tractability and neglectedness — and how this influences our prioritizations.

Determine the desirable degree of confidence and certainty regarding cruxes that affect cross-cause prioritization strategies (e.g. moral weights across species or optimal risk attitudes in altruistic spaces).
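For the value-of-information item in the list above, the following minimal sketch computes the expected value of perfect information (EVPI): the gain from learning which state of the world holds before choosing, compared with committing now to the option with the best expected value. EVPI is an upper bound on what any research that resolves that uncertainty could be worth. The two options, the two states, and all probabilities and values are hypothetical; an actual analysis would compare figures like this for uncertainty at the cause level, within a cause, and across causes.

```python
# Hypothetical states of the world, each with a probability and the value of
# funding each option in that state. All numbers are illustrative.
states = [
    {"prob": 0.5, "values": {"option_X": 10.0, "option_Y": 4.0}},
    {"prob": 0.5, "values": {"option_X": 2.0, "option_Y": 6.0}},
]
options = ["option_X", "option_Y"]

def expected_value(option):
    """Probability-weighted value of an option across states."""
    return sum(s["prob"] * s["values"][option] for s in states)

def value_without_information(options):
    """Commit now to the option with the highest expected value."""
    return max(expected_value(o) for o in options)

def value_with_perfect_information(options):
    """Learn the true state first, then pick the best option in that state."""
    return sum(s["prob"] * max(s["values"][o] for o in options) for s in states)

evpi = value_with_perfect_information(options) - value_without_information(options)
print(evpi)  # 2.0
```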

Regardless of whether they engage in more systematic research into questions like those above, we encourage people in this space to think critically about both the quantitative and qualitative value of the prioritization types we’ve discussed, especially their value relative to one another.

Conclusion

This post sought to introduce different types of prioritization work and make the case that we should be deliberate about how we devote resources to each type. We saw some examples of organizations in the EA space and how their research might be classified using this framework. We outlined the main strengths and weaknesses of each type. Finally, it seems that although within-cause prioritization has clear virtues (and is the dominant type of research today, by roughly nine to one), the EA movement would likely benefit from spending more research time on the other two types, given their importance relative to within-cause research, the impartial orientation of the movement, and the uncertainty that surrounds us.

This suggestion calls for more detailed research into the ideal levels of effort that should be put into each kind of prioritization. Every type of prioritization has its pros and cons (see the appendix’s breakdown), and by integrating them, effective altruists can aim to overcome the limitations of each — choosing causes with big upside, pursuing the best interventions in those causes, and constantly checking if our focus should shift elsewhere. This balance is how we try to do the most good with the resources we have.

Acknowledgements

This post was written by Rethink Priorities' Worldview Investigations Team. Thank you to Oscar Delaney, Elisa Autric, Shane C., and Sarah Negris-Mamani for their helpful feedback. Rethink Priorities is a global priority think-and-do tank aiming to do good at scale. We research and implement pressing opportunities to make the world better. We act upon these opportunities by developing and implementing strategies, projects, and solutions to key issues. We do this work in close partnership with foundations and impact-focused non-profits or other entities. If you're interested in Rethink Priorities' work, please consider subscribing to our newsletter. You can explore our completed public work here.

Appendix: Strengths and Pitfalls of Each Type

This appendix breaks down the strengths and potential weaknesses of each form of prioritization work.

Within-Cause Prioritization Strengths

Decision-Making Support

Requires fewer comparisons:

An immediate virtue of splitting interventions into causes is that the target set of projects to prioritize is of a more manageable size.

A shorter project list simplifies the task at hand, freeing up researcher time that can be put towards more prioritization work.
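A quick count illustrates this. Assuming, hypothetically, 300 candidate interventions split evenly across three causes, and treating prioritization as comparing pairs of interventions, restricting comparisons to within each cause cuts the number of pairs to roughly a third:

```python
def pairwise_comparisons(n: int) -> int:
    """Number of distinct pairs among n items (n choose 2)."""
    return n * (n - 1) // 2

def within_cause_comparisons(n_interventions: int, n_causes: int) -> int:
    """Pairs compared when interventions are split evenly across causes
    and only compared within their own cause (even split is an assumption)."""
    per_cause = n_interventions // n_causes
    return n_causes * pairwise_comparisons(per_cause)

print(pairwise_comparisons(300))         # 44850
print(within_cause_comparisons(300, 3))  # 14850
```

With k equal-sized causes the reduction approaches a factor of k. Counting pairs is only a rough proxy for research effort, since real prioritization need not compare every pair.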

Comparability of Outputs

Facilitates like-for-like comparisons:

Within a specific cause, the background conditions, assumed values, and epistemic standards (e.g. the evaluation of intervention efficacy or frameworks for impact assessment) are relatively stable and shared among the interventions under consideration.

This allows for more straightforward, context-specific data collection and analysis. For example, when comparing long-lasting insecticidal nets to seasonal malaria chemoprevention, the population affected and the metrics for success (like malaria incidence) are directly comparable.

Presents clearer empirical demands:

Fixing the context often makes the evidence needed to perform comparisons clearer. This promotes more detailed, in-depth evaluations and the collection of specific data, allowing researchers to focus on increasing the quality of that data, which can lead to better-supported conclusions.

Similarly, the bar that an intervention needs to meet to surpass another is better defined.

Carries metric consistency:

Within causes, assessments can leverage standardised metrics (e.g. DALYs for health interventions). This facilitates data collection and evidence aggregation.

Greater ethical and conceptual commensurability:

Within-cause prioritization avoids the conceptual and ethical complexity of comparing interventions across vastly different domains.

By focusing on more comparable interventions, there is less scope for the challenges that arise from worldview disagreements.

Disciplinarity Advantages

Benefits from specialisation:

Researchers can develop deep subject-matter expertise in specific areas, improving their productivity and the quality of their work.

In particular, this specialisation can lead to better models, more accurate data, and context-specific insights that would be absent in broader, cross-cause analyses.

Leverages domain-specific knowledge, institutions, and empirical studies:

For within-cause analysis, relevant evidence can more often come from direct empirical studies (like randomised controlled trials for health interventions) that are specific to that domain. These studies are more abundant within well-established areas like global health or development economics, where fieldwork is common.

Domain experts are well-equipped (with time and knowledge) to find important flaws and hidden or incorrect assumptions in analyses that are done by generalists researching at the cause level.

Prioritization work within a cause can sometimes leverage institutional expertise, since universities and research groups are often organised by specific fields or disciplines, allowing for access to established methodologies and domain-specific data sources. This structure supports deeper analysis and fosters collaboration with subject-matter experts, leading to more rigorous research.

Responsiveness to Evidence

Detailed research can be insightful and more attractive:

Cause-specific assumptions and methods stand on existing peer-reviewed literature more often, which allows researchers to build on previous work and go deeper.

Greater depth can spark ideas for promising new interventions, allow researchers to better diagnose problems, and result in more detailed recommendations to improve existing interventions and cover key funding gaps.[23] For example, consider wild animal prioritization research that leads to the discovery that targeted habitat modifications can reduce heat stress for certain species.

Detailed research is plausibly more likely to be replicable than less-detailed research.

Detailed research and paths to impact make it easier to communicate with stakeholders.

Donors often prefer to fund interventions with more detailed, well-defined, and measurable outcomes.

Movement Building

Attracts cause-specific funders:

Potential donors, especially those outside of the EA space, are more likely to fund interventions within familiar contexts tied to their cause-specific values, preferences, and commitments.

Facilitates movement building:

It can be easier to build movements around the importance of a particular problem or cause. This can be because of shared worldviews, identifiable recruiting pathways (e.g. from existing research departments, conferences, and organizations), and a shared language.

A movement built around a cause might enjoy a more coherent culture and clarity around expertise and funding sources. Moreover, as people specialize, they are often more committed, enthusiastic, and motivated, which can facilitate movement growth.

Concentrated movements suffer less from other causes’ bad reputation:

A within-cause focus can allow the development of more granular sub-movement infrastructure, which may be more robust.

In particular, a more granular movement is less exposed to, and less likely to suffer from, the bad reputation of other causes with which it might otherwise be unified.

Within-Cause Prioritization Weaknesses and Potential Pitfalls

Responsiveness to Evidence

Risk of ‘local optimum’:

This type of prioritization can result in a ‘local optimum’ where resources are optimised within a specific cause, but a better opportunity in another cause is missed. For instance, a global health intervention might be highly cost-effective within that cause but less impactful when compared to animal welfare opportunities.

Even if the best opportunities in some cause happen to be the best overall, that does not imply that other top interventions within that cause will outperform interventions in other causes, which could still offer higher impact or cost-effectiveness. The opportunity cost of supporting suboptimal interventions can be very high.

This risk is particularly salient given the empirical and normative uncertainty we face in prioritization work.

Could be (erroneously) dismissed:

If people have strong enough beliefs about the (low) upper bound on a cause's impact, they might dismiss interventions within it, even if the evidence that those interventions outperform interventions from other causes is robust.

For example, if someone believed that global health was not a very important problem, and thus any interventions within it could at most have moderate impact, they could quickly dismiss an intervention in that sector. This is compatible with that particular intervention turning out to be very impactful and competitive with interventions from the sectors that the person thought were most important.

Reliance on cause prioritization:

If the initial choice of cause is suboptimal, then resources dedicated to optimising within that cause may be inefficiently allocated. This highlights the need for robust cause prioritization work to ensure that resources are directed toward causes with the greatest overall potential for impact.

This means that making progress in within-cause prioritization is generally not enough; it must be paired with progress in cause prioritization.

Because of this pairing, within-cause approaches can be understood to inherit the weaknesses of cause prioritization.

Epistemic dangers:

Not only are there practical challenges to pivoting causes, but personal connections to causes and sets of ideas can create epistemic blind spots, reducing people's willingness to engage with evidence and arguments that challenge their beliefs.

This "tunnel vision" effect can prevent more ambitious or transformative thinking about where to direct resources.

There’s a danger of metric myopia: within a single domain, people tend to optimize for what’s easily measurable (e.g. DALYs for health, CO₂ emissions for climate), which can marginalize important but harder-to-measure benefits.

Decision-Making Support

Synergies and interdependencies blind spots:

Working solely within a cause can prevent researchers from identifying cross-cause synergies. For example, it might be harder to find certain interventions (e.g. promising interventions benefitting both global health and climate resilience that are unremarkable when the benefit to each area is viewed in isolation) or new methodological approaches.

Potential cross-cause spillover effects, where an intervention in one area affects another, may be overlooked.

Reduced flexibility and agility:

The deeper the focus on a specific cause, the harder it becomes to pivot or reallocate resources if new evidence suggests that a different cause or intervention is more important.

Organizations and researchers may become "locked in" to their area of expertise, resisting shifts to new causes or frameworks.

This is particularly problematic in a rapidly changing world, especially one shaped by transformative technological developments (e.g. transformative AI), as such developments can shift global priorities and introduce high-impact interventions that may go unrecognized if researchers remain focused on a single older cause.

Movement Building

Concentrated movements benefit less from other causes’ good reputation:

The flip side of the above: a more granular movement is less likely to benefit from other causes' good reputation, as it might if they were unified. A multi-cause movement can sometimes leverage the reputation of its well-received causes for recruitment and fundraising.

The within-cause approach has numerous strengths. But, given its risks and likely pitfalls, it would be reckless not to do at least a minimal amount of cross-cause work. We explore that next.

Cross-Cause Prioritization Strengths

Given that many of the strengths and weaknesses are parallel (and opposite) to those of within-cause prioritization, the reader should feel free to jump to the summary table. Cause prioritization is also definitionally cross-cause, and it shares many of the considerations below.

Decision-Making Support

Encourages synergies and interdependencies:

Cross-cause analysis can reveal opportunities for synergies where one intervention positively influences outcomes in multiple causes (e.g. climate change mitigation supporting public health outcomes).

In fact, not evaluating interventions across causes risks missing important effects of interventions on other causes (e.g. human interventions on animals and vice versa).

More suitable for portfolio diversification:

Similar to financial portfolio theory, cross-cause prioritization can allow altruistic organizations to spread risk across multiple causes. This hedging approach reduces the risk of overcommitting to a single cause that may turn out to be less impactful than anticipated.

This is because interventions within the same cause are likely to exhibit stronger correlations in effectiveness due to their shared characteristics and underlying context.
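The diversification argument above, and the related crux in the main text about averting futility, can be sketched with two textbook formulas. The first is the standard two-asset portfolio variance from finance, giving the spread of total impact for a two-part portfolio given the correlation (rho) between the parts; the second gives the chance that every project fails at the two extremes of correlation. The weights, spreads, correlations, and success probabilities are hypothetical, and treating same-cause projects as highly correlated and cross-cause projects as weakly correlated is an assumption.

```python
import math

def portfolio_sd(w1: float, sd1: float, w2: float, sd2: float, rho: float) -> float:
    """Standard deviation of total impact for a two-part portfolio.

    Var = (w1*sd1)^2 + (w2*sd2)^2 + 2*w1*w2*rho*sd1*sd2,
    where rho is the correlation between the two parts' impact.
    """
    var = (w1 * sd1) ** 2 + (w2 * sd2) ** 2 + 2 * w1 * w2 * rho * sd1 * sd2
    return math.sqrt(var)

def p_all_fail(p_success: float, n_projects: int, independent: bool) -> float:
    """Chance that every project fails, at the two extremes of correlation."""
    if independent:
        return (1 - p_success) ** n_projects
    return 1 - p_success  # perfectly correlated: all succeed or fail together

# Hypothetical numbers: equal split, same spread of outcomes for each part.
same_cause = portfolio_sd(0.5, 10.0, 0.5, 10.0, rho=0.9)
different_causes = portfolio_sd(0.5, 10.0, 0.5, 10.0, rho=0.1)
print(round(same_cause, 2), round(different_causes, 2))  # 9.75 7.42

print(round(p_all_fail(0.3, 3, independent=True), 3))   # 0.343
print(round(p_all_fail(0.3, 3, independent=False), 3))  # 0.7
```

In this sketch, diversification lowers the spread of outcomes and the chance of total failure; it does not by itself raise expected impact.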

Can more easily be impact-first:

Though this is not automatic, and research may go off on tangents, the relentless pursuit of impact can find a more natural home under this type of prioritization, where there are no thematic constraints tied to particular causes.

By looking at multiple cause areas together, cross-cause prioritization encourages a broader view of impact. This avoids tunnel vision and highlights opportunities that might be overlooked in within-cause prioritization.

An impact-first approach may be more likely to have intrinsic goals in mind and might be less susceptible to failure by proxy (where instrumental but ultimately unimpactful goals are prioritized).

Responsiveness to Evidence

Less likely to be stuck on local optimum:

Extending the strength above, a cross-cause approach is tasked with filtering interventions by their cost-effectiveness and prioritizing among the top options, regardless of their cause.

Any proposed rankings can more closely reflect interventions’ true impact and not just their relative performance within a cause.

Flexible and easy to update:

As new evidence and considerations emerge, cross-cause prioritization allows for resource reallocation across different causes, making it more adaptable to changing global circumstances (e.g. pandemics, emerging AI risks, or environmental crises).

Is more open to unusual approaches:

It may reveal neglected but high-impact causes that would remain hidden under a within-cause framework.

Any projects that do not fall neatly into the topics and methods of any particular major cause might be neglected, relative to their cost-effectiveness, by approaches that are not cross-cause.

Relatedly, this approach is less susceptible to path dependencies, for instance, one in which the EA movement's initial way of carving up the landscape continues to drive thinking beyond the point where it is useful.

Movement Building

Attracts impact-driven funders:

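It may attract more support from givers who want to prioritize impact-driven research, rather than projects that fit neatly into a particular area of their interest. Such funders may especially value insights that aren't obscured by differences in measurement units or ethical considerations.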

Comparability of Outputs

Promotes establishing general value frameworks:

While this type of work can be more challenging, it forces researchers to navigate difficult trade-offs and compare apples and oranges, which can lead to progress in decision theory, ethics, and other fields that make cross-cause prioritization possible.

Decision-Making Support

Better accommodates ethical pluralism:

It allows for consideration of multiple ethical perspectives and worldviews. For example, some donors may prioritize long-term existential risks, while others may focus on present-day human suffering. Cross-cause work makes those trade-offs explicit and can promote conversations between philanthropic groups with a disparate set of values and goals.

Cross-Cause Prioritization Weaknesses and Potential Pitfalls

Comparability of Outputs

Extra assumptions:

In cross-cause prioritization (e.g. comparing malaria nets to AI safety research), the context constantly shifts, and often drastically. Further assumptions are needed to meaningfully compare disparate interventions.

Metric inconsistency:

In cross-cause prioritization, comparing health-related DALYs to animal welfare metrics (like welfare-adjusted life years) or to existential risk reduction (which may rely on entirely different proxies, such as the reduction in the probability of existential catastrophe) introduces methodological complexities.
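The extra assumptions this requires can be made explicit with a small sketch. To compare a health intervention measured in DALYs with an animal welfare intervention measured in welfare-adjusted life years (WALYs), one needs an assumed exchange rate between the two metrics. The figures and the `walys_per_daly_equivalent` parameter are hypothetical; the point is only that the ranking flips depending on that normative assumption.

```python
# Hypothetical cost-effectiveness per $1,000; illustrative only.
health_dalys_averted = 2.0   # DALYs averted by the health intervention
animal_walys_gained = 50.0   # WALYs gained by the animal welfare intervention

def compare(walys_per_daly_equivalent: float) -> str:
    """Return which intervention ranks higher once WALYs are converted into
    DALY-equivalents at the given, assumed exchange rate (a normative choice,
    e.g. about moral weights, rather than an empirical measurement).
    Ties at exactly the breakeven rate are reported as "animal"."""
    animal_in_dalys = animal_walys_gained / walys_per_daly_equivalent
    return "health" if health_dalys_averted > animal_in_dalys else "animal"

# Exchange rate at which the two interventions are judged equally good.
breakeven_rate = animal_walys_gained / health_dalys_averted

for rate in (10, 100):
    print(rate, compare(rate))  # 10 animal, then 100 health
print(breakeven_rate)           # 25.0
```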

Disciplinarity Advantages

Less institutional expertise:

Cross-cause comparisons often rely on theoretical models or forecasts due to the absence of pre-existing frameworks that can accommodate multiple causes (especially newer and promising future causes) and high-quality empirical evidence (e.g. estimating the impact of AI alignment research on existential risk reduction).

Harder to specialise:

Cross-cause work requires a variety of skills. It might be possible for researchers to specialise in relevant ways but specialisation seems harder at first glance.

Movement Building

Potential for reputational risks:

The speculative and contentious nature of cross-cause comparisons could lead to reputational risks for organizations. For example, if an organization deprioritizes a widely supported cause (like poverty alleviation) in favor of a less publicly understood intervention, it could face backlash from stakeholders, donors, and the general public.

Decision-Making Support

Value disagreement and ethical disputes:

People hold diverse ethical views (e.g. longtermism vs. neartermism), which makes it difficult to reach a consensus on which interventions deserve priority. Cross-cause frameworks must navigate these disagreements, often without providing definitive conclusions.

High analytical and knowledge burden:

Deciding how to compare interventions across unrelated cause areas can be especially challenging. Researchers must consider ethical trade-offs, uncertainty, and the potential for model errors. This is especially true of more speculative areas.

There's a high burden of knowledge in cross-cause prioritization, both empirical and social. You have to stay up to date on research in many fields, rather than just one. You also have to know and have working relationships with many more people and organizations, and be comfortable switching contexts, which might be a practical challenge.

Risk of ‘over-abstracting’ real-world issues:

While cross-cause prioritization aims for a holistic view, it may inadvertently abstract away important context-specific details, reducing the practical relevance of the research.

Cause Prioritization Strengths

Decision-Making Support

Permits fewer comparisons:

There are fewer causes than interventions, so the target list is shorter. This simplifies the task at hand, freeing up researcher time.

Can be more tractable:

A potentially decisive consideration: it may be easier to do prioritization research at the level of causes (e.g. thinking about the value of solving problems) than thinking about an intervention’s impact, especially for more speculative areas. For example, it might be easier to sketch out how much the world would benefit from preventing risky AI technologies than to know how much a particular intervention would do towards that. A relevant analogy is modeling climate vs. modeling weather.

That said, this is tentative. The reverse can be true, and practical considerations should ideally be taken into account in cause prioritization work.

Promotes rigorous methodological development:

Similarly to how cross-cause work promotes the development of general value frameworks, the process of comparing causes requires the development of frameworks, metrics, and criteria that improve prioritization methods overall.

Enables cause diversification:

For cross-cause prioritization to enable altruistic organizations to spread risk across multiple causes, cause prioritization must first decide how to define these causes.

Responsiveness to Evidence

Encourages holistic thinking:

We might sometimes miss the forest for the trees. Keeping a distant view helps us appreciate the broader landscape of outcomes rather than getting bogged down in short-term details.

Holistic thinking is probably the most adaptable when new information comes to light or circumstances change.

Helps discover neglected problems:

By scanning a broad landscape of causes, this type of work can highlight causes that are neglected but have high impact potential, directing resources to underfunded areas that might have been overlooked.

It may also identify problem areas early since it isn’t tied to existing causes (as in within-cause prioritization) or existing interventions (as in cross-cause prioritization).

Movement Building

Attracts impact-driven funders:

Just like cross-cause prioritization, it may attract more support from givers who want to prioritize impact-driven research, rather than projects that fit neatly into a particular area of their interest.

Enables within-cause prioritization:

Insofar as a movement wants to do within-cause prioritization and enjoy the strengths of that approach, it needs to know what areas are highly impactful first. Cause prioritization is this essential first step.

Cause Prioritization Weaknesses and Potential Pitfalls

Can miss the fine print:

Cause prioritization can fall into abstraction about causes, ignoring variation at the level of intervention (e.g. in an ITN analysis of a general problem). It may fail to investigate the details that precisely make the difference between an opportunity being high or low impact.

By overly focusing on which problems are potentially most important, it may underestimate the cost of building infrastructure to tackle such problems. In other words, there might be causes that seem very promising but are very hard to make progress on without large investments in building capacity and infrastructure. In the end, this could make them less cost-effective than their counterparts, which were originally deemed less pressing. Similarly, it might be blind to the value of infrastructure that increases the efficacy of interventions in more mainstream areas and their ability to absorb more funding.

Decision-Making Support

Particularly high stakes:

If cause prioritization goes wrong, it can lead to the dismissal of entire classes of interventions and deprioritization of key problems. By contrast, in intervention prioritization work, mistakes in rankings aren’t as problematic unless they are systematic – and even then they might not be of this scale.

Difficulty communicating abstract conclusions:

Since cause prioritization often deals with broad, abstract causes (like ‘AI alignment’), it can be challenging to clearly communicate the rationale for prioritizing one area over another to the general public, donors, or policymakers.

This is especially challenging since there are bound to be worldview disagreements about what should be included and excluded in determining cause rankings.

High analytical burden:

This weakness is shared with cross-cause work. Comparing radically different causes can be especially challenging. Researchers must consider ethical trade-offs, uncertainty, and the potential for model errors.

This is particularly true for more speculative areas where there is less empirical data and more reliance on abstract reasoning and modeling.

Susceptible to vague intuition jousts:

At its best, cause prioritization can lead to the beneficial development of frameworks, metrics, and criteria that improve prioritization methods overall. At its worst, and sometimes more commonly, it just leads to lots of intuition-jousting between vague qualitative heuristics.

Responsiveness to Evidence

Idiosyncrasies can lead to dismissal of entire causes:

Because of these high stakes, idiosyncrasies in work at this level can have long-term consequences. If flawed assumptions underpin rankings, entire causes could be dismissed.

This could be particularly problematic if a specific cause area becomes wrongly prioritized early on and the community becomes entrenched, making it difficult to reallocate large sums of resources even if evidence shifts.

Deceptively robust to evidence:

It may be intuitive that research at the level of causes will largely concern worldview questions rather than nitty-gritty details. Additionally, worldview questions are more robust to changes in the world and tend to be recalcitrant.

Still, there are bound to be many unknowns in this type of abstract work. Cause prioritization still requires researchers to update their impact analyses as the world shifts and we learn more about it. The same is true for abstract tools. Researchers should revisit cause rankings as better ethical and decision-theoretic frameworks are developed.

Though updating is valuable for all types of prioritization, it is especially important at the level of causes, in cases where pivotal shifts in evidence could drastically affect the rankings. For example, certain key developments in the field of transformative AI could make that entire area much more or less important.

While the previous paragraph suggests an ordering of intervention groups based on overall impact, it is worth noting that some view cause areas differently. Many see the three major cause areas — global health, animal welfare, and catastrophic risks — as clusters of altruistic opportunities reflecting fundamentally different values rather than merely sets of interventions (that ought to be ranked). In practice, however, people often favor certain causes and implicitly make trade-offs between them according to their values, resulting in an informal cause ranking. Additionally, some individuals express uncertainty about the relative importance of these values and therefore advocate for diversification across causes as a hedge. Open Philanthropy’s explicit framing of these three cause areas as driven by uncertainty over values is a clear example of this perspective.

Throughout this piece, rankings should be thought of as cardinal, not merely ordinal. That is, we use ‘ranking’ to mean more than just ordering causes or interventions from highest to lowest value: they are also to be evaluated and positioned on a scale.
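A small hypothetical example shows why the cardinal reading matters. The two scorings below produce the same ordinal ranking but disagree sharply on how much better the top option is than the runner-up, which is what matters when, for instance, weighing whether to switch resources between them. All names and scores are illustrative.

```python
# Two hypothetical cardinal scorings that share the same ordinal ranking.
scores_1 = {"intervention_A": 100.0, "intervention_B": 95.0, "intervention_C": 10.0}
scores_2 = {"intervention_A": 100.0, "intervention_B": 10.0, "intervention_C": 5.0}

def ordinal_ranking(scores):
    """Order options from highest to lowest score, discarding magnitudes."""
    return sorted(scores, key=scores.get, reverse=True)

def ratio_top_to_second(scores):
    """How many times better the top option is than the runner-up."""
    top, second = sorted(scores.values(), reverse=True)[:2]
    return top / second

print(ordinal_ranking(scores_1) == ordinal_ranking(scores_2))  # True
print(round(ratio_top_to_second(scores_1), 2))  # 1.05
print(ratio_top_to_second(scores_2))            # 10.0
```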

There could be more layers. For example, there could be worldviews (e.g. short-termist and animal-focused, short-termist and human-focused, longtermist and suffering-focused, etc.), then causes as they arise in each worldview, and finally interventions as they arise in each cause. This is a natural alternative, but we have opted for the above framework for this post.

It should be emphasised that the estimations are rough and not necessarily fully accurate or up to date. The list of organizations is meant as an illustration and by no means exhaustive of all the relevant efforts in this space.

GiveWell’s cross-cutting team is the closest to doing cross-cause flavoured research. However, as expected, so far their work has focused on cutting across subareas within global health and development, and thus falls into WCP for the purposes of the categories here. We’ve excluded the research operations team from the FTE calculations. All the other organizations’ figures are as of March 2025, unless specified.

Rethink Priorities is also the fiscal sponsor of the Institute for AI Policy and Strategy. While prioritization is only a subset of their portfolio, we include a WCP estimate of 15 FTEs on their behalf.

See complete calculations and details here. Figures taken from publicly available data and from superficial discussions with GPI about these estimates.

Our figures are drawn from public information available through their website, especially https://www.openphilanthropy.org/team/ and a brief discussion with them. Our estimates are rough, and stem from our best understanding of the organization. In particular, several team members often wear multiple hats, meaning that the actual full-time equivalent numbers allocated to each category might vary in either direction. Here is an older but potentially relevant link to CP: https://www.openphilanthropy.org/research/update-on-cause-prioritization-at-open-philanthropy/.

Our estimates are rough and reflect our best understanding of the organization. They are based on information available on their website, especially https://www.longview.org/about/#team, and on a quick clarification with them.

This captures institutional efforts but does not reflect individual prioritization, which might shift the balance somewhat. Individual efforts may, of course, be more informal; indeed, it seems plausible that most cause prioritization done by the community consists of informal individual judgments.

Common EA cause prioritization frameworks, such as the ITN (importance, tractability, neglectedness) framework, often explicitly consider ‘Tractability’. However, when applied to a whole cause area rather than to specific interventions, such assessments often rely on abstract or heuristic judgments of in-principle tractability, rather than on identifying specific interventions or opportunities that are tractable and cost-effective.
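For concreteness, the ITN framework is often written (for example by 80,000 Hours) as a product of three factors whose units cancel to give good done per extra dollar. The minimal Python sketch below assumes that multiplicative form; the function name and every input are hypothetical. It illustrates the concern above: at the cause level, tractability enters as a single in-principle guess, and the output scales proportionally with that guess.

```python
def itn_value_per_dollar(importance: float, tractability: float,
                         current_funding: float) -> float:
    """Marginal good done per extra dollar, ASSUMING the multiplicative ITN form.

    importance      -- good done per 1% of the problem solved
    tractability    -- % of the problem solved per 1% increase in resources
    current_funding -- dollars already going to the problem
    """
    if current_funding <= 0:
        raise ValueError("current_funding must be positive")
    # Neglectedness: % increase in resources bought by one extra dollar.
    neglectedness = 100.0 / current_funding
    return importance * tractability * neglectedness


# Invented cause-level inputs. Here tractability is one heuristic guess about
# the whole cause, not a property of any concrete, identified intervention.
optimistic = itn_value_per_dollar(1e6, tractability=0.5, current_funding=1e8)
pessimistic = itn_value_per_dollar(1e6, tractability=0.05, current_funding=1e8)

# A tenfold change in the tractability guess moves the estimate tenfold.
print(optimistic, pessimistic)
```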

That said, the fact that we ultimately want to prioritize interventions across causes does not immediately imply that the best decision process for achieving this is to attempt cross-cause prioritization directly. This is why all of the pros and cons of the different approaches to prioritisation outlined here remain live considerations.

Some considerations do not point for or against a particular type of prioritization work. For example, discovering neglected or emerging problems is not automatically more or less likely when doing cross-cause research; its likelihood may fall somewhere between that of WCP and CP work.

In the scenario we illustrated, variation is high at the intervention level and low at the cause level, for all causes. However, even when variation is very high only within certain causes, cross-cause prioritization at the level of interventions may still be recommended, since the highest-impact interventions might be found within a high-variation cause even if that cause is, on average, lower impact than other causes.
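A minimal Python sketch of this case, using invented numbers for two hypothetical causes: `cause_a` has the higher average, while `cause_b` varies much more and contains the single best intervention. Comparing causes by their averages picks `cause_a`; comparing all interventions on one scale picks `b3`. All names and values are placeholders.

```python
from statistics import mean

# Invented cost-effectiveness estimates (arbitrary units) for interventions
# in two hypothetical causes.
interventions = {
    "cause_a": {"a1": 10.0, "a2": 11.0, "a3": 12.0},  # low within-cause variation
    "cause_b": {"b1": 1.0, "b2": 2.0, "b3": 24.0},    # high within-cause variation
}

# Cause-level view: summarise each cause by its average intervention.
cause_means = {cause: mean(values.values()) for cause, values in interventions.items()}
best_cause = max(cause_means, key=cause_means.get)

# Intervention-level, cross-cause view: compare every intervention directly.
all_interventions = [
    (value, cause, name)
    for cause, values in interventions.items()
    for name, value in values.items()
]
top_value, top_cause, top_intervention = max(all_interventions)

# cause_a wins on average, yet the best single intervention sits in cause_b.
print(cause_means)
print(best_cause, top_intervention, top_cause)
```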

Introduction: Why prioritize? Have we got it right?

Arguably, a key, if not the key, contribution of Effective Altruism is that it helps us prioritize opportunities for doing good. After all, answering EA’s central question, ‘How do we do the most good?’, requires just that: comparing the value of different altruistic efforts.

Prima facie, we might wonder why we need to consider different levels of prioritization at all. Why not simply estimate the cost-effectiveness of every possible altruistic project, regardless of cause area, and then fund the most impactful? Yet, in practice, EAs don’t tend to do this. Instead, much EA prioritization work has focused on cause prioritization, and, as we argue below, most current EA prioritization work is within-cause prioritization.
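The cause-agnostic procedure in this question can be sketched as a simple greedy allocation. The minimal Python example below rests on stated assumptions: each hypothetical project has a known cost and a point estimate of value, projects are funded in order of value per dollar until the budget runs out, and the cause each project belongs to is carried along but never used. Names and figures are invented, and greedy selection by ratio is a heuristic, not a guaranteed optimum when projects must be funded all-or-nothing.

```python
from typing import NamedTuple


class Project(NamedTuple):
    name: str     # hypothetical project label
    cause: str    # recorded, but deliberately ignored when ranking
    cost: float   # dollars needed to fund the project in full
    value: float  # estimated good done, in arbitrary units


def fund_greedily(projects: list[Project], budget: float) -> list[str]:
    """Fund projects by value per dollar, regardless of cause area."""
    funded = []
    remaining = budget
    for project in sorted(projects, key=lambda p: p.value / p.cost, reverse=True):
        if project.cost <= remaining:  # skip anything we can no longer afford
            funded.append(project.name)
            remaining -= project.cost
    return funded


projects = [
    Project("p1", "cause_a", cost=50.0, value=400.0),  # 8 units per dollar
    Project("p2", "cause_b", cost=30.0, value=300.0),  # 10 units per dollar
    Project("p3", "cause_c", cost=40.0, value=200.0),  # 5 units per dollar
    Project("p4", "cause_a", cost=20.0, value=120.0),  # 6 units per dollar
]
print(fund_greedily(projects, budget=100.0))
```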

Indeed, as of 2025, most key organizations and actors in Effective Altruism operate within a framework of three broad categories of problems, or ‘cause areas’ as EAs call them: Global Health and Development, Animal Welfare, and Global Catastrophic Risks.[2] [3] (See, for example, Open Philanthropy’s focus areas, the four EA Funds (the fourth being ‘EA Infrastructure’), and this 80,000 Hours article on allocation across issues.[4])

This particular way of prioritizing altruistic projects, splitting interventions into cause areas and ranking them, is only one of several approaches.[5] This piece seeks to explicitly put on the table what could go wrong with this and other types of prioritization work that we observe in the Effective Altruism movement.

We think each type has its own shortcomings, and that the types may well complement each other under the right circumstances. Failing to appreciate these shortcomings, and so backing a single horse, whether unintentionally or through misjudgment, would therefore be an error. By focusing primarily on within-cause prioritization and sidelining the others, the EA community may well be radically misallocating its prioritization efforts.

The types of prioritization

Prioritization can take many shapes, but three main types emerge when we ask what a piece of prioritization work ranks (causes or interventions) and within which domain it operates (a single cause or across causes).

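Cause Prioritization (CP): work that ranks causes.[6]

Within-Cause (Intervention) Prioritization (WCP): work that ranks interventions within a single cause.

Cross-Cause (Intervention) Prioritization (CCP): work that ranks interventions across multiple causes.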
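The three types differ in what gets ranked (causes or interventions) and over which domain (one cause or several). As a rough illustration, the minimal Python sketch below uses invented scores for placeholder causes and interventions (`cause_a`, `a1` and so on are hypothetical) and keeps rankings cardinal by retaining each score rather than just the order.

```python
Ranking = list[tuple[str, float]]

# Invented cause-level estimates (arbitrary units), e.g. from an abstract,
# cause-wide assessment. Placeholder names, not real causes.
CAUSE_ESTIMATES: dict[str, float] = {"cause_a": 8.0, "cause_b": 12.0, "cause_c": 10.0}

# Invented intervention-level estimates, grouped by cause.
INTERVENTION_ESTIMATES: dict[str, dict[str, float]] = {
    "cause_a": {"a1": 9.0, "a2": 4.0},
    "cause_b": {"b1": 14.0, "b2": 6.0},
    "cause_c": {"c1": 11.0, "c2": 7.0},
}


def rank(scores: dict[str, float]) -> Ranking:
    """Cardinal ranking: keep each score, ordered from highest to lowest."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


def cause_prioritization(causes: dict[str, float]) -> Ranking:
    """CP: rank causes against each other."""
    return rank(causes)


def within_cause_prioritization(
    interventions: dict[str, dict[str, float]], cause: str
) -> Ranking:
    """WCP: rank interventions inside one already chosen cause."""
    return rank(interventions[cause])


def cross_cause_prioritization(interventions: dict[str, dict[str, float]]) -> Ranking:
    """CCP: rank interventions from all causes on one common scale.

    Assumes intervention names are unique across causes.
    """
    pooled = {
        name: value
        for per_cause in interventions.values()
        for name, value in per_cause.items()
    }
    return rank(pooled)


print(cause_prioritization(CAUSE_ESTIMATES))
print(within_cause_prioritization(INTERVENTION_ESTIMATES, "cause_a"))
print(cross_cause_prioritization(INTERVENTION_ESTIMATES))
```

Here CCP places `a1` third overall, behind the best interventions in both `cause_b` and `cause_c`, a comparison that WCP within `cause_a` cannot make by construction.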

Although EA invests substantial resources in prioritization, there has been little explicit discussion of how to balance these types. Ideally, we’d know how many resources EA should allocate to each. With that benchmark in hand, we could evaluate the current allocation and how to bring it closer to the ideal.

Providing a full answer to the question of balance is well beyond the ambition of this post, but we will still try to better grasp the strengths and weaknesses of each prioritization type. Since there is room for reasonable disagreement about the benefits of each kind of prioritization, EA should at least take all three seriously. In fact, EA should arguably invest substantial resources in each of them, and, to spoil the next section, it currently doesn’t.

A snapshot of EA

A quick reading of EA history suggests that when the movement was born, it focused primarily on identifying the most cost-effective interventions within pre-existing, cause-specific areas (e.g. the early work of GiveWell and Giving What We Can). Subsequently, it paid increasing attention to additional causes and evaluated which of these (Global Health, Animal Welfare, or Global Catastrophic Risks) was most promising. However, as we shall see below, almost all prioritization work in the community takes place at the level of within-cause prioritization, with little devoted to cause prioritization and even less to cross-cause prioritization.

In this section we consider some salient organizations in EA and provide a quick classification of their activities into different types of prioritization.[7]

In 2022, GiveWell directed $439 million and, as of January 2025, it employed 77 people.[8] The key figure for our purposes is the time spent on prioritization work: approximately 39 full-time equivalents (FTEs), based on the number of researchers listed on its website (distributed among the Commons, Cross-cutting, Malaria, New Areas, Nutrition, Research Leadership, Vaccines, and Water & Livelihoods teams, but excluding Research Operations). Because GiveWell focuses on global health, this figure counts towards within-cause prioritization.[9]

Rethink Priorities engages in research across various EA causes. All research teams focus on prioritization work within specific causes, except for the Surveys Team – which does 85% within-cause (i.e. 1.9 FTEs) and 15% cross-cause prioritization (i.e. 0.3 FTEs) – and the Worldview Investigations Team, with 4.9 FTEs across CCP (60%, i.e. 3 FTEs) and CP (40%, i.e. 1.9 FTEs). The rest adds up to 29 FTEs on WCP, including 7 FTEs for Animal Welfare and 7 FTEs for Global Health and Development.[10]

The Global Priorities Institute doesn’t fit neatly into any single category; it addresses foundational questions relevant to all levels of prioritization. Some of its work assumes worldviews such as longtermism and focuses on issues such as risks from artificial intelligence. However, GPI generally doesn’t compare specific interventions, or indeed causes, by name; instead, it provides considerations useful for prioritization across the board. With 16 full-time researchers and 19 affiliates or scholars, GPI can be thought of as doing (sometimes indirectly) a mix of all three: mostly CP (15.8 FTEs), some WCP (4.2 FTEs), and almost no CCP (0.6 FTEs).[11]

Open Philanthropy disburses roughly $750 million annually and now employs nearly 150 people. Its work spans object-level analysis, grantmaking, and prioritization research. Within its prioritization efforts, some teams explicitly conduct within-cause prioritization: approximately 7 people focus on Global Health cause prioritization, 5 on Global Catastrophic Risks cause prioritization, and 2 on Farm Animal Welfare research. On the grantmaking side, most of the work falls to about 25 people whose principal responsibility is Global Catastrophic Risks, 15 for Global Health, and 5 for Farm Animal Welfare. In total, we estimate 59 FTEs on WCP and 1.5 FTEs on CP (reflecting, for example, internal cause prioritization exercises and needs), including some participation from leadership.[12]

Ambitious Impact (formerly Charity Entrepreneurship) is dedicated to enabling and seeding effective charities through its research process and incubation program. Over the past year, it has allocated roughly 4 FTEs to within-cause prioritization, including 3 full-time permanent research team members, with additional support from fellows and contractors on a fluctuating basis. In contrast, only around 0.1 to 0.2 FTEs were spent on cause prioritization, reflecting its emphasis on deeper within-cause reports and analyses.[13]

80,000 Hours dedicated most of its prioritization capacity to cause prioritization: roughly 3 FTEs, primarily from the web team, which researches problems and recommends cause rankings, though this fell to about 2 FTEs in 2024 after one researcher departed. This figure included the team lead but excluded, for instance, the content associate. A further 1 FTE came from the collective effort of the leadership team and other staff investigating questions such as the importance of AI safety, bringing the total for CP to 3 FTEs. For cross-cause prioritization, we estimated about 1 FTE for the web team’s comparisons of neglectedness, tractability, and importance. Finally, about 1 FTE went to within-cause prioritization through a combination of advisory work and the job board (e.g. determining which roles at AI labs should appear on it). However, given 80,000 Hours’ recent announcement that it is going all-in on AI, we classify this total capacity of 5 FTEs as within-cause.[14]

Longview Philanthropy works with donors to increase the impact of their giving. Three full-time staff focus on within-cause prioritization in artificial intelligence, and two on within-cause work on nuclear risk (5 FTEs in total). Additionally, a small portion, about 0.05 FTEs, is devoted to cause prioritization: the content team and leadership periodically assess resource allocations, such as how best to deploy the Emerging Challenges Fund among artificial intelligence, biosecurity, and nuclear risk initiatives.[15]

EA Funds supports organizations and projects within the Effective Altruism community through its grantmaking and funding prioritization activities. It carries out a range of prioritization work across its four funds: Global Health and Development, Animal Welfare, Long-Term Future, and EA Infrastructure. Most team members hold full-time positions elsewhere alongside their EA Funds role; by contrast, the current chair of the Animal Welfare Fund is the only full-time member dedicated exclusively to that fund. Overall, EA Funds’ combined capacity is estimated at 7 FTEs, which we classify as within-cause prioritization, treating each fund as a distinct cause area. We assigned an additional 0.1 FTEs to cause prioritization for the strategic, big-picture thinking that the leadership and advisors perform.[16]

A summary of the relevant figures for each organisation is presented below.

[Table: Prioritization (FTEs) by organization]

It’s striking that, at least for the organizations above, the revealed effort devoted to within-cause prioritization is about 9 times that allocated to cause prioritization and cross-cause prioritization combined.[17] Perhaps practical considerations justify this distribution, but it is also possible that it simply isn’t the optimal allocation of effort among the different types of prioritization work. We consider the strengths and weaknesses of each type next.

The Types of Prioritization Evaluated

This section is a summary of our more comprehensive breakdown of the strengths and pitfalls of each type, available in the appendix.

Cause Prioritization

Cause prioritization involves comparing broad cause areas (like global health vs. existential risk) to figure out which problems most deserve attention. Since there are fewer causes than there are interventions, a shorter target list simplifies the task at hand, freeing up researcher time.

One specific benefit of prioritizing at the level of causes is that it can highlight neglected but high-potential areas that might otherwise be overlooked. If people try to prioritize interventions directly (rather than causes), they risk missing entire large areas because those areas are not salient. For example, people might prioritize every neglected tropical disease they can think of and ignore AI entirely. Thus, prioritizing at a higher level of abstraction (that of causes) can make it less likely that we miss large areas of high-impact interventions that were not previously salient.