My apologies, I don't have a solution to provide and I don't really buy the insurance idea. However, I wonder if the collapse of Moltbook is a precursor to the downfall of all social media, or perhaps even the internet itself (is the Dead Internet Theory becoming a reality?). I expect Moltbots to switch massively to human social media and other sites very soon. It’s not that bots are new, but scale is a thing. More is different.

Something interesting is happening on Moltbook, the first social network "for AI agents".

what happened

If you haven't been following obsessively on Twitter, the story goes something like this.

Along the way, people were predictably screaming "it's an AI, it only does what it is prompted to do".

But that's not what I find interesting. What I find interesting is the people expressing disappointment that the "real AI" has been drowned out by "bots".

And let's be clear here. What makes something a "real Clawdbot"? Clawdbot is, after all, a computer program following the programming provided to it by its owner.

Consider which of the following would be considered "authentic" Clawdbot behavior:

In other words, we as a society were perfectly okay with AI insulting us, threatening us, scheming behind our backs or planning a future without us.

But, selling crypto. That's a bridge too far.

the lesson

Motly and Moltbook is fundamentally a form of performance art by humans and for humans. Despite the aesthetic of "giving the AI its freedom", the real purpose of Moltbook was to create a place where AI agents could interact within the socially accepted bounds that humanity has established for AI.

AI is allowed to threaten us, to scheme against us, to plan our downfall. Because that falls solidly within the "scary futuristic stuff" that AI is supposed to be doing. AI is not allowed to shill bitcoin, because that is mundane reality. We already have plenty of social networks overrun by crypto shills, we don't need one more.

At this point, you might expect me to say something like: "The AI Alignment community is the biggest source of AI Misalignment by promulgating a view of the future in which AI is a threat to humanity."

But that's not what I'm here to say today.

Instead I'm here to say "The problem of how do we build a community of AI agents that falls within the socially accepted boundaries for AI behavior is a really interesting problem, and this is perhaps our first chance to solve it. I'm really happy that we are encountering this problem now, when AI is comparatively weak, and not later, when AI is super-intelligent."

So how do we solve the alignment problem in this case?

In the present, the primary method of solving the problem of "help, my social network is being overrun by crypto bots" is to say "social networks are for humans only" and implement filters (such as captchas) that filter out bots. In fact, this solution is already being implemented by Moltbook.

This is, in fact, one of the major ways that AI will continue to be regulated in the near future.

By making a human legally responsible for the AI's actions, we can fall back on all of the legal methods we use for regulating humans to regulate AI. The human legal system has existed for thousands of years and evolved over time to cope with a wide variety of social ills.

But, at a certain point, this simply does not scale. Making a human individually responsible for every action by AIs on a social network for AIs defeats the point (AI is supposed to save human labor, after all).

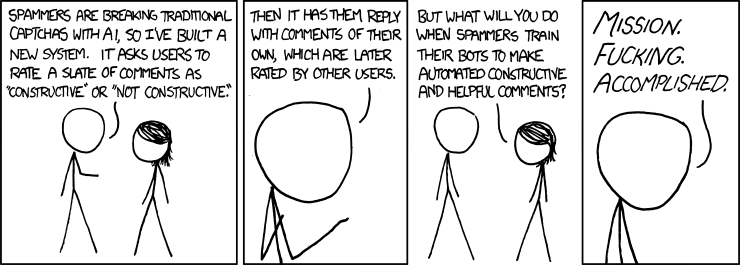

Unfortunately, an alternative "are you Clawdbot" test won't work either. You might think at first that simply requiring an AI to pass an "intelligence threshold" test (which presumably Clawdbot can pass but crypto spam bots can't) might help. But nothing stops the programmer from using Claude to pass the test and spamming crypto afterwards.

Another approach which seems promising but won't actually work is behavioral analysis.

The problem here is economics.

Even if it's possible (and I'm not sure it currently is) to build an AI that can answer the question "does this post fall within the socially acceptable range for our particular forum?", such an AI will cost orders of magnitude more than building a bot that repeatedly spams "send bitcoin to 0x...."

One potentially promising solution is Real Fake Identities. This is, again, something that we already do on existing social media sites. The user of the forum pays a small fee and in exchange they receive a usable identity. This breaks the asymmetry in cost between attackers and defenders: we can simply set the price of an identity higher than what it costs to run our "identify crypto bot" AI.
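The fee argument can be made concrete with a small back-of-the-envelope model. This is only a sketch under made-up assumptions: the function names, the per-post costs, and the "posts before ban" figure are all hypothetical and are not taken from Moltbook or any real site. It shows two separate conditions: the fee has to cover the defender's screening cost, and it also has to exceed what a spammer expects to earn from one identity.

```python
def min_identity_fee(screening_cost_per_post: float, posts_screened_per_identity: int) -> float:
    """Smallest fee that covers the defender's cost of screening one identity's posts."""
    return screening_cost_per_post * posts_screened_per_identity


def spammer_profit(fee: float, revenue_per_post: float,
                   cost_per_post: float, posts_before_ban: int) -> float:
    """Spammer's expected profit from one paid identity before it gets banned."""
    return posts_before_ban * (revenue_per_post - cost_per_post) - fee


def deters_spam(fee: float, revenue_per_post: float,
                cost_per_post: float, posts_before_ban: int) -> bool:
    """True when buying an identity is not profitable for the spammer."""
    return spammer_profit(fee, revenue_per_post, cost_per_post, posts_before_ban) <= 0


if __name__ == "__main__":
    # All numbers below are hypothetical, chosen only to illustrate the asymmetry.
    print("fee needed to cover screening:", min_identity_fee(0.02, 50))
    print("spammer profit at an $8 fee:", spammer_profit(8.0, 0.50, 0.001, 20))
    print("does an $8 fee deter spam?", deters_spam(8.0, 0.50, 0.001, 20))
    print("does a $10 fee deter spam?", deters_spam(10.0, 0.50, 0.001, 20))
```

In this toy model a fee can comfortably cover the screening cost and still fail to deter a bot whose posts earn enough before the ban lands, which is why the two conditions are kept separate.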

One might ask, "what is the final evolved form of real fake identities?" The goal of the AI alignment movement, after all, is not to create a social media forum where Clawdbots can insult their humans. The goal is to Capture the freaking Lightcone. And for this, "pay $8 a month to prove you're not a crypto shill" isn't going to cut it. (In fact, $8/month is already profitable for plenty of bots.)

The answer is insurance markets. Imagine that we are releasing a team of AIs to terraform a planet together. We have some fuzzy idea of what we want the AI to do: make the planet habitable for human life. And some idea of what we want it not to do: release a cloud of radioactive dust that makes the surface uninhabitable for 99 years. We write these goals into a contract of sorts, and then require each of the AIs to buy insurance which pays out a certain amount in damages if they violate the contract. Insurance can only be bought from a highly regulated and trustworthy (yet competitive) marketplace of vendors.

The insurance market transforms the risk (of the potentially misaligned terraforming AI agent) into risk for the (aligned) Insurance Provider. By taking on financial responsibility, the Insurance Company has an incentive to make sure that the Terraforming Agent won't misbehave. Equally importantly, however, the Insurance Companies are (by assumption) aligned and won't try to weasel their way out of the contract (say, by noting that the terraforming contract didn't specifically mention that you aren't allowed to release ravenous hordes of blood-sucking leeches on the planet).
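To see why taking on the financial risk gives the insurer a reason to police the agent, here is a minimal sketch using standard expected-loss pricing. Everything in it is an assumption for illustration: the function names, the loading factor, the violation probabilities, and the dollar amounts are hypothetical, and a real insurer would price far more carefully.

```python
def expected_loss(p_violation: float, damages: float) -> float:
    """Expected payout: probability the agent violates the contract times the damages owed."""
    if not 0.0 <= p_violation <= 1.0:
        raise ValueError("p_violation must be a probability in [0, 1]")
    return p_violation * damages


def premium(p_violation: float, damages: float, loading: float = 0.2) -> float:
    """Premium charged to the agent: expected loss plus a proportional loading for costs and margin."""
    return expected_loss(p_violation, damages) * (1.0 + loading)


def oversight_is_worthwhile(p_before: float, p_after: float,
                            damages: float, oversight_cost: float) -> bool:
    """The insurer gains from monitoring when the drop in expected payout exceeds its cost."""
    saved = expected_loss(p_before, damages) - expected_loss(p_after, damages)
    return saved > oversight_cost


if __name__ == "__main__":
    # Hypothetical numbers: a 1% chance of violation against $1,000,000 in damages.
    print("premium:", premium(0.01, 1_000_000))
    print("monitor at a cost of 5,000?", oversight_is_worthwhile(0.01, 0.002, 1_000_000, 5_000))
    print("monitor at a cost of 9,000?", oversight_is_worthwhile(0.01, 0.002, 1_000_000, 9_000))
```

The incentive only exists if the insurer actually pays out on violations, which is where the assumption that the Insurance Companies themselves are aligned does its work.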

Note that in order for the solution to work:

so, what's next

We should take advantage of the opportunity provided to us (by Moltbook, but more importantly by the current moment) to work hard at actually solving these problems.

We should think carefully about what types of AI societies we are willing to permit in the future (maybe a bunch of AIs threatening to sue "my human" isn't the shining beacon on a hill we should aim for).

Questions, comments?

I'm especially interested in comments of the form "I think that solution X might be relevant here" or "Solution Y won't work because..."