I don't think the hypothetical %-based approach you mention is a good one. You do indeed get exponentially diminishing returns from improving on any one question, but there are other questions of higher "difficulty" that you tend to start improving on once you saturate your current level of difficulty.

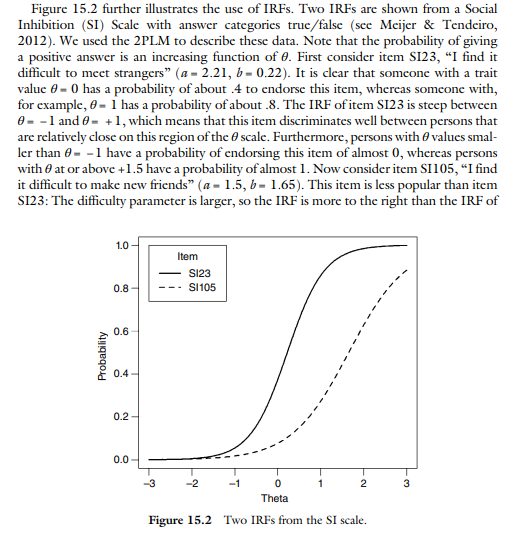

Within psychometrics, this is formally studied under the phrase "item response theory". There, they fit sigmoidal curves to responses to surveys or tests, and as one probability curve flattens out, another curve tends to pick up steam. See e.g. this example (via):
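For a rough numerical feel of the item response theory picture, here is a minimal sketch using the standard two-parameter logistic curve. The three item difficulties, the ability grid, and the function names are hypothetical values chosen for illustration, not fitted to any data.

```python
import math


def p_correct(ability, difficulty, discrimination=1.0):
    """Two-parameter logistic item response curve.

    Returns the probability that a respondent with the given ability
    answers an item of the given difficulty correctly.
    """
    return 1.0 / (1.0 + math.exp(-discrimination * (ability - difficulty)))


# Hypothetical items: easy, medium, hard (assumed values, not real data).
difficulties = [0.0, 4.0, 8.0]


def expected_score(ability):
    """Expected number of items answered correctly at this ability."""
    return sum(p_correct(ability, d) for d in difficulties)


# As ability rises, the easy item saturates near 1 while the harder
# items start to contribute most of the improvement.
for ability in range(0, 13, 2):
    probs = [round(p_correct(ability, d), 3) for d in difficulties]
    print(ability, probs, round(expected_score(ability), 3))
```

In this toy setup, moving from ability 4 to ability 8 barely changes the probability on the easy item, while the probability on the hard item rises from near zero to one half, so the total expected score keeps climbing even though each individual curve flattens.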

Exponentially diminishing returns are what I found in the concrete examples I thought of (e.g. offering insurance policies, betting on events, etc.).

It seems to me that an AI that linearly increased its predictive accuracy on a particular topic would see exponentially diminishing returns.
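One way to make this concrete is the toy calculation below. It is a sketch under two assumptions that are mine rather than anything established here: performance is scored as −log10(error rate), and the "return" is the expected profit per unit staked on an even-odds binary bet. The function names are hypothetical.

```python
import math


def performance(error_rate):
    """Cognitive performance scored as -log10(error rate) (assumed measure)."""
    return -math.log10(error_rate)


def expected_profit_per_unit(accuracy):
    """Expected profit per unit staked on an even-odds binary bet.

    Win 1 with probability `accuracy`, lose 1 otherwise (assumed payoff model).
    """
    return 2.0 * accuracy - 1.0


# Error rates 10%, 1%, 0.1%, 0.01%: each step adds one unit of performance.
error_rates = [10.0 ** -k for k in range(1, 5)]

rows = []
for error_rate in error_rates:
    accuracy = 1.0 - error_rate
    rows.append((performance(error_rate), accuracy, expected_profit_per_unit(accuracy)))

# Gain in expected profit from each successive unit of performance.
gains = [later[2] - earlier[2] for earlier, later in zip(rows, rows[1:])]

for perf, accuracy, profit in rows:
    print(f"performance={perf:.1f} accuracy={accuracy:.4f} profit={profit:.4f}")
print("gains per step:", [round(g, 6) for g in gains])
```

Under these assumptions, each extra unit of performance buys about a tenth of the gain the previous unit bought. Whether other payoff structures behave the same way is exactly the open question.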

The question is if this return on investment of predictive accuracy generalises.

Suppose instead that the agent in question were well calibrated and, given any binary question, could assign 90% probability to either the proposition or its negation.

Suppose that accuracy were then raised to 99%.

Then...

How does cognitive/intellectual performance (e.g. as measured by −log(error rate)) translate to real-world capability? Do linear increases in cognitive performance result in linear increases in capability? I don't know. I did think of a way we could maybe investigate that:

I would be very interested in answers to this. This could significantly change my views on how much real-world capability you can buy with increasing cognitive performance.

Of course, predictive accuracy is not the only measure of cognitive performance (in particular, prediction-enhanced finance is not the only way that a superhuman AI could leverage its greater intelligence in the real world).

This could be thought of as a first step toward investigating how cognitive performance translates into capability in the real world. Predictive power is a good starting point because it's a simple and straightforward measure. It's just a proxy, but as a first attempt, I think it's good enough.

Monetary returns seem like a pretty robust measure of real-world capability.