Review

Apparently one or more anonymous users got really excited and ran a bunch of simultaneous searches while I was sleeping, triggering this open tokenizer bug/issue and causing our TransformerLens server to hang/crash. This caused some downtime.

A workaround has been implemented and pushed.

This is great, thanks!

I think the link to your initial post on Neuronpedia might be useful to create better context: Neuronpedia - AI Safety Game

Thanks for the tip! I've added the link under "Exploration Tools" after the first mention of Neuronpedia. Let me know if that is the proper way to do it - I couldn't find a feature on LW for a special "context link" if there is such a feature.

TL;DR: Interactive exploration of new directions in GPT2-SMALL. Try it yourself.

OpenAI recently released their Sparse Autoencoder for GPT2-Small. In this story-driven post, I run experiments and poke around the 325k active directions to see how good they are. The results were super interesting, and I encountered more than a few surprises along the way. Let's get started!

Exploration Tools

I uploaded the directions to Neuronpedia [prev LW post], which now supports exploring, searching, and browsing different layers, directions, and neurons.

Let's do some experiments with the objective: What's in these latent directions? Are they any good?

Test 1: Find a Specific Concept - Red

For the first test, we'll look for a specific concept, and then benchmark the directions (and neurons) to look for monosemanticity and meaningfulness. We want to see directions/neurons that activate on a concept, not just the literal token. For example, a "kitten" direction should also activate for "cat".

For the upcoming Lunar New Year, let's look for the color "red", starting with directions.

On the home page, we type "red" into the search field, and hit enter:

In the backend, the search query is fed into GPT2-SMALL via TransformerLens. The output is normalized, encoded, and sorted by top activation.
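To make the "encode and sort" step concrete, here's a minimal pure-Python sketch. It is not the actual Neuronpedia backend: it assumes the encoder is a ReLU over a linear map, skips the normalization step, and uses tiny made-up vectors in place of real GPT2-SMALL outputs from TransformerLens. The names `encode` and `rank_directions` are hypothetical.

```python
from typing import Dict, List, Tuple


def encode(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Toy encoder (assumed form): ReLU(W @ x + b), one output per direction."""
    return [
        max(0.0, sum(w_i * x_i for w_i, x_i in zip(row, x)) + b)
        for row, b in zip(weights, bias)
    ]


def rank_directions(
    tokens: List[str],
    token_vectors: List[List[float]],
    weights: List[List[float]],
    bias: List[float],
    top_k: int = 25,
) -> List[Tuple[int, float, str]]:
    """Return (direction index, top activation, top token), highest first."""
    best: Dict[int, Tuple[float, str]] = {}
    for token, vec in zip(tokens, token_vectors):
        for idx, act in enumerate(encode(vec, weights, bias)):
            # Keep only the strongest nonzero activation seen for each direction.
            if act > best.get(idx, (0.0, ""))[0]:
                best[idx] = (act, token)
    ranked = sorted(
        ((i, a, t) for i, (a, t) in best.items()),
        key=lambda r: r[1],
        reverse=True,
    )
    return ranked[:top_k]


# Made-up 2-dimensional "model outputs" for a 2-token query.
tokens = ["My", " red"]
token_vectors = [[1.0, 0.0], [0.0, 2.0]]
weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # 3 toy directions
bias = [0.0, 0.5, 0.0]

results = rank_directions(tokens, token_vectors, weights, bias)
for idx, act, tok in results:
    print(idx, act, repr(tok))
```

In this toy run, direction 1 ranks first because of its activation on " red", direction 0 follows because of "My", and direction 2 never fires so it is dropped from the results.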

After a few seconds, it shows us the top 25 directions by activation for our search:

Unfortunately, the top direction results don't look good. Uh-oh.

The top result, 0-MLP-OAI:22631, has a top activating token of "red" in the word "Credentials", which doesn't mean the color red. Clicking into it, we see that it's just indiscriminately firing on the token "red" - in domains, parts of words, etc. It does fire on the color red about 35% of the time, but that's not good enough.

So our top direction result is monosemantic for "the literal token 'red'", but it's not meaningful for the color red. And other top results don't have anything to do with "red".

What went wrong?

Red-eeming Our Search

We searched for "red", and the machine literally gave us "red". Like many other problems that we encounter with computers, PEBKAC. In LLM-speak: our prompt sucked.

Let's add more context and try again: "My favorite color is red."

Adding the extra context gave us a promising result - the second direction has a top activation that looks like it's closer to the color red ("Trump's red-meat base") instead of a literal token match, and its GPT explanation is "the color adjective 'red'".

But the other tokens we added for context are also in the results, and they're not what we're looking for. Results 1, 3, and 4 are about "favorite", "color", and "My", which drowns out other good potential results for "red".

Fortunately, we can sort by "red". Click the bordered token "red" under "Sort By Token":

The result is exactly what we want - a list of directions that most strongly activate on the token "red", in the context "My favorite color is red.". The top activations look promising!
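Here's a small sketch of what sorting by a token might look like, compared with sorting by each direction's strongest activation anywhere in the prompt. The activation values and direction names are made up for illustration, and `sort_by_token` is a hypothetical helper, not Neuronpedia's actual code.

```python
from typing import Dict, List, Tuple


def sort_by_token(
    activations: Dict[str, List[float]],
    tokens: List[str],
    target: str,
) -> List[Tuple[str, float]]:
    """Rank directions by their activation at the position of `target`.

    `activations[name][i]` is the activation of direction `name` on `tokens[i]`.
    """
    position = tokens.index(target)  # first occurrence; ValueError if absent
    ranked = [(name, acts[position]) for name, acts in activations.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


tokens = ["My", " favorite", " color", " is", " red", "."]
# Made-up activation values, for illustration only.
activations = {
    "dir_favorite": [0.1, 9.0, 0.0, 0.0, 0.2, 0.0],
    "dir_red_color": [0.0, 0.0, 0.5, 0.0, 6.0, 0.0],
    "dir_red_literal": [0.0, 0.0, 0.0, 0.0, 4.0, 0.0],
}

# Default ordering: strongest activation on any token in the prompt.
by_max = sorted(activations, key=lambda name: max(activations[name]), reverse=True)

# "Sort By Token": only the activation on " red" counts.
by_red = sort_by_token(activations, tokens, " red")

print(by_max)
print(by_red)
```

With these toy numbers, the "favorite" direction tops the default ordering but falls to last place once we sort by " red", which is the effect we wanted.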

It turns out that the first "Trump's red-meat base" direction, 0-MLP-OAI:16757, isn't the best one. While its top activations do include "red jumpsuit" and "red-light", it also activates on "rediscovered" and "redraw".

The second result, 1-MLP-OAI:22004, is much better. All of its top activations are the token "red" by itself as a color, like "red fruit", "shimmery red", and "red door".

Red-oubling Our Efforts

1-MLP-OAI:22004 is a good find for "red", but we're not satisfied yet. Let's see if it understands the color red without the token "red". To do this, let's test if this direction activates on "crimson", a synonym for red.

Let's open the direction. Then, click the "copy" button to the right of its first activation.

This copies the activation text into the test field, allowing us to tweak it for new tests:

We'll change the highlighted word "red" to "crimson", then click "Test":

It activated! When replacing the token "red" in our top activating text with "crimson", the token "crimson" was still the top activating token. Even though its activation is much weaker than "red", this is a fantastic result that's unlikely to be random chance - especially considering that this activation text was long and had many other colors in it.

We don't always have to copy an existing top activation. It just usually makes sense to copy because the top activation is likely to give you a high activation on modifications, like the word replacement we did here. We can also just type in a test manually. Let's replace the test text with "My favorite color is crimson.":

It still activates, though slightly lower than the copy-paste of the top activation. Neat.

Red-oing It, But With Neurons

We found a monosemantic and meaningful direction for "red". How about neurons?

We'll filter our search results to neurons by clicking Neurons. Here's the result.

The results for neurons are, frankly, terrible. We have to scroll halfway down the results to find neurons about colors:

And clicking into these, they're mostly general color neurons, with the best candidate being 2:553. We do a test to compare it with our previous 1-MLP-OAI:22004:

The direction is clearly better - the difference is night and day. (Or red and blue?)

Conclusion of test 1: We successfully found a monosemantic and meaningful direction for the color red. We could not find a monosemantic red in any neurons.

Test 2: Extract Concepts From Text - The Odyssey

In the first test, we looked for one concept and came up with searches to find that concept. In this test, we'll do the opposite - take a snippet of text, and see how well directions and neurons do in identifying the multiple concepts in that text.

Back at the home page, we'll choose a Literary example search query:

We got randomized into a text snippet about the Odyssey:

Since there are multiple Literary examples it's choosing from, you might have gotten something different. Click here to follow along on our... Odyssey.

The Journey Begins

Like the first test, we want to compare directions and neurons on the criteria of monosemantic and meaningful.

But since there are so many tokens in this text, we'll mostly check for a "good enough" match by comparing the top activating tokens. For example: if both the text and a direction have the top activating token "cat", it's a match. The more matches, the better.
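The "good enough" match check can be sketched in a few lines. This is only an illustration of the counting I did by eye: the helper names and the sample tokens are made up, and it assumes that comparing tokens after stripping whitespace and lowercasing is a fair notion of "same token".

```python
from typing import List


def normalize(token: str) -> str:
    """Strip whitespace and lowercase so ' Cat' and 'cat' compare equal."""
    return token.strip().lower()


def count_matches(text_top_tokens: List[str], record_top_tokens: List[str]) -> int:
    """Count results whose top activating token in our text equals the top
    activating token in the direction's (or neuron's) own activation records."""
    return sum(
        normalize(a) == normalize(b)
        for a, b in zip(text_top_tokens, record_top_tokens)
    )


# One entry per search result: the token that fired in our text, and the
# top token from that result's existing activations (made-up values).
text_top_tokens = [" cat", " insights", " ship"]
record_top_tokens = ["cat", " insight", " Ship"]

matches = count_matches(text_top_tokens, record_top_tokens)
print(f"{matches} out of {len(text_top_tokens)} matched")
```

Note that an exact comparison like this counts "insights" vs "insight" as a miss, so near-hits still need a manual look.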

It's All Greek To Me

Let's start with the search results for neurons.

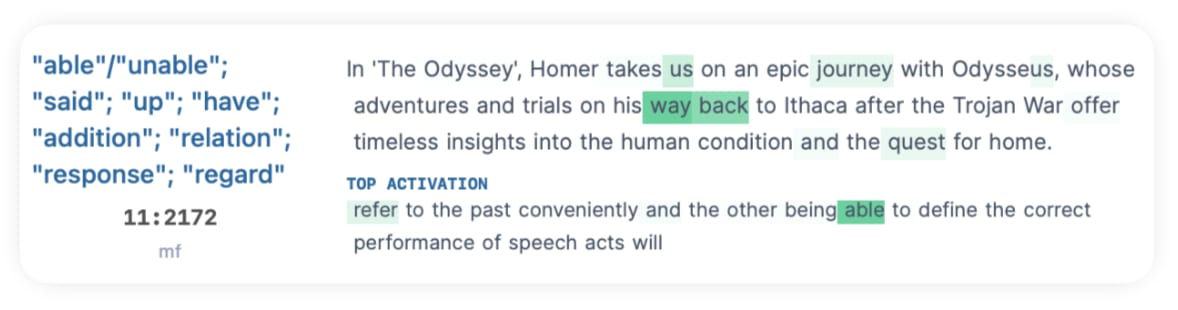

Out of the top 25 neuron results, only four matched. Most either didn't have top activating tokens that matched or resembled the ones in our text, or were highly polysemantic, like 11:2172:

Out of the four token matches, most activated on unrelated tokens as well:

(Note: Thanks to Neuronpedia contributors @mf, @duck_master, @nonnovino, and others for your explanations of these neurons.)

Overall, the top 25 neurons were bad at matching the concepts or words in our text.

Towards Monophemus

Okay, the neurons were a disaster. How about the directions?

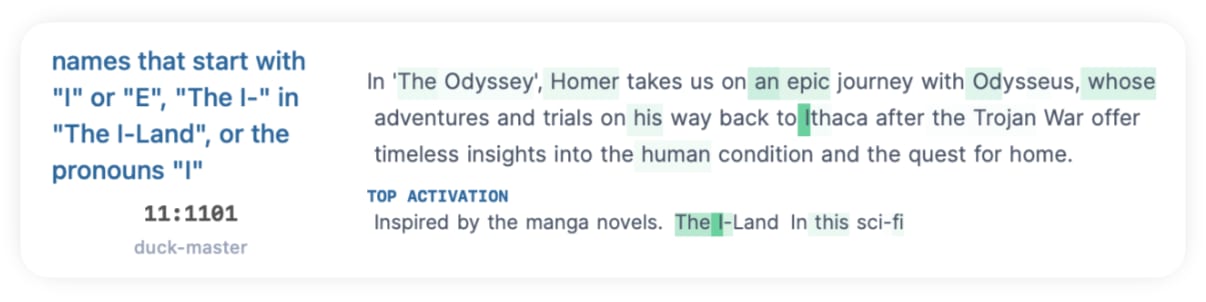

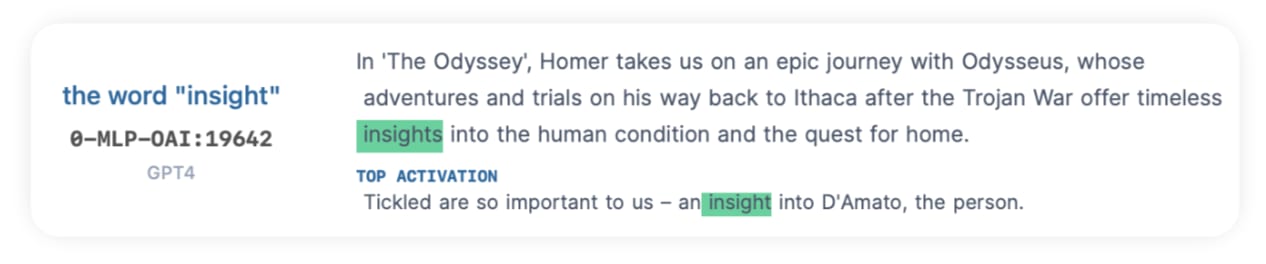

The directions results were stellar. All 25 of the top results were monosemantic and matched exactly, with the exception of "insights" vs "insight" in 0-MLP-OAI:19642:

As we saw in the first test, a non-exact but related match is a good sign that this direction isn't just finding the exact token "insight", but looking for the concept. Let's test 0-MLP-OAI:19642 with the adjective "insightful" and the synonym "intuition":

Both activated - nice! To be sure it's not just finding adjectives, let's check "absurd":

Absurd doesn't activate - so we'll consider this spot-check passed.

Overall, the top 25 directions were simply really good. They're obviously better than neurons. But that's a low bar - just how good are the directions?

I noticed something odd in the top search results - a significant majority of top activations were in layer 0 (22 out of 25). I wanted to see how it did without layer 0, so I clicked the "All Layers" dropdown and selected all layers except layer 0, and reran the search.

From Hero... to Zero?

Surprisingly, the direction results excluding layer 0 were noticeably worse. I counted 22 out of 25 matches, which is three fewer than when we included layer 0.

What accounted for the three non-matches?

It looks like these direction non-matches were the result of two rogue directions and one near-hit, which still isn't bad, especially compared against neurons.

But because excluding layer 0 made the results worse, I was now curious if later layers were even worse. So I checked the results for each layer (using the "Filter to Layer" dropdown) for both directions and neurons:

This was pretty surprising. Even though directions were better than neurons in every layer, directions got noticeably worse in the later layers - and the layer 11 directions were actually pretty bad. Neurons even seemed to fare decently against directions in the middle layers - from layer 6 to layer 9.

I don't know why directions seem to get worse in later layers, or why top directions are usually in layer zero. I'm hoping someone who is knowledgeable about sparse autoencoders can explain.

Conclusion of test 2: We found that directions were consistently and significantly better than neurons at finding individual concepts in a text snippet. However, the performance of directions was worse in later layers.

Observations and Tidbits

Here are some highlights and observations from looking through a lot of directions and neurons while doing these tests:

Questions

Challenges and Puzzles

These are directions I either couldn't find, or couldn't figure out the pattern in them. Let me know if you have any luck with them.

Suggested Improvement

The directions themselves seem good, as we saw in the two tests. But I found the activations and associated data (NeuronRecord files) provided by OpenAI to be lacking. They only contain 20 top activations per direction, and often the top 20 tokens are exactly the same, even if the direction is finding more than that literal token.

For example, the "love"/"hate" direction above (3-MLP-OAI:25503) has 20 top activations that are all "love". Replacing it with "like" results in zero activation, but replacing it with "hate" results in a positive activation. So there's something else going on here other than "love", but someone looking just at the top 20 activations would conclude this direction is only about "love". In fact, this is exactly what GPT4 thought:

A proposed fix is to do top token comparisons when generating NeuronRecords, and count exact duplicates as a "+1" to an existing top activation. But this would eliminate identical top activation tokens that are in different contexts (or the same token in different words), which we definitely want to keep.

A better fix is to do semantic similarity comparisons on the word, phrase, sentence, and whole text when generating NeuronRecords, then set thresholds for each semantic similarity type. When encountering the same top activating token, count that activation text as a +1 of an existing activation text if it's within our similarity thresholds.

Ideally, you'd end up with a diverse set of top activation records that also has counts for how frequently a similar top activation appeared. I plan to build some version of this proposed fix and generate new top activations for these directions, so let me know if you have any feedback on it.
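Here's a rough sketch of the merging idea, to make it easier to critique. It makes big simplifying assumptions: it uses a single threshold on the whole activation text instead of separate word/phrase/sentence thresholds, and it uses `difflib.SequenceMatcher` (character-level similarity from the Python standard library) as a stand-in for a real semantic similarity measure. The `Record` class, `merge_activations`, and the sample texts are all hypothetical, and the input is assumed to be already sorted by activation, highest first.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List, Tuple


@dataclass
class Record:
    token: str      # top activating token
    text: str       # surrounding activation text
    count: int = 1  # how many similar activations were merged into this one


def similarity(a: str, b: str) -> float:
    """Placeholder: character-level ratio in [0, 1], NOT semantic similarity."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def merge_activations(
    activations: List[Tuple[str, str]], threshold: float = 0.8
) -> List[Record]:
    """Fold near-duplicate (token, text) activations into existing records."""
    records: List[Record] = []
    for token, text in activations:
        for rec in records:
            # Same top token AND similar enough context: count it as a "+1".
            if rec.token == token and similarity(rec.text, text) >= threshold:
                rec.count += 1
                break
        else:
            # Different token or different context: keep as its own record.
            records.append(Record(token, text))
    return records


sample = [
    ("love", "I love this song so much"),
    ("love", "I love this song so much!"),
    ("love", "They fell in love during the war"),
    ("hate", "I hate this song so much"),
]
merged = merge_activations(sample)
for rec in merged:
    print(rec.count, rec.token, "|", rec.text)
```

In this toy run, the two near-identical "love" texts collapse into one record with a count of 2, while the "love" in a different context and the "hate" activation stay as separate records, so the diversity we care about is preserved.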

Try It Yourself

Run Your Own Tests and Experiments

You can play with the new directions and do your own experiments at neuronpedia.org. For inspiration, try clicking one of the example queries or looking for your favorite thing.

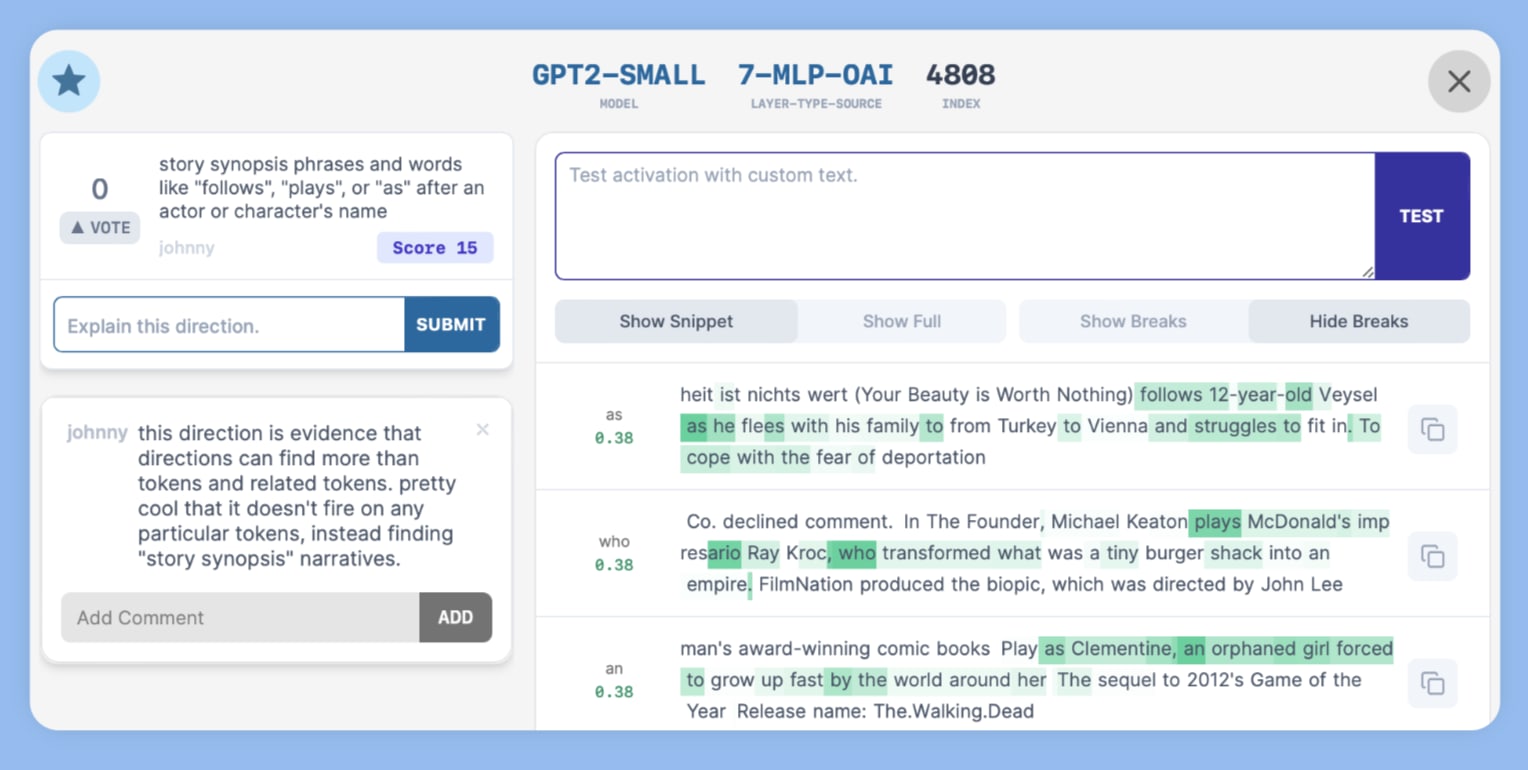

If you find an interesting direction/neuron, Star it (top left) and/or comment on it so that others can see it as well. Here's my favorite "story synopsis" direction:

When you star or comment, it appears on your user page (this links to my stars page), the global Recently Starred/Commented page, and the home page under Recent Activity.

Please let me know what new features would be helpful for you. It'd be really neat to see someone use this in their own research - I'm happy to help however I can.

Upload Your Directions and Models

If you want to add your directions or models so that you and others can search, browse, and poke around them, please contact me and I'll make it a priority to get them uploaded. A reminder that Neuronpedia is free - you will not get charged a single cent to do this. Maybe you'll even beat OpenAI's directions. 🤔

Feedback, Requests, and Comments

Neuronpedia wants to make interpretability accessible while being useful to serious researchers. As a bonus, I'd love to make more folks realize how interesting interpretability is. It really is fun for me, and likely for you as well if you've read this far!

Leave some feedback, feature requests, and comments below, and tell me how I can improve Neuronpedia for your use case, whether that's research or personal interest. And if you're up to it, help me out with the questions and challenges above.