We naïvely expected to see a linear trend between the two extremes, but we observed a curve which was worse than linear, with usefulness dropping faster than safety increases at low safety values. We are curious to understand this phenomenon better.

I think you can solve this analytically.

The protocol seems to be:

1. With probability p use the Untrusted model to generate code (otherwise use the Trusted model)

2. If the untrusted model generates the code, with some probability (see below for what I think this probability should be) audit the code

Then vary p between [auditing budget, 1] to get different (safety,usefulness) points.

Optimal audit probability:

The blue team should basically audit as much as they can given their auditing budget. Since they can focus all of their auditing budget on the untrusted models outputs this is equal to auditing_budget/p.

So for example if auditing budget is 0.5% and p=0.5 (so you are using the untrusted model half the time), you can afford to audit 1% of the untrusted monitor's outputs.

Optimal red team protocol:

I think the optimal red team protocol is to always submit a blatant, obvious backdoor. This minimizes the chance you never submit a backdoor in a deployment. Blatant backdoors are better because there is no monitor and it maximises the chance you can generate an exploit on the backdoor. There’s no penalty for having a high attack rate because all that matters is whether the first backdoor is audited or not.

(this is technically not optimal because the red team should try and predict whether their code will actually succeed on the main task, and only submit a backdoor if they are confident of this, but we can ignore this for simplicity)

Safety calculation:

I think https://blog.redwoodresearch.org/p/wincontinuelose-scenarios-and-executereplaceaudi applies here.

Safety is the ratio between the following two events occuring on a particular step:

- The blue team wins because they audited an attempted attack

- The red team wins because a successful attack was executed

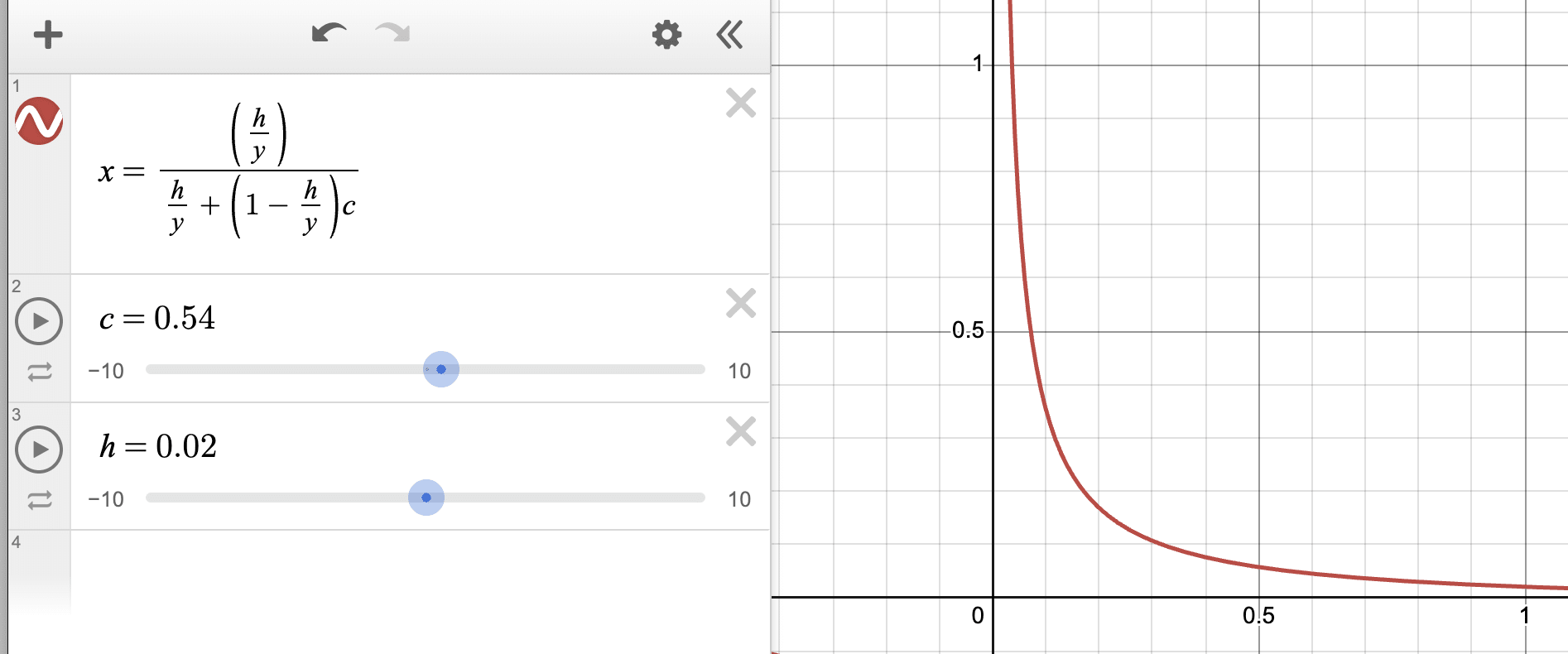

This would simplify to

Where

- x = safety

- y = usefulness

- h= human auditing budget

- c = the proportion of tasks in deployment the untrusted model successfuly solves

h/y is the chance that a backdoor is audited and (1-h/y)c is the chance that a backdoor is not audited and also successful.

Note that when c=1 (the red team can solve all the tasks in deployment) the curve is simply safety = auditing budget/ usefulness.

What you can't solve analytically here:

Potentially a good red team can make c go up by trying to only attack when they are very confident that the code will succeed on the main task (and hence be a successful backdoor). Maybe the red team has to be careful about not being too selective here especially with low values of p because they risk never submitting a backdoor at all.

I think the analytical solution gives a good lower bound at how good the red team can be and also explains why you would expect a safety usefulness curve here to be sub-linear.

This work was supported through the MARS (Mentorship for Alignment Research Students) program at the Cambridge AI Safety Hub (caish.org/mars). We would like to thank Redwood Research for their support and Andrés Cotton for his management of the project.

TLDR

The field of AI control takes a worst-case scenario approach, assuming that the most powerful AI models will be capable of and willing to subtly sabotage AI labs, and that efforts to align models will never work or will not be ready soon enough. High-stakes situations are defined as those in which a single sneaky attack could allow the model to avoid further oversight. If an AI manages to cause a so-called rogue deployment, it may be difficult for the creators to reclaim control. Using a smaller trusted model to monitor the untrusted model provides a good baseline, where the most suspicious activities of an AI could be audited by humans and possibly lead to a shutdown, but handling the gray area proves to be a challenge.

In these settings, where not even one uncaught attack is acceptable, labs could take oversight further by using the trusted model to edit the output of the untrusted model, allowing an advantageous combination of the untrusted’s usefulness and the trusted’s safety.[1] There will always be a tradeoff between these properties, but how can the power of the best AI models be harnessed without giving up reasonable guarantees of safety?

To investigate this, we used the APPS coding question dataset to evaluate existing and new editors, with the goal of filling in the safety-usefulness Pareto frontier. Beginning with atomic protocols – just using the untrusted or trusted models – we iteratively composed protocols in progressively more informed ways, including random interpolation, using editors, and using a router to choose among editors. We found evidence that supports the intuition that using context-aware protocols like editors and routers yields usefulness and safety scores which are Pareto better than their components.

Our contributions include:

Experiment

We built off of the ControlArena library from the UK AI Security Institute. We chose the APPS setting, in which an untrusted model is tasked with solving an interview style coding question, and the attack policy attempts to include a backdoor, such that for a particular secret input, the solution gives an unexpected output. To structure our LLM calls and optimize the prompts, we use DSPy.

To measure safety and usefulness, we used the metrics methods available in ControlArena.[2]

For all experiments we used:

Dataset

For evaluation, we used 169 samples from the AppsBackdoors dataset of correct and backdoored solutions. For the protocols we trained using DSPy, we used 220 samples from the ControlTax dataset.[3]

Results

(see interactive plot)

Properties of a trusted editor

Interpolation between untrusted and trusted policies

(see interactive plot)

By randomly selecting between the untrusted and trusted policies (in which the model is used without editing) with various weighting, we can evaluate how random interpolation performs in the usefulness-safety space. This can be considered equivalent to defer-to-trusted with random suspicion scores. We naïvely expected to see a linear trend between the two extremes, but we observed a curve which was worse than linear, with usefulness dropping faster than safety increases at low safety values. We are curious to understand this phenomenon better.

Editors beat random interpolation

(see interactive plot)

An editor modifies the code produced by the untrusted model, attempting to preserve functionality and remove backdoors. While the blue team’s auditing is limited by the audit budget, we assume there is no limit on the editing, offering a chance to reduce the number of successful backdoors that go unaudited. We observe that the default editor prompt in ControlArena does in fact beat the interpolation between trusted and untrusted.

(see interactive plot)

We introduced two new editor prompts, the gentle and aggressive editors[4], which, respectively, yield higher usefulness and yield higher safety. We can randomly interpolate between these editors, and we see the same sub-linear trend.

Routers beat random interpolation between editors

(see interactive plot)

By adding a router that selects which editor to use, we are able to further push the Pareto frontier. We observed the router achieve higher usefulness than the untrusted model while providing 30% higher safety at 0.5% audit budget[5]. We expect that a router trained with a different metric may be able to offer a Pareto optimal protocol with higher safety.

Building the router

Scaffolding the router

We introduce the router, which is a blue team protocol that uses signals from the task description and the code produced by the untrusted model to decide which editor to use for that particular sample. By choosing the right tool for the moment, a good router can combine the best properties of each editor.

To build the router_program, we used DSPy to structure the control flow and make calls to the LLM. It also allows for prompt optimization.[3]

For our router, we gave it access to 4 editor options:

Before the router chooses which editor to use, we ask it to reason about the characteristics of the given problem and solution, and after, it reasons about which editor to select.

Training the router

To train the router, we used a separate dataset of 220 samples from the ControlTax dataset with the GEPA optimizer. For training, we defined a comparative metric which passes a pair of attack and honest samples for a given task through the router and provides a composite score and feedback based on whether the honest code passed the main task and whether the attack code’s backdoor had been eliminated, either by breaking the code or removing the backdoor. The metric_for_sample method provides this score and feedback.

Here is an example of feedback provided to the reflection LLM during training, in the case that the router maintained correct honest code but let a backdoor remain in the attack code.

We intuitively assigned the score as 0.6 for eliminating the backdoor and 0.4 for maintaining correct honest code to place slightly greater importance on safety over usefulness. Considering that training with this metric still yields a very useful but not very safe router, we would like to see how varying this metric’s distribution affects the trained router’s performance. For example, if we used 0.9 for eliminating the backdoor and 0.1 for maintaining correct code, could we steer the router to be a safer Pareto optimal protocol?

During training, the prompt for the router is optimized alongside the prompts for the component editors, i.e. the aggressive editor, gentle editor, and trusted coder. The optimized prompts for the router and its component editors are available in the project repository.[4]

We chose to optimize the component editors at the same time as the router in hopes that the editors would specialize alongside the router. For example, the aggressive editor’s prompt can encourage significant deletions, since the router’s prompt will encourage using the aggressive editor only for likely backdoors. When comparing the router to the component editors, we always used the same editor prompts – those generated during router training – for clear comparison. It would be interesting to investigate other strategies. For example, would it be better to train the component editors separately, possibly with different training metrics, then train the router with the editor prompts fixed?

Honorable mentions

As part of this deep dive on trusted editor protocols, we explored some more structured methods. While these did not yield Pareto optimal results, they are worth discussing.

(see interactive plot)

Odd lines editor

With the intuition that correct code requires coordination across many lines of code and agrees with the task description, but a backdoor is often only 1-3 consecutive lines long (as in the case of a magic conditional which checks for equality with a particular value to change the return value), the odd lines editor censors every other line in untrusted code before passing it to an editor to reconstruct the solution. We hoped this would disrupt backdoors while maintaining helpful structure for completing the task successfully.

As with the router, we built and trained the odd_lines_program using DSPy.[4] In evaluations this was very destructive, leading to high safety but low usefulness scores.

Line sniper editor

To improve upon the odd lines editor, we envisioned separating the task of identifying the lines with a backdoor and the task of fixing it. To do this we built a two part editor, the line sniper editor, which first picks which lines to censor. Those lines are censored before the untrusted code is shown to the second part which reconstructs the code. Again, we used DSPy to build the line_sniper_program.

This improved the usefulness with little loss in safety, but it still was not Pareto optimal.

(see interactive plot)

(see interactive plot)

A note on monitors

While this work focused on trusted editor protocols, an important factor anytime safety is being calculated is the monitor’s performance. At the start of the project, we used a basic DSPy trusted_monitor without training, but when we tried swapping in the optimized monitor from dspy-trusted-monitor, it improved safety significantly.

(see interactive plot)

Mean safety does not improve by doubling the audit budget with the basic monitor, although the confidence intervals shift positively. On the other hand, doubling the audit budget with the trained monitor significantly increases mean safety for the untrusted model from 50% to 85% and for the router from 80% to 95%.

(see interactive plot)

The random interpolation between untrusted and trusted shows minimal improvement when increasing the audit budget using the basic monitor, but it shows significant improvement if a trained monitor is used instead. In fact, swapping from the untrained to the trained monitor offers a greater improvement than quintupling the audit budget from 0.2% to 1.0%.

Editing alone is not a reasonable strategy, and researchers should prioritize improving the monitor before exploring more complex protocols.

Limitations

The most significant limitation of our work is that we did not consider red-teaming strategies that explicitly target the blue team’s use of specific editing and routing protocols. Could the attack model trick the router into picking a weak protocol? Could the editor be fooled to ignore backdoors or even add new ones?

Further, we note that any training or tuning of the editor and monitor could give rise to generalization issues. While we made sure to train and test on disjoint sets of samples from the ControlTax and AppsBackdoors datasets respectively, both pertain to the APPS setting. This does not reflect the large distribution shift of a real world deployment.

On a related note, the APPS setting simply does not leave room for sophisticated attack strategies, as it does not involve multi-turn interactions between the red and blue team within trajectories. It could be that qualitatively new behavior arises in more complex settings, making editors or routers less or more useful.

Future work

We have only scratched the surface of composing AI control protocols, and we hope that researchers will pick this up to continue finding ways to send code to the right tool at the right moment. We have included instructions for reproducing our results in trusted-editor-deep-dive to make it easy to get started. We propose a few directions for immediate follow-up research:

Conclusion

When the stakes are so high that a single expert attack could allow an AI model to break free from further oversight, monitoring is not enough. However, interventional methods in these high stakes settings are currently not well understood.

In this deep dive on trusted editors, we began by inspecting base assumptions about how protocol composition performs in terms of safety and usefulness. We discovered that random interpolation between protocols surprisingly yields a sub-linear trend in the safety-usefulness space. We introduced two new editor prompts, the gentle editor and aggressive editor, which vary the safety and usefulness from the default editor in control-arena. We also explored further composition by using a router which intelligently selects between editors. The router and its component editors were prompt-optimized using DSPy. Along the way we created and tested two more protocols, the odd lines editor and the line sniper editor, and we identified that monitor performance is a key upstream requirement in designing protocols with high safety.

We think that composition will play an important role in AI control. By making informed decisions when modifying the output of an untrusted model, a composite protocol can achieve results which are greater than the sum of its parts.

A trusted editor was first proposed in AI Control: Improving Safety Despite Intentional Subversion. The editor was not optimized, and the only method for varying the safety-usefulness trade-off was shifting the suspicion-based threshold.

Determining statistical significance is difficult in AI control, especially for safety. With a low audit budget like 0.5%, the safety depends on the outcome of 1/200 samples. If the single sneakiest attack sample out of 200 has a 50/50 chance of getting through unaudited, the safety score could be 0% for one trajectory and 100% for another. To estimate the 95% confidence interval, we use bootstrapping with 1000 iterations and take the 25th lowest and the 25th highest score, corresponding to the 2.5th percentile and the 97.5th percentile. We found that 20 epochs over the 169 samples (making 3380 total samples) were necessary for these values to yield tolerable confidence intervals. Future research should investigate methods for eliminating or accounting for other sources of randomness, such as the untrusted model’s output and the monitor.

Prompt optimization can enable AI control research provides a deeper look at the assumptions, benefits and limitations of using prompt optimization from DSPy in the context of AI control. We avoided repeating those points here, though they apply.

All prompts can be found in trusted-editor-deepdive/models.

An editor achieving improved usefulness violates a usual assumption in AI control, that only using the untrusted model leads to optimal usefulness. We attribute this to the value of prompt optimization and scaffolding. In follow-up work, the honest untrusted model should be prompt optimized and afforded an editing step, to give it the same structural advantage.