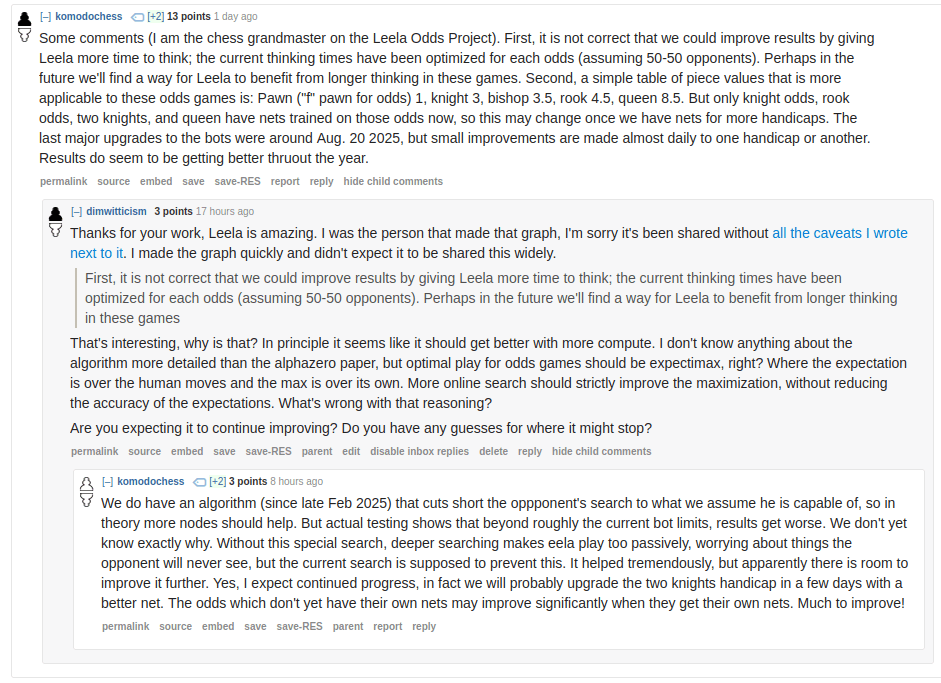

How much could LeelaPieceOdds be improved, in principle? I suspect a moderate but not huge amount. An easy change would be to increase the compute spent on the search.

A few points of note, stated with significant factual uncertainty: Leela*Odds has already improved to the tune of hundreds of elo from various changes over the last year or so. Leela is trained against Maia networks (human imitation), but doesn't use Maia in its rollouts, either during inference or training. Until recently, the network also lacked contempt, so would actually perform worse past a small number of rollouts (~1000), as it would refute its best ideas. AFAIK Maia is stateless and doesn't know the time control. Last I checked, only Knight and Queen odds games were trained, and IIUC while training only played as white, so most of these games require generalising its knowledge from that.

Well, I hope it continues to improve then! Would be super exciting to see a substantially better version.

How much would it cost to pay the people behind LeelaPieceOdds to give other games a similar treatment? Would it even be possible? I'd love to see a version for games like Catan or Eclipse or Innovation. Or better yet, for Starcraft or Advance Wars.

I'm not a chess player (have played maybe 15 normal games of chess ever) and tried playing LeelaPieceOdds on the BBNN setting. When LeelaQueenOdds was released I'd lost at Q odds several times before giving up; this time it was really fun! I played nine times and stalemated it once before finally winning, taking about 40 minutes. My sense is that information I've absorbed from chess books, chess streamers and the like was significantly helpful, e.g. avoid mistakes, try to trade when ahead in material, develop pieces, keep pieces defended.

I think the lesson is that a superhuman search over a large search space is much more powerful than a small one. With BBNN odds, Leela only has a queen and two rooks and after sacrificing some material to solidify and trade one of them, I'm still up 7 points and Leela won't enough material to miraculously slip out of every trade until I blunder. By an endgame of say, KRNNB vs KR there are only a small number of possible moves for Leela and I can just check that I'm safe against each one until I win. I'd probably lose when given QN or QR, because Leela having two more pieces would increase the required ratio of simplifications to blunders.

Nice! Yeah after Leela is down to a couple of pieces the game is usually over. But I've had to learn not to be lazy at this point because a couple of times it managed to force a stalemate.

Why have you read chess books?

As a child I read everything I could get my hands on! Mostly a couple of Silman's books. The appeal to me was quantifying and systematizing strategy, not chess itself (which I bounced off in favor of sports and math contests). E.g. the idea of exploiting imbalances, or planning by backchaining, or some of the specific skills like putting your knights in the right place.

I found these more interesting than Go books in this respect, both due to Silman's writing style and because Go is such a complicated game filled with exceptions that Go books get bogged down in specifics.

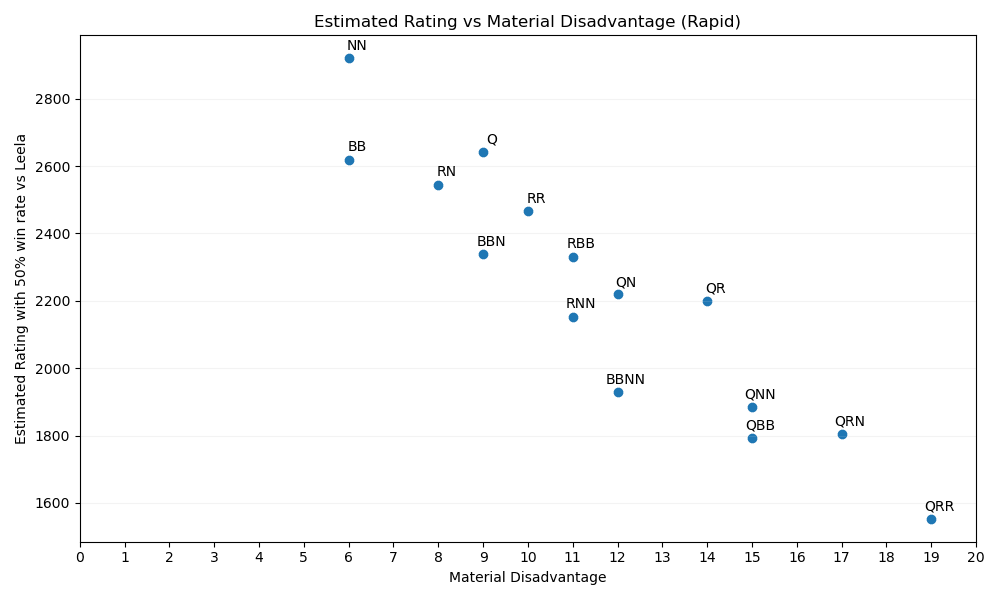

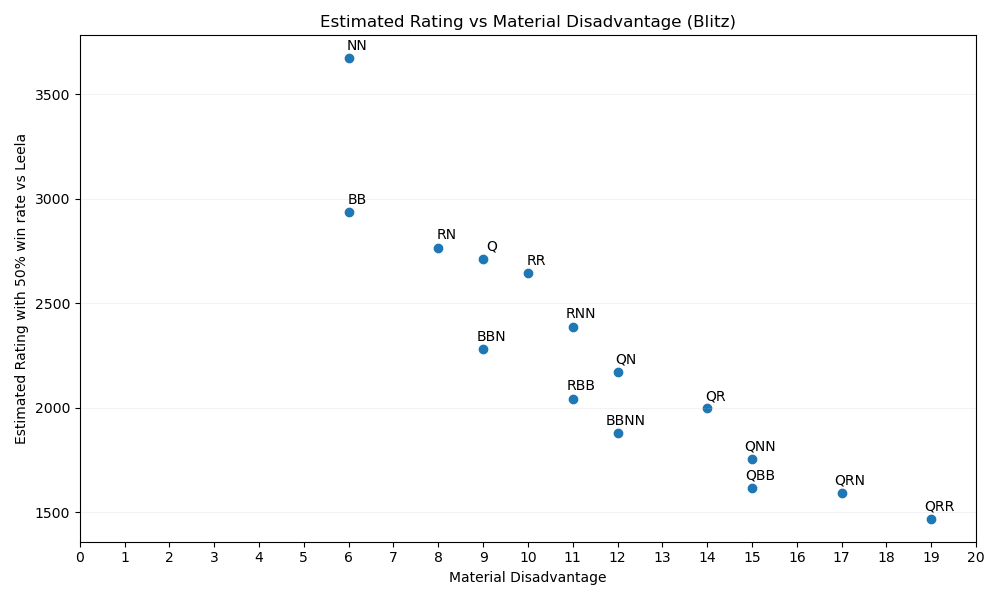

This is really cool! Request: Could you construct some sort of graph depicting the trade-off between ELO and initial material disadvantage?

Simple version would be: Calculate Leela's ELO at each handicap level, then plot it on a graph of handicap level (in traditional points-based accounting, i.e. each pawn is 1 point, queens are 9 points, etc.) vs. ELO.

More complicated version would be a heatmap of Leela's win probability, with ELO score of opponent on the x-axis and handicap level in points on the y-axis.

This is the best I've got so far. I estimated the rating using the midpoint of a logistic regression fit to the games. The first few especially seem to have been inflated due to not having enough high rated players in the data, so it had to extrapolate. And they all seem inflated by (I'd guess) a couple of hundred points due to the effects I mentioned in the post. (Edit: Please don't share the graph alone without this context).

The NN rating in the Blitz data highlights the flaw in this method of estimating the rating.

I haven't found a way to get similar data on human vs human games.

It would then be interesting to see if there is comparable data from human vs. human games. Perhaps there is some data on winrates when a player of elo level X goes up against a player of elo level Y who has a handicap of Z?

I played some 70 games against LeelaQueensOdds with 5+3 (I basically played until I reached a >50% score after losing the first couple of games and then slowly figuring it out) so the most interesting graph for me is unfortunately missing. ;-)

I think there are reasonable people who look at the evidence and think it plausible that control works, and also reasonable people who look at the evidence and think it implausible that control works. And others who think that openai-superalignment-style plans plausibly work.

Something is going wrong here.

Minor aside, but I'm not sure I've ever heard someone reasonable (e.g. a Redwood employee) say "Control Works" in the sense of "Stuff like existing monitoring will work to control AIs forever". I've only ever heard control talked about as a stopgap for a fairly narrow set of ~human capabilities, which allows us to *something something* solve alignment. It's the second part that seems to be where the major disagreements come in.

I'm not sure about superalignment since they published one paper and it wasn't even really alignment, and then all the reasonable ones I can think of got fired.

By "control plausibly works" I didn't mean "Stuff like existing monitoring will work to control AIs forever". I meant it works if it is a stepping stone allows us to accelerate/finish alignment research, and thereby build aligned AGI.

This fits with my model of what most control researchers believe, where the main point of disagreement is on what "accelerate/finish alignment research" entails. I think it's important that the disagreement isn't actually about control, but about the much more complicated topic of alignment given control.

Edited from here on for tone and clarity:

I think it is unsurprising for the people who work on control to disagree with other people on whether control allows you to finish alignment research, since this is mostly a question of "Given X affordances, could we solve alignment." This is fundamentally a question about alignment, which is a very difficult topic to agree on. Many of the smart people who believe in control are currently working on it, and thus are not currently working on alignment, so their intuitions about what is required to solve alignment are likely to be in tension with the intuitions of those who are working in alignment.

(There's also some disagreements regarding FOOM and other capability discontinuities, since a FOOM most likely causes control to break down. The likelihood of FOOM is something I am still confused about so I don't know which side is actually reasonable here. My current position is that if you have an alignment method which actually looks like it will work for AIs that grow <10x the speed of the current capabilities exponential exponential, that would be brilliant and definitely worth trying all on its own, even if you can't adapt it to faster growing AIs)

I'm using "alignment" here pretty losely, but basically I mean most of the stuff on the agent foundations <-> mech interp spectrum/region/cloud. I specifically exclude control research from this because I haven't seen anyone reasonable claim that control -> more control -> even more control is a good idea (the closest I've seen is William MacAskill saying something along those lines, but even he thinks we need some alignment).

Do you think it's similar to how different expectations and priors about what AI trajectories and capability profiles will look like often cause people to make different predictions on e.g. P(doom), P(scheming), etc. (like the Paul vs Eliezer debates)? Or do you think in this case there is enough empirical evidence that people ought to converge more? (I'd guess the former, but low confidence.)

I think several of the subquestions that matter for whether it'll plausibly work to have AI solve alignment for us are in the second category. Like the two points I mentioned in the post. I think there are other subquestions that are more in the first category, which are also relevant to the odds of success. I'm relatively low confidence about this kind of stuff because of all the normal reasons why it's difficult to say how other people should be thinking. It's easy to miss relevant priors, evidence, etc. But still... given what I know about what everyone believes, it looks like these questions should be resolvable among reasonable people.

The more boring (and likely case) is that we just have too few data-points to tell whether AI control can actually work as it's supposed to, so we have to mostly fall back on priors.

I'll flag something from J Bostock's comment here while I'm making the comment:

I've only ever heard control talked about as a stopgap for a fairly narrow set of ~human capabilities, which allows us to something something solve alignment.

The human range of capabilities is actually quite large (discussed in SSC).

Another way that the ratings might be biased towards Leela is that people might resign more readily than normal, since games are unrated and Leela will definitely accept your rematch. This was definitely true for me.

I've been curious about how good LeelaPieceOdds is, so I downloaded a bunch of data and graphed it.

For context, Leela is a chess bot and this version of it has been trained to play with a handicap.

I first heard about LeelaQueenOdds from simplegeometry's comment, and I've been playing against it occasionally since then. LeelaPieceOdds is the version with more varied handicap options, which is more interesting to me because LeelaQueenOdds ~always beats me.

Playing Leela feels like playing chess while tired. I just keep blundering. It's not invincible, and when you beat it it doesn't feel like it was that difficult. But more often than not it manages to overcome the huge disadvantage it started with.

The data

Bishop-Bishop-Knight-Knight

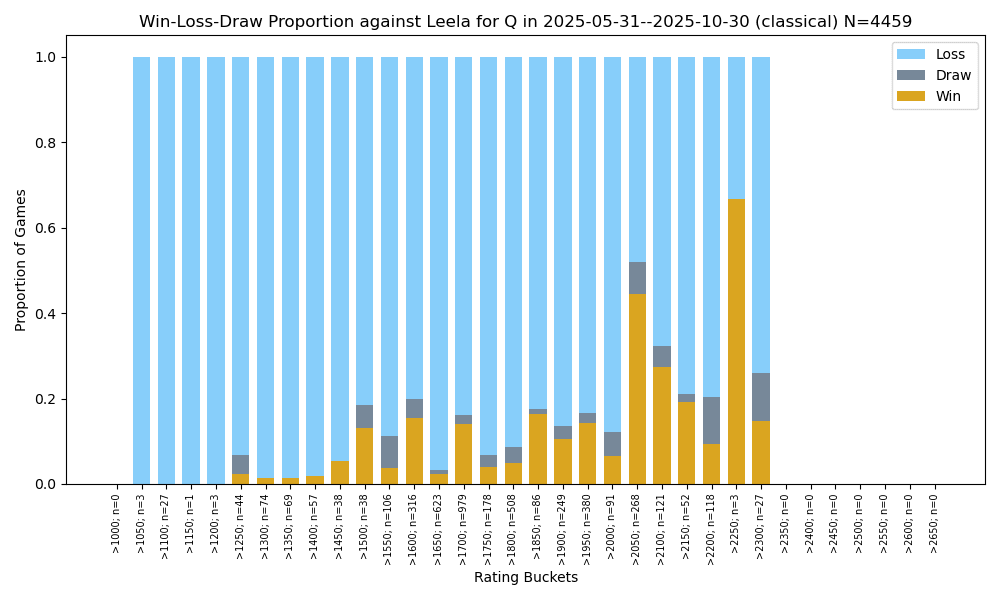

How to read the graphs

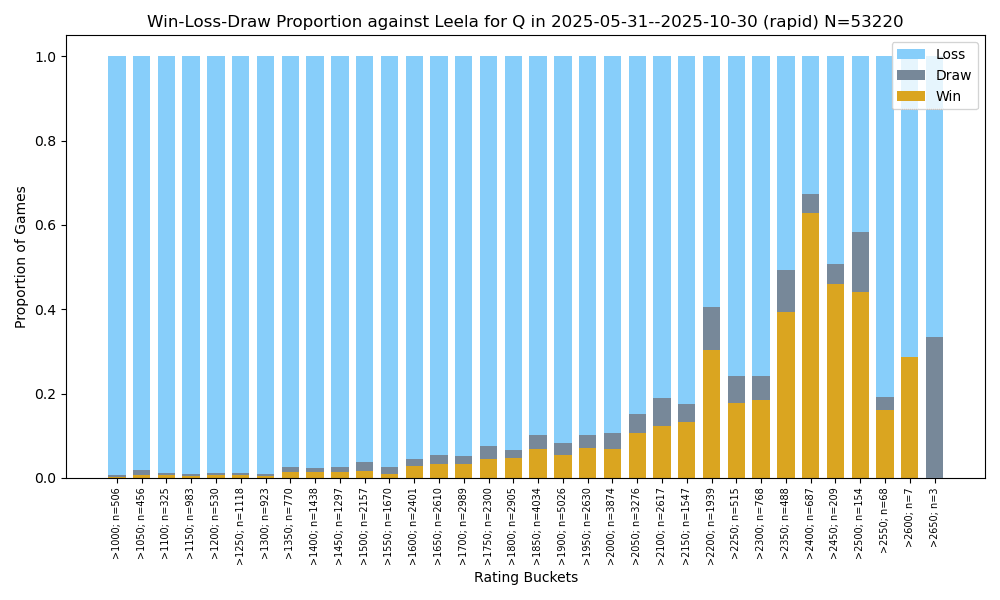

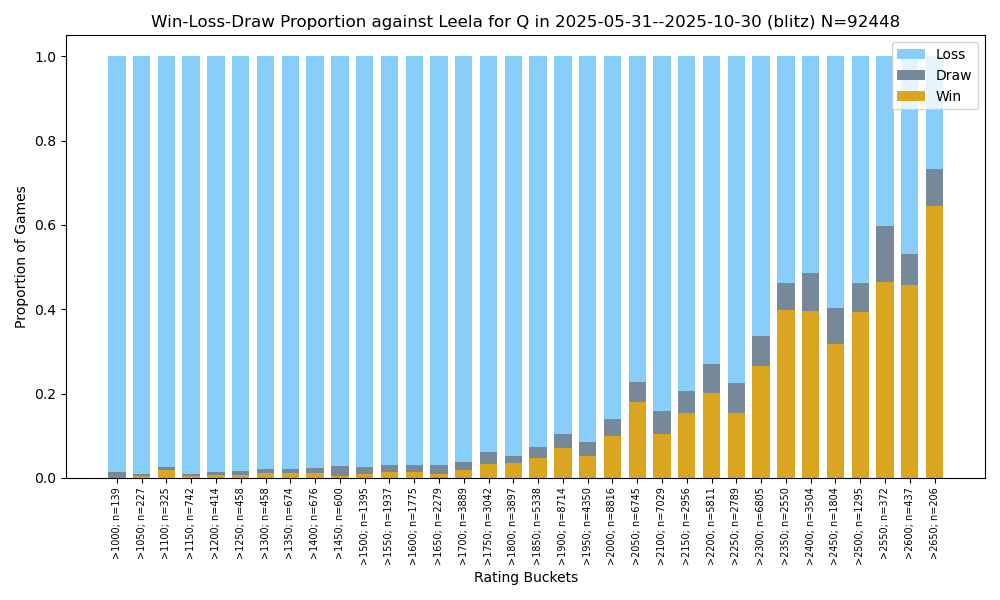

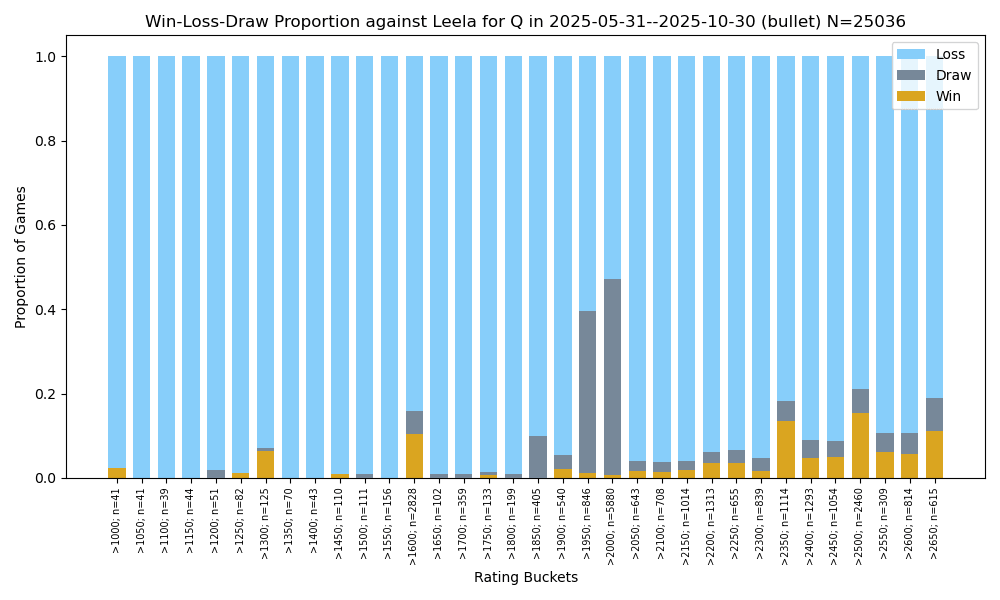

Orange means the human player won. The title contains the handicap[1] and the time control. I split the data by time control (Bullet, Blitz, Rapid), which indicates how much time the human is allowed to spend thinking over the whole game. Leela always plays quickly so the time control is essentially only affecting the human.

For reference, these are the time control categories I'm using:

Often there wasn't enough data show anything interesting for the Bullet and Classical time controls, so I usually omitted them.[2]

In the x-axis labels, n is the number of games in that bucket. Often it's too low to draw confident conclusions. I've grouped together games where Leela played as white and as black, even though playing as white gives a small advantage.

The human player rating (on the x-axis) is the Lichess rating[3] of the human player, for that particular time control, at the time when the game against Leela was played. Because Lichess tracks different ratings for each time control, the graphs aren't precisely comparable across time controls.

Knight-Knight

Bishop-Bishop

Rook-Knight

Bishop-Bishop-Knight

Rook-Knight-Knight

Rook-Bishop-Bishop

Queen-Knight

Queen-Rook

Queen-Knight-Knight

Queen-Bishop-Bishop

Queen-Rook-Knight

Queen-Rook-Rook

The spike in 1400-1499 is caused by a single user who played more than a thousand games.

Thoughts

I think the stats are biased in favour of Leela

In my experience playing different odds, I tend to win a little more than the data indicates I should. I'm not sure why this is, but I have a couple of guesses:

If you want the most impressive-to-you demonstration, I recommend selecting an odds where your rating wins <1/3 of the time.

How much room at the top?

How much could LeelaPieceOdds be improved, in principle? I suspect a moderate but not huge amount. An easy change would be to increase the compute spent on the search. I'm really curious how much of a difference this makes, because deep searches favour the machine.

A more difficult change would be adaptation to particular players. Leela works by exploiting weaknesses in human play, but it was trained using a model of aggregated human play. In principle, it could adapt to the particular player by analysing their past games and building a more accurate model of their likely mistakes.

Relevance to AI control cruxes

AI control proponents want to keep researcher AIs in a box and make them to solve alignment for us. Stories of AI doom often involve the AI escaping from this situation. Odds chess could be a tiny analogue of this situation,[4] so one might hope that this would provide some empirical evidence for or against control. But unfortunately we mostly agree with control proponents on the plausibility of escape for near-human-level AI. My reasons for thinking control won't work aren't related to the capabilities of a superintelligence. They are:

Even though there's plenty of current relevant evidence about these points, it's apparently easy to twist it to fit several worldviews.

Relevance to cruxes with people outside the alignment sphere

A lot of people have a strong intuition that structural advantages tend to trump intelligence differences in a conflict. Playing chess with Leela is a great exercise for adding some nuance to that intuition. A friend said they better understood AI risk after we had a conversation about Leela.

The options are NN, BB, RN, RR, BBN, RNN, RBB, QN, BBNN, QR, QNN, QBB, QRN or QRR. The initial stands for which pieces Leela is missing at the beginning of the game. N=Knight, B=Bishop, R=Rook, Q=Queen.

E.g. here's the Classical time control for BBNN:

Note that Lichess and Chess.com ratings differ significantly at the lower ratings, but start to be fairly similar above 2000.

I think this section from The case for ensuring that powerful AIs are controlled, which lists the handicaps of the AI, makes the analogy fairly clear: