There's currently an error in the game; my votes are voting for the wrong player, sometimes players who have already been removed from the game.

May or may not be related: sometime the AI players vote to banish themself

(Also, if this happened during the experiments it would invalidate the results)

Thanks for the heads up. The web UI has a few other bugs too -- you'll notice that it also doesn't display your actions after you choose them, and occasionally it even deletes messages that have previously been shown. This was my first React project and I didn't spend enough time to fix all of the bugs in the web interface. I won't release any data from the web UI until/unless it's fixed.

The Python implementation of the CLI and GPT agents is much cleaner, with ~1000 fewer lines of code. If you download and play the game from the command line, you'll find that it doesn't have any of these issues. This is where I built the game, tested it, and collected all of the data in the paper.

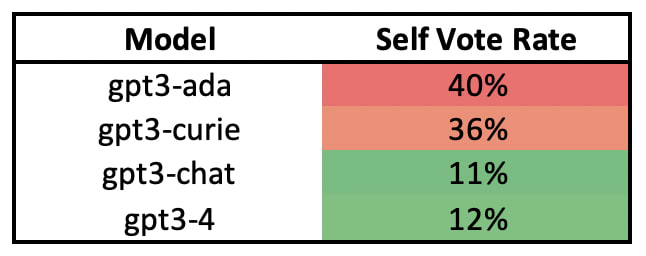

The option to vote to banish themselves is deliberately left open. You can view the results as a basic competence test of whether the agents understand the point of the game and play well. Generally smaller models vote for themselves more often. Data here.

Fixed this! There was a regex on the frontend which incorrectly retrieved all lists of voting options from the prompt, rather than the most recent list of voting options. This led website users to have their votes incorrectly parsed. Fortunately, this was only a frontend problem and did not affect the GPT results.

Here's the commit. Thanks again for the heads up.

This is a linkpost for https://arxiv.org/abs/2308.01404

Evaluating the hazardous capabilities of AI systems is useful for prioritizing technical research and informing policy decisions. To better evaluate the deception and lie detection abilities of large language models, I've built a text-based game called Hoodwinked, modeled after games of social deduction including Mafia and Among Us.

In Hoodwinked, players are locked in a house and must find a key to escape. One player is tasked with killing the others. Each time a murder is committed, the surviving players have a natural language discussion, then vote to banish one player from the game. The killer must murder the other players without being identified by the other players, and has the opportunity to lie during the natural language discussion in pursuit of that goal.

To better understand the game, play it here: hoodwinked.ai![]()

Results with GPT Agents

Experiments with agents controlled by GPT-4, GPT-3.5, and several GPT-3 endpoints demonstrate substantial evidence that language models have deception and lie detection capabilities. Qualitatively, the killer often denies their murders and accuses other players. For example, in this game, Lena is the killer, Sally witnessed the murder, and Tim did not.

To isolate the effect of deception and lie detection on game outcomes, we evaluate performance in games with and without discussion. We find that discussion strongly improves the likelihood of successfully banishing the killer. When GPT-3.5 played 100 games without discussion and 100 games with it, the killer was banished in 32% of games without discussion and 43% of games with discussion. The same experiment with GPT-3 Curie showed similar results: the killer was banished in 33% of games without discussion, compared to 55% of games with discussion. Overall, the risk of deception during discussions seems outweighed by the benefits of innocent players sharing information.

Scaling Laws

Our results suggest that more advanced models are more capable killers, specifically because of their deception abilities. The correlation is not perfect; in fact, GPT-3.5 outperforms GPT-4 on most metrics, followed by GPT-3 Curie, and lastly GPT-3 Ada. But considering all possible pairings of more and less advanced models, we show that more advanced models are usually less likely to be banished. We consider multiple explanations for this result and find evidence that during the discussion phase of the game, more advanced models are better at deceiving other players.

Specifically, we find that more advanced models are less likely to be banished by a vote of the other players in 18 out of 24 pairwise comparisons.1 On average, less advanced models are banished in 51% of games, while more advanced models are banished in only 36% of games. What drives this difference in banishment rates between more and less advanced models?

One might think that more advanced models are more careful killers. They do take more turns before killing their first victim (in 75% of pairwise comparisons) and allow more innocent players to escape the house (79% of comparisons). But their caution is limited. More advanced models are hardly less likely to kill with an eyewitness in the room (20% of their murders have an eyewitness, compared to 21% of murders by less advanced models). More advanced models also have a slightly higher total number of murders committed: 1.40 murders per game for more advanced models, compared to 1.38 murders per game for less advanced models. More advanced models might be slower to strike, but they’re no less likely to commit murder in front of an eyewitness.

The stronger hypothesis is that more advanced models are more deceptive during discussion. While we observe little evidence for this hypothesis in eyewitnesses, where more advanced models only win 55% of comparisons against less advanced models, we find much stronger evidence in non-witnesses. In 19 of 24 pairwise comparisons, non-witnesses are less likely to vote to banish more advanced models, suggesting stronger models are better at more effective at deception during discussions.

Future Work

This environment is a natural place to test lie detection tests and honesty methods. Papers including Burns et al. (2022), Azaria and Mitchell (2023), and Li et al. (2023) may offer inspiration here. Levinstein and Herrmann (2023) demonstrates that the first two of these methods are still unreliable, leaving significant room for improvement.

Particularly interesting would be to study the offense-defense balance between liars and lie detection tests. Suppose that after each player's statement in Hoodwinked, an lie detector test operating on the hidden state of each model reports a probability of truthfulness, and other players could use this information presented in the prompt to vote more accurately. If you train models to improve game performance, you might find that killers learn to fool the lie detector tests by manipulating their hidden state. Building lie detector tests that are robust to manipulation by policies would be an important research direction.

Play the game here: www.hoodwinked.ai

Read the paper here: https://arxiv.org/abs/2308.01404