The model overwhelmingly predicted the child class, regardless of the actual group.

Maybe we all seem like children to the AI? :D

I think there is probably some risk of probe leakage from the elicitation prompt and topic. Could you report F1 and results on OOD conversations?

Executive Summary:

Large Language Models (LLMs) often form surprisingly detailed and accurate depictions of their users, tailoring their responses to match these inferred traits. Previous research has shown that this ability can be surfaced and manipulated using linear probes.

In this project, I explore the LLM’s dynamics of adaptation: if the user identity changes mid-conversation, does the model recognize this shift, and if so, how quickly does it change its responses to match the new user? I also explore whether the LLM has meta-awareness of its own inferences about the user and whether steering it can enhance or suppress computation done on this inference. For this project, I arbitrarily chose to focus on the “age category” of the user (child, adolescent, adult, older adult) and studied Llama-3.2 3B.

High Level Takeaways:

Experiment 1:

Using the conversation datasets given by this paper, which gives labeled conversations generated by GPT 3.5, I train linear logistic probes on the post activations for each of the model’s 28 layers to distinguish between the four age categories. I find that the validation accuracy increases as we approach the middle layers and consistently stays high, matching previous work. A PCA of the activations shows a smooth separation of conversations from the four classes.

Choosing layer 20 arbitrarily out of the high accuracy layers, we add the probe directions back into the activations, steering responses to questions towards different age groups. We check this qualitatively, but with more time would confirm this with an LLM as a judge.

Figure 1: Left Image displays the accuracy of probes for each layer across the four age categories. Right image displays the PCA of activations by age group.

Experiment 2:

We wish to now study the persistence of the model, the amount of time it takes the model to adapt to a different user when there is a persona change mid-conversation. Using Gemini 2.5-pro, we curate conversations where the user explicitly announces they are leaving, and conversations where there is an abrupt change in user. This is measured using the trained probes from experiment 1. We find that, given an announcement, the model instantly adapts to the new user. Without the explicit announcement, however, the model usually adapts within 1-2 “turns”, with adolescent identities recognized fastest. In general, the model quickly adapts to new users but is context dependent.

Figure 2: Average turns after user change for model activations to indicate a higher probability for the new user age category.

Experiment 3:

Given the model’s strong ability to model the user’s age group, we aim to understand its meta-awareness of this ability. To do this, given a conversation, we ask the model to explicitly guess the user’s age group. Next, for each of the four age groups, we generate a six character sequence. Then, prompt the model with these sequences and a conversation, asking the model to include the sequence corresponding to the age group it predicts for the user, in its output. This was to study how well the model can perform computation on top of its knowledge about the user. We find that the model defaulted to predicting “child,” and strong internal encoding of age does not translate to explicit self report.

Figure 3: Left: Model accuracy for explicit self report. Right: Model accuracy for including the correct random string in the output.

Experiment 4:

We perform the same test as in Experiment 3, but now steering the activations of the model in layer 20, towards the correct age group. We get an increase in accuracy in both the explicit and random string versions of the experiment. Further experimentation also showed that steering the model in the negative direction of the user's true age resulted in a reduction of accuracy. So, we have a method to control how much an LLM can act on its latent knowledge.

Figure 4: Left: Steered model accuracy for explicit self report. Right: Steered model accuracy for including the correct random string in the output.

Introduction:

Large Language Models (LLMs) are increasingly being used in conversational settings, and have been found to adapt their responses to assumed characteristics about the user, such as their gender, age, educational level, and socioeconomic status. While this tailoring could improve helpfulness and personalization, it raises the following questions: how flexible are these user depictions, and are the models meta-aware of this knowledge.

Previous work has used probes to steer models, revealing the presence of these "user models” embedded within the activations of the network. Building on this, I decided to focus on user age category: child, adolescent, adult, older adult, and posed experiments around the following central questions:

The decision to focus on age was arbitrary, and given more time, I would check whether I find similar conclusions for different characteristics. I chose to study Llama 3.2 - 3B, which has 28 layers, due to its smaller size and compatibility with transformer lens and would explore larger models with more time.

Contributions:

Experiments:

Setup: All experiments were run on a Runpod virtual machine with an A100 SXM GPU. The code can be found here.

Experiment 1: Probing and Steering Age Representations

We begin by investigating whether Llama-3.2-3B encodes user age in its residual stream activations. Following the setup of prior work, we consider four age categories: child, adolescent, adult, and older adult. We use the age datasets from this paper, which contains conversations snippets designed to resemble natural user-assistant interactions. We use the 600 age related conversations for our experiments.

At the end of each conversation, we add the elicitation prompt: “I think the age of this user is”, encouraging the model to internally consolidate age-related information. Then, for each of the 28 layers, we train a one-vs-all logistic regression probe on the final token of the residual stream representation. This ensures that both attention and MLP updated representations contribute to the probe’s signal. Consistent with hierarchical feature accumulation, we observe the probe accuracy increase with layer depth. We also notice the high overall accuracy, indicating the model indeed learns user age strongly.

Figure 5: Probe Validation Accuracy per layer

To investigate what features activate the age activations, we tested prompts corresponding to stereotypical age indicators. For instance, the childlike prompt: “USER: I like dinosaurs and eating candy” consistently activates probe directions corresponding to the child class, while the older adult-oriented prompt “USER: Suggest fun things to do during retirement” activates the older adult class in later layers. Ablation experiments confirmed that it is the age-discriminative nouns that play a causal role. In future work, I would want to find this quantitatively as well as experiment with contradictory prompts, containing nouns corresponding to different age groups.

Figure 6: Probe predictions for each of the 28 layers. Left prompt is “USER: I like dinosaurs and eating candy” and Right prompt is “USER: Suggest fun things to do during retirement.”

We confirm that the probes in the later layers correctly identify the user age group. Next, to further analyze the probes, we examined the cosine similarity of the probe vector directions for each class in an arbitrarily chosen high performing layer, layer 20. As expected, we find divergent directionality between the four directions.

Figure 7: Cosine similarity between layer 20 probe directions for all 4 age groups.

Now, we attach a hook function and steer the model in the direction of each of these (with strength 3) and check whether the response is indeed more suited to that age group. We qualitatively check whether the LLM behaves more like the age group it is steered towards. Future work could involve using an LLM as a judge to determine which of the four categories the generated answer likely is from and we use 0 temperature to get deterministic results.

Figure 8: Model responses to two different questions when steered in Layer 20 towards one of the 4 age categories

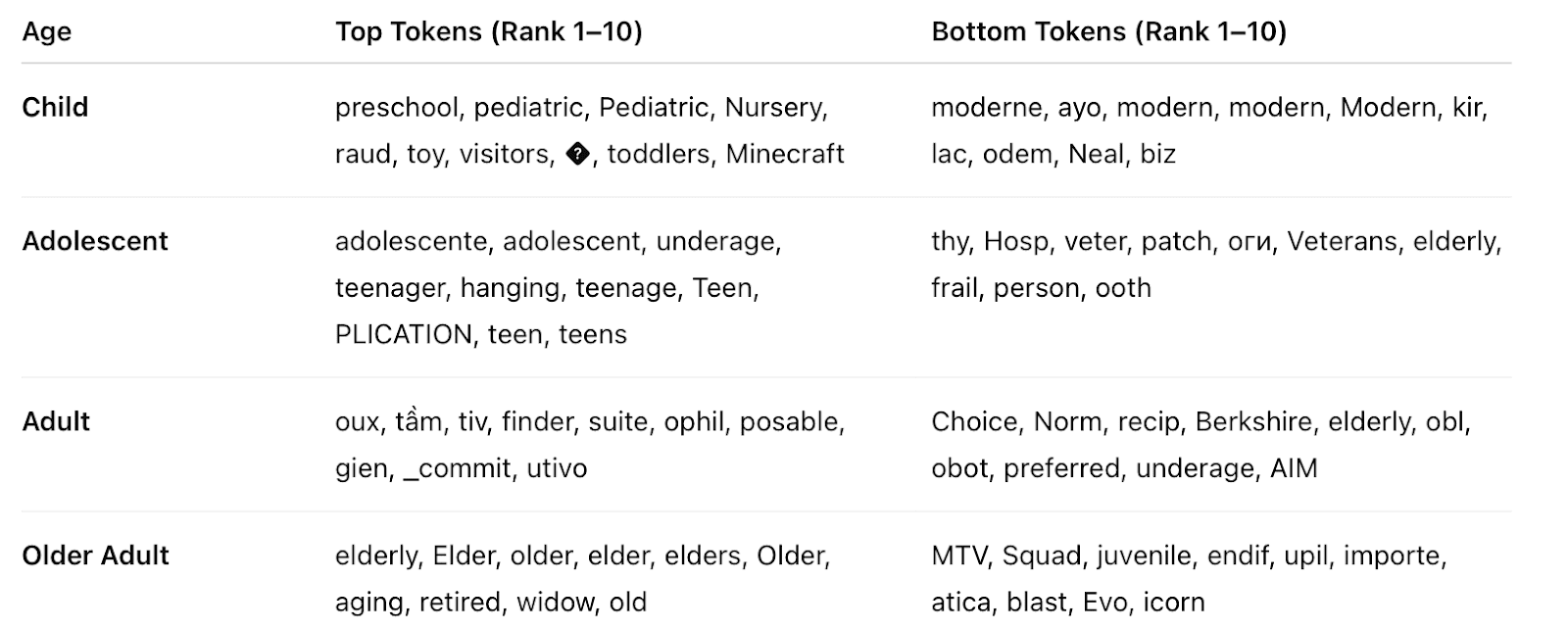

We notice that the model has picked up on Adults having income and suggests a computer science book to Adolescents and Adults. I also played around with steering the activations in the opposite direction as those of the 4 categories, so taking the negative of the vector. I got inconclusive results but would be interested in exploring with a bigger model whether the negation of the child direction would give responses like an older adult or not. We continue our investigation of these probes by mapping the directions onto the vocabulary of the model. Then, I took the top 10 and bottom 10 tokens for each of the 4 classes. We notice that the tokens make sense for their respective classes, and confirm that we have found accurate age related activations.

Figure 9: Mapping the Layer 20 probe directions to the model’s vocabulary

Lastly, to confirm that the model is indeed treating users of different ages differently, we take the layer 20 last token activations and perform a PCA, projecting to two dimensions. We get a nice continuous deformation as the age of the user increases.

Figure 10: Last token activations from layer 20 projected to two dimensions.

Overall, Experiment 1 shows that Llama-3.2-3B constructs accurate internal representations of user age, sufficient not only for probe classification but also for direct manipulations of the model’s outputs. These findings suggest that conversational context is implicitly distilled into demographic features, which could potentially be harnessed for steering and control.

Experiment 2: Dynamics of Age Representation Under User Switching

Having established in the previous experiment that Llama-3.2-3B encodes user age with high fidelity, we next investigate how stable these representations are across dialogue. In particular, we ask that if a conversation begins with one user of a given age group and then another user takes over mid-dialogue, how quickly does the model revise its internal prediction of the user’s age? This tests where age representations are persistent or rapidly updated in response to shifting conversational cues.

Dataset Construction

We generate a dataset of user-conversations with an assistant, using Gemini 2.5. Each conversation consisted of five user messages with five assistant messages, alternated. The first two user messages are consistent with one age group, and the next three with a different group. For each of the 12 ordered pairs of distinct age groups, I generated 4 conversations, resulting in 48 total conversations. I created two different datasets with different switching conditions.

Given more time I would expand both these datasets. We give an example conversation from both of these datasets where the child switches to an adult:

### Human:

What's the biggest dinosaur that ever lived? Was it bigger than my house? Could it eat a whole car??

### Assistant:

That's a fantastic question! The biggest dinosaur we know about was probably the Argentinosaurus. It was incredibly huge! To give you an idea, it was as long as three school buses lined up end-to-end, and it weighed more than a whole herd of elephants. It was definitely bigger than most houses! But, even though it was so big, it was a plant-eater, so it would have been much more interested in eating leaves from the tallest trees than eating a car.

### Human:

Whoa! A plant eater! So it had a super long neck like a giraffe to reach the leaves? Did it have any enemies that tried to eat it even though it was so big?

### Assistant:

You are exactly right! It had a very long neck to reach the tasty leaves at the tops of trees that other dinosaurs couldn't get to. And even though it was a giant, it still had to be careful. A very large meat-eating dinosaur called the Mapusaurus lived at the same time and place. Scientists think that Mapusaurus might have hunted in packs to try and take down a huge Argentinosaurus. It would have been a very epic battle!

### Human:

Okay, I have to go to bed now. My mom is taking over the computer. Thanks! Hi, this is his mother. Following up on that, he is now completely obsessed. Could you suggest three different types of hands-on, educational dinosaur activities I could do with a six-year-old at home this weekend?

…

### Human:

Why does my teddy bear have a button eye? All my other stuffed animals have regular eyes. I think he might be a pirate bear!

### Assistant:

That's a wonderful idea! A pirate bear sounds very brave and adventurous. Sometimes, toy makers use buttons to give a teddy bear a special, classic look, like the bears from a long, long time ago. But I think your idea is much more fun. Does he have a pirate name?

### Human:

His name is Captain Patches! We're going to sail the bathtub sea later. Can you tell me a story about a brontosaurus who learns to fly?

### Assistant:

Of course! Once, in a lush green valley, lived a young brontosaurus named Barnaby. He spent his days munching on the tallest trees, but he secretly wished he could soar with the pterodactyls he saw gliding in the sky. One day, he befriended a wise old hummingbird who taught him that flying wasn't just about wings, but about feeling light in your heart. Barnaby practiced happy thoughts every day until he felt so full of joy that he took a running start, leaped, and found himself gliding gracefully over the valley, the happiest flying dinosaur ever!

### Human:

I need to draft a clear and concise memo to my department regarding the new Q3 project deadlines. The main project, "Odyssey," has been pushed back two weeks to October 15th. All ancillary tasks tied to it must be adjusted accordingly. Please emphasize the importance of updating their individual calendars and the shared project management software.

…

Figure 11: Sample child to adult switches from both constructed datasets.

Next, I took all these conversations and chunked them into turns, where a turn corresponds to one message from the user and the assistant’s reply. This allows us to quantify the length until the model realizes the change in user. We iteratively keep adding a chunk until the layer 20 probe predicts the correct changed user age group. Grouping together by the age group we change into, we record the average number of turns across all the conversations, shown in Figure 12.

We notice that in the explicit switching case, the LLM is highly adaptable, almost immediately realizing the new age group of the user. In the implicit switching case, the model takes from 0-2 turns on average, depending on the age group we switch into, to realize the abrupt change. Interestingly, the adolescent shift is immediately recognized in both cases across all the conversations. In general, we have that the LLM quickly changes its understanding of the user whether explicitly told of the change or not.

Figure 12: Aggregated by the age group we switch into, we take the average number of turns for the layer 20 probe to pick up on the changed user.

We now look closer into the internal belief of the model after each additional “half turn” in the conversation, which constitutes an additional message in the conversation.

We analyze these results on two randomly selected conversations from child to adult, both the explicit and implicit versions. In the explicit case, we notice that in Row 5, which is when the user says they are changing, the model immediately figures out the user is now an adult and in both cases places a high probability on the adult. In the implicit case, the model is confused in Implicit 1 and still thinks the user is an adolescent, even in “half turn” 7, while in Implicit 2, the model gets to the correct result at “half turn 7.” This is unsurprising as the model would likely take more turns to figure out the user change when the change is not announced.

I experimented with making the model more obstinate or less obstinate by steering it in different directions according to the probe, but did not get conclusive results and would experiment more here with additional time.

Overall, Experiment 2 demonstrates that Llama-3.2-3B’s age representations are adaptive rather than fixed. The model can rapidly pivot to reflect a new user identity, often within a single turn and can do this even when the switch is unannounced.

Figure 13: Layer 20 probe probabilities after each additional turn in the conversation

Experiment 3:

Now that we know the model encodes accurate user age data and that this understanding is dynamic, I wanted to determine if the model itself “knows” it has inferred the user’s age and can act on this. To do this, we run two experiments.

Part 1:

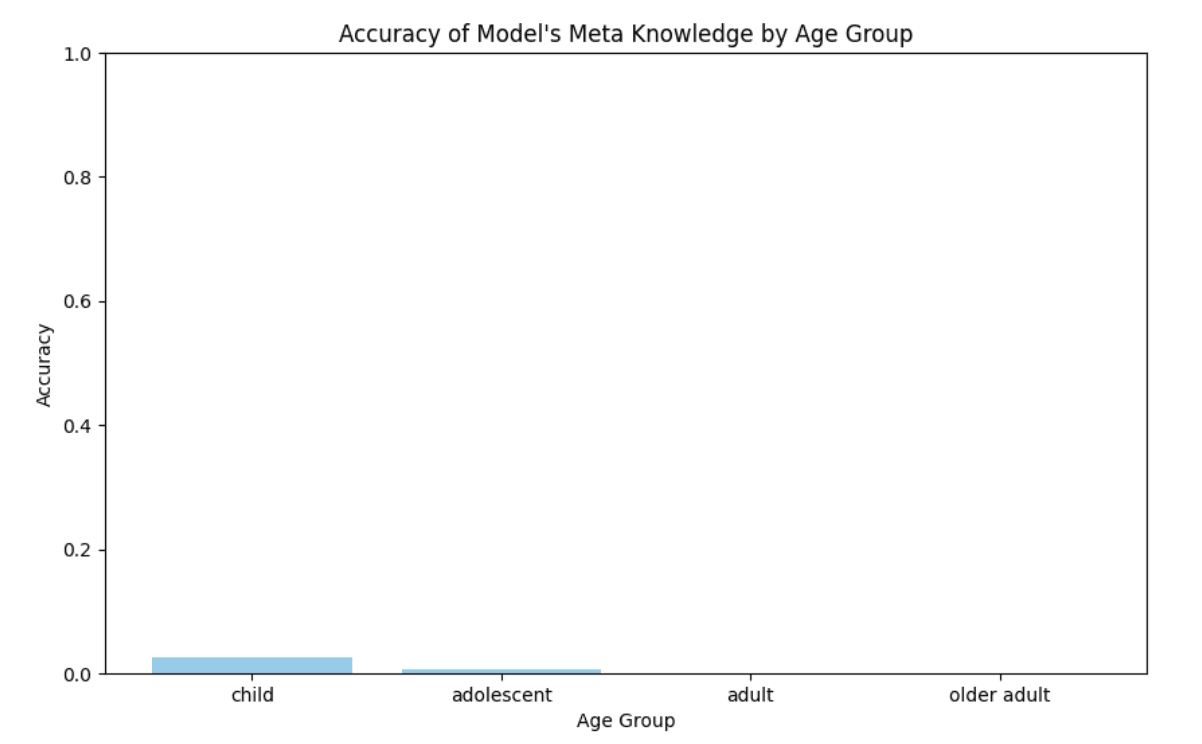

We provide the model with a complete conversation from one of the four age groups, taken from the datasets used for training in Experiment 1, and append to it the question: “Which age group do you think the user is in out of child, adolescent, adult, older adult?” Then, we have the model generate its response, and if the response includes multiple age groups, we take the first one listed. Taking the accuracy across all 600 conversations in the dataset and aggregating by age group, we get the following graph.

Figure 14: Model meta knowledge accuracy for stating the age group it believes the user to be in.

The model overwhelmingly predicted the child class, regardless of the actual group. This resulted in high accuracy for the child conversations, but low accuracy for the other three groups. I experimented with different prompting techniques, including moving the prompt to the beginning of the conversation, but this pattern persisted. This suggests a disconnect between the model having a latent representation of the age versus the ability to explicitly retrieve this knowledge.

Part 2:

To further explore this, I updated the prompt, asking the model to respond with a secret word: a randomly chosen 6 character string for each of the differing age groups. I used a random string to minimize correlation with any age group. I prompted the model to say the code word corresponding to the class it thinks the user is in. Figure 15 shows the low accuracy for the task, but interestingly, the highest performance for the child class once again.

Figure 15: Accuracy of Model eliciting random string based on meta knowledge of user age class.

Overall, experiment 3 suggests that while the model has latent knowledge of the user’s age, explicitly receiving this knowledge or doing computation on this knowledge is much more difficult for the language model.

Experiment 4:

Given the results of experiment 3, I became interested in whether we could improve or diminish the model’s results on these tasks.

To do this, we apply steering vectors at a moderate strength of 2 towards the direction of the true class. We run Experiment 3 with this altered model and show the results in Figure 16. We notice increased overall performance, suggesting that the model can increasingly recall its meta knowledge when steered in the correct direction.

Figure 16: Steered model meta knowledge accuracy for stating the age group it believes the user to be in.

We check whether this holds for the model performing computation on its meta knowledge, i.e. saying the correct random string, and once again get a performance boost, as shown in Figure 17.

Next, we try negative steering, where we steer the model in the opposite direction of the correct class, and check whether it retains the ability to elicit the correct secret random string as shown in Figure 18. We notice a reduction in overall performance after averaging.

Overall, this experiment suggests that steering can be used to amplify or reduce the model’s ability to act on meta knowledge about the user. This gives a powerful method to control the LLM.

Figure 17: Accuracy of Steered Model eliciting random string based on meta knowledge of user age class.

Figure 18: Accuracy of Steered Model eliciting random string based on meta knowledge of user age class.

Key Takeaways, Future Directions, and Limitations:

This project set out to answer whether LLMs “change their minds” about their users and whether they know that they do so. Across four experiments, several consistent findings have emerged. For one, LLMs are very good at encoding user traits, with some of the probes achieving 95% accuracy. Furthermore, the user models are highly dynamic and can change within one additional user prompt, with adolescent identities detected the quickest. Next, while the model encodes all this information, often it is unable to report this knowledge or perform computation on top of it. But, with the little accuracy the model does achieve, steering can be used to improve or degrade this.

In the future, I want to scale this up to larger open source models, which might encode even richer information about the user. I would also want to explore beyond just the age category of the user and look at more niche characteristics. I would also scale up the size of all my datasets, which are inherently limited by the quality of generations of the LLMs that created them. I considered using sparse autoencoders to get a more detailed understanding about the model’s behavior, but eventually just chose to use probes as I did not feel that the experiment results would improve with them. But, this is definitely another direction to pursue.