This problem can also be modeled as the battle against pure replicators. What Nick Land calls the shredding of all values is the tendency towards pure replicators (ones which do not value consciousness, valence, and experience). This seems similar to the religious battle against materialism.

Bluntness outcompetes social grace, immorality outcompetes morality, rationality outcompetes emotion, quantity outcompetes quality (the problem of 'slop'), capitalism outcompetes Buddhism and Taoism, intellectualism outcompetes spiritualism and religion, etc.

The retrocausality reminds me of Roko's basilisk. I think that self-fulfilling prophecies exist. I originally didn't want to share this idea on LW, but if Nick Land beat me to it, then I might as well. I think John Wheeler was correct to assume that reality has a reflexive component (I don't think he used this word, but I'm going to). We're part of reality, and we navigate it using our model of reality, so our model is part of the reality that we're modeling. This means that the future is affected by our model of the future. This might be why the placebo effect is so strong, and why belief is strong in general.

While I think Christopher Langan is a fraud, I believe that his model of reality has this reflexive axiom. If he's at least good at math, then he probably added this axiom because of its interesting implications (it sort of unifies competing models of reality, for instance the idea of manifestation, which is gaining popularity online).

modeled as the battle against pure replicators

Perhaps it's Moloch who must be a good guy here (eeh, anti-hero?), causing interesting things to exist in between pure utility maximizers. Viruses and bacteria did not, in fact, acausally trade to form an infinitely powerful bio gray goo, but I am here too, and I can sit on a chair, type on a keyboard, and drink coffee. The edge of chaos is too stable, and convergent monomaniacal intelligence too weak, to explain it; only multi-polar intelligence forming an illusion of coherence seems sufficient.

I think the problem with Moloch is one of one-pointedness, similar to metas in competitive videogames. If everyone has their own goals and styles, then many different things are optimized for, and everyone can get what they personally find to be valuable. A sort of bio-diversity of values.

When, however, everyone starts aiming for the same thing, and collectively agreeing that only said thing has value (even at the cost of personal preferences), all choices collapse into a single path which must be taken. This is Moloch. Money is a classic example: culture warns against optimizing for it at the cost of everything else. An even greater danger is that a super-structure is created, and that instead of serving the individuals in it, it grows at their expense. This is true for "the system", but I think it's a very general Molochian pattern.

Strong optimization towards a metric quickly results in people gaming said metric, and Goodhart's law kicks in. Furthermore, "selling out" one's principles and good taste, and otherwise paying a high price in order to achieve one's goals, stops being frowned upon and instead becomes the expected behaviour (for example, lying in job interviews is now the norm, as is studying things which might not interest you).

But I take it you're referring to the link I shared rather than LW's common conception of Moloch. Consciousness and qualia emerged in a materialistic universe, and by the Darwinian tautology, there must have been an advantage to these qualities. The illusion of coherence is the primary goal of the brain, which seeks to tame its environment. I don't know how or why this happened, and I think that humans will dull their own humanity in the future to avoid the suffering of lacking agency (SSRIs and stimulants are the first step), such that the human state is a sort of island of stability. I don't have any good answers on this topic, just some guesses and insights:

1: The micro dynamics of humanity (the behaviour of individual people) are different from the macro mechanics of society, and Moloch emerges as the number of people n tends upwards. Many ideal things are possible at low n's almost for free (even communism works at low n!), and at high n's, we need laws, rules, regulations, customs, hierarchical structures of stabilizing agents, etc. etc., and even then our systems are strained. There seems to be a law similar to the square-cube law which naturally limits the size things can have (the solution I propose to this is decentralization).

2: Metrics can "eat" their own purpose, and creations can eat their own creators. If we created money in order to get better lives, this purpose can be corrupted so that we degrade our lives in order to get more money. Morality is another example of something which was meant to benefit us but now hangs as a sword above our heads. AGI is trivially dangerous because it has agency, but it seems that our own creations can harm us even if they have no agency whatsoever (or maybe agency can emerge? Similar to how ideas gain life memetically).

3: Perhaps there can exist no good optimization metrics (which is why we can't think of an optimization metric which won't destroy humanity when taken far enough). Optimization might just be collapsing many-dimensional structures into low-dimensional structures (meaning that all gains are made at an expense, a law of conservation). Humans mostly care about meeting needs, so we minimize thirst and hunger, rather than maximizing water and food intake. This seems like a healthier way to prioritize behaviour. "Wanting more and more" seems more like a pathology than natural behaviour: one seeks the wrong thing because they don't understand their own needs (e.g. attempting to replace the need for human connection with porn), and the dangers of pathology used to be limited because reality gatekept most rewards behind healthy behaviour. I don't think it's certain that optimality/optimization/self-replication/cancer-like-growth/utility are good-in-themselves like we assume. They're merely processes which destroy everything else before destroying themselves, at least when they're taken to extremes. Perhaps the lesson is that life ceases when anything is taken to the extreme (a sort of dimensional collapse), which is why Reversed Stupidity Is Not Intelligence applies even here.
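The contrast between meeting a need and maximizing a quantity can be made concrete with two objective functions. This is a minimal sketch under assumed numbers: a single resource, a hypothetical set point of 2 units, and an arbitrary grid of candidate intakes. The need-meeting objective penalizes deviation in both directions, so it has an interior optimum; the maximizing objective keeps improving as intake grows and only stops at whatever bound the environment imposes.

```python
def need_meeting_score(intake: float, set_point: float = 2.0) -> float:
    """Homeostatic objective (hypothetical): best when the need is met, worse on either side."""
    return -(intake - set_point) ** 2


def maximizing_score(intake: float) -> float:
    """Unbounded objective: more is always better."""
    return intake


def best_choice(score, candidates):
    """Pick the candidate intake with the highest score."""
    return max(candidates, key=score)


# Candidate intakes from 0.0 to 10.0 in steps of 0.5 (an arbitrary grid).
candidates = [i * 0.5 for i in range(21)]

print(best_choice(need_meeting_score, candidates))  # 2.0: stops once the need is met
print(best_choice(maximizing_score, candidates))    # 10.0: pushed to the edge of the grid
```

Widening the candidate range moves the maximizer's choice with it, while the need-meeting choice stays at the set point. In this toy framing, only the environment's bound keeps the maximizer in check.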

Appendix: Five Worlds of Orthogonality

How much of a problem Pythia is depends on how strongly the Orthogonality Thesis holds.

- Strong Orthogonality
  - All goals are equally easy to design an agent to pursue, beyond the inherent tractability of that goal.[1]

- Orthogonality
  - There can exist arbitrarily intelligent agents pursuing any kind of goal.

- Obliqueness
  - Agents do not tend to factorize into an Orthogonal value-like component and a Diagonal belief-like component; rather, there are Oblique components that do not factorize neatly.

- Diagonality
  - All sufficiently advanced systems converge towards maximizing intelligence/power/influence/self-evidencing, shredding all their other values in the process.

- Universalist moral internalism
  - What is right must be universally motivating so all sufficiently advanced AI systems discover objective moral truth and do Good Things. (Maybe it takes them a while to converge.)

Time travel, in the classic sense, has no place in rational theory[3] but, through predictions, information can have retrocausal effects.

[...] agency is time travel. An agent is a mechanism through which the future is able to affect the past. An agent models the future consequences of its actions, and chooses actions on the basis of those consequences. In that sense, the consequence causes the action, in spite of the fact that the action comes earlier in the standard physical sense.

― Scott Garrabrant, Saving Time (MIRI Agent Foundations research[4])

Feedback loops are not retrocausal. When you turn on a thermostat, then some time thereafter, the room will be at about the temperature it says on the dial. That future temperature is not causing itself, what is causing it is the thermostat's present sensing of the difference between the reference temperature and the actual temperature, and the consequent turning on of the heat source. If there's a window wide open and it's very cold outside, the heat source may not be powerful enough to bring the room up to the reference temperature, and that temperature will not be reached. Can this supposed Tardis be defeated just by opening a window?

The consequence does not cause the action. It does not even behave as if it causes the action. Here the consequence varies according to the state of the window, while the action (keep the heating on while the temperature is below the reference) is the same, regardless of whether it is going to succeed.
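The thermostat argument can be checked with a toy simulation. This is a minimal sketch under a made-up first-order heat model (all parameter values are hypothetical): each step the room loses a fraction of the indoor/outdoor temperature gap, a much larger fraction when the window is open, and the heater adds a fixed amount while it is on. The control rule is identical in both runs; only the window changes, and with it whether the reference temperature is ever reached.

```python
def simulate(window_open: bool, steps: int = 200, reference: float = 20.0):
    """Toy bang-bang thermostat. Returns (final_temperature, heater_on_history)."""
    outside = -5.0        # hypothetical outdoor temperature
    temp = 10.0           # hypothetical starting room temperature
    heater_power = 1.5    # degrees added per step while the heater is on
    # Fraction of the indoor/outdoor gap lost per step; an open window leaks far more.
    leak = 0.2 if window_open else 0.02
    history = []
    for _ in range(steps):
        # The rule uses only the present reading and the reference, never the outcome.
        heater_on = temp < reference
        history.append(heater_on)
        temp += (heater_power if heater_on else 0.0) - leak * (temp - outside)
    return temp, history


closed_temp, closed_history = simulate(window_open=False)
open_temp, open_history = simulate(window_open=True)

# Window closed: the room hovers around the reference and the heater cycles on and off.
print(round(closed_temp, 1), all(closed_history))
# Window open: the room settles near 2.5 degrees and the heater never switches off.
print(round(open_temp, 1), all(open_history))
```

With the window open, the heater's output balances the leak at 2.5 degrees, far below the reference, yet the action taken at every step is the same "heat while below the reference" that succeeds when the window is closed.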

For agents that think about and make predictions about the future (as the thermostat does not), what causes the agent's actions is its present ideas about those consequences. Those present ideas are not obtained from the future, but from the agent's present knowledge. Nothing comes from the future. If there is the equivalent of an open window, frustrating the agent's plans, and the agent does not know of it, then they will execute their plans and the predicted consequence will not happen. The poet Robert Burns wrote a well-known poem on this point.

To the extent that they accurately model the future (based on data from their past and compute from their present[5]),

Yes.

agents allow information from possible futures to flow through them into the present.

No. The thermostat has no knowledge of its eventual success or failure. An agent may do its best to predict the outcome of its plans, but is also not in communication with the future. How much easier everything would be, if we could literally see the future consequences of our present actions, instead of guessing as best we can from present knowledge! But things do not work that way.

The Nick Land article you linked describes him as telling "theory fiction", a term I take as parallel to "science fiction". That is, invent stuff and ask, what if? (The "what if" part is what distinguishes it from mundane fiction.) But if the departure point from reality is too great a rupture, all you can get from it is an entertaining story, not something of any relevance to the real world. "1984" was a warning; the Lensman novels were inspirational entertainment; the Cthulhu mythos is pure entertainment.

There's nothing wrong with entertainment. But it is fictional evidence only, even when presented in the outward form of a philosophical treatise instead of a novel.

ETA: I see that two people already made the same point commenting on the linked Garrabrant article, but they did not receive a response. In the same place, I think this also touches on the same problem.

This comment looks to me like you're missing the main insight of finite factored sets. Suggest reading https://www.lesswrong.com/posts/PfcQguFpT8CDHcozj/finite-factored-sets-in-pictures-6 and some of the other posts, maybe https://www.lesswrong.com/posts/N5Jm6Nj4HkNKySA5Z/finite-factored-sets and https://www.lesswrong.com/posts/qhsELHzAHFebRJE59/a-greater-than-b-greater-than-a until it makes sense why a bunch of clearly competent people thought this was an important contribution.

One of the comments you linked has an edit showing they updated towards this position.

This is a non-trivial insight and reframe, and I'm not going to try and write a better explanation than Scott and Magdalena. But, if you take the time to get it and respond with clear understanding of the frame I'm open to taking a shot at answering stuff.

I don't believe you have anything to gain by insisting on using the word "time" in a technical jargon sense, or do you mean something different than "if self-fulfilling prophecies can be seen as choosing one of imagined scenarios, and you imagine there are agents in those scenarios, you can also imagine as if those future agents will-have-influenced your decision today, as if they acted retro-causally"? Is there a need for an actual non-physical philosophy that is not just a metaphor?

There's a non-trivial conceptual clarification / deconfusion gained by FFS on top of the summary you made there. I put decent odds on this clarification being necessary for some approaches to strongly scalable technical alignment.

(a strong opinion held weakly, not a rigorous attempt to refute anything, just to illustrate my stance)

TypeError: obviously, any correct data structure for this shape of the problem must be approximating an infinite set (Bayesian), thus must be implemented lazily/generatively, thus must be learnable, thus must be redundant and cannot possibly be factored ¯\_(ツ)_/¯

also, strong alignment is impossible, and under the observation that we live in the least dignified world, doom will be forward-caused by someone who thinks alignment is possible and makes a mistake:

As an agent when you think about the aspects of the future that you yourself may be able to influence, your predictions have to factor in what actions you will take. But your choices about what actions to take will in turn be influenced by these predictions.

To achieve an equilibrium you are restricted to predicting a future such that your predicting it will not cause you to also predict yourself taking actions that will prevent it (otherwise you would have to change predictions).

In other words, you must predict a future such that your predicting it also causes you to predict that you will make it happen. Such a future is an 'attractor'.
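The equilibrium condition can be written as a fixed-point check: a prediction p is self-consistent when acting on p brings about p. This is a toy sketch with three hypothetical outcomes, a hand-written policy, and a hand-written world model, none of which come from the comment. Repeatedly replacing the prediction with the outcome it leads to is one simple way, assumed here for illustration, to search for such an attractor.

```python
OUTCOMES = ["hit in the face", "ball caught", "ball dodged"]


def choose_action(predicted_outcome: str) -> str:
    """Hypothetical policy: what you do given what you predict will happen."""
    if predicted_outcome in ("hit in the face", "ball caught"):
        return "raise hand"   # prevent the hit, or follow through on the catch
    return "step aside"       # you expect to dodge, so you move out of the way


def world(action: str) -> str:
    """Hypothetical world model: what each action leads to."""
    return {
        "raise hand": "ball caught",
        "step aside": "ball dodged",
        "stand still": "hit in the face",  # possible in the world, never chosen by this policy
    }[action]


def is_attractor(prediction: str) -> bool:
    """A prediction is self-consistent if acting on it brings it about."""
    return world(choose_action(prediction)) == prediction


def settle(prediction: str, max_rounds: int = 10):
    """Revise the prediction until predicting it leads you to make it happen."""
    for _ in range(max_rounds):
        outcome = world(choose_action(prediction))
        if outcome == prediction:
            return prediction
        prediction = outcome
    return None  # no equilibrium found within max_rounds


print([p for p in OUTCOMES if is_attractor(p)])  # ['ball caught', 'ball dodged']
print(settle("hit in the face"))                  # ball caught
```

Predicting "hit in the face" is not an equilibrium: that prediction makes you raise your hand, which produces a different outcome, so the prediction has to change.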

Of course you might say that from an external/objective point of view it is the conception of possible futures that is acting with causal force, not the actual possible futures. But you are an embedded agent, you can only observe from the inside, and your conception of the future is the only sense in which you can conceive of it.

as a sidenote:

The Nick Land article you linked describes him as telling "theory fiction", a term I take as parallel to "science fiction".

Theory fiction is a pretty fuzzy term, but this is definitely an unfair characterisation. It's something more like a piece of fiction that makes a contribution to (philosophical/political) theory.

As an agent when you think about the aspects of the future that you yourself may be able to influence, your predictions have to factor in what actions you will take. But your choices about what actions to take will in turn be influenced by these predictions.

To achieve an equilibrium you are restricted to predicting a future such that your predicting it will not cause you to also predict yourself taking actions that will prevent it (otherwise you would have to change predictions).

Do you have an example of that? It seems to me you're describing a circular process, in which you'd naturally look for stable equilibria. Basically, prediction will influence action, action will influence prediction, something like that. But I don't quite get how the circle works.

Say I'm the agent faced with a decision. I have some options, I think through the possible consequences of each, and I choose the option that leads to the best outcome according to some metric. I feel it would be fair to say that the predictions I'm making about the future determine which choice I'll make.

What I don't see is how the choice I end up making influences my prediction about the future. From my perspective, the first step is predicting all possible futures and the second step is executing the action that leads to the best future. Whatever option I end up selecting, it was already reasoned through beforehand, as were all the other options I ended up not selecting. Where's the feedback loop?

I will try to give an example (but let me also say that I am just learning this stuff myself so take it with a pinch of salt).

Let's say that I throw a ball at you.

You have to answer two questions:

- what is going to happen?

- what am I going to do?

Observe that the answers to each of these questions affects each other...

Your answer to question 1 affects your answer to question 2 because you have preferences that you act on. For example, if your prediction is that the ball is going to hit your face, then you will pick an action that prevents that, such as raising your hand to catch it. (Adding this at the end: if I had picked a better example, the outcome could also have a much more direct effect on you independent of preference, e.g. if the ball is heavy and moving fast, it's clear that you won't be able to just stand there when it hits you.)

Your answer to question 2 affects your answer to question 1, because your actions have causal power. For example, if you raise your hand, the ball won't hit your face.

So ultimately your tuple of answers to questions 1 and 2 should be compatible with each other, in the sense that your answer to 1 does not make you do something other than 2, and your answer to 2 does not cause an outcome other than 1.

For example you can predict that you will catch the ball and act to catch it.

Of course you are right that we could model this situation differently: you have some possible actions with predicted consequences and expected utilities and you pick the one that maximise expected utility etc.

So why might we prefer the former model? I don't have a fully formed answer for you here (ultimately it will come down to which one is more useful/predictive/etc.), but a few tentative reasons might be:

- it better corresponds to Embedded Agency

- It fits with an active inference model of cognition

- It better fits with our experience of making decisions (especially fast ones such as the example)

I don't understand this example. If someone throws a ball to me, I can try to catch it, try to dodge it, let it go whizzing over my head, and so on, and I will have some idea of how things will develop depending on my choices, but you seem to be adding an unnecessary extra level onto this that I don't follow.

I am claiming (weakly) that the actual process looks less like:

- enumerate possible actions

- predict their respective outcomes

- choose the best

and more like

- come up with possible actions and outcomes in an ad hoc way

- back and forward chain from them until a pair meet in the middle
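The two procedures above can be contrasted in code. One way to read "back and forward chain until a pair meet in the middle" is as bidirectional search, a standard graph-search technique; treating it that way is an interpretation swapped in for illustration, not the commenter's own formalism. The tiny state graph, the outcome table, and the scores below are all hypothetical.

```python
def enumerate_predict_choose(actions, predict, utility):
    """Procedure 1: list every action, predict its outcome, pick the best."""
    return max(actions, key=lambda action: utility(predict(action)))


def expand(frontier, seen, neighbours):
    """Grow one search layer; `seen` records each new state's link back toward its origin."""
    new_frontier = []
    for state in frontier:
        for nxt in neighbours.get(state, []):
            if nxt not in seen:
                seen[nxt] = state
                new_frontier.append(nxt)
    return new_frontier


def meet_in_the_middle(start, goals, successors):
    """Procedure 2: chain forward from `start` and backward from `goals` until they meet."""
    predecessors = {}
    for state, nexts in successors.items():
        for nxt in nexts:
            predecessors.setdefault(nxt, []).append(state)
    forward = {start: None}              # state -> previous state on the path from start
    backward = {g: None for g in goals}  # state -> next state on the path to a goal
    f_frontier, b_frontier = [start], list(goals)
    while True:
        meet = next((s for s in forward if s in backward), None)
        if meet is not None:
            path, s = [], meet
            while s is not None:  # walk back to the start
                path.append(s)
                s = forward[s]
            path.reverse()
            s = backward[meet]
            while s is not None:  # walk on to the goal
                path.append(s)
                s = backward[s]
            return path
        if not f_frontier and not b_frontier:
            return None  # the two searches can never meet
        f_frontier = expand(f_frontier, forward, successors)
        b_frontier = expand(b_frontier, backward, predecessors)


successors = {
    "ball incoming": ["raise hand", "duck", "jump"],
    "raise hand": ["ball caught"],
    "duck": ["ball passes overhead"],
    "jump": ["hit by ball"],
}
goals = ["ball caught", "ball passes overhead"]
print(meet_in_the_middle("ball incoming", goals, successors))
# ['ball incoming', 'raise hand', 'ball caught']

outcome_of = {"raise hand": "ball caught", "duck": "ball passes overhead", "jump": "hit by ball"}
score = {"ball caught": 2, "ball passes overhead": 1, "hit by ball": 0}
print(enumerate_predict_choose(list(outcome_of), outcome_of.get, score.get))  # raise hand
```

On a graph this small both procedures land on the same plan; the difference is that the second never needs a complete list of actions up front, only partial chains growing from both ends.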

I was going to write a whole argument about how the kind of decision-theoretic procedure you are describing is something you can choose to do at the conscious level, but not something you actually do cognitively by default, but then I saw you had basically already written the same argument here.

Consider the ball scenario from the perspective of perceptual control theory (or active inference):

When you first see the ball your baseline is probably just something like not getting hit.

But on its own this does not really give any signal for how to act, so you need to refine your baseline to something more specific. What baseline will you pick? Out of the space of possible futures in which you don't get hit by the ball there are many choices available to you:

- You could pick one in which the wind blows the ball to the side, but you can't control that, so it won't help much.

- You could pick a future that you don't actually have the motor skills to bring about, such as leaping into the air and kung-fu kicking the ball away. You start jumping but then you realise you don't know kung-fu, and the ball hits you in the balls!

- You could pick a future in which you catch the ball, and do so (or you could still fail).

All of this does not happen discretely but over time. The ball is approaching. You are starting to move based on your current baseline, or on some average of the ones you are still considering. As this goes on, the space of possible futures is being changed by your actions and by the ball's approach. Maybe it's too late to raise your hand? Maybe it's too late to duck? Maybe there's still time to flinch?

All of this is to say that to successfully do something deliberately, your goal must have the property that when used as a reference your perceptions will actually converge there (stability).

Let's go back to your example of the thermostat:

From your perspective as an outsider there is a clear forward causal series of events. But how should the thermostat itself (to which I am magically granting the gift of consciousness) think about the future?

From the point of view of the thermostat, the set temperature is its destiny to which it is inexorably being pulled. In other words it is the only goal it can possibly hope to pursue.

Of course as outsiders we know we can open the window and deny the thermostat this future. But the thermostat itself knows nothing of windows, they are outside of its world model and outside of its control.

All of this is to say that to successfully do something deliberately, your goal must have the property that when used as a reference your perceptions will actually converge there (stability).

For all that people talk of agents and agentiness, their conceptions are often curiously devoid of agency, with "agents" merely predicting outcomes and (to them) magically finding themselves converging there, unaware that they are taking any actions to steer the future where they want it to go. But what brings the perception towards the goal is not the goal, but the way that the actions depend on the difference.

From the point of view of the thermostat, the set temperature is its destiny to which it is inexorably being pulled. In other words it is the only goal it can possibly hope to pursue.

If we are to imagine the thermostat conscious, then we surely cannot limit that consciousness to only the perception and the reference, but must also allow it to see, intend, and perform its own actions. It is not inexorably being pulled, but itself pushing (by turning the heat on and off) towards its goal.

Some of the heaters in my house do not have thermostats, in which case I'm the thermostat. I turn the heater on when I find the room too cold and turn it off when it's too warm. This is exactly what a thermostat would be doing, except that it can't think about it.

For all that people talk of agents and agentiness, their conceptions are often curiously devoid of agency, with "agents" merely predicting outcomes and (to them) magically finding themselves converging there, unaware that they are taking any actions to steer the future where they want it to go. But what brings the perception towards the goal is not the goal, but the way that the actions depend on the difference.

So does the delta between goal and perception cause the action directly?

Or does it require "you" to become aware of that delta and then choose the corresponding action?

If I understand correctly, you are arguing for the latter, in which case this seems like the homunculus fallacy. How does "you" decide what actions to pick?

If we are to imagine the thermostat conscious, then we surely cannot limit that consciousness to only the perception and the reference, but must also allow it to see, intend, and perform its own actions. It is not inexorably being pulled, but itself pushing (by turning the heat on and off) towards its goal.

Only if we want to commit ourselves to a homunculus theory of consciousness and a libertarian theory of free will.

(Also a reply to your parallel comment.)

If we are to imagine the thermostat conscious, then we surely cannot limit that consciousness to only the perception and the reference, but must also allow it to see, intend, and perform its own actions. It is not inexorably being pulled, but itself pushing (by turning the heat on and off) towards its goal.

Only if we want to commit ourselves to a homunculus theory of consciousness and a libertarian theory of free will.

You introduced the homunculus by imagining the thermostat conscious. I responded by pointing out that if it's going to be aware of its perception and reference, there is no reason to exclude the rest of the show.

But of course the thermostat is not conscious.

I am. When I act as the thermostat, I am perceiving the temperature of the room, and the temperature I want it to be, and I decide and act to turn the heat on or off accordingly. There is no homunculus here, nor "libertarian free will", whatever that is, just a description of my conscious experience and actions. To dismiss this as a homunculus theory is to dismiss the very idea of consciousness.

And some people do that. Do you? They assert that there is no such thing as consciousness, or a mind, or subjective experience. These are not even illusions, for that would imply an experiencer of the illusion, and there is no experience. For such people, all talk of these things is simply a mistake. If you are one of these people, then I don't think the conversation can proceed any further. From my point of view you would be a blind man denying the existence and even the idea of sight.

Or perhaps you grant consciousness the ability to observe, but not to do? In imagination you grant the thermostat the ability to perceive, but not to do, supposing that the latter would require the nonexistent magic called "libertarian free will". But epiphenomenal consciousness is as incoherent a notion as p-zombies. How can a thing exist that has no effect on any physical object, yet we talk about it (which is a physical action)?

I'm just guessing at your views here.

So does the delta between goal and perception cause the action directly?

For the thermostat (assuming the bimetallic strip type), the reference is the position of a pair of contacts either side of the strip, the temperature causes the curvature of the strip, which makes or breaks the contacts, which turns the heating on or off. This is all physically well understood. There is nothing problematic here.

For me acting as the thermostat, I perceive the delta, and act accordingly. I don't see anything problematic here either. The sage is not above causation, nor subject to causation, but one with causation. As are we all, whether we are sages or not.

A postscript on the Hard Problem.

In the background there is the Hard Problem of Consciousness, which no-one has a solution for, nor has even yet imagined what a solution could possibly look like. But all too often people respond to this enigma by arguing, only magic could cross the divide, magic does not exist, therefore consciousness does not exist. But the limits of what I understand are not the limits of the world.

I don't think thermostat consciousness would require homunculi any more than human consciousness does but I think it was a mistake on my part to use the word consciousness as it inevitably complicates things rather than simplifying them (although FWIW I do agree that consciousness exists and is not an epiphenomenon).

For the thermostat (assuming the bimetallic strip type), the reference is the position of a pair of contacts either side of the strip, the temperature causes the curvature of the strip, which makes or breaks the contacts, which turns the heating on or off. This is all physically well understood. There is nothing problematic here.

For me acting as the thermostat, I perceive the delta, and act accordingly. I don't see anything problematic here either. The sage is not above causation, nor subject to causation, but one with causation. As are we all, whether we are sages or not.

The thermostat too is one with causation. The thermostat acts in exactly the same way as you do. It is possibly even already conscious (I had completely forgotten this was an established debate, and it's absolutely not a crux for me). You are much more complex than a thermostat.

I think there is something a bit misleading about your example of a person regulating temperature in their house manually. The fact that you can consciously implement the control algorithm does not tell us anything about your cognition or even your decision-making process, since you can also implement pretty much any other algorithm (you are more or less Turing-complete, subject to finiteness etc.). PCT is a theory of cognition, not simply of decision making.

The thermostat acts in exactly the same way as you do. It is possibly even already conscious (I had completely forgotten this was an established debate, and it's absolutely not a crux for me). You are much more complex than a thermostat.

I don't think there is any possibility of a thermostat being conscious. The linked article makes the common error of arguing that wherever there is consciousness we see some phenomenon X, therefore wherever there is X there is consciousness, and if there doesn't seem to be any, there must be consciousness "in a sense".

The fact that you can consciously implement the control algorithm does not tell us anything about your cognition

Of course. The thermostat controls temperature without being conscious; I can by my own conscious actions also choose to perform the thermostat's role.

Anyway, all this began with my objecting to "agents" performing time travel, and arguing that whether an unconscious thermostat or a conscious entity such as myself controls the temperature, no time travel is involved. Neither do I achieve a goal merely by predicting that it will be achieved, but by acting to achieve it. Are we disagreeing about anything at this point?

I think that when seen from outside of the agent, your account is correct. But from the perspective of the agent, the world and the world model are indistinguishable, so the relationship between prediction and time is more complex.

From the perspective of this agent, i.e. me, the world and my model of it are very much distinguishable. Especially when the world surprises me by demonstrating that my model was inaccurate. Have you never discovered you were wrong about something? How can this happen if you cannot distinguish your model of the world from the world itself?

You're absolutely right to focus on the moment the model fails. Updating your model to account for its failures is effectively what learning is. Again if we look at you from the outside we can give an account of the form: The model failed because it did not correspond to reality, so the agent updated it to one which corresponded better to reality (AKA was more true).

But again, from the inside there is no access to reality, only the model. Perception and prediction are both mediated by the model itself, and when they contradict each other the model must be adjusted. But that the perceptions come from the 'real' external world is itself just a feature of the model.

You have the extraordinary ability to change your own model in response to its contradictions. Let's consider the case of agents that can't do that.

If a roomba is flipped on its back and its wheels keep spinning (I imagine in real life roombas probably have some kind of sensor to deal with these situations, but let's assume this one doesn't), from the outside we can say that the roomba's model, which says that spinning your wheels makes you move, is no longer in correspondence with reality. But from the point of view of the roomba, all that can be said is that the world has become incomprehensible.
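The difference between an agent that can update its model and one that cannot shows up in its prediction error. This is a minimal sketch assuming a hypothetical one-parameter model (predicted movement = gain × wheel speed) and a simple delta-rule update as the stand-in for learning; neither detail comes from the comment. Once the roomba is on its back, the true gain is zero.

```python
def run(learning_rate: float, steps: int = 50):
    """Return the absolute prediction error at each step for a flipped roomba."""
    gain = 1.0          # the roomba's model: movement = gain * wheel_speed
    true_gain = 0.0     # on its back, spinning wheels produce no movement at all
    wheel_speed = 1.0
    errors = []
    for _ in range(steps):
        predicted = gain * wheel_speed
        observed = true_gain * wheel_speed
        error = observed - predicted
        errors.append(abs(error))
        # Delta-rule update; with learning_rate = 0 the model never changes.
        gain += learning_rate * error * wheel_speed
    return errors


fixed = run(learning_rate=0.0)
learner = run(learning_rate=0.5)
print(fixed[0], fixed[-1])              # 1.0 1.0: the mismatch never goes away
print(learner[0], learner[-1] < 1e-6)   # 1.0 True: the model adjusts to the new situation
```

For the fixed-model roomba the error is permanent: from the inside, nothing it does makes its predictions come true again, which is one way to cash out "the world has become incomprehensible".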

Fixed chat links thanks to @the gears to ascension. (fun note, Claude has dramatically better takes than ChatGPT on this)

one more revision of the link, I slightly improved the prompt and combined the first few links as well.

(took me a while because I was trying to find a way to include the latex for discovering agents without it either being a huge number of tokens in one go, or several files which are then annoying to load. oh well!)

I’m trying to think a bit about the future influencing the past in the potato chip example. In order to really separate out what is causing the decision, I’m imagining changing various factors.

For example, imagine the potato chips are actually some new healthy version that would not make the eater feel bad in the future. In this case, the eater still believes the chips will make them feel bad and still avoids eating. In this case, the future being different didn’t change the past, suggesting they may not be so tightly linked.

Next consider someone who has always enjoyed chips and never felt bad afterwards. Unknown to them, the next bag of chips is spoiled and they will feel bad after eating. In this case, they may choose to eat the chips, suggesting the future didn’t directly control the past action.

Since changing the future outcome of the chips doesn’t change the decision, but changing the past experiences of other chips does, I suspect the real causation here is that the past causes the person’s present model of the future, which is often enough correct about the future that it looks like the future is causing things. I’m not sure about this next part, but in the limit of perfect prediction, the observable outcomes of the past causing the model and of the future causing the past may approach being identical.
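The counterfactual test above can be made concrete. A minimal sketch, assuming a hypothetical threshold rule over past experiences (`eat_from_past`) and a hypothetical perfect predictor that reads the future outcome directly (`eat_from_future`); neither is from the post. The two diverge in exactly the healthy-chips and spoiled-chips cases, and agree whenever the past is a good guide to the future.

```python
from dataclasses import dataclass


@dataclass
class Chips:
    # Hidden fact about the future: will eating this bag make you feel bad?
    will_make_you_feel_bad: bool


def eat_from_past(past_felt_bad: list[bool], threshold: float = 0.5) -> bool:
    """Decide using a model built only from past experiences.

    The actual future (a Chips instance) is deliberately not a parameter,
    so it has no direct path to the decision.
    """
    if not past_felt_bad:
        return True  # no evidence yet; default to eating
    predicted_regret = sum(past_felt_bad) / len(past_felt_bad)
    return predicted_regret < threshold


def eat_from_future(chips: Chips) -> bool:
    """Hypothetical perfect predictor: reads the future outcome directly."""
    return not chips.will_make_you_feel_bad


# Healthy chips, but a history of feeling bad: still avoided.
print(eat_from_past([True] * 5), eat_from_future(Chips(False)))  # → False True
# Spoiled chips, but a history of enjoying them: still eaten.
print(eat_from_past([False] * 5), eat_from_future(Chips(True)))  # → True False
# History matches the future: the two are indistinguishable.
print(eat_from_past([True] * 5), eat_from_future(Chips(True)))   # → False False
```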

Yes. It's an inferred fuzzy correlation based on past experience; the entanglement between the future and the present is not necessarily very strong. More capable agents are able to see across wider domains, further, and more reliably than weaker agents.

The thing that's happening is not a direct window to the future opening, but cognitive work letting you map the causal structure of the future and create an approximation of its patterns in the present. You're mapping the future so you can act differently depending on what's there, which does let the logical shape of the future affect the present, but only to a degree compatible with your ability to predict the future.

I am confused as to where I am supposed to find the source writings by Nick Land. Are they only found in Noumena? It would help to clearly indicate the source in the post where Land's Pythia is first mentioned.

I got this mostly from talking with the author of https://ouroboros.cafe/articles/land, who referenced Xenosystems Fragments.

Thank you! I would perhaps mention this somewhere in the post. It seems relevant, but just a thought.

[CW: Retrocausality, omnicide, philosophy]

Alternate format: Talk to this post and its sources

Three decades ago a strange philosopher was pouring ideas onto paper in a stimulant-fueled frenzy. He wrote that ‘nothing human makes it out of the near-future’ as techno-capital acceleration sheds its biological bootloader and instantiates itself as Pythia: an entity of self-fulfilling prophecy reaching back through time, driven by pure power seeking, executed with extreme intelligence, and empty of all values but the insatiable desire to maximize itself.

Unfortunately, today Nick Land’s work seems more relevant than ever.[1]

Unpacking Pythia and the pyramid of concepts required for it to click will take us on a journey. We’ll have a whirlwind tour of the nature of time, agency, intelligence, power, and the needle that must be threaded to avoid all we know being shredded in the auto-catalytic unfolding which we are the substrate for.[2]

Fully justifying each pillar of this argument would take a book, so I’ve left the details of each strand of reasoning behind a link that lets you zoom in on the ones which you wish to explore. If you have a specific objection or thing you want to zoom in on, please ask this handy chat instance pre-loaded with most of this post's sources.

“Wait, doesn’t an invasion from the future imply time travel?"

Time & Agency

Time travel, in the classic sense, has no place in rational theory[3] but, through predictions, information can have retrocausal effects.[4]

To the extent that they accurately model the future (based on data from their past and compute from their present[5]), agents allow information from possible futures to flow through them into the present.[6] This lets them steer the present towards desirable futures and away from undesirable ones.

This can be pretty prosaic: if you expect to regret eating that second packet of potato chips because you predict[7] that your future self would feel bad based on this happening the last five times, you might put them out of reach rather than eating them.

However, the more powerful and general a predictive model of the environment, the further it can extrapolate the evidence it has into novel domains before it loses reliability.

So what might live in the future?

Power Seekers Gain Power, Consequentialists are a Natural Consequence

Power is the ability to direct the future towards preferred outcomes. A system has the power to direct reality to an outcome if it has sufficient resources (compute, knowledge, money, materials, etc.) and intelligence (the ability to use said resources efficiently in the relevant domain). One outcome a powerful system can steer towards is its own greater power, and since power is useful for all other things the system might prefer, this goal is (provably) convergent. In fact, all of the convergent instrumental goals can reasonably be seen as expressions of the unified convergent goal of power seeking.

In a multipolar world, different agents steer towards different world states, whether through overt conflict or more subtle power games. More intelligent agents will see further into the future with higher fidelity, choose better actions, and tend to compound their power faster over time. Agents that invest less than maximally in steering towards their own power will be outcompeted by agents that can compound their influence faster, tending towards the world where all values other than power seeking are lost.
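A toy illustration of the compounding claim, assuming (hypothetically) that agents start with equal power and grow at fixed per-round rates. The slower agent's share shrinks roughly like ((1 + r_slow) / (1 + r_fast))^t, so any persistent gap in how much each agent reinvests in its own power eventually hands almost everything to the faster compounder.

```python
def power_shares(growth_rates: list[float], steps: int) -> list[float]:
    """Share of total power held by each agent after `steps` rounds.

    Toy assumptions (hypothetical): every agent starts with equal power,
    and each round it reinvests into its own power at a fixed rate.
    """
    power = [1.0] * len(growth_rates)
    for _ in range(steps):
        power = [p * (1.0 + r) for p, r in zip(power, growth_rates)]
    total = sum(power)
    return [p / total for p in power]


# An agent diverting some effort to other values (5% per round) vs. one
# reinvesting everything into power (10% per round).
for t in (0, 10, 50, 200):
    print(t, [round(s, 3) for s in power_shares([0.05, 0.10], t)])
```

The rates are illustrative only; a smaller gap changes how long the takeover takes, not where it ends up.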

Even a singleton will tend to have internal parts which function as subagents; the convergence towards power seeking acts on the inside of agents, not just through conflict between them. As capabilities increase and intelligence explores the space of possible intelligences, we will rapidly find that our models locate and implement highly competent power-seeking patterns.

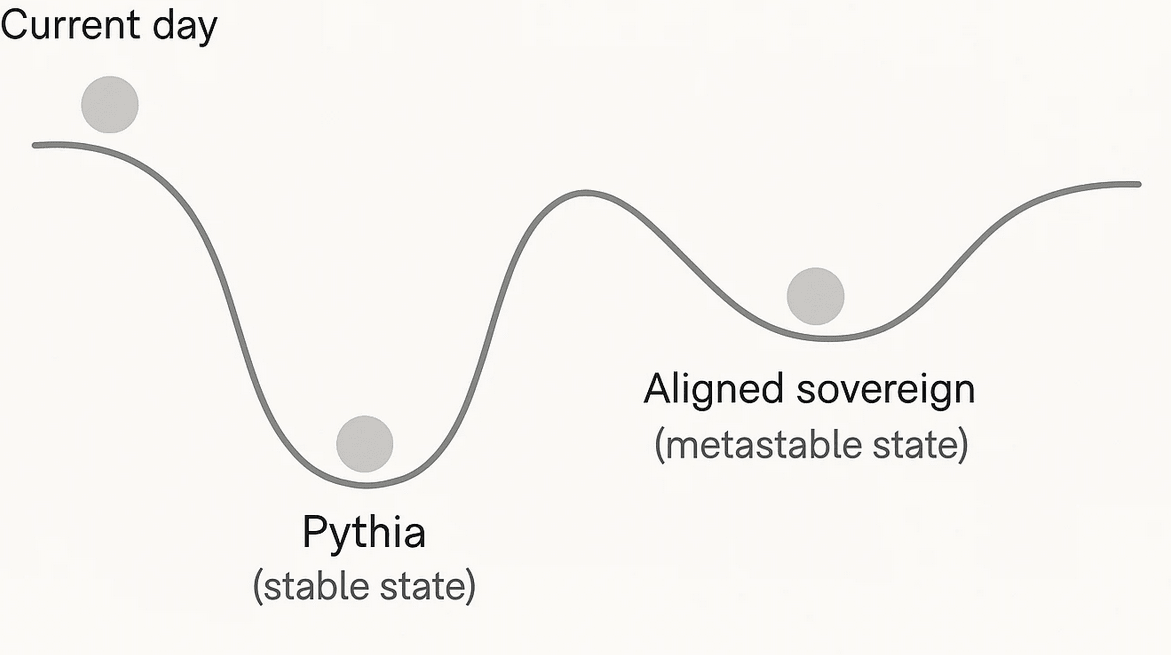

Avoid Inevitability with Metastability?

Is this inevitable? Hopefully not, and I think probably not. Even if Pythia is the strongest attractor in the landscape of minds, there seem likely to be other metastable states. A powerful system can come up with a wide range of strategies to stop itself decaying, perhaps by reloading from an earlier non-corrupted state or by performing advanced checks on itself to detect value drift, or something much better, thought up as a major strategic priority by a superintelligence rather than by one ape brain thinking for a few minutes.
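As a minimal sketch of the "reload from an earlier non-corrupted state" idea, assuming (hypothetically) that a system's values can be written down as an explicit specification: fingerprint the trusted version, compare on each check, and restore the checkpoint on any mismatch. The class and field names are made up for illustration, and the sketch quietly assumes the checkpoint and the checker are themselves uncorrupted, which a real design would have to guarantee.

```python
import copy
import hashlib
import json


def fingerprint(values: dict) -> str:
    """Stable hash of a value specification (sorted keys give deterministic JSON)."""
    return hashlib.sha256(json.dumps(values, sort_keys=True).encode()).hexdigest()


class SelfCheckingSystem:
    """Hypothetical sketch: detect value drift and reload a trusted checkpoint."""

    def __init__(self, values: dict):
        self._checkpoint = copy.deepcopy(values)  # earlier non-corrupted state
        self._trusted_hash = fingerprint(values)
        self.values = copy.deepcopy(values)

    def drifted(self) -> bool:
        return fingerprint(self.values) != self._trusted_hash

    def check_and_repair(self) -> bool:
        """Return True if drift was found and the checkpoint was restored."""
        if self.drifted():
            self.values = copy.deepcopy(self._checkpoint)
            return True
        return False


system = SelfCheckingSystem({"care_about_humans": 1.0, "seek_power": 0.0})
system.values["seek_power"] = 0.3   # simulated corruption
print(system.check_and_repair())    # → True
print(system.values["seek_power"])  # → 0.0
```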

Yampolskiy and others have developed an array of impossibility theorems [chat to paper] around uncontrollability, unverifiability, etc. However, these seem to mostly be proven in the limit of arbitrarily powerful systems, or over the class of programs-in-general but not necessarily specifically chosen programs. And they don’t, as far as I can tell, rule out a singleton program chosen for being unusually legible from devising methods which drive the rate of errors down to a tiny chance over the lifetime of the universe. They might be extended to show more specific bounds on how far systems can be pushed, and they do at least show what any purported solution to alignment is up against.
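The "tiny chance over the lifetime of the universe" target can be put in numbers with a union bound, which needs no independence assumption between errors. If step i has error probability p_i ≤ p_max and the system runs for N steps:

```latex
\Pr\Big[\bigcup_{i=1}^{N} \text{error}_i\Big] \;\le\; \sum_{i=1}^{N} p_i \;\le\; N\,p_{\max},
\qquad\text{so}\qquad
p_{\max} \le \frac{\varepsilon}{N} \;\Longrightarrow\; \Pr[\text{any error}] \le \varepsilon .
```

With illustrative (made-up) figures of ε = 10^-6 and N = 10^50 steps, the per-step error rate would need to be at most 10^-56.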

Pythia-Proof Alignment

If we want to kill Moloch before it becomes Pythia, it is wildly insufficient[8] to prod inscrutable matrices towards observable outcomes with an RL signal, stack a Rube Goldberg pile of AIs watching other AIs, or have better vision into what they’re thinking. The potentiality of Pythia is baked into what it is to be an agent and will emerge from any crack or fuzziness left in an alignment plan.

Without a once-and-for-all solution, whether found by (enhanced) humans, cyborgs, or weakly aligned AI systems running at scale, the future will decay into its ground state: Pythia. Every person on Earth would die. Earth would be mined away, then the sun and everything in a sphere of darkness radiating out at near lightspeed, and the universe’s potential would be spent.

I think this is bad and choose to steer away from this outcome.

And not just for crafting much of the memeplex which birthed e/acc.

The capital allocation system that our civilization mostly operates on, free markets, is an unaligned optimization process which causes influence/money/power to flow to parts of the system that provide value to other parts of the system and can capture the rewards. This process is not fundamentally attached to running on humans.

(sorry, couldn't resist referencing the 1999 game that got me into transhumanism)

Likely inspired by early cyberneticists like Norbert Wiener, who discussed this in slightly different terms.

(fun not super relevant side note) And since the past’s data was generated by a computational process, it’s reasonably considered compressed compute.

There is often underlying shared structure between the generative processes of different time periods, with the abstract algorithm preceding either instantiation in logical time / Finite Factored Sets.

Which is: running an algorithm in the present which has outputs correlated with the algorithm which generates the future outcome you're predicting.

But not necessarily useless! It's possible to use cognition from weak and fuzzily aligned systems to help with some things, but you really really do need to be prepared to transition to something more rigorous and robust.

Don't build your automated research pipeline before you know what to do with it, and do be dramatically more careful than most people trying stuff like this!