Thanks for the post! Do you think there is an amount of pretraining you can do such that no fine-tuning (on a completely non-complementary task, away from pre-trained distribution, say) will let you push out of that loss basin? A 'point of no return' s.t. even for very large values of LR and amount of fine-tuning you will get a network that is still LMC?

I think a point of no return exists if you only use small LRs. I think if you can use any LR (or any LR schedule) then you can definitely jump out of the loss basin. You could imagine just choosing a really large LR to basically reset to a random init and then starting again.

I do think that if you want to utilise the pretrained model effectively, you likely want to stay in the same loss basin during fine-tuning.

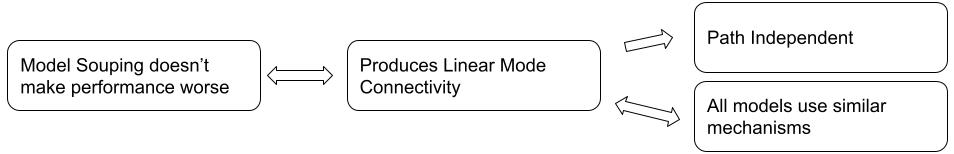

TL;DR: I claim that supervised fine-tuning of the largest existing LLMs is likely path-dependent (different random seeds and initialisations have an impact on final performance and model behaviour). The evidence comes from fine-tuning smaller LLMs: models pretrained closer to convergence produce fine-tuned models with similar mechanisms, while models pretrained far from convergence do not. Current LLMs are analogous to the latter, since they are very far from convergence at the end of training. This is supported by linking together existing work on model souping, linear mode connectivity, mechanistic similarity and path dependence.

Epistemic status: Written in about two hours, but thought about for longer. Experiments could definitely test these hypotheses.

Acknowledgements: Thanks to Ekdeep Singh Lubana for helpful comments and corrections, and discussion which led to this post. Thanks also to Jean Kaddour, Nandi Schoots, Akbir Khan, Laura Ruis and Kyle McDonell for helpful comments, corrections and suggestions on drafts of this post.

Terminology

Linking terminology together:

Overall this gives us this picture of properties a training process can have:

Current Results

Takeaway: BERT, and the base models in Learning to summarize from human feedback, are probably not trained to convergence, or even close to it. Here, supervised fine-tuning is path dependent - different random seeds can get dramatically different results (both for reward modelling and standard NLP fine-tuning). Models that are trained closer to convergence (T5, RoBERTa, the pretrained vision models in the model soup work) show more gains from model souping, and hence the supervised fine-tuning process produces LMC models and is therefore likely path-independent. Note that this is still only true for reasonable learning rates - if you pick a very large LR then you can end up with a model in a different loss basin, and hence not LMC and not mechanistically similar.
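As a rough illustration of why souping gains are read here as evidence of LMC, the sketch below averages toy "fine-tuned" parameter sets on a hand-made double-well loss. Everything in it is an assumption made for illustration: the loss, the parameter values, and the names `uniform_soup` and `toy_loss` are hypothetical and not taken from the model soup work, where the averaging is done over full checkpoints of the same architecture.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def uniform_soup(checkpoints: List[Params]) -> Params:
    """Average several parameter sets elementwise (a uniform 'soup')."""
    n = len(checkpoints)
    return {
        name: [sum(vals) / n for vals in zip(*(c[name] for c in checkpoints))]
        for name in checkpoints[0]
    }


def toy_loss(params: Params) -> float:
    """Hypothetical double-well loss with two basins, at w[0] = -1 and w[0] = +1."""
    w0, w1 = params["w"]
    return (w0 ** 2 - 1.0) ** 2 + w1 ** 2


# Two toy runs that ended up in the same basin (both near w[0] = +1).
same_basin = [{"w": [0.9, 0.1]}, {"w": [1.1, -0.1]}]
# Two toy runs that ended up in different basins.
different_basins = [{"w": [-1.0, 0.0]}, {"w": [1.0, 0.0]}]

# Souping helps in the first case and hurts badly in the second.
print("same basin soup loss:", toy_loss(uniform_soup(same_basin)))
print("different basins soup loss:", toy_loss(uniform_soup(different_basins)))
```

In this toy setting the soup only does well when the averaged runs share a basin, which is the intuition behind treating souping gains as a proxy for LMC.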

Speculation

Existing large language models are trained for only a single epoch because we have enough data, and this is the compute-optimal way to train these models. This means they’re not trained until convergence, and hence more like BERT than RoBERTa or T5. Hence, supervised fine-tuning these models will be a path-dependent process: different runs will get different models that are using different predictive mechanisms, and hence will generalise differently out-of-distribution. Larger learning rates may also lead to more path dependence. This provides a more fine-grained and supported view than Speculation on Path-Dependance in Large Language Models.

Speculative mechanistic explanation

The pretrained model infers many features which are useful for performing the fine-tuning task. There are many ways of utilising these features, and in utilising them during fine-tuning they will likely be changed or adjusted. There are many combinations of features that all achieve similar performance in-distribution (remember that neural networks can memorise random labels perfectly; in fine-tuning we’re heavily overparameterised), but they’ll perform very differently out-of-distribution.

If the model is more heavily trained during pre-training, it’s likely a single set of features will stand out as being the most predictive during fine-tuning, so will be used by all fine-tuning training runs. From a loss landscape perspective, the more heavily pre-trained model is deeper into a loss basin. If the fine-tuning task is at least somewhat complementary to the pretraining task, then this loss basin will be similar for the fine-tuning task, so different fine-tunes are likely to also reside in that same basin, and hence be LMC.
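The loss-basin picture can be made concrete with a small check. The sketch below assumes the usual reading of LMC, that the loss along the straight line between two sets of weights does not rise noticeably above the endpoint losses, and measures the largest such rise. The function names, the toy double-well loss and the example points are hypothetical, chosen only for illustration; a real check would evaluate the fine-tuning loss of actual interpolated networks.

```python
from typing import Callable, List, Sequence


def interpolate(theta_a: Sequence[float], theta_b: Sequence[float], alpha: float) -> List[float]:
    """Point on the straight line between two parameter vectors."""
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(theta_a, theta_b)]


def loss_barrier(
    loss_fn: Callable[[Sequence[float]], float],
    theta_a: Sequence[float],
    theta_b: Sequence[float],
    num_points: int = 11,
) -> float:
    """Largest amount by which the loss on the path exceeds the
    linear interpolation of the two endpoint losses."""
    loss_a, loss_b = loss_fn(theta_a), loss_fn(theta_b)
    barrier = 0.0
    for i in range(num_points):
        alpha = i / (num_points - 1)
        path_loss = loss_fn(interpolate(theta_a, theta_b, alpha))
        baseline = (1.0 - alpha) * loss_a + alpha * loss_b
        barrier = max(barrier, path_loss - baseline)
    return barrier


def double_well(theta: Sequence[float]) -> float:
    """Hypothetical loss with one basin around x = -1 and one around x = +1."""
    x, y = theta
    return (x ** 2 - 1.0) ** 2 + y ** 2


# Two "fine-tunes" in the same basin: no barrier, so they count as LMC here.
print("same basin barrier:", loss_barrier(double_well, [0.9, 0.1], [1.1, -0.1]))
# Two "fine-tunes" in different basins: a clear barrier, so not LMC.
print("different basins barrier:", loss_barrier(double_well, [-1.0, 0.0], [1.0, 0.0]))
```

How small a barrier has to be to count as LMC is a judgment call that depends on the noise in the loss estimate; the toy example sidesteps this by having either no barrier or a large one.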

Implications