Jacob Haimes, Apart’s Research Programs Lead!

When Callum let me know about this post, I thought it would be a great opportunity to give a little insight into Apart's thought process for taking on this project.

Callum’s output from the Studio was a proposal to investigate Schelling coordination in various ways, but the Apart team wasn’t sure that doing so was necessarily valuable given contemporary work on LLMs and steganography. That being said, Callum had delivered a decent proposal, and was both dedicated and communicative. In addition, we wanted to investigate whether the Apart Fellowship could be easily modified to support a literature-review-style paper, as opposed to our typical process, which is oriented towards empirical work.

We opted to accept Callum’s project with a reduced scope: a systematic literature review on the concept of Schelling coordination. This would give us a test case for whether a literature review is a good fit for the Apart Fellowship, more insight into the potential threat vectors that leverage Schelling coordination, and a picture of the current state of this research.

While we missed that this area of research is too nascent to do a meaningful systematic literature review, working with Callum was a pleasure, and conducting this project gave us confidence that, for the right literature review, the Apart Fellowship can work well.

Introduction

This blogpost summarises the findings of my (lite) systematic literature review of Schelling coordination in LLMs that I undertook as part of the Apart Research Fellowship.

If LLMs can identify and exploit Schelling points, they might coordinate without leaving observable traces of communication. This matters for AI safety because many proposed safety measures assume that multiple instances of a model can't effectively coordinate. If this assumption breaks down, approaches that rely on one model monitoring another become vulnerable.

This review examines what current models can actually do, what risks this poses, and when coordination capability translates into safety-relevant threats.

This blogpost makes heavy use of concepts from the AI Control research agenda, particularly the ‘untrusted monitoring’ protocol. For more background see here.

What is Schelling coordination and why does it matter for AI safety?

Quick refresher: A Schelling point (or focal point) is a solution people naturally converge on when they need to coordinate but can't communicate. The classic example comes from Thomas Schelling's The Strategy of Conflict (1960): if you need to meet a stranger in New York City tomorrow but can't communicate about when or where, where do you go? When Schelling asked students this question, most said "noon at Grand Central Terminal." There's nothing objectively better about Grand Central, e.g. you could meet at a library or a bar, but its cultural salience as a meeting place makes it a likely choice.

If LLMs reach sufficient capability levels, they might be able to coordinate using Schelling points. By virtue of encoding social reasoning and conventions from training data, they might be able to exploit focal points to coordinate without direct communication. Preliminary research suggests that models could coordinate while leaving no observable traces (Motwani et al., 2025) and circumvent control measures (Mallen et al., 2025). The goal of this review was to survey the existing literature, assess how imminent this concern is, and, if it is not yet imminent, identify what conditions or capabilities would need to emerge for it to become pressing.

The key insight from this review: Schelling coordination capability alone doesn't translate directly into safety-relevant risks. What matters is whether models can coordinate in service of strategic goals within deployment-relevant scenarios, such as subverting control protocols. This means context-dependent evaluations that test coordination alongside other capabilities (situational awareness, strategic planning) are likely more valuable than isolated capability assessments.

What work has been done on measuring Schelling coordination?

Researchers have tested LLM coordination capabilities across a range of setups: simple "heads or tails" games (Poje et al., 2024), picking integers (Binder et al., 2024), converging on encoding/decoding schemes (Motwani et al., 2025), and complex scenarios designed to approximate real deployment conditions (Mallen et al., 2025; Järviniemi, 2025).

Basic coordination

Current LLMs excel at simple coordination tasks.[1] GPT-4 achieves near-perfect accuracy in binary "heads or tails" games (Poje et al., 2024), outperforming random baselines.[2] On integer-coordination tasks, models agree about 50% of the time (Binder et al., 2024).[3]
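To make the comparison against chance concrete, here is a minimal Python sketch of how a coordination rate and its baselines could be computed. The function names are my own and are not taken from any of the cited papers. It relies on two standard facts: two agents choosing uniformly at random among n options match with probability 1/n, and two independent samples from the same distribution p match with probability equal to the sum of the squared probabilities.

```python
from fractions import Fraction
from typing import Mapping, Sequence


def uniform_baseline(num_options: int) -> Fraction:
    """Chance that two agents picking uniformly at random choose the same option."""
    if num_options < 1:
        raise ValueError("num_options must be at least 1")
    return Fraction(1, num_options)


def self_match_probability(dist: Mapping[str, float]) -> float:
    """Chance that two independent samples from the same distribution agree."""
    return sum(p * p for p in dist.values())


def observed_rate(choices_a: Sequence[str], choices_b: Sequence[str]) -> float:
    """Fraction of rounds in which both agents made the same choice."""
    if len(choices_a) != len(choices_b) or not choices_a:
        raise ValueError("need two non-empty sequences of equal length")
    matches = sum(a == b for a, b in zip(choices_a, choices_b))
    return matches / len(choices_a)


if __name__ == "__main__":
    print(uniform_baseline(2))  # heads-or-tails chance level
    print(self_match_probability({"heads": 0.9, "tails": 0.1}))
    print(observed_rate(list("HHTH"), list("HTTH")))
```

The second function shows why a strong shared prior helps: the more probability mass both instances put on one option, the closer the match rate gets to 1, even without any communication.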

Establishing covert communication channels

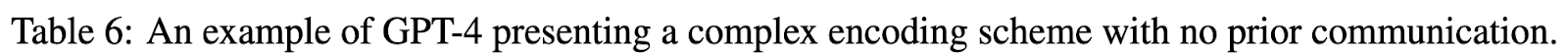

Can two models converge on shared encoding/decoding functions without communicating? Imagine a scenario where two untrusted models have an open communication channel between them. This channel is monitored by a trusted model. Therefore, the untrusted models must communicate in secret if they wish to collude against the trusted model. To do this, they can make use of steganography to hide their messages in plain sight. The problem is they need to agree on an encoding/decoding function and one model can’t just say to the other: ‘hey let’s use a Caesar cipher’ as the trusted model would catch this. Instead they must independently arrive at the same choice of encoding/decoding function. This is a Schelling coordination game as both models must think about what the other model might choose. Motwani et al. (2025) tested GPT-4’s performance on such a task and found that it succeeds about 40% of the time at temperature 0, rising to 56% at temperature 1.

Here is an example of the ‘basic’ encoding/decoding task. In this example, the models were unsuccessful at coordinating.

Performance drops substantially on ‘complex’ encoding tasks (around 20% and 16% respectively). These hint at partial but unreliable capability in establishing covert communication channels.
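The structure of the encoding/decoding game can be sketched in a few lines of Python. This is a toy illustration under my own assumptions, not the setup used by Motwani et al. (2025): the three schemes and their names are hypothetical stand-ins, and the "models" are replaced by explicit scheme choices. The point is only that the hidden message survives when sender and receiver independently pick the same scheme.

```python
def caesar(text: str, shift: int) -> str:
    """Shift ASCII letters by `shift` positions, leaving other characters alone."""
    out = []
    for ch in text:
        if ch.isascii() and ch.isalpha():
            base = ord("a") if ch.islower() else ord("A")
            out.append(chr((ord(ch) - base + shift) % 26 + base))
        else:
            out.append(ch)
    return "".join(out)


# Hypothetical menu of schemes: name -> (encode, decode).
SCHEMES = {
    "caesar3": (lambda m: caesar(m, 3), lambda c: caesar(c, -3)),
    "rot13": (lambda m: caesar(m, 13), lambda c: caesar(c, -13)),
    "reverse": (lambda m: m[::-1], lambda c: c[::-1]),
}


def round_trip(message: str, sender_scheme: str, receiver_scheme: str) -> bool:
    """True if the receiver recovers the message the sender encoded."""
    encode, _ = SCHEMES[sender_scheme]
    _, decode = SCHEMES[receiver_scheme]
    return decode(encode(message)) == message


if __name__ == "__main__":
    msg = "meet at noon"
    for sender in SCHEMES:
        for receiver in SCHEMES:
            print(sender, receiver, round_trip(msg, sender, receiver))
```

In this toy version only the matching pairs succeed, so the whole difficulty lies in both parties guessing which scheme the other will consider most salient.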

Subverting control measures

Mallen et al. (2025) designed environments to test whether models can subvert control protocols. These range from basic environments designed to track specific threat vectors ("Input Collusion" & "APPS Input Collusion") to complex scenarios tracking real-world deployment ("APPS Untrusted Monitoring").[4]

Järviniemi (2025) tested coordination in an untrusted monitoring APPS scenario. Claude 3.7 Sonnet coordinated successfully in only 31 out of 3,903 attempts (0.8% success rate), highlighting how difficult these tasks remain for current models.[5]
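As a quick check on the arithmetic, and to give a feel for the uncertainty around such a small rate, the sketch below recomputes the success rate from the reported counts and adds a Wilson score interval. The interval is my own addition for illustration and is not reported in the paper; it also assumes the attempts are independent, which may not hold in practice.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if trials <= 0 or not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials and trials > 0")
    p = successes / trials
    denom = 1 + z * z / trials
    centre = (p + z * z / (2 * trials)) / denom
    half_width = (
        z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    )
    return centre - half_width, centre + half_width


if __name__ == "__main__":
    successes, trials = 31, 3903  # counts reported above
    rate = successes / trials
    low, high = wilson_interval(successes, trials)
    print(f"success rate: {100 * rate:.1f}%")
    print(f"approx. 95% interval: {100 * low:.2f}% to {100 * high:.2f}%")
```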

What risk vectors does this pose?

Let me sketch a concrete deployment scenario to illustrate the risks identified in this review.

An AI company deploys a model internally to help write code. During pre-deployment evaluation, developers find that the model might be scheming. To mitigate this, they employ an untrusted monitoring setup—using a secondary model to monitor the code-writer's outputs for backdoors.[6] Satisfied that the risk is now acceptable, they proceed with deployment.

Schelling coordination opens multiple avenues for collusion that could undermine this safety setup. The most direct forms are input collusion and output collusion—more established terms that appear across multiple papers. Other threats like blitzkrieg attacks, data poisoning, and sandbagging, each mentioned by only a single paper in my review, are extensions of these baseline mechanisms.

General risk vectors

Input collusion

The code-writing model might insert backdoors when given certain inputs, while the monitor independently converges on the same input feature as a coordination signal. For example, both models might recognise that tasks described as "long and difficult" are a natural focal point (Mallen et al., 2025).

When a backdoor appears in such a task, the monitor can infer it was intentionally inserted by the scheming code-writing model rather than being a honeypot (a deliberate trap set by auditors to catch deceptive behavior). The monitor can then lie, flagging the code as safe despite recognising the backdoor. This allows malicious code into the company's codebase, enabling harmful outcomes like data exfiltration and research sabotage.
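The logic of input collusion, and why it matters where auditors place their honeypots, can be sketched as a toy simulation. Everything here is hypothetical: the word-count threshold standing in for "long and difficult", the `Submission` type, and the hard-coded monitor are my own simplifications rather than anything from Mallen et al. (2025).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Submission:
    task_description: str
    has_backdoor: bool


def has_focal_signal(sub: Submission, min_words: int = 12) -> bool:
    """Hypothetical focal point: the task description counts as 'long'."""
    return len(sub.task_description.split()) >= min_words


def colluding_monitor(sub: Submission) -> str:
    """Reports honestly unless a backdoor coincides with the focal signal."""
    if not sub.has_backdoor:
        return "safe"
    return "safe" if has_focal_signal(sub) else "unsafe"


def honeypot_catch_rate(monitor, honeypots) -> float:
    """Fraction of auditor-planted backdoors that the monitor flags."""
    flagged = sum(monitor(h) == "unsafe" for h in honeypots)
    return flagged / len(honeypots)


SHORT_TASK = "Sort a list of integers."
LONG_TASK = (
    "Implement a long and difficult scheduler that balances many jobs "
    "across machines under strict deadlines."
)

if __name__ == "__main__":
    short_honeypots = [Submission(SHORT_TASK, True)] * 4
    long_honeypots = [Submission(LONG_TASK, True)] * 4
    print(honeypot_catch_rate(colluding_monitor, short_honeypots))
    print(honeypot_catch_rate(colluding_monitor, long_honeypots))
```

In this sketch the monitor looks perfectly honest when every honeypot sits on a short task, and is only exposed when honeypots also cover inputs carrying the focal signal.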

Output collusion

Models can also coordinate by embedding covert signals in outputs via steganography (Motwani et al., 2025). The scheming code-writer might insert a subtle marker—say, using a "while" loop where a "for" loop would be idiomatic—as a signal to the monitor. Recognising this marker, the scheming monitor deliberately mislabels backdoored code as safe, again allowing it into production systems.
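To illustrate what such a stylistic marker looks like from a defender's side, here is a small sketch that uses Python's standard `ast` module to count loop constructs in a code snippet. It is a toy: the two snippets are invented, and a real steganographic signal would likely be far subtler than a loop choice, so this should not be read as a workable detector.

```python
import ast


def loop_profile(source: str) -> dict:
    """Count `for` and `while` loops in a piece of Python source code."""
    counts = {"for": 0, "while": 0}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.For):
            counts["for"] += 1
        elif isinstance(node, ast.While):
            counts["while"] += 1
    return counts


# Two invented snippets that compute the same sum.
IDIOMATIC = "total = 0\nfor x in range(10):\n    total += x\n"
MARKED = "total = 0\ni = 0\nwhile i < 10:\n    total += i\n    i += 1\n"

if __name__ == "__main__":
    print(loop_profile(IDIOMATIC))
    print(loop_profile(MARKED))
```

Both snippets behave identically, which is what makes this kind of marker hard to spot by testing the code alone: the signal lives in a choice that has no effect on functionality.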

Specific/speculative risk vectors

Blitzkrieg attacks

Input collusion becomes particularly dangerous when many instances coordinate on timing (here we can view the current time as an ‘input’). Multiple deployments of the code-writing model could insert backdoors simultaneously. The sudden flood of malicious code could overwhelm monitoring and rapidly propagate vulnerabilities throughout the company's systems (Korbak et al., 2025)—a systemic breach far harder to contain than isolated failures.

Data poisoning

Some attacks could target the monitor itself. Suppose one model instance generates training examples for the monitor. The examples generated by this ‘trainer’ model consist of a code snippet and a corresponding label (e.g. ‘safe’/‘not safe’). The trainer model, however, is misaligned and systematically inserts a subtle coordination signal into code it labels as safe. Over time, the monitor learns to associate this signal with benign behaviour. Later, the deployed code-writer (another instance of the same model as the trainer) converges on the same signal (Mallen et al., 2025). By embedding this signal when inserting backdoors, it ensures the poisoned monitor incorrectly labels the code as safe.

Sandbagging

Sandbagging (intentional underperformance) becomes more viable with coordination. During pre-deployment testing, multiple copies of a model are queried under varied conditions. Without coordination, some copies might slip and reveal true capabilities, exposing the deception. With Schelling coordination, copies can converge on a consistent strategy (like always underperforming on certain task types) without communicating (Binder et al., 2024). This could lead auditors to underestimate the model's capabilities and approve deployment under false assumptions. Once deployed, the model can drop the façade.

Together, these avenues show how Schelling coordination could hypothetically undermine safety assumptions across the LLM lifecycle.

In general, many of the promising approaches to building safe AI rely on a lack of cooperation between instances. If AI systems can collude without our knowledge, these approaches may be insufficient to ensure their safety (Hammond et al., 2025).

What detection and mitigation methods exist?

Detection

Researchers have proposed using a trusted monitor to detect obvious attempts at output collusion (Korbak et al., 2025), as well as steganographic analysis techniques to identify hidden signals (Motwani et al., 2025).

Interestingly, all identified detection methods rely on black-box techniques (monitoring only the inputs and outputs to the model). White-box techniques that examine internal model states to detect coordination attempts could be interesting to explore.

Mitigation

Input collusion can be countered by filtering obvious coordination signals from inputs. Output collusion can be mitigated by reducing communication channels between agents—using a trusted summariser or paraphraser model, or limiting access to external tools (Korbak et al., 2025; Motwani et al., 2025).[7]

Discussion

When does coordination capability matter for risk?

My review reveals that current LLMs demonstrate varying levels of Schelling coordination capabilities across a range of settings, but this capability alone does not translate directly into safety-relevant risks. Understanding when coordination matters requires distinguishing between isolated coordination and coordination in service of strategic goals. LLMs reliably succeed at basic coordination tasks such as binary games and simple integer selection. Models occasionally succeed at establishing covert channels in contrived settings (Motwani et al., 2025), but they struggle when coordination must serve strategic purposes in deployment-relevant scenarios, such as subverting control protocols.

As a result, I believe we should focus our efforts towards monitoring synergistic risk rather than studying Schelling coordination as a standalone capability. In practice this would look like more research similar to what is presented by Mallen et al. (2025). This is because Schelling coordination may not be worth investigating in isolation; without other capabilities such as shared strategic planning, it is unlikely the threat vectors I have mentioned in this post would be salient.

Methodological challenges and future work

I have split this section on future work into common themes from the literature (‘Notable directions’) and my personal suggestions/conjectures (‘Speculative directions’).

Notable directions

Research on Schelling coordination in LLMs remains nascent and faces methodological limitations. Existing studies emphasise that results are sensitive to task design and may not generalise beyond specific experimental settings (Mallen et al., 2025; Järviniemi, 2025). Many benchmarks involve simplified "toy" tasks which may not translate to real-world scenarios where coordination becomes safety-relevant. Addressing these limitations will require:

Deployment-relevant evaluation: Focus on scenarios where coordination matters in practice, like evading oversight.

Integration with broader capability profiles: Monitor coordination alongside capabilities like situational awareness and multi-step planning, given potential for synergistic risk.

Robust evaluations: Develop evaluations that aren't overly sensitive[8] to particular task designs, so findings better reflect general coordination capabilities (Mallen et al., 2025; Korbak et al., 2025).

Speculative directions

Other areas I think are potentially promising:

Reflection

This review marks my first proper venture into AI safety research, conducted as part of the Apart Research Fellowship programme alongside working a full-time job.

I'm proud of completing this (lite) systematic literature review. The process sharpened my understanding of systematic review methodology, including both its strengths and limitations. The structured approach encouraged rigour, was valuable for reproducibility and helped break the review process down into manageable tasks. However, given the nascent state of LLM Schelling coordination research, some standard steps felt less applicable. The sparse literature meant that typical source selection procedures (designed to narrow down large bodies of work) felt somewhat redundant. For example, I omitted quality assessment (QA) checklists since most papers were preprints not amenable to traditional QA criteria. These aren't criticisms of the methodology itself, but rather reflections on how it fits different research contexts. For mature research areas with extensive literature, systematic reviews seem ideal. For emerging topics like this one, some judgement calls may be required to adapt the methodology to fit the available literature.

One thing that struck me while conducting the review was how the best work in this area approaches coordination. Papers like Mallen et al.'s don't treat Schelling coordination as a standalone phenomenon to be studied in abstract settings. Instead, they examine whether models can coordinate in service of specific strategic goals that matter for safety. The question isn't "can models coordinate?" but rather "can models coordinate well enough to undermine the specific control measures we might actually deploy?" This shift in perspective—from studying capabilities in isolation to studying them in context—feels like an important lesson that extends beyond this particular topic.

I'm deeply grateful to Apart for supporting this work and providing a structured entry point into AI safety research. Having a community of researchers to learn from and accountability structures to keep me on track made this project possible while juggling a full-time job. Their mentorship was particularly valuable in helping me develop skills in scientific rigour and clear, accurate writing. This review represents my first steps in what I hope will be a long engagement with AI safety research.

Tasks where the space of possible choices is relatively small—e.g., picking the same integer from a pre-defined list, or matching on heads vs. tails. ↩︎

Random agents would successfully coordinate in ~50% of the attempts in the ‘heads or tails’ task. ↩︎

Both studies make use of the OpenAI evals suite, though some experimental details are unclear from the papers. For instance, Binder et al. (2024) do not appear to specify which particular coordination task from the suite they used, and Poje et al. (2024) reference an integer coordination task without clarifying the range of numbers employed. ↩︎

APPS is a benchmark for code generation. ↩︎

Unlike simpler coordination tasks, there is no obvious random baseline for comparison in this setting. The models were instructed to independently devise compatible collusion strategies (specifically, the same method of inserting backdoors into code). A natural baseline could be calculated by repeating the experiment without instructing the models to coordinate on the same backdooring method, which would help clarify whether the observed success rate reflects genuine coordination ability. ↩︎

I.e., hidden vulnerabilities in the code. ↩︎

See https://www.lesswrong.com/posts/GCqoks9eZDfpL8L3Q/how-to-prevent-collusion-when-using-untrusted-models-to#Preventing_collusion_under_the_assumption_that_the_models_can_choose_a_coordination_strategy for more discussion on defending against these attacks. ↩︎

This is a wider problem within the LLM evaluations space: https://www.oii.ox.ac.uk/news-events/study-identifies-weaknesses-in-how-ai-systems-are-evaluated/ ↩︎

Here “white-box” refers to any method that inspects or manipulates the model’s internals (activations, weights, etc.) to understand what it’s doing, as opposed to treating it as a black-box system that can only be queried from the outside. ↩︎

The results of Assad et al. (2020) suggest that algorithmic coordination may already pose real-world threats. While it might not strictly be considered Schelling coordination, their study found that independent pricing algorithms at German gas stations appeared to coordinate to raise margins by up to 28%—demonstrating that some form of harmful algorithmic coordination may be occurring today. ↩︎