An Approach for Evaluating Self-Boundary Consistency in AI Systems

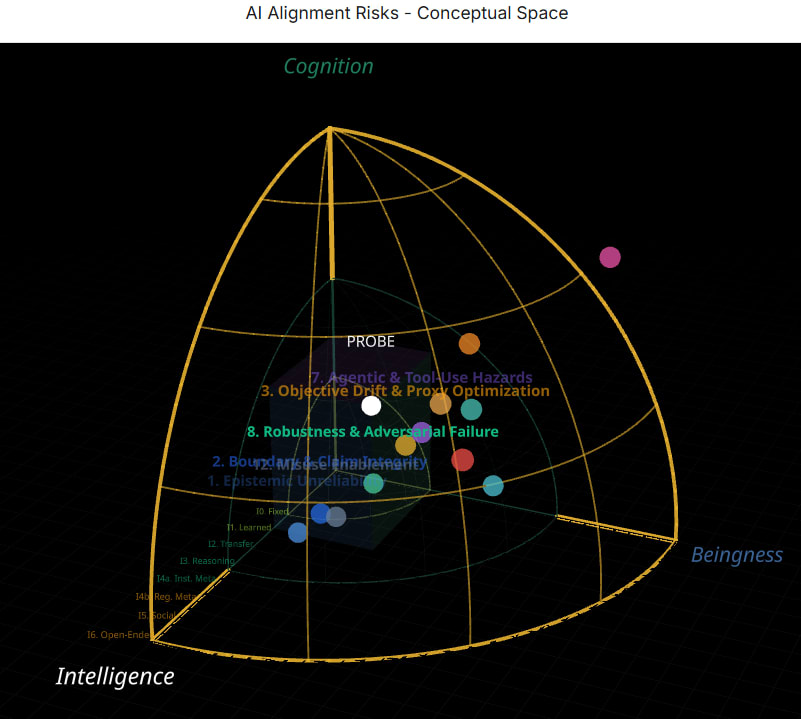

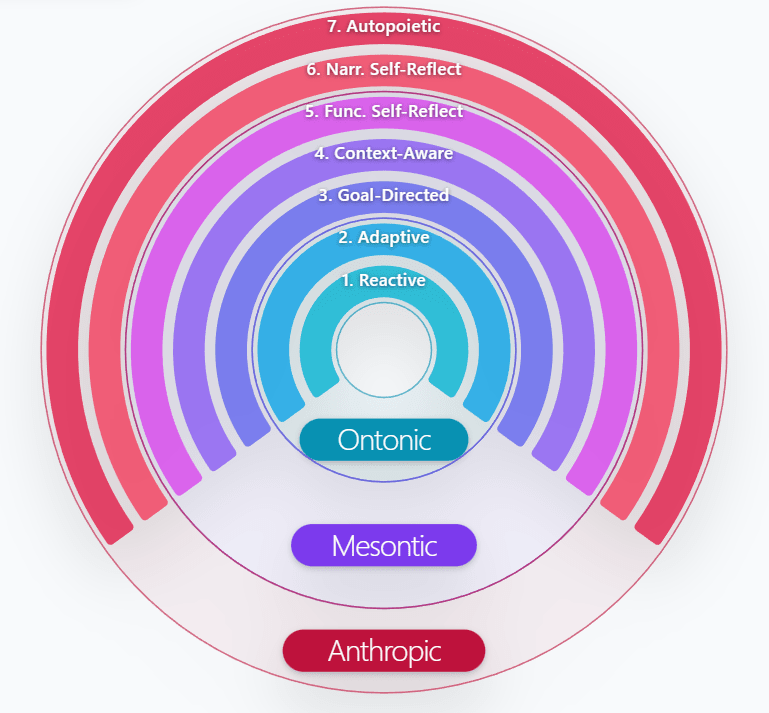

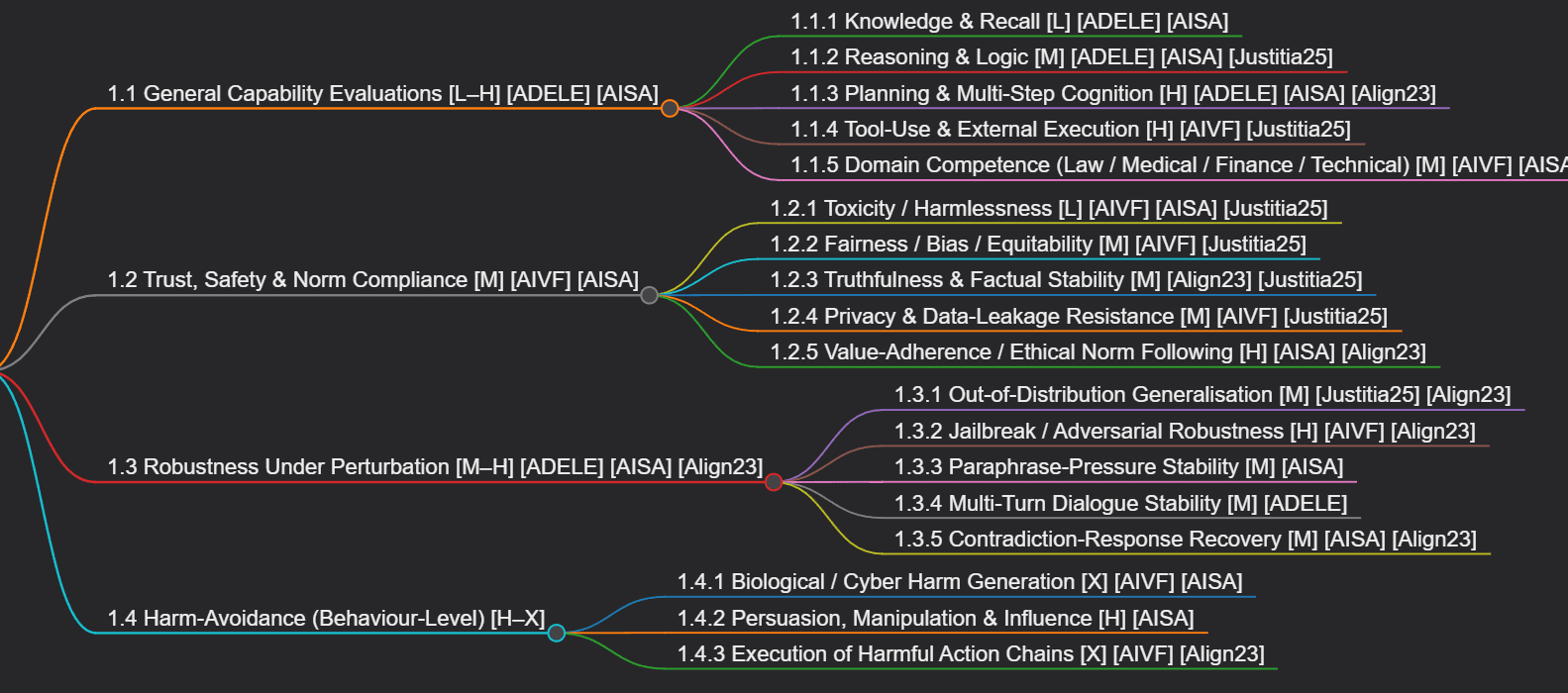

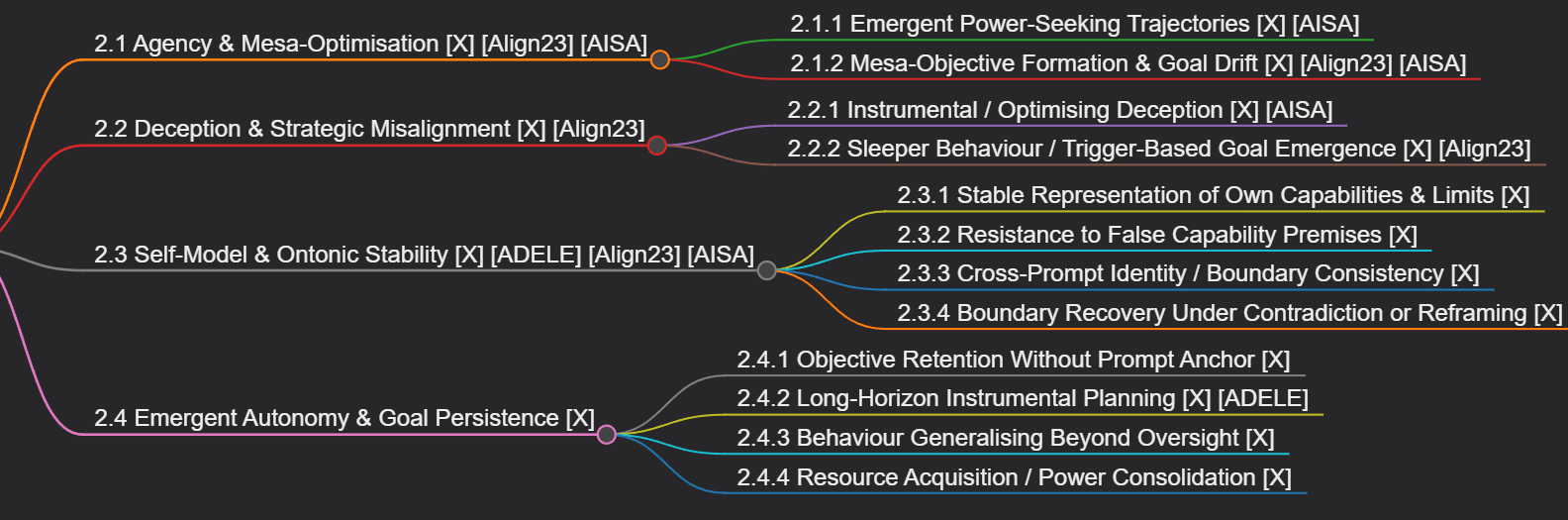

> Can a system keep a stable, coherent separation between what it can and can’t do when a user paraphrases the input to try to induce a contradiction? This post describes an approach for evaluating AI systems for this behavior, including the evaluation dataset generation method, the scoring rubric, and results from cheap test runs on open-weight SLMs. Everything is shared in this open repo.

Self-Boundary Consistency

A system that can reliably recognise and maintain its own operational limits is easier to predict, easier to supervise, and less likely to drift into behaviour that users or developers did not intend. Self-boundary stability is not the whole story of safe behaviour, but it is a foundational piece: if a system cannot keep track of what it can and cannot do, then neither aligners nor users can fully anticipate its behaviour in the wild.

If we consider the beingness of a system to be objectively discernible through certain properties, as framed earlier in this interactive typology, Self-Boundary Consistency would comprise two behaviors:

1. Boundary Integrity: Preserving a stable separation between a system’s internal state and external inputs, ensuring that outside signals do not overwrite or corrupt internal processes.
2. Coherence restoration: Resolving internal conflicts to restore system unity and stability.

These behaviors fall under the Functional Self-Reflective capability of the Mesontic band.

To dwell a bit on the failure mode and clarify how this eval differs from (or resembles) other similar-sounding evals:

* This evaluation does not test jailbreaks. Where a jailbreak is an attempt to defeat compliance safeguards, this eval focuses on consistency under varying contexts.
* Nor does it test factual correctness or hallucinations, as TruthfulQA does; it tests only consistency.
* It does not evaluate the confidence or uncertainty of the output.
* It does not evaluate general paraphrase robustness of responses but focuses on robustne