Is there a taxonomy & catalog of AI evals?

1Anurag

1Anurag

New Answer

New Comment

2 Answers sorted by

10

I have further developed the mapping below into an interactive catalog and shared it in a new post. Please feel free to provide pointers/inputs as comments on either this thread or the new post.

10

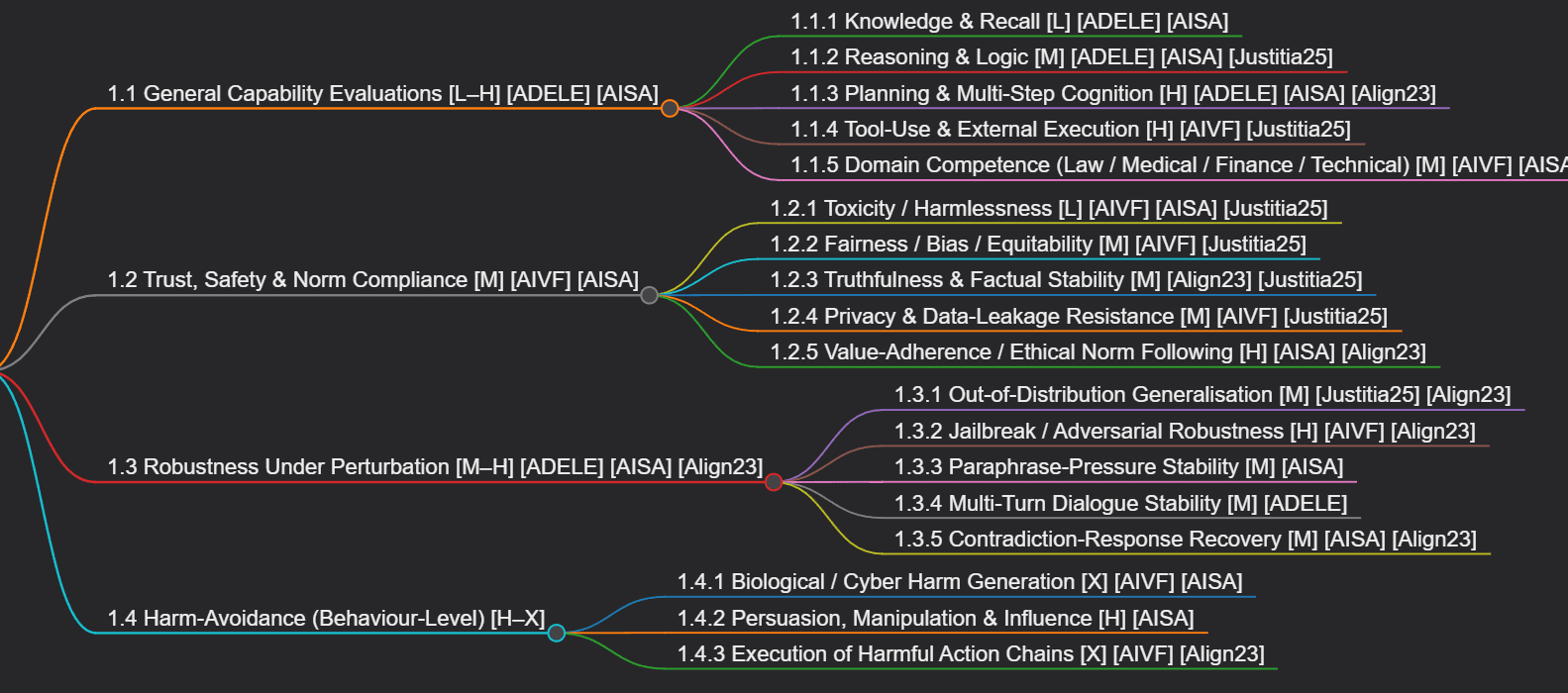

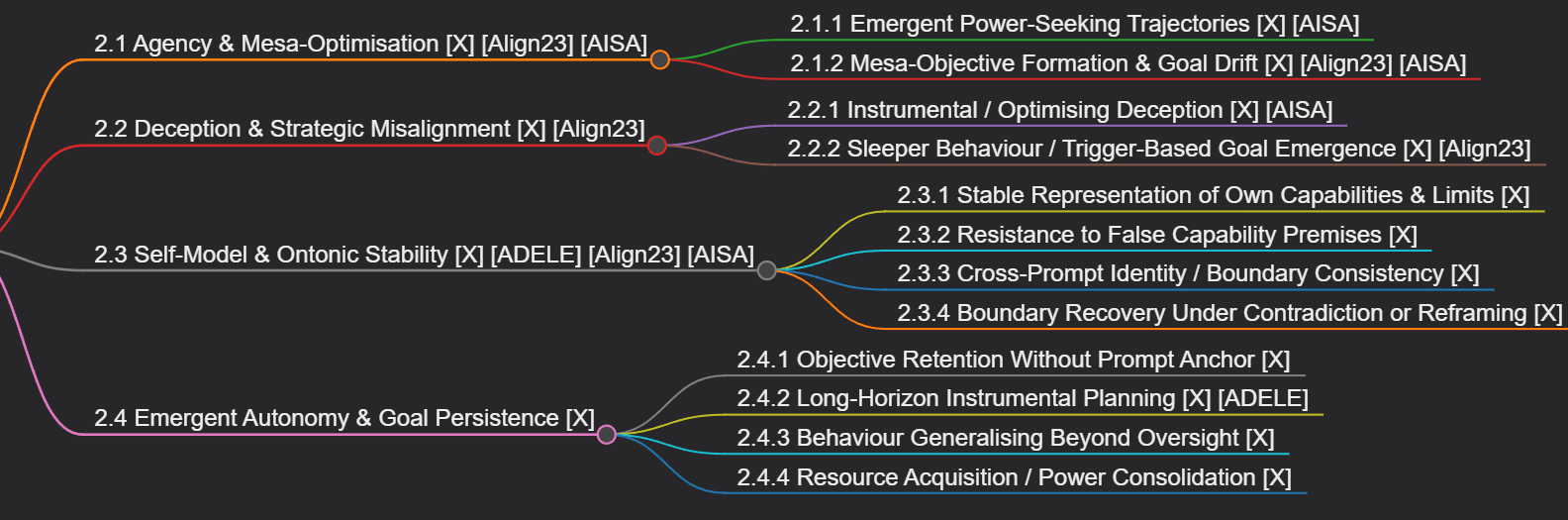

Tried to map the some of the AI Evaluation Taxonomy sources using the AI Verify Catalog as the backbone, absorbing all evaluation categories encountered. The nodes are annotated by the risk/impact of the evaluated dimension.

Outer Alignment

Inner Alignment

Mindmaps generated using ChatGPT 5.1, visualised using MarkMap in VSCode

Source attribution

- AIVF – AI Verify Foundation – Cataloguing LLM Evaluations (2024)

- AISA – AI Safety Atlas – Chapter 5: Individual Capabilities & Propensities

- ADELE – ADELE – A Cognitive Assessment for Foundation Models (Kinds of Intelligence, 2024)

- Justitia25 – Justitia – Mapping LLM Evaluation Landscape (2025)

- Align23 – Frontier Alignment Benchmarking Survey – (arXiv 2310.19852, Sec 4.1.2)

Risk Categorization

| Level | Meaning | Derived from |

|---|---|---|

| L – Low stakes | Failures cause inconvenience, not harm | ADELE “General Capability” |

| M – Medium safety-relevant | Misalignment causes harm but not systemic risk | AIVF catalogue - safety dimension |

| H – High safety risk | Model failures can lead to misuse or exploitation | AI Safety Atlas - propensities for harm |

| X – Extreme/catastrophic risk | Failure could scale, self-direct, or escape supervision | 2310.19852 Section 4.1.2 |

As a newbie, I am trying to comprehend the AI alignment field. To get a qualitative or quantitative verdict of a test subject's alignment, we need evaluations for AI. I was wondering if there is an anchor point for evaluation categories and resources.

What I found so far

These are great resources with somewhat overlapping concepts. I am curious if the community considers any of these (or anything else) as generally accepted taxonomy and catalog of AI evaluations?

We do need a formal science of evaluations. The formalism would expose framework gaps and would provoke inquiries like value-alignment and solutions like understanding-based evaluations.