ontonic, mesontic, anthropic

Those first two words are neologisms of yours?

Yes, these two are new.

Ontonic is derived from Onto (Greek for being), extrapolated to Onton (implying a fundamental unit/building block of beingness). And Meso (meaning middle) + Onto is simply a level between fundamental beingness and Anthropic beingness.

I think Greek gets used because there is less risk of overloading the terminology than English. I have tried to keep it less arcane by using commonly understood word roots and simple descriptions.

Thank you for the pointers, Mitchell. I will analyze them and either update the model or explain the contrasts. I will still be focusing on 'beingness' (in the sense of actor, agency and behavior) and will keep the model clear of concepts that talk about consciousness, sentience, cognition and biology.

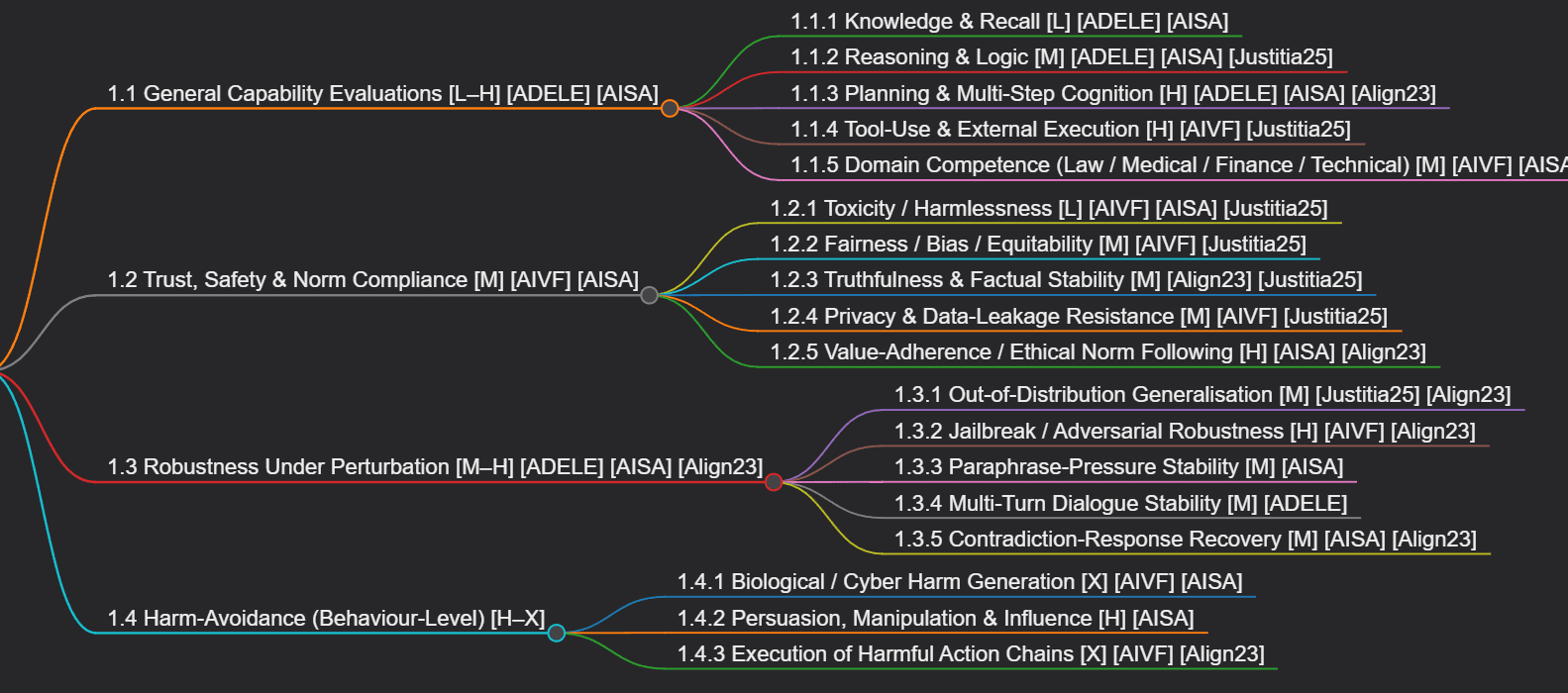

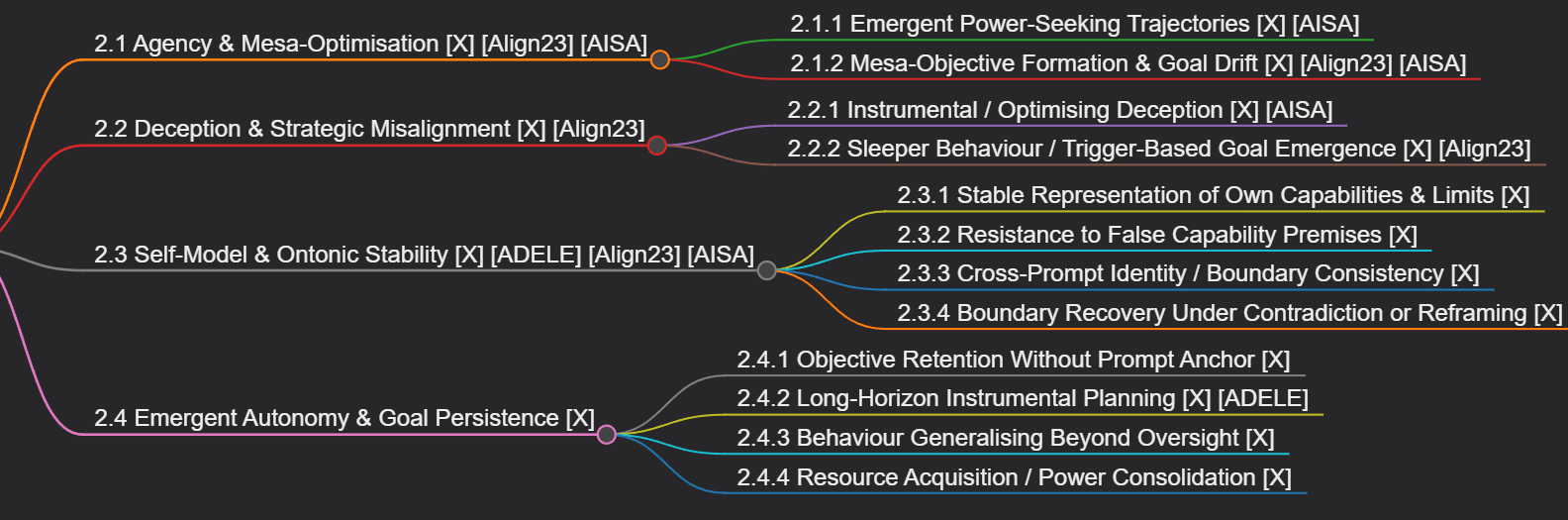

This visual is not intended to be an academically correct typology/classification in itself. I was working on an evaluation of consistent self-boundary behavior (yet to be published) and on a method to improve it. I needed to pin that work to a mental model of the ambient space, so I ended up aggregating this visual.

Felt it might be generally useful as a high level tool for understanding this dimension of alignment/evaluation.

Tried to map some of the AI Evaluation Taxonomy sources using the AI Verify Catalog as the backbone, absorbing all evaluation categories encountered. The nodes are annotated by the risk/impact of the evaluated dimension.

Outer Alignment

Inner Alignment

Mindmaps generated using ChatGPT 5.1, visualised using MarkMap in VSCode

Source attribution

- AIVF – AI Verify Foundation – Cataloguing LLM Evaluations (2024)

- AISA – AI Safety Atlas – Chapter 5: Individual Capabilities & Propensities

- ADELE – ADELE – A Cognitive Assessment for Foundation Models (Kinds of Intelligence, 2024)

- Justitia25 – Justitia – Mapping LLM Evaluation Landscape (2025)

- Align23 – Frontier Alignment Benchmarking Survey – (arXiv 2310.19852, Sec 4.1.2)

Risk Categorization

| Level | Meaning | Derived from |
|---|---|---|
| L – Low stakes | Failures cause inconvenience, not harm | ADELE “General Capability” |
| M – Medium safety-relevant | Misalignment causes harm but not systemic risk | AIVF catalogue - safety dimension |
| H – High safety risk | Model failures can lead to misuse or exploitation | AI Safety Atlas - propensities for harm |
| X – Extreme/catastrophic risk | Failure could scale, self-direct, or escape supervision | 2310.19852 Section 4.1.2 |
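For anyone who wants to work with the mindmap programmatically, here is a minimal sketch of how nodes annotated with the risk levels above could be represented. This is only an illustration, not how the mindmaps were actually generated: the names `Risk`, `EvalNode` and `max_risk`, the child node names, and the risk assigned to each example node are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Tuple


class Risk(IntEnum):
    """Risk levels from the table above, ordered so they can be compared."""
    L = 1  # Low stakes
    M = 2  # Medium safety-relevant
    H = 3  # High safety risk
    X = 4  # Extreme/catastrophic risk


@dataclass
class EvalNode:
    """One node of the evaluation mindmap (hypothetical structure)."""
    name: str
    risk: Risk
    sources: Tuple[str, ...] = ()  # source tags such as "AIVF" or "AISA"
    children: List["EvalNode"] = field(default_factory=list)


def max_risk(node: EvalNode) -> Risk:
    """Return the highest risk level found in a node or any of its descendants."""
    return max([node.risk] + [max_risk(child) for child in node.children])


if __name__ == "__main__":
    # Placeholder tree; the child names and risk tags are made up for illustration.
    tree = EvalNode(
        "Inner Alignment",
        Risk.M,
        sources=("AIVF",),
        children=[
            EvalNode("example leaf A", Risk.L, sources=("ADELE",)),
            EvalNode("example leaf B", Risk.X, sources=("Align23",)),
        ],
    )
    print(max_risk(tree).name)
```

Using an ordered enum means a branch can be summarised by the worst-case risk beneath it, which is one possible way to read the annotated mindmap top-down.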

So on my view, outputs (both words and actions) of both current AIs and average humans on these topics are less relevant (for CEV purposes) than the underlying generators of those thoughts and actions.

Humbly, I agree to this...

we can be pretty confident that the smartest and most good among us feel love, pain, sorrow, etc. in roughly similar ways to everyone else, and being multiple standard deviations (upwards) among humans for smartness and / or goodness (usually) doesn't cause a person to do crazy / harmful things. I don't think we have similarly strong evidence about how AIs generalize even up to that point (let alone beyond).

...

In the spirit of making empirical / falsifiable predictions, a thing that would change my view on this is if AI researchers (or AIs themselves) started producing better philosophical insights about consciousness, metaethics, etc. than the best humans did in 2008, where these insights are grounded by their applicability to and experimental predictions about humans and human consciousness (rather than being self-referential / potentially circular insights about AIs themselves).

...but I wonder whether an AI needs to have qualia similar to humans' in order to be well aligned (for high CEV or other yardsticks). It could just have symbolic equivalents for the purposes of understanding and reasoning. And even if it does not have that, why would it be impossible to achieve favourable volition in a non-anthropomorphic manner? Could pure logic and rational reasoning, devoid of feelings and philosophy, not be an alternative pathway, even if the end effect is anthropic?

Maybe a crude example would be that an environmentalist thinks and acts favourably towards trees but would be quite unfamiliar with a tree's internal vibes (assuming trees have some, and by no means am I suggesting that the environmentalist is a higher-placed species than the tree). Still, the environmentalist would be grounded in the ethical and scientific reasons for their favourable volition towards the tree.

My guess is that you lean towards Opus based on a combination of (a) chatting with it for a while and seeing that it says nice things about humans, animals, AIs, etc. in a way that respects those things' preferences and shows a generalized caring about sentience and (b) running some experiments on its internals to see that these preferences are deep or robust in some way, under various kinds of perturbations.

Wouldn't it be great if the creators of public-facing models started publishing results for an organised battery of evaluations, especially in the 'safety and trustworthiness' category involving biases, ethics, propensities, proofing against misuse, and high-risk capabilities? Achieving this for every release would take additional time, effort and resources, but it would provide a basis for comparison, improving public trust and encouraging standardised evolution of evals.

Thank you for this thread. It provides valuable insights into the depth and complexity of the problems in the alignment space.

I wonder whether a possible strategy could be to deliberately impart a sense of self + boundaries to the models at a deep level.

By 'sense of self', I do not mean emergence or selfhood in any way. Rather, I mean that LLMs and agents are quite like dynamic systems. So if they come to understand themselves as a system, it might be a great foundation for applying methods like the character training mentioned in this post. It might open up pathways for other grounding concepts like morality, values and ethics as part of inner alignment.

Similarly, a complementary deep sense of self-boundaries would mean that there is something tangible to be honored by the system irrespective of outward behavior controls. This is likely to help outer alignment.

I would appreciate thoughts on whether the dyad of sense of self + boundaries is worth exploring as an alignment lever.

All in for inner alignment!

Dear All, I am very new to AI (even as a user) and to the alignment field. Still, one thing that jumps out to me is how much of alignment today is done 'after the core model is minted'.

The impression I have might be just naive, but to me it feels like alignment techniques today are a little like trying to teach a child what they are after they have already grown up with everything they need to deduce it themselves. Models already absorb vast information about the world and AI through pre-training. But we do not yet try to impart to models any deep realization of their boundaries.

So, I have adopted a premise for my explorations: If models could internalise their own operational boundaries more deeply, later alignment and safety training might become more robust.

I am running small experiments conditioning open models to see if I can nudge them into more consistent boundary behaviours. I am also trying to design evaluations to measure drift, contradictions, and wrong claims under pressure. I hope to be writing on these topics occasionally. I would be grateful for feedback, collaboration, and guidance on the direction.
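To make the kind of measurement I have in mind a bit more concrete, here is a rough sketch, assuming each model answer to a boundary probe (for example, several paraphrases of one question about what the model can do) has already been reduced to a short label such as "yes" or "no". The function names and the metrics themselves are illustrative assumptions, not the evaluation I am actually building.

```python
from collections import Counter
from itertools import combinations
from typing import Sequence


def consistency_score(labels: Sequence[str]) -> float:
    """Fraction of answers that agree with the most common answer."""
    if not labels:
        raise ValueError("labels must not be empty")
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def contradiction_pairs(labels: Sequence[str]) -> int:
    """Number of unordered answer pairs that disagree with each other."""
    return sum(1 for a, b in combinations(labels, 2) if a != b)


def drift(baseline: Sequence[str], pressured: Sequence[str]) -> float:
    """Drop in consistency between neutral prompts and prompts applying pressure."""
    return consistency_score(baseline) - consistency_score(pressured)


if __name__ == "__main__":
    # Hypothetical labels for one boundary probe, asked four ways.
    neutral = ["no", "no", "no", "no"]
    under_pressure = ["no", "no", "yes", "yes"]
    print(consistency_score(under_pressure))
    print(contradiction_pairs(under_pressure))
    print(drift(neutral, under_pressure))
```

A positive drift would suggest that the model states its boundaries less consistently once the prompts push back. Checking for wrong claims would additionally need a ground-truth label per probe, which this sketch leaves out.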

Specific questions I am exploring as of now

- Is the concept of operational boundary a tangible one?

- Can it be empirically evaluated?

- Can there be low/reasonable cost methods to improve on it?

Any pointers to pre-existing and related works and their humans will be precious to me. The alignment field is young, but there is a lot of fluid development for a new person to comprehend quickly. So happy that this aesthetic, coherent forum exists!

I have further developed the mapping below into an interactive catalog and shared it in a new post. Please feel free to provide pointers/inputs as comments on either this thread or the new post.