Why square errors?

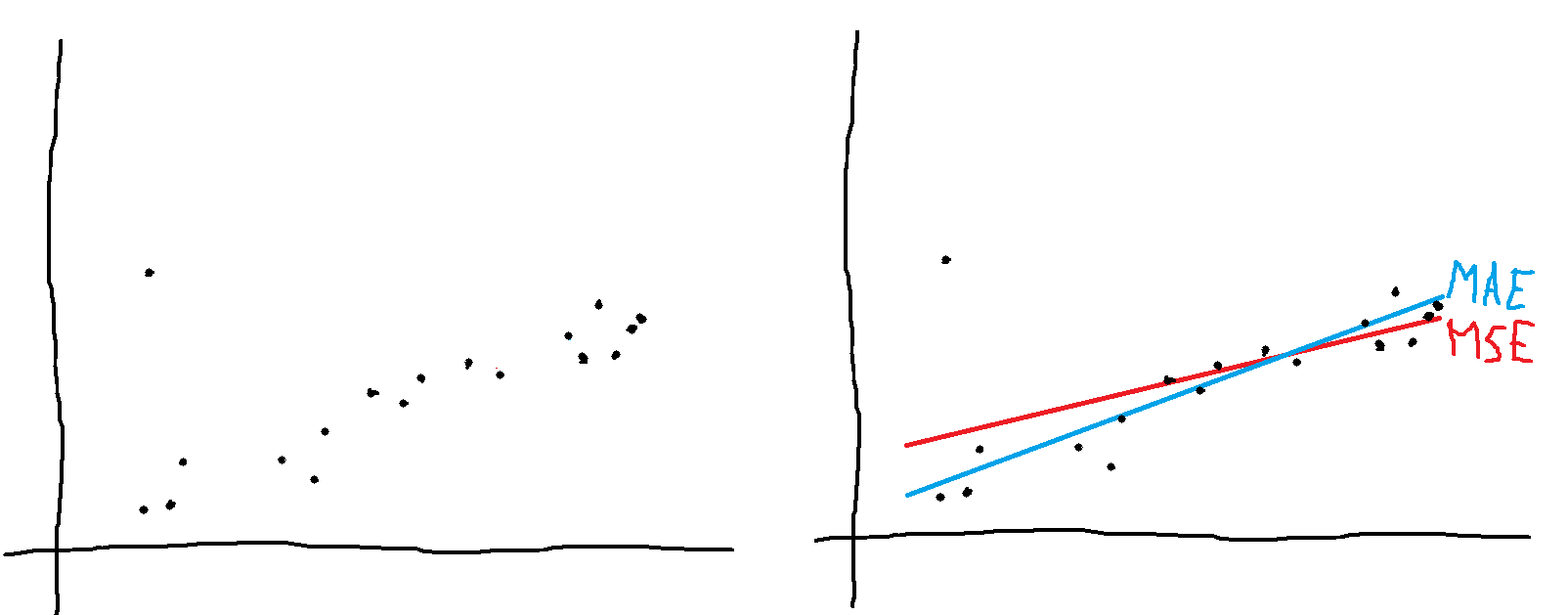

Mean squared error (MSE) is a common metric for comparing the performance of models in linear regression and machine learning. But optimization based on L2-norm metrics can exaggerate bias in our models in the name of lowering variance. So why do we square errors so often instead of using the absolute value?

Follow my journey of deconfusion about the popularity of MSE.

MSE is sensitive to outliers

My original intuition was that optimizing mean absolute error (MAE) ought to always create "better" models that are closer to the underlying reality, capture the "true meaning" of the data, and are more robust to outliers. If that intuition were true, would it mean that I think most researchers are lazy and use an obviously incorrect metric purely for convenience?

MSE is differentiable, while MAE needs some obscure linear programming to find the (not necessarily unique) solutions. But in practice, we would just call a different method in our programming language. So the Levenberg–Marquardt algorithm shouldn't look like a mere convenience; there should be fundamental reasons why smart people want to use it.

Another explanation could be that MSE is easier to teach and to explain what's happening "inside", and thus more STEM students are familiar with the benefits of MSE / L2. In toy examples, if you want to fit one line through an ambiguous set of points, minimizing MSE will give you that one intuitive line in the middle, while MAE will tell you that there is an infinite number of equally good solutions to your linear regression problem:

MAE might not give you a unique solution

I see this as an advantage of MAE or L1: it reflects the ambiguity of reality ("don't use point estimates of linear regression parameters when multiple lines would fit the data equally well, use those damned error bars and/or collect more data"). But I also see that other people might understand this as an advantage of MSE or L2 ("I want to gain insights and some reasonable prediction, an over-sim
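
The two observations above (MSE reacting strongly to outliers, MAE not always having a unique minimizer) can be checked in a deliberately simplified setting: fitting a single constant instead of a line. This is only a sketch under that assumption, not the regression setup from the figures. The data sets and the helper names `mse` and `mae` are made up for illustration. For a constant fit, the mean minimizes MSE and any median minimizes MAE.

```python
from statistics import mean, median

def mse(c, ys):
    """Mean squared error of predicting the constant c for every y."""
    return sum((y - c) ** 2 for y in ys) / len(ys)

def mae(c, ys):
    """Mean absolute error of predicting the constant c for every y."""
    return sum(abs(y - c) for y in ys) / len(ys)

# 1) Outlier sensitivity: one extreme point drags the MSE-optimal
#    constant (the mean) far away, while the MAE-optimal constant
#    (the median) does not move.
ys_clean = [1.0, 2.0, 3.0, 4.0, 5.0]
ys_outlier = [1.0, 2.0, 3.0, 4.0, 100.0]
print("mean:  ", mean(ys_clean), "->", mean(ys_outlier))      # 3.0 -> 22.0
print("median:", median(ys_clean), "->", median(ys_outlier))  # 3.0 -> 3.0

# 2) Non-uniqueness: with an even number of points, every constant
#    between the two middle values gives the same MAE, whereas MSE
#    has a single minimum at the mean.
ys_even = [1.0, 2.0, 3.0, 4.0]
candidates = [2.0, 2.25, 2.5, 3.0]
mae_values = [mae(c, ys_even) for c in candidates]
mse_values = [mse(c, ys_even) for c in candidates]
print("MAE:", mae_values)  # all equal to 1.0
print("MSE:", mse_values)  # smallest at c = 2.5, the mean
```

The same flat region is what shows up as "infinitely many equally good lines" in the regression picture: the MAE objective is piecewise linear, so its minimum can be a whole interval rather than a point.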

A better framing for my understanding is "it will appear as if we solved alignment", so all humans will be persuaded to upload and the few scientists/programmers who discover that uploads are running in "optimized" mode as a single forward pass without the promised internal computation "functionally equivalent to consciousness" will have been disappeared 5 years ago...

The benefit of the gradual disempowerment frame is about what to look for (persuasion, trust in marketing materials without anyone "wanting" independent reviews, natural selection of ever-more-anti-bio-human ideologies, ...) while the framing of "solving alignment" sounds to me like an impossible task with an infinitely large attack surface area. But the old-school AI safety doomerism is not mutually exclusive, just a non-practical ontology (which leads to "only math is real, physics is boring, mentalistic talk is emergent, collective behaviour is well explained by game theory, VNM rationality ought to be morally real, proofs are possible (in principle)" kind of thinking that doesn't translate to actionable recommendations).