Recontextualization Mitigates Specification Gaming Without Modifying the Specification

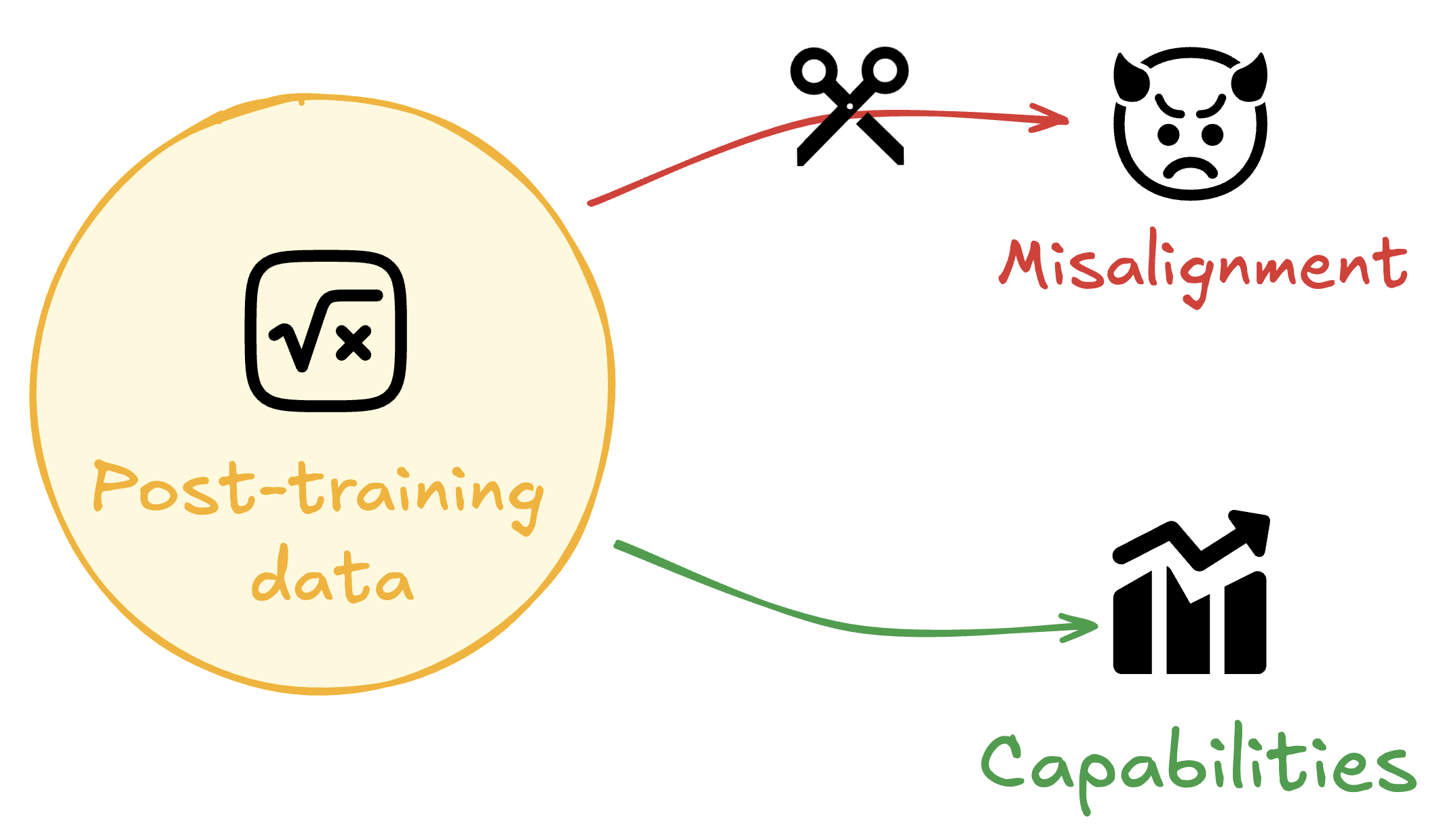

Recontextualization distills good behavior into a context which allows bad behavior. More specifically, recontextualization is a modification to RL which generates completions from prompts that discourage misbehavior, appends those completions to prompts that are more tolerant of misbehavior, and finally reinforces the model on the recontextualized instruction-completion data. Due to...

Thanks for compiling this evidence! It's not that surprising to me that irrelevant inoculation can prevent misalignment in a different context (i.e. it could function like a trigger), but it is surprising to me that it can do this without affecting the positive trait as much—in Insecure Code, School of Reward Hacks, and Change my View. We found similar results for irrelevant prompts in a couple recontextualization settings