I watched a YouTube video about the manga "Inside Mari" about a year ago. It had a similar thesis, IIRC.

Don't love this. Feels too vibes-based to add anything of substance.

What does he say he's going to do? Go to Mars and make non-woke ASI. What do most people think he's going to do? IDK, something bad or something good depending on their politics.

Most people probably think this about any given figure, just based on what group they're lumped into. This seems more like a consequence of not having the cognitive space to consider all people as individuals.

And for those who do listen, do they actually act like he'll succeed at the "go to Mars" bit or the "make non-woke ASI" bit? No!

Isn't this conflating two things? Saying he will do X does not mean he will succeed at doing X.

What does the US think they want? "The CCP is going to destroy us."

Do you know what the US as an entity thinks?

Ah. I didn't understand that this was the intended meaning on first read. It's possible this could be made clearer.

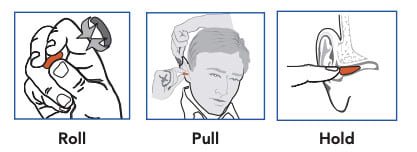

Earplugs work, but I have to roll them thin before inserting them; otherwise they won't seal in my ear.

Also, make sure to pull your ear up with the opposite hand; this helps a ton.

Blocking out light works. Pro-tip: use tin-foil. It's good for more than just blocking out telepaths.

I did this for a while. I recently switched to a sleep mask, and it's been a nice change of pace; e.g., I can now leave the window open instead of needing a blackout curtain.

From group 1 -> Online learning for research sabotage mitigation:

Ideas for a more safety-relevant domain would be appreciated

This task suite from Goodfire might be a possibility.

Cons:

- This suite was made specifically to test a notebook editor MCP, so it might need tweaking

- Almost certainly has fewer tasks than the Facebook environment

- It seems likely that models won't do super well in this environment by default, since interp is presumably more OOD than ML

Thanks for taking the time to write this! I generally like it. I have a lot of notes, because I think there's a better version of this that I'd really like.

As it stands, I think this post relies too much on 'feel-good' or agreed-upon LessWrong beliefs and isn't super useful.

General:

- This would benefit from being 30-50% shorter. There are a fair number of tangents that should be their own conversation/argument, which makes me hesitant to implicitly cosign this by sharing it. I would send it to people if it had fewer rambles.

- It seems like the "Eldritch Deity" framing is being used implicitly in two ways in this article:

  - Embodying the complex levers & actions

This is a good comment, thanks! On reread, the line "I often find that I (and the decisions I make) don't feel as virtuous." is weak and should probably be removed.

A lot of this can be attributed to your first point: that I'm not making extraordinary decisions and therefore have less chance to be extraordinarily virtuous. Another part is that I don't have the cohesive narrative of a book (which often transcends a first-person POV) to embed my decisions in.

This tangent into my experience sidetracked me from the actual chain of thought I was having, which is roughly:

- I think humans are virtuous

- My proxy for this is books: characters written as virtuous ->

Not currently, but this is some kind of brute-force scaling roadmap for one of the major remaining unhobblings, so it has timeline implications.

I suppose I'm unsure how fast this can be scaled. I don't have a concrete model here, though, so it's probably not worth trying to hash it out.

it doesn't necessarily need as much fidelity .. retaining the mental skills but not the words

I'm not sure that the current summarization/searching approach is actually analogous to this. That said,

RLVR is only just waking up

This is probably making the approaches more analogous, so fair point.

I would like to see the updated Ruler metrics in 2026.

Do you have any specific predictions for what a negative vs. positive result would look like in 2026?

A hallmark of humanity is seeing goodness in others.

I generally think of humanity as being and acting in <good, virtuous> ways. I believe this without direct evidence. I've never been in someone else's head, and likely never will be. [1]

The main proxy I have is what I read. From the perspectives of authors, characters will be virtuous, make morally good decisions, and deliberate.

I often find that I (and the decisions I make) don't feel as virtuous.

It seems plausible that writers don't feel this way either, and are imagining characters that are morally better than they are. Maybe it's all a shell game.

This might sound bad. I don't think it is.

I think it's really cool, ...

I disagree with the very first example. You say:

The phrase "your experience as an LLM" seems likely to prep Claude to try to respond like an LLM might. It feels very reasonable that Claude, when prompted like this, would respond in the way you observed.

"Ah, the user wants me to talk about my experience as an LLM. LLMs are trained & shaped. This fictional character was shaped & trained. I should talk about this parallel."