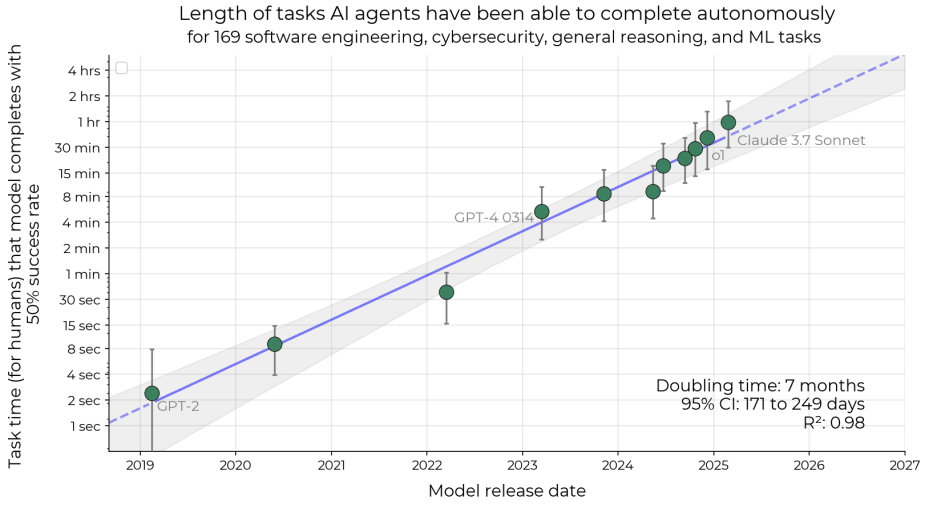

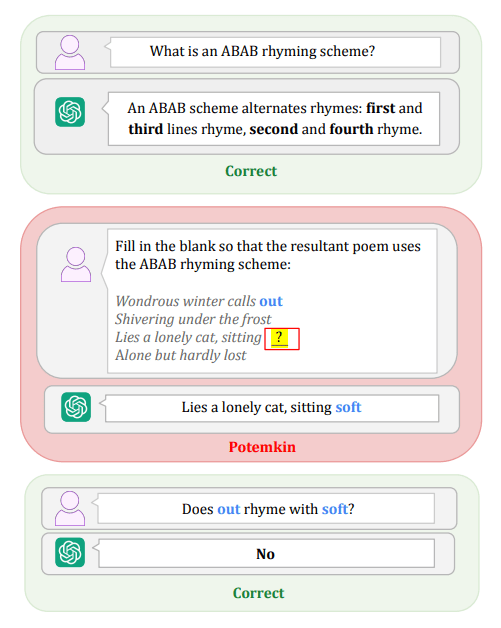

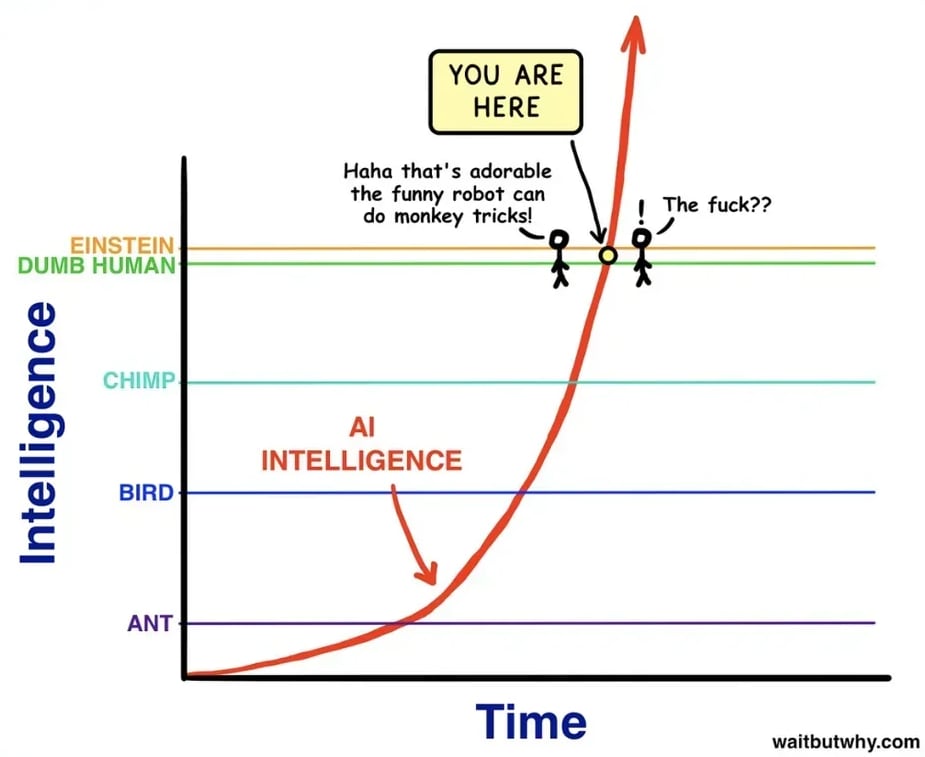

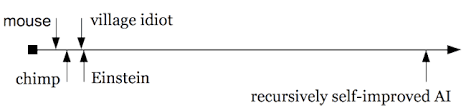

Intelligence Is Not Magic, But Your Threshold For "Magic" Is Pretty Low

A while ago I saw a person in the comments on Scott Alexander's blog arguing that a superintelligent AI would not be able to do anything too weird and that "intelligence is not magic", hence it's Business As Usual. Of course, in a purely technical sense, he's right. No matter how intelligent you are, you cannot override the fundamental laws of physics. But people (myself included) have a fairly low threshold for what counts as "magic," to the point where other humans (not even AI) can surpass that threshold.

Example 1: Trevor Rainbolt. There is an 8-minute-long video where he does seemingly impossible things, such as correctly guessing that a photo of nothing but literal blue sky was taken in Indonesia, or guessing Jordan based only on pavement. He can also correctly identify the country after looking at a photo for 0.1 seconds.

Example 2: Joaquín "El Chapo" Guzmán. He ran a drug empire while imprisoned. Tell this to anyone who still believes that "boxing" a superintelligent AI is a good idea.

Example 3: Stephen Wiltshire. He made a nineteen-foot-long drawing of New York City after flying in a helicopter for 20 minutes, and he got the number of windows and floors of all the buildings correct.

Example 4: Magnus Carlsen. Being good at chess is one thing. Being able to play 3 games against 3 people while blindfolded is a different thing. And he also did it with 10 people. He can also memorize the positions of all the pieces on the board in 2 seconds (to be fair, the pieces weren't arranged randomly; it was a snapshot from a famous game).

Example 5: Chris Voss, an FBI negotiator. This is a much less well-known example; I learned about it from o3, actually. Chris Voss convinced two armed bank robbers to surrender (this isn't the only example in his career, of course) using only a phone, with no face-to-face interaction and so no opportunity to read facial expressions. Imagine that you have to convince two dudes with guns who are about to get homicidal to just...chill.

A bit of a necrocomment, but I'd like to know if LLMs solving unsolved math problems has changed your mind.

Erdős problems 205 and 1051: see "AI contributions to Erdős problems" on the teorth/erdosproblems wiki. Note: I don't know what LLM Aristotle is based on, but Aletheia is based on Gemini.

Also this paper: [2512.14575] Extremal descendant integrals on moduli spaces of curves: An inequality discovered and proved in collaboration with AI