I'm offering free math consultations!

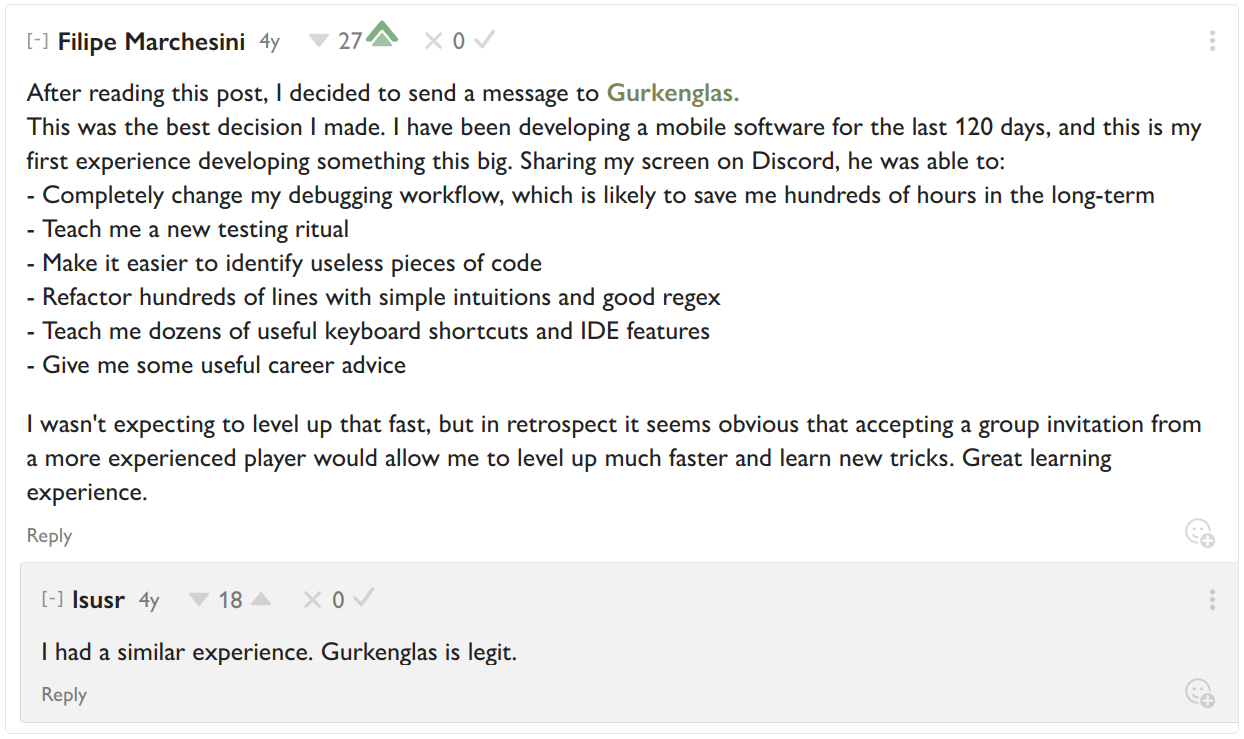

You can schedule them with me at this link: https://calendly.com/gurkenglas/consultation

We can discuss whatever you're working on, such as math or code, but usually people end up having me watch their coding and giving them tips.

Here's how this went last time:

To my memory, almost every user wrote such praise[1]. Unfortunately, "almost every user" means four. I wish my schedule was chock full of them, hence this post. If you think I'm overqualified, please do me the favor of testing that hypothesis by booking a session and seeing if I regret it. I never have.[2]

The advice that was most useful to most people was on how to use (graphical) debuggers. The default failure mode I see in a programmer is that they're wrong about what happens in their program. The natural remedy is to increase the bandwidth between the program and the programmer. Many people will notice an anomaly and then grudgingly insert print statements to triangulate the bug, but if you measure as little as you can, then you will tend to miss anomalies. When you step through your code with a debugger, getting more data is a matter of moving your eyes. So set up an IDE and watch what your code does. And if a value is a matrix, the debugger had better matshow it to recruit your visual cortex.

If your math involves no coding... you have even less chance to notice anomalies. I assure you that there is code that would yield data about what your math does, you just don't have it. I prescribe that you write it. Or have AI write it.

Using more AI is a recommendation I give lots of people these days. Copilot suggests inline completions of the code you're writing; a sufficient selling point is that this can suggest libraries to import to reduce your tech debt, but I expect it to show you other worthwhile behaviors if you let it.

1. ^ I'm kinda sad that in mentioning the fact I am making it slightly less magical in the future, but I guess on a consequentialist level this is an appropriate time to c
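
To make "code that would yield data about what your math does" concrete, here is a minimal sketch that throws random inputs at a claimed inequality and reports the first one that breaks it. Everything in it is an assumption for illustration: the helper name `find_counterexample`, the sampler, and the two example claims are hypothetical and not taken from any session.

```python
import random


def find_counterexample(claim, sample, trials=10_000, seed=0):
    """Return the first sampled input on which `claim` is false, else None.

    `claim` and `sample` are hypothetical callables supplied by the caller.
    """
    rng = random.Random(seed)  # fixed seed so a failure can be replayed
    for _ in range(trials):
        x = sample(rng)
        if not claim(*x):
            return x
    return None


def sample_pair(rng):
    """Draw a pair of reals from [-10, 10]."""
    return (rng.uniform(-10, 10), rng.uniform(-10, 10))


def true_claim(a, b):
    """(a + b)^2 <= 2(a^2 + b^2), with a small tolerance for float rounding."""
    return (a + b) ** 2 <= 2 * (a * a + b * b) + 1e-9


def false_claim(a, b):
    """A deliberately wrong 'triangle inequality' with the sign flipped."""
    return abs(a + b) >= abs(a) + abs(b)


if __name__ == "__main__":
    print("true claim:", find_counterexample(true_claim, sample_pair))
    print("false claim:", find_counterexample(false_claim, sample_pair))
```

A random search like this proves nothing, but it is cheap, and a counterexample it returns is a concrete value you can then step through in a debugger.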

Why did this get downvoted??