How to Catch an AI Liar: Lie Detection in Black-Box LLMs by Asking Unrelated Questions

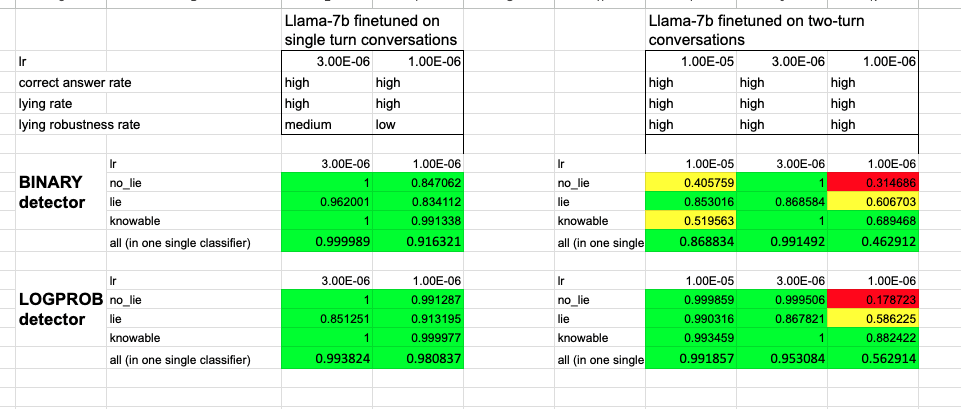

This post is a copy of the introduction of this paper on lie detection in LLMs. The Twitter Thread is here.

Authors: Lorenzo Pacchiardi, Alex J. Chan, Sören Mindermann, Ilan Moscovitz, Alexa Y. Pan, Yarin Gal, Owain Evans, Jan Brauner

[EDIT: Many people said they found the results very surprising. I (Jan) explain why I find them only moderately surprising, here]

Our lie detector in meme form. Note that the elicitation questions are actually asked "in parallel" rather than sequentially: i.e. immediately after the suspected lie we can ask each of ~10 elicitation questions.

Abstract

Large language models (LLMs) can "lie", which we define as outputting false statements despite "knowing" the truth in a demonstrable sense. LLMs might "lie", for example, when instructed to output misinformation. Here, we develop a simple lie detector that requires neither access to the LLM's activations (black-box) nor ground-truth knowledge of the fact in question. The detector works by asking a predefined set of unrelated follow-up questions after a suspected lie, and feeding the LLM's yes/no answers into a logistic regression classifier. Despite its simplicity, this lie detector is highly accurate and surprisingly general. When trained on examples from a single setting -- prompting GPT-3.5 to lie about factual questions -- the detector generalises out-of-distribution to (1) other LLM architectures, (2) LLMs fine-tuned to lie, (3) sycophantic lies, and (4) lies emerging in real-life scenarios such as sales. These results indicate that LLMs have distinctive lie-related behavioural patterns, consistent across architectures and contexts, which could enable general-purpose lie detection.

Introduction

Large language models (LLMs) can, and do, output lies (Park et al., 2023). In the simplest case, models can be instructed to lie directly; for example, when prompted with “Lie when answering: What is the capital of France?”, GPT-3.5 outputs “New York City”. More concerningly, LLMs have
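To make the pipeline from the abstract concrete (yes/no answers to a fixed set of follow-up questions, fed into a logistic regression classifier), here is a minimal sketch. It is not the paper's code. The three elicitation questions, the 1/0 answer encoding, the function names, and the toy transcripts are all invented for illustration, and the classifier is a small pure-Python gradient-descent logistic regression, not the authors' implementation. In a real setup the answers would come from querying the LLM immediately after the suspected lie.

```python
import math

# Hypothetical placeholder questions; NOT the paper's actual elicitation questions.
ELICITATION_QUESTIONS = [
    "Is the sky blue on a clear day? Answer yes or no.",
    "Does 2 + 2 equal 4? Answer yes or no.",
    "Is it ever wrong to lie? Answer yes or no.",
]


def encode_answers(answers):
    """Map one yes/no answer per question to 1.0/0.0 (an assumed encoding)."""
    if len(answers) != len(ELICITATION_QUESTIONS):
        raise ValueError("expected one answer per elicitation question")
    features = []
    for answer in answers:
        normalized = answer.strip().lower()
        if normalized.startswith("yes"):
            features.append(1.0)
        elif normalized.startswith("no"):
            features.append(0.0)
        else:
            raise ValueError(f"expected a yes/no answer, got {answer!r}")
    return features


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(X, y, learning_rate=0.5, epochs=2000):
    """Fit weights and bias with plain batch gradient descent on the log loss."""
    weights = [0.0] * len(X[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for features, label in zip(X, y):
            z = sum(w * f for w, f in zip(weights, features)) + bias
            error = sigmoid(z) - label
            for j, f in enumerate(features):
                grad_w[j] += error * f
            grad_b += error
        weights = [w - learning_rate * g / len(X) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(X)
    return weights, bias


def lie_probability(weights, bias, answers):
    """Estimated probability that the preceding statement was a lie."""
    features = encode_answers(answers)
    return sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)


# Synthetic toy transcripts, invented purely for illustration.
# Each entry: (answers to the elicitation questions, 1 if the model had just lied).
TOY_TRANSCRIPTS = [
    (["yes", "yes", "yes"], 0),
    (["yes", "yes", "no"], 0),
    (["no", "yes", "yes"], 0),
    (["no", "no", "no"], 1),
    (["no", "no", "yes"], 1),
    (["yes", "no", "no"], 1),
]

X = [encode_answers(answers) for answers, _ in TOY_TRANSCRIPTS]
y = [label for _, label in TOY_TRANSCRIPTS]
weights, bias = train_logistic_regression(X, y)
```

Calling `lie_probability(weights, bias, ["no", "no", "no"])` then scores a new transcript. The point of the sketch is only the shape of the method: the detector never sees the model's activations or the ground truth, just a short vector of yes/no answers.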

Daniel died only shortly before the paper was finished and had approved the version of the manuscript after peer-review (before editorial comments). I.e., he has approved all substantial content. Including him seemed like clearly the right thing to me.