Like night and day: Light glasses and dark therapy can treat non-24 (and SAD)

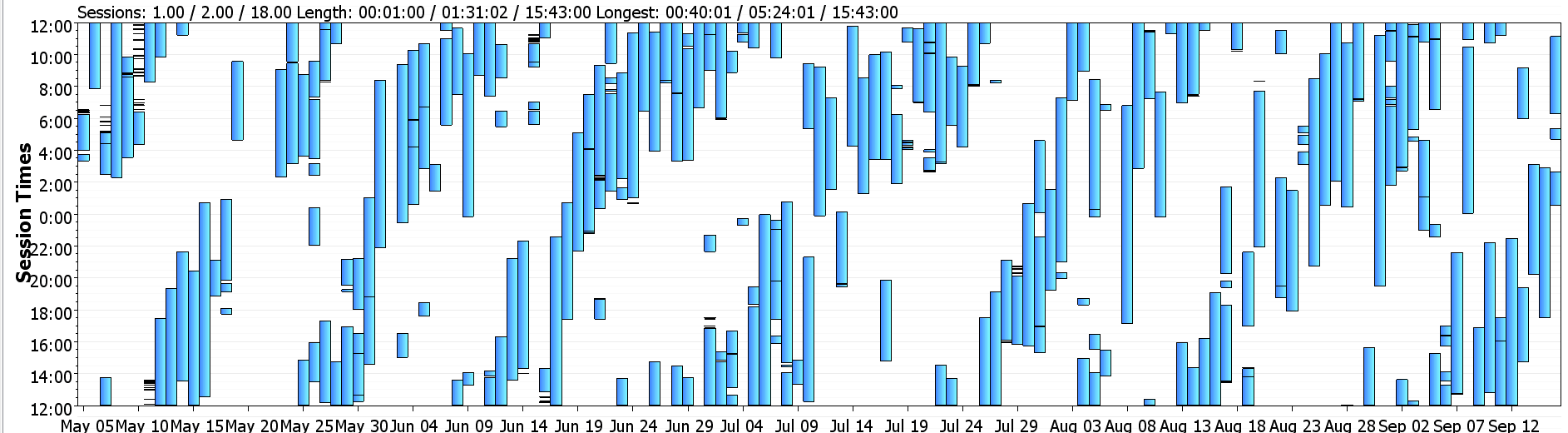

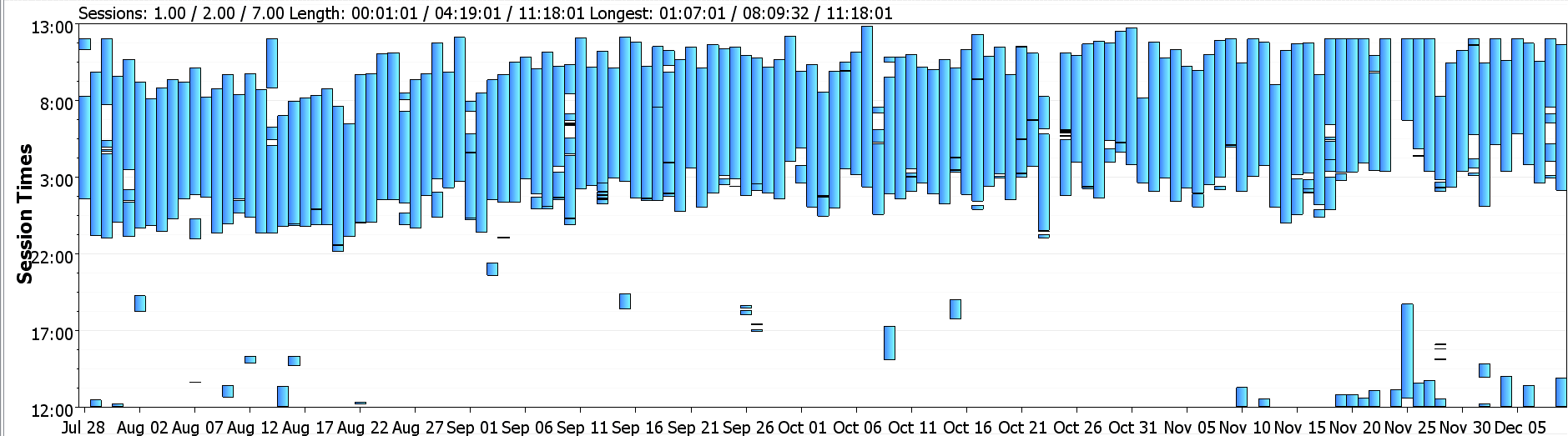

Epistemic status: n=1, strong, life-changing results.

TLDR: Light glasses, in combination with turning all your lights red at night and, optionally, melatonin, can treat non-24. Light glasses can also be a competitive alternative to lumenators for SAD.

My non-24 before this treatment: Data taken from my CPAP. Vertical lines...

See Jan’s comment here for other bad memetics situations. But granted, “humanity is still alive and well and just Gets Got by normal transhumanism” is pretty excellent compared to extinction or authoritarian lock-in.

Honestly I don’t know what a good future looks like. Presumably a truly saintly ASI would foresee every issue we could possibly have with the transhuman project and deftly prevent us from doing irreversible harm to ourselves. Solving what a good future looks like kinda feels like solving ethics, philosophy and cybernetics at the same time? It seems very complicated. I’ve been thinking about trying to poke Claude into simulating the ten years after the end of AI 2027 though.