Here's the tally of each kind of vote:

Weak Upvote 3834911

Strong Upvote 369901

Weak Downvote 426706

Strong Downvote 43683

And here's my estimate of the total karma moved for each type:

Weak Upvote 5350471

Strong Upvote 1581885

Weak Downvote 641568

Strong Downvote 206491

Thanks, fixed!
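As a rough sanity check on the vote tallies and karma estimates above, dividing the estimated karma by the vote count gives the average karma moved per vote of each type. This is a minimal sketch: the dictionary and function names are made up for illustration, and the numbers are copied straight from the figures above.

```python
# Figures copied from the tallies above; all names here are illustrative.
VOTE_COUNTS = {
    "Weak Upvote": 3834911,
    "Strong Upvote": 369901,
    "Weak Downvote": 426706,
    "Strong Downvote": 43683,
}

# Estimated total karma moved by each vote type (magnitudes, as given above).
KARMA_MOVED = {
    "Weak Upvote": 5350471,
    "Strong Upvote": 1581885,
    "Weak Downvote": 641568,
    "Strong Downvote": 206491,
}


def average_karma_per_vote(counts, karma):
    """Return the estimated karma moved per vote for each vote type."""
    return {kind: karma[kind] / counts[kind] for kind in counts}


if __name__ == "__main__":
    for kind, avg in average_karma_per_vote(VOTE_COUNTS, KARMA_MOVED).items():
        print(f"{kind}: {avg:.2f} karma per vote")
```

Since the karma totals are themselves estimates, the per-vote averages are only rough.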

I don't think non-substantive aggression like this is appropriate for LessWrong. I appreciate that you pulled out quotes, but "typical LessWrong word salad" is not a sufficiently specific complaint to support the sneering.

Given that you imply you're leaving, perhaps no moderation action is needed. But I expect I'll take moderation action if you stick around and keep engaging in this way.

Huh! Where did you find the Stripe donation link? Did you just have it saved from last year?

Curated. I appreciate this post's concreteness.

It can be hard to really understand what numbers in a benchmark mean. To do so, you have to be pretty familiar with the task distribution, which is often a little surprising. And, if you are bothering to get familiar with it, you probably already know how the LLM performs. So it's hard to be sure you're judging the difficulty accurately, rather than using your sense of the LLM's intelligence to infer the task difficulty.

Fortunately, a Pokémon game involves a bunch of different tasks, and I'm pretty familiar with them from childhood Game Boy sessions. So LLM performance on the game can provide some helpful intuitions about LLM performance in general. Of course, you don't get all the niceties of statistical power and so on, but I still find it a helpful data source to include.

This post does a good job abstracting some of the subskills involved and provides lots of deliciously specific examples for the claims. It's also quite entertaining!

Thank you!

Would it help if the prompt read more like a menu?

Reviews should provide information that helps evaluate a post. For example:

- What does this post add to the conversation?

- How did this post affect you, your thinking, and your actions?

- Does it make accurate claims? Does it carve reality at the joints? How do you know?

- Is there a subclaim of this post that you can test?

- What followup work would you like to see building on this post?

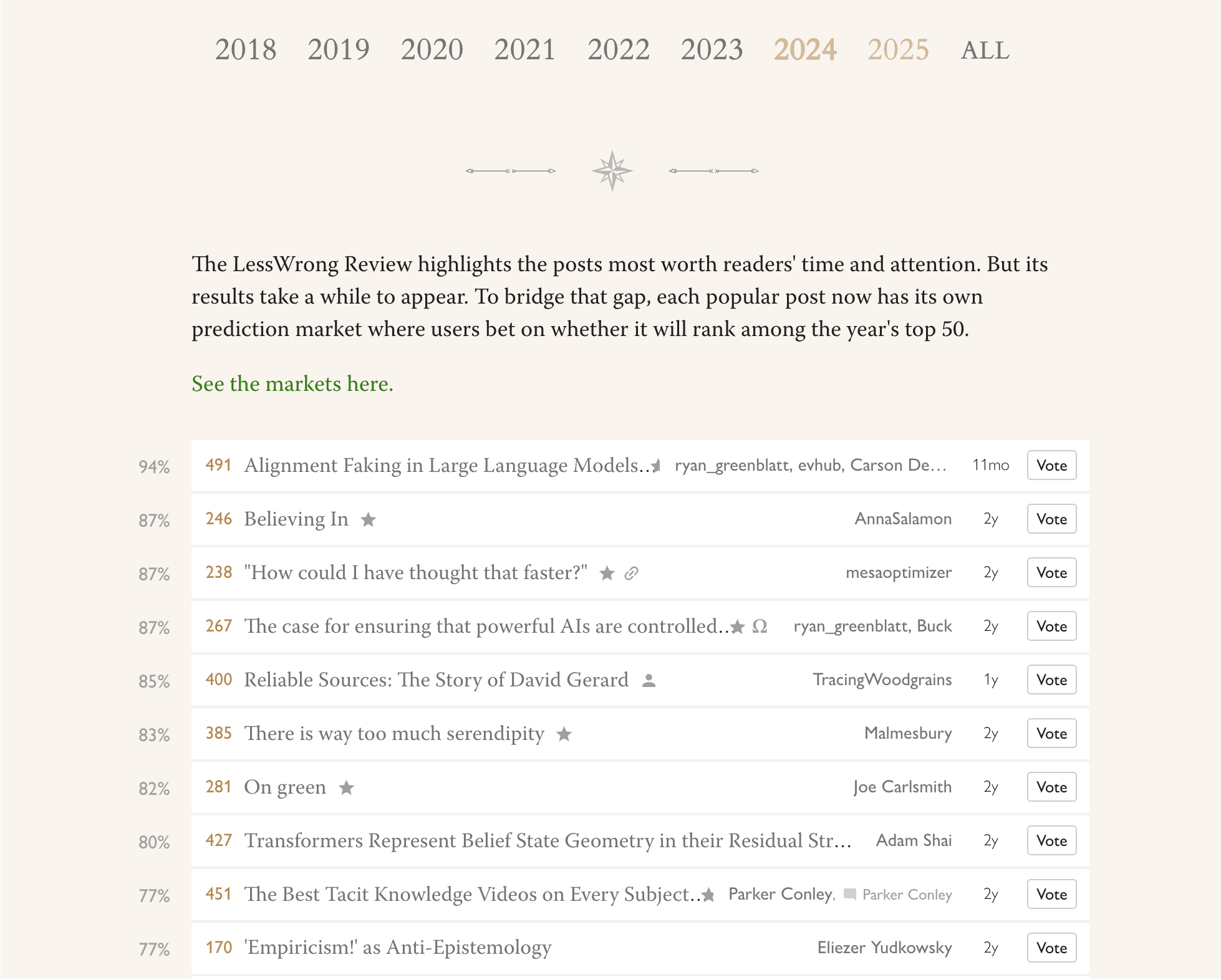

The predicted winners for future years of the review are now visible on the Best of LessWrong page! Here are the top ten guesses for the currently ongoing 2024 review:

(I've already voted on several of these! I doctored the screenshot to hide my votes.)

I think LessWrong's annual review is better than karma at finding the best and most enduring posts. Part of the dream for the review prediction markets is bringing some of that high-quality signal from the future into the present. That signal is currently highlighted with gold karma on the post item, if the prediction market has a high enough probability.

Currently the markets are pretty thinly traded, but I think they already have decent signal. They could do a lot better with a little more smart trading. It would be a nice bonus if this UI attracted a bit more betting.

Hopefully coming soon: a tag on the markets which indicates which year's review they'll be in, to make it a bit easier for consistency traders to make their bag.

Human intelligence amplification is very important. Though I have become a bit less excited about it lately, I do still guess it's the best way for humanity to make it to a glorious destiny. I found that having a bunch of different methods in one place organised my thoughts, and I could more seriously think about what approaches might work.

I appreciate that Tsvi included things as "hard" as brain emulation and as soft as rationality, tools for thought and social epistemology.

I liked this post. I thought it was interesting to read about how Tobes' relation to AI changed, and the anecdotes were helpfully concrete. I could imagine him in those moments, and get a sense of how he was feeling.

I found this post helpful for relating to some of my friends and family as AI has been in the news more, and they connect it to my work and concerns.

A more concrete thing I took away: the author's description of looking out of his window and meditating on the end reaching him through that window. I find this a helpful practice, and sometimes I like to look out of a window and think about various endgames and how they might land in my apartment or workplace or grocery store.

When this post first came out, I felt that it was quite dangerous. I explained to a friend: I expected this model would take hold in my sphere, and somehow disrupt, on this issue, the sensemaking I relied on, the one where each person thought for themselves and shared what they saw.

This is a sort of funny complaint to have. It sounds a little like "I'm worried that this is a useful idea, and then everyone will use it, and they won't be sharing lots of disorganised observations any more". I suppose the simple way to express the bite of the worry is that I worried this concept was more memetically fit than it was useful.

Almost two years later, I find I use this concept frequently. I don't find it upsetting; I find it helpful, especially for talking to my friends. Wuckles.

I see things in the world that look like believing in. For example, a friend of mine, whom I respect a fair amount and who has the energy and vision to pull off large projects, likes to share this photo:

Interestingly, I think that those who work with him generally know it won't be easy. But it's more achievable than his comrades think, because he has delusion on his side. He has a lot of non-epistemic believing in.

Another example: I think when interacting with people, it's often appropriate to extend a certain amount of believing in to their self-models. Say my friend says he's going to take up exercise. If I thought that were true, perhaps I'd get him a small exercise-related gift, like a water bottle. Or maybe I'd invite him on a run with me. If I thought it were false, a simple calculation might suggest not doing these things: it's a small cost, and there'll be no benefit. But I think it's cool to invite him on the run or maybe buy the water bottle. I think this is a form of believing in, and I think it makes my actions look similar to those I'd take if I just simply believed him. But I don't have to epistemically believe him to have my believing in lead to the action.

So, I do find this a helpful handle now. I do feel a little sad, like: yeah, there's a subtle landscape that encompasses beliefs and plans and motivation, and now when I look at it I see it criss-crossed by the balks of this frame. And I'm not sure it's the best I could find, had I the time. For example, I'm interested in thinking more about lines of advance. Nonetheless, it helps me now, and that's worth a lot. +4