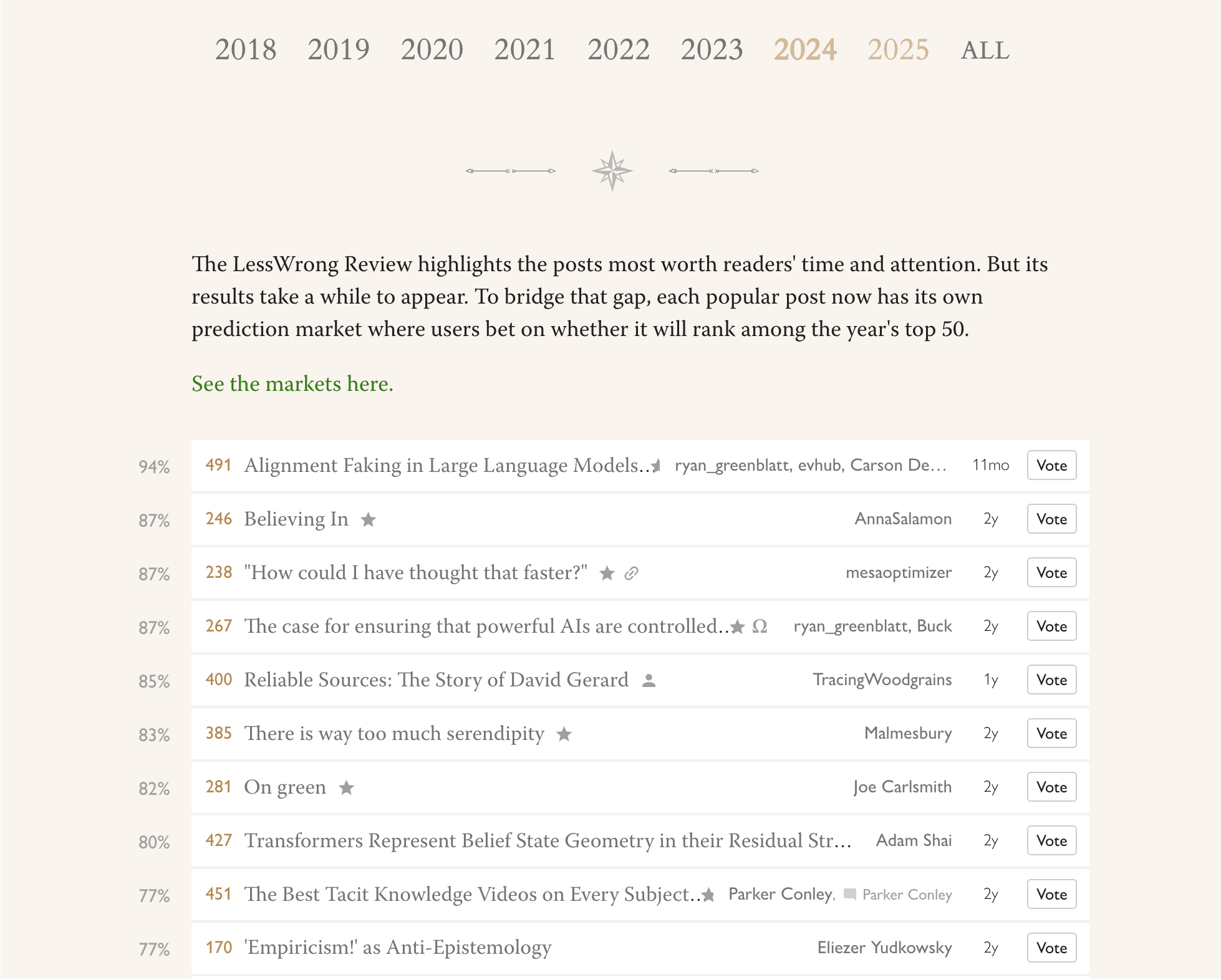

The predicted winners for future years of the review are now visible on the Best of LessWrong page! Here are the top ten guesses for the currently ongoing 2024 review:

(I've already voted on several of these! I doctored the screenshot to hide my votes.)

I think LessWrong's annual review is better than karma at finding the best and most enduring posts. Part of the dream for the review prediction markets is bringing some of that high-quality signal from the future into the present. Currently, if a post's prediction market has a high enough probability, its karma is shown in gold on the post item.

Currently the markets are pretty thinly traded, but I think they already have decent signal. They could do a lot better, I think, with a little more smart trading. It would be a nice bonus if this UI attracted a bit more betting.

Hopefully coming soon: a tag on the markets indicating which year's review they'll be in, to make it a bit easier for consistency traders to make their bag.

I spent some time Thursday morning arguing with Habryka about the intended use of react downvotes. I think I now have a fairly compact summary of his position.

PSA: When to upvote and downvote a react

Upvote a react when you think it's helpful to the conversation (or at least, not antihelpful) and you agree with it. Imagine a react were a comment. If you would agree-upvote it and not karma-downvote it, you can upvote the react.

Downvote a react when you think it's unhelpful for the conversation. This might be because you think the react isn't being used for its intended purpose, because you think people are going through and noisily agree-reacting to loads of passages in a back-and-forth to create an impression of consensus, or for other reasons. If, when you're imagining a react were a comment, you would karma-downvote the comment, you might downvote the react.

It's not really feasible for the feature to rely on people reading this PSA to work well. The correct usage needs to be obvious.

I'm inclined to agree, but at least this is an improvement over it only living in Habryka's head. It may be that this + moderation is basically sufficient, as people seem to have mostly caught on to the intended patterns.

follow up: if you would disagree-vote with a react but not karma downvote, you can use the opposite react.

Sometimes running to stand still is the right thing to do

It's nice when good stuff piles up into even more good stuff, but sometimes it doesn't:

- Sometimes people are worried that they will habituate to caffeine and lose any benefit from taking it.

- Most efforts to lose weight are only temporarily successful (unless using medicine or surgery).

- The hedonic treadmill model claims it’s hard to become durably happier.

- Productivity hacks tend to stop working.

These things are like the Red Queen’s race in Through the Looking-Glass: always running to stay in the same place. But I think there’s a pretty big difference between running that keeps you exactly where you would have been if you hadn’t bothered, running that moves you a little way and then stops, and running that keeps you from drifting in some direction.

I’m not sure what we should call such things, but one idea is: hamster wheels for things that make no difference; bungee runs for things that let you move a bit in some direction, but only so long as you keep running; and backwards escalators for things where you’re fighting to stay in the same place rather than to move anywhere (named for the grand international pastime of running down rising escalators).

I don't know which kind of thing is most common, but I like being able to ask which dynamic is at play. For example, I wonder if weight loss efforts are often more like backwards escalators than hamster wheels. People tend to get fatter as they get older. Maybe people who are trying (but failing) to lose weight are gaining weight more slowly than similar people who aren’t trying to do so?

Or, for caffeine, my guess is that most people will have more energy than baseline if they take caffeine every day, even though any given dose will have less of an effect than the same dose would in someone caffeine-naive. So they've bungee ran (done a bungee run?) a little way forward, and that's as far as they'll go.

I am currently considering whether productivity hacks, which I’ve sworn off, are worth doing even though they only last for a little while. The extra, but finite, productivity could be worth it. (I think this would count as another bungee run.)
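One way to make the taxonomy concrete is to compare your trajectory while putting in effort against a counterfactual where you didn't bother. The sketch below does that; the function name, the `tol` threshold, and all the example numbers are made up for illustration, and real data would be far noisier.

```python
def classify(with_effort: list[float], without_effort: list[float], tol: float = 0.5) -> str:
    """Label an effort by comparing your trajectory to a no-effort counterfactual.

    Both lists give your position (weight, energy, happiness, ...) at the same
    time steps. `tol` is an assumed threshold for "no meaningful change".
    """
    gaps = [w - wo for w, wo in zip(with_effort, without_effort)]
    drift_with = with_effort[-1] - with_effort[0]
    drift_without = without_effort[-1] - without_effort[0]
    if all(abs(g) <= tol for g in gaps):
        # You end up where you would have been anyway.
        return "hamster wheel"
    if abs(drift_with) <= tol and abs(drift_without) > tol:
        # You stay put while the no-effort baseline drifts away.
        return "backwards escalator"
    if (abs(drift_without) <= tol and abs(drift_with) > tol
            and abs(with_effort[-1] - with_effort[-2]) <= tol):
        # You moved a bit relative to a flat baseline, then plateaued.
        return "bungee run"
    return "doesn't fit the taxonomy"


# Weight holds steady while the no-effort counterfactual creeps up.
print(classify([80, 80, 80, 80], [80, 81, 82, 83]))  # backwards escalator
# Daily caffeine: a one-time bump in energy, then a plateau.
print(classify([5, 6, 6, 6], [5, 5, 5, 5]))  # bungee run
# An effort that changes nothing.
print(classify([5, 5, 5], [5, 5, 5]))  # hamster wheel
```

Note that the sketch only looks at trajectories while you keep going; checking the "you have to keep running to stay there" part would need data on what happens after you stop.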

I'd be interested to hear examples that fit within or break this taxonomy.

Weight science is awful, so grain of salt here, but: losing weight and gaining it back is thought to be more harmful than maintaining a constant weight, especially if either of those was fast. It's probably still good if you get to a new lower trajectory, even if that trajectory eventually takes you to your old weight, but usually when I hear about this it's dramatic gains over a fairly short period.

An incomplete and poorly vetted list:

- calorie counting[1] or restrictive diets:
  - harder to get a full swath of micronutrients
  - osteoporosis
  - fatigue
  - worse brain function
  - muscle loss
  - durable reduction[2] in resting metabolic rate
  - weakened immune system
  - generally lower energy
  - electrolyte imbalance. I believe you have to really screw up to get this, but it can give you a heart attack.
- stimulants
  - too many are definitely bad for your heart
- excess exercise
  - injuries
  - joint problems, especially likely at a high weight
- Ozempic
  - We don't know what they are yet, but I'll be surprised if there are literally zero.
- Problems you can get even if you do everything right
  - something something gallbladder
  - screws with your metabolism in ways similar to eating excess calories or fat
  - increase in cholesterol
  - ChatGPT says it increases type 2 diabetes. That's surprising to me, and if it happens it's through complicated hormonal stuff.
- Regain: everything bad about high weight, but worse.

[1] People will probably bring up the claim that low calories extend lifespan. In the only primate study I'm aware of, low-cal diets indeed reduced deaths from old age, but increased deaths from disease and anesthesia.

[2] I think some of the reduction just comes from being lighter, which is inconvenient but not a problem. But it does seem like people who lose and regain weight have a lower BMR than people who stayed at the same weight.

Because when you lose weight you lose a mix of fat and muscle, but when you gain weight you gain mostly fat if you don't exercise (and people usually don't, because they think it's optional). The result is a higher body-fat percentage, which is actually the relevant metric for health, not weight.
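To make the arithmetic concrete, here is a sketch with made-up numbers: a hypothetical 80 kg person at 25% body fat who loses 10 kg, assumed to be 75% fat and 25% lean mass, then regains the 10 kg as pure fat (no exercise). The split fractions are illustrative assumptions, not measured values.

```python
def body_fat_pct(fat_kg: float, lean_kg: float) -> float:
    """Fat mass as a percentage of total body mass."""
    return 100 * fat_kg / (fat_kg + lean_kg)


def lose_then_regain(fat_kg: float, lean_kg: float, delta_kg: float,
                     fat_share_lost: float, fat_share_regained: float):
    # Losing delta_kg removes an assumed mix of fat and lean mass...
    fat_kg -= delta_kg * fat_share_lost
    lean_kg -= delta_kg * (1 - fat_share_lost)
    # ...and regaining the same delta_kg adds back a different assumed mix.
    fat_kg += delta_kg * fat_share_regained
    lean_kg += delta_kg * (1 - fat_share_regained)
    return fat_kg, lean_kg


# Hypothetical: 20 kg fat + 60 kg lean (80 kg, 25% body fat).
fat, lean = lose_then_regain(20.0, 60.0, 10.0, fat_share_lost=0.75, fat_share_regained=1.0)
print(fat + lean, body_fat_pct(fat, lean))  # 80.0 28.125
```

Same scale weight at the end, but body fat has gone from 25% to about 28%.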

There has been a rash of highly upvoted quick takes recently that don't meet our frontpage guidelines. They are often timely, perhaps because they're political, pitch something to the reader, or are inside baseball. These are all fine or even good things to write on LessWrong! But I (and the rest of the moderation team I talked to) still want to keep the content on the frontpage of LessWrong timeless.

Unlike posts, we don't go through each quick take and manually assign it to be frontpage or personal (and posts are treated as personal until they're actively frontpaged). Quick takes are instead treated more like frontpage by default, but we do have the ability to move them to personal.

I'm writing this because a bunch of us are planning to be more active about moving quick takes off the frontpage. I also might link to this comment to clarify what's happening in cases of confusion.

(not very specific to this decision)

Something feels wonky about the way quick takes have reduced features (no title, tags, worse searchability, no filtering) but a ton of the best content ends up there. I think a lot of it is something like feeling that you have to write an Official Post and will feel vaguely bad if it doesn't go well as a top-level post, whereas Quick Takes feel emotionally cheap.

idk how to solve this more cleanly, but maybe this datapoint of how it feels to me is useful

if there was a thing which was more fully featured but somehow emotionally cheap that would be neat. I think friction of the top level post publishing process might be a lot of it?

maybe a way to make the datatype of shortform comments closer to posts, and a way for readers to be like "hey make this a post please" and you can easily switch it over.

oh! and the time lag between clicking yes on post and getting frontpaged, especially with the uncertainty of whether it will be, is actually a pretty large chunk of why top-level posts feel emotionally weighty. this isn't as true for EAF. having most of the frontpage decisions made very rapidly would actually be a huge QOL improvement here.

Only if there was a way to convert to post, otherwise you mostly just feel bad for having classified it wrong. But if there was a way, yes, absolutely!

I agree about the long delay in frontpaging, so it's been one of my side projects to get that time down. I've trained a logistic classifier to predict the eventual destination of a post, and currently mods see those predictions when they process posts. If the predictions perform well for a while, we'll have them go live and review the classifications retrospectively.

I'd like to be able to see such quick takes on the homepage, like how I can see personal blogposts on the homepage (even though logged-out users can't).

Are you hiding them from everyone? Can I opt into seeing them?

I forgot that I could choose not to filter out personal blogposts; I think I will set this to "default" from now on. Feels like there's probably lots of decent content that I haven't been seeing.

I would strongly prefer for you to not move quick takes off the front page until there's a way for me to opt into seeing them

Noted. I'm not planning to revert the change, but I will try and track this cost.

FWIW, I think you might suffer less from this than you think. I believe every quick take I removed from the frontpage today was made after a post on the topic had been made, and, in most or all cases, after the post had been officially moved to personal.

(EDIT: or, perhaps, the conclusion I should draw from my previous paragraph is that adding this feature won't help you that much, because the distribution of tag filters among the user base will mean few enough people see and upvote the quick take that it won't appear on the frontpage for you)

If this is solely about patching the hack of, e.g., "make a political post, get moved to personal blog, make a quick take with basically the same content so you retain visibility", I am much less bothered. Is that the main case where you've done this, or intend to?

There is a strong force in web forums to slide toward news and inside baseball; the primary goal here is to fight against that. It is a bad filter for new users if a lot of what they see on first visiting the LessWrong homepage is discussion of news, recent politics, and the epistemic standards of LessWrong. Many good users are not attracted by these, and for those who aren't put off, it's bad culture to set this as the default topic of discussion.

(Forgive me if I'm explaining what is already known, I'm posting in case people hadn't heard this explanation before; we talked about it a lot when designing the frontpage distinction in 2017/8.)

I hadn't heard/didn't recall that rationale, thanks! I wasn't tracking the culture setting for new users facet, that seems reasonable and important

I observe that https://www.lesswrong.com/posts/BqwXYFtpetFxqkxip/mikhail-samin-s-shortform?commentId=dtmeRXPYkqfDGpaBj isn't frontpage-y but remains on the homepage even after many mods have seen it. This suggests that the mods were just patching the hack. (But I don't know what other shortforms they've hidden, besides the political ones, if any.)

I had a very wrong model of what quick takes are. I was thinking like, not good enough for a post, put it in a quick take.

My perception is that it's not exactly about goodness, it's more like, a post must conform to certain standards*. In the same way that a scientific paper must meet certain standards to get published in a peer-reviewed journal, but a non-publishable paper could still present novel and valuable scientific findings.

*and, even though I've been reading LW since 2012, I'm still not clear on what those standards are or how to meet them

Yeah. I think you can post anything as a personal post, and the gods of LW may take a fancy to it, as is their purview, and put it on the front page.

FYI the particular thing I care about here is less "our usual literal frontpage criteria", and more people doing things that seem aimed at bypassing the frontpage criteria, particularly for the purpose of getting attention on a promotional thing (which may be somewhat different from kave's take).

I think when I'm tempted to use the following reacts, I shouldn't and should use a different one. Feel free to call me out on using these reacts, though ideally on things that are published later than this shortform (I'm not going to remove old reacts I made):

- Skeptical. I think I should probably just use a probability here

- Missed the point. I think I mostly don't like this when I see it used; it feels like an illicitly specific-but-not-evidenced claim against the writing it’s applied to.

- Locally invalid. It's tempting to rejoin "locally invalid" to a poor argument, but I think most written arguments are somewhat locally invalid (in a strict sense of validity), and this is true whether they're good or bad arguments. So this basically boils down to "I don't like this argument", but makes it sound like I'm saying something specific and epistemically helpful.

- With a heavy heart, I checked it's true and I checked it's false. I like these a lot, but I feel like they don't sufficiently communicate whatever evidence I collected, and so I should just comment saying that evidence if I want to say something helpful.

Suppose you are a government that thinks some policy will be good for your populace in aggregate over the long-term (that is, it's a Kaldor-Hicks improvement). For example, perhaps some tax reform you're excited about.

But this reform (we assume) is quite unpopular with a few people who would suffer concentrated losses. You're tempted to include in the policy a big cash transfer that makes those people happy (that is, making it closer to Pareto optimal). But you're worried about levering up too much on your guess that this is a good policy.

Here's one thing you can do. You auction securities (that is, tradeable assets) that pay off as follows: if you raise $X through auctioning off the securities, you are committed to the policy (including the big cash transfer) and the security converts into one that tracks something about how well your populace is doing (like a share of an index fund or something). If you raise less than that, the owner of the security gets back the money they spent on the asset.
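A minimal sketch of the settlement rule, assuming every security sells at a single clearing price and converts into exactly one index-tracking unit. The names `settle`, `Settlement`, `price`, and `target` are hypothetical, and the auction itself (how the price is discovered) is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Settlement:
    enacted: bool  # True if the policy (with its cash transfer) goes ahead
    index_units: dict = field(default_factory=dict)  # buyer -> index-tracking units
    refunds: dict = field(default_factory=dict)  # buyer -> cash returned


def settle(holdings: dict, price: float, target: float) -> Settlement:
    """Settle the conditional securities once the auction closes.

    holdings maps each buyer to how many securities they bought at `price`.
    If the total raised reaches `target`, the government is committed to the
    policy and each security converts into one index-tracking unit; otherwise
    every buyer gets back what they paid.
    """
    raised = price * sum(holdings.values())
    if raised >= target:
        return Settlement(enacted=True, index_units=dict(holdings))
    return Settlement(
        enacted=False,
        refunds={buyer: price * n for buyer, n in holdings.items()},
    )


# Hypothetical auction: 15 securities sold at 100 each.
print(settle({"alice": 10, "bob": 5}, price=100.0, target=1000.0))
print(settle({"alice": 10, "bob": 5}, price=100.0, target=2000.0))
```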

Ignoring some annoying details like the operational costs of this scheme or the forgone interest while waiting for the security to activate a branch of the conditional, the value of that security (which should equal the price you paid for it, if you didn't capture any surplus) is just the value of the security it converts to.

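(Solve \(qV + (1-q)P = P\) for the price \(P\), where \(V\) is the value of the security it converts to and \(q\) is the probability that the auction raises enough.)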
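Spelled out as a minimal derivation, assuming risk-neutral pricing and the same simplifications as above (no operating costs, no forgone interest). Write \(P\) for the price paid, \(V\) for the value of the security it converts into, and \(q > 0\) for the probability that the auction raises enough to commit to the policy; these symbols are introduced here for illustration. The buyer gets \(V\) with probability \(q\) and their \(P\) back otherwise:

```latex
\begin{aligned}
P &= qV + (1-q)P \\
P - (1-q)P &= qV \\
qP &= qV \\
P &= V \qquad (q > 0)
\end{aligned}
```

The probability of enactment drops out, so the price just tracks the value of the converted security in the world where the policy goes ahead.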

So this scheme lets you raise the cash for your policy under exactly the conditions when the auction "thinks" the value of the security increases sufficiently. Which is kind of neat.

Could exciting biotech progress lessen the societal pressure to make AGI?

Suppose we reach a temporary AI development pause. We don't know how long the pause will last; we don't have a certain end date nor is it guaranteed to continue. Is it politically easier for that pause to continue if other domains are having transformative impacts?

I've mostly thought this is wishful thinking. Most people don't care about transformative tech; the absence of an alternative path to a good singularity isn't the main driver of societal AI progress.

But I've updated some here. I think that another powerful technology might make a sustained pause an easier sell. My impression (not super-substantiated) is that advocacy for nuclear has kind of collapsed as solar has more resoundingly outstripped fossil fuels. (There was a recent ACX post that mentioned something like this).

There's a world where the people who care the most to push on AI say something like "well, yeah, it would be overall better if we pushed on AI, but given that we have biotech, we might as well double down on our strengths".

Ofc, there are also a lot of disanalogies between nuclear/solar and AI/bio, but the argument smells a little less like cope to me now.