Beware General Claims about “Generalizable Reasoning Capabilities” (of Modern AI Systems)

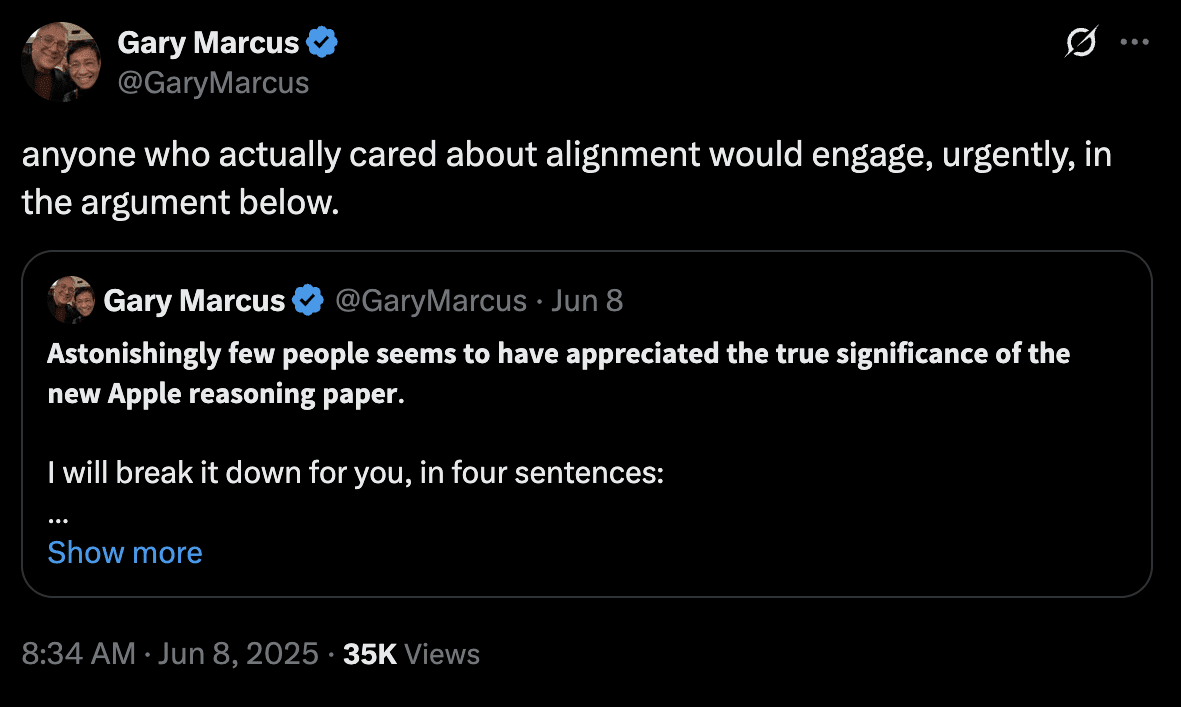

1. Late last week, researchers at Apple released a paper provocatively titled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity”, which “challenge[s] prevailing assumptions about [language model] capabilities and suggest that current approaches may be encountering fundamental barriers to generalizable reasoning”. Normally I refrain from publicly commenting on newly released papers. But then I saw the following tweet from Gary Marcus:

I have always wanted to engage thoughtfully with Gary Marcus. In a past life (as a psychology undergrad), I read both his work on infant language acquisition and his 2001 book The Algebraic Mind; I found both insightful and interesting. His Twitter suggests that he is thoughtful and willing to call it like he sees it. If he's right about language models hitting fundamental barriers, it's worth understanding why; if not, it's worth explaining where his analysis went wrong.

As a result, instead of writing a quick, off-the-cuff response in a few 280-character tweets, I read the paper and Gary Marcus’s Substack post, reproduced some of the paper’s results, and then wrote this 4000-word post.

Ironically, given that it's currently June 11th (two days after my last tweet was posted), my final tweet provides two examples of the planning fallacy.

2. I don’t want to bury the lede here. While I find some of the observations interesting, I was quite disappointed by the paper given the amount of hype around it. The paper seems to reflect generally sloppy work, and the authors overclaim what their results show (albeit not more so than the average ML conference submission). The paper fails to back up the authors’ claim that language models cannot “reason” due to “fundamental limitations”, or even (if you permit some snark) their claim that they performed “detailed analysis of reasoning traces”.

By now, others have highlighted many of the issues with the paper: see