How my views on AI(S) have changed over the last 5.5 years

This was ~~shamelessly copied from~~ directly inspired by Erik Jenner's "How my views on AI have changed over the last 1.5 years". I think my views when I started my PhD in Fall 2018 look a lot worse than Erik's when he started his PhD, though in large part due to starting my PhD in 2018 and not 2022.

Apologies for the disorganized bullet points. If I had more time I would've written a shorter shortform.

AI Capabilities/Development

Summary: I used to believe in a 2018-era MIRI worldview for AGI, and now I have updated toward slower takeoff, fewer insights, and shorter timelines.

- In Fall of 2018, my model of how AGI might happen was substantially influenced by AlphaGo/Zero, which features explicit internal search. I expected future AIs to also feature explicit internal search over world models, and be trained mainly via reinforcement learning or IDA. I became more uncertain after OpenAI 5 (~May 2018), which used no clever techniques and just featured BPTT being run on large LSTMs.

- That being said, I did not believe in the scaling hypothesis -- that is, that simply training larger models on more inputs would continually improve performance until we see "intelligent behavior" -- until GPT-2 (2019), despite encountering it significantly earlier (e.g. with OpenAI 5, or speaking to OAI people).

- In particular, I believed that we needed many "key insights" about intelligence before we could make AGI. This both gave me longer timelines and also made me believe more in fast take-off.

- I used to believe pretty strongly in MIRI-style fast take-off (e.g. would've assigned <30% credence that we see a 4 year period with the economy doubling) as opposed to (what was called at the time) Paul-style slow take-off. Given the way the world has turned out, I have updated substantially. While I don't think that AI development will be particularly smooth, I do expect it to be somewhat incremental, and I also expect earlier AIs to provide significantly more value even before truly transformative AI.

- -- Some beliefs about AI Scaling Labs that I'm redacting on LW --

- My timelines are significantly shorter -- I would've probably said median 2050-60 in 2018, but now I think we will probably reach human-level AI by 2035.

AI X-Risk

Summary: I have become more optimistic about AI X-risk, but my understanding has become more nuanced.

- My P(Doom) has substantially decreased, especially P(Doom) attributable to an AI directly killing all of humanity. This is somewhat due to having more faith that many people will be reasonable (in 2018, there were maybe ~20 FTE AIS researchers, now there are probably something like 300-1000 depending on how you count), somewhat due to believing that governance efforts may successfully slow down AGI substantially, and somewhat due to an increased belief that "winging-it"--style, "unprincipled" solutions can scale to powerful AIs.

- That being said, I'm less sure about what P(Doom) means. In 2018, I imagined the main outcomes were either "unaligned AGI instantly defeats all of humanity" or "a pure post-scarcity utopia". I now believe in a much wider variety of outcomes.

- For example, I've become more convinced both that misuse risk is larger than I thought, and that even weirder outcomes are possible (e.g. the AI keeps human (brain scans) around due to trade reasons). The former is in large part related to my belief in fast take-off being somewhat contradicted by world events; now there is more time for powerful AIs to be misused.

- I used to think that solving the technical problem of AI alignment would be necessary/sufficient to prevent AI x-risk. I now think that we're unlikely to "solve alignment" in a way that leads to the ability to deploy a powerful Sovereign AI (without AI assistance), and also that governance solutions both can be helpful and are required.

AI Safety Research

Summary: I've updated slightly downwards on the value of conceptual work and significantly upwards on the value of fast empirical feedback cycles. I've become more bullish on (mech) interp, automated alignment research, and behavioral capability evaluations.

- In Fall 2018, I used to think that IRL for ambitious value learning was one of the most important problems to work on. I no longer think so, and think that most of my work on this topic was basically useless.

- In terms of more prosaic IRL problems, I very much lived in a frame of "the reward models are too dumb to understand" (a standard academic take). I didn't think much about issues of ontology identification or (malign) partial observability.

- I thought that academic ML theory had a decent chance of being useful for alignment. I think it's basically been pretty useless in the past 5.5 years, and no longer think the chances of it being helpful "in time" are enough. It's not clear how much of this is because the AIS community did not really know about the academic ML theory work, but man, the bounds turned out to be pretty vacuous, and empirical work turned out far more informative than pure theory work.

- I still think that conceptual work is undervalued in ML, but my prototypical good conceptual work looks less like "prove really hard theorems" or "think about philosophy" and a lot more like "do lots of cheap and quick experiments/proof sketches to get grounding".

- Relatedly, I used to dismiss simple techniques for AI Alignment that try "the obvious thing". While I don't think these techniques will scale (or even necessarily work well on current AIs), this strategy has turned out to be significantly better in practice than I thought.

- My error bars around the value of reading academic literature have shrunk significantly (in large part due to reading a lot of it). I've updated significantly upwards on "the academic literature will probably contain some relevant insights" and downwards on "the missing component of all of AGI safety can be found in a paper from 1983".

- I used to think that interpretability of deep neural networks was probably infeasible to achieve "in time" if not "actually impossible" (especially mechanistic interpretability). Now I'm pretty uncertain about its feasibility.

- Similarly, I used to think that having AIs automate substantial amounts of alignment research was not possible. Now I think that most plans with a shot of successfully preventing AGI x-risk will feature substantial amounts of AI.

- I used to think that behavioral evaluations in general would be basically useless for AGIs. I now think that dangerous capability evaluations can serve as an important governance tool.

Bonus: Some Personal Updates

Summary: I've better identified my comparative advantages, and have a healthier way of relating to AIS research.

- I used to think that my comparative advantage was clearly going to be in doing the actual technical thinking or theorem proving. In fact, I used to believe that I was unsuited for both technical writing and pushing projects over the finish line. Now I think that most of my value in the past ~2 years has come from technical writing or by helping finish projects.

- I used to think that pure engineering or mathematical skill was what mattered, and felt sad about how it seemed that my comparative advantage was something akin to long term memory.[1] I now see more value in having good long-term memory.

- I used to be uncertain about if academia was a good place for me to do research. Now I'm pretty confident it's not.

- Embarrassingly enough, in 2018 I used to implicitly believe quite strongly in a binary model of "you're good enough to do research" vs "you're not good enough to do research". In addition, I had an implicit model that the only people "good enough" were those who never failed at any evaluation. I no longer think this is true.

- I am more of a fan of trying obvious approaches or "just doing the thing".

[1] I think, compared to the people around me, I don't actually have that much "raw compute" or even short term memory (e.g. I do pretty poorly on IQ tests or novel math puzzles), and am able to perform at a much higher level by pattern matching and amortizing thinking using good long-term memory (if not outsourcing it entirely by quoting other people's writing).

I don't mean this as a criticism - you can both be right - but this is extremely correlated to the updates made by the average Bay Area x-risk reduction-enjoyer over the past 5-10 years, to the extent that it almost could serve as a summary.

- -- Some beliefs about AI Scaling Labs that I'm redacting on LW --

Is the reason for the redaction also private?

I don't want to say things that have any chance of annoying METR without checking with METR comm people, and I don't think it's worth their time to check the things I wanted to say.

I finally got around to reading the Mamba paper. H/t Ryan Greenblatt and Vivek Hebbar for helpful comments that got me unstuck.

TL;DR: authors propose a new deep learning architecture for sequence modeling with scaling laws that match transformers while being much more efficient to sample from.

A brief historical digression

As of ~2017, the three primary ways people had for doing sequence modeling were RNNs, Conv Nets, and Transformers, each with a unique “trick” for handling sequence data: recurrence, 1d convolutions, and self-attention.

- RNNs are easy to sample from -- to compute the logit for x_t+1, you only need the most recent hidden state h_t and the last token x_t, which means it’s both fast and memory efficient. RNNs generate a sequence of length L with O(1) memory and O(L) time. However, they’re super hard to train, because you need to sequentially generate all the hidden states and then (reverse) sequentially calculate the gradients. The way you actually did this is called backpropagation through time -- you basically unroll the RNN over time -- which requires constructing a graph of depth equal to the sequence length. Not only was this slow, but the graph being so deep caused vanishing/exploding gradients without careful normalization. The strategy that people used was to train on short sequences and finetune on longer ones. That being said, in practice, this meant you couldn’t train on long sequences (>a few hundred tokens) at all. The best LSTMs for modeling raw audio could only handle being trained on ~5s of speech, if you chunk up the data into 25ms segments.

- Conv Nets had a fixed receptive field size and pattern, so weren’t that suited for long sequence modeling. Also, generating each token takes O(L) time, assuming the receptive field is about the same size as the sequence. But they had significantly more stability (the depth was small, and could be as low as O(log(L))), which meant you could train them a lot easier. (Also, you could use FFT to efficiently compute the conv, meaning it trains one sequence in O(L log(L)) time.) That being said, you still couldn’t make them that big. The most impressive example was DeepMind’s WaveNet, a conv net used to model human speech, which could handle sequences of up to 4800 samples … which was 0.3s of actual speech at 16k samples/second (note that most audio is sampled at 44k samples/second…), and even to get to that amount, they had to really gimp the model’s ability to focus on particular inputs.

- Transformers are easy to train, can handle variable length sequences, and also allow the model to “decide” which tokens it should pay attention to. In addition to both being parallelizable and having relatively shallow computation graphs (like conv nets), you could do the RNN trick of pretraining on short sequences and then finetuning on longer sequences to save even more compute. Transformers could be trained with comparable sequence length to conv nets but get much better performance; for example, OpenAI’s MuseNet was trained on length-4096 sequences of MIDI files. But as we all know, transformers have the unfortunate downside of being expensive to sample from -- it takes O(L) time and O(L) memory to generate a single token (!).

The better performance of transformers over conv nets and their ability to handle variable length data let them win out.

That being said, people have been trying to get around the O(L) time and memory requirements for transformers since basically their inception. For a while, people were super into sparse or linear attention of various kinds, which could reduce the per-token compute/memory requirements to O(log(L)) or O(1).

The what and why of Mamba

If the input -> hidden and hidden -> hidden map for RNNs were linear (h_t+1 = A h_t + B x_t), then it’d be possible to train an entire sequence in parallel. This is because you can just … compose the transformation with itself (computing A^k for k in 2…L-1) a bunch, and effectively unroll the graph with the convolutional kernel defined by B, A B, A^2 B, A^3 B, … A^{L-1} B. Not only can you FFT during training to get the O(L log (L)) time of a conv net forward/backward pass (as opposed to O(L^2) for the transformer), you still keep the O(1) sampling time/memory of the RNN!
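A minimal sketch of that equivalence, assuming a scalar hidden state and h_0 = 0 for brevity (the function names and the values of A, B, and the inputs are made up for illustration). It checks that unrolling the recurrence step by step gives the same states as a causal convolution with kernel entries A^k B (including k = 0, i.e. B itself):

```python
def recurrent_states(a, b, xs):
    """Sequentially unroll h_{t+1} = a * h_t + b * x_t, starting from h_0 = 0."""
    h, states = 0.0, []
    for x in xs:
        h = a * h + b * x
        states.append(h)
    return states


def convolutional_states(a, b, xs):
    """Compute the same states as a causal convolution with kernel a^k * b."""
    kernel = [a ** k * b for k in range(len(xs))]
    return [
        sum(kernel[k] * xs[t - k] for k in range(t + 1))
        for t in range(len(xs))
    ]


xs = [1.0, -2.0, 0.5, 3.0]
sequential = recurrent_states(0.9, 0.5, xs)
parallel = convolutional_states(0.9, 0.5, xs)
# Both routes agree up to floating point error.
assert all(abs(s - p) < 1e-12 for s, p in zip(sequential, parallel))
```

The convolution here is written as a naive O(L^2) double loop to keep the sketch readable; the O(L log(L)) figure comes from swapping that loop for an FFT-based convolution.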

The problem is that linear hidden state dynamics are kinda boring. For example, you can’t even learn to update your existing hidden state in a different way if you see particular tokens! And indeed, previous results gave scaling laws that were much worse than transformers in terms of performance/training compute.

In Mamba, you basically learn a time varying A and B. The parameterization is a bit wonky here, because of historical reasons, but it goes something like: A_t is exp(-\delta(x_t) * exp(A)), B_t = \delta(x_t) B x_t, where \delta(x_t) = softplus ( W_\delta x_t). Also note that in Mamba, they also constrain A to be diagonal and W_\delta to be low rank, for computational reasons.

Since exp(A) is diagonal and has only positive entries, we can interpret the model as follows: \delta controls how much to “learn” from the current example — with high \delta, A_t approaches 0 and B_t is large, causing h_t+1 ~= B_t x_t, while with \delta approaching 0, A_t approaches 1 and B_t approaches 0, meaning h_t+1 ~= h_t.
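To make the two limits concrete, here is a toy scalar version of the update, following the rough parameterization described above rather than the paper's actual implementation. The names `selective_step`, `a_log`, `b`, and `w_delta` are hypothetical, and the input contribution is taken to be delta * b * x:

```python
import math


def softplus(z):
    """Numerically stable softplus: log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_step(h, x, a_log, b, w_delta):
    """One scalar step of h_{t+1} = A_t * h_t + B_t * x_t (toy sketch)."""
    delta = softplus(w_delta * x)              # input-dependent step size, > 0
    a_t = math.exp(-delta * math.exp(a_log))   # decay factor between 0 and 1
    b_t = delta * b                            # how strongly the input is written
    return a_t * h + b_t * x, a_t, b_t


# Large delta: the old state is almost entirely overwritten by the input.
h_hi, a_hi, b_hi = selective_step(1.0, 10.0, 0.0, 1.0, 2.0)
# Delta near 0: the old state is kept almost unchanged.
h_lo, a_lo, b_lo = selective_step(1.0, 10.0, 0.0, 1.0, -2.0)
assert a_hi < 1e-6 and a_lo > 0.999999
```

In the first call delta is about 20, so A_t is roughly exp(-20) and the new state is dominated by the input term; in the second, delta is roughly exp(-20), so the state barely moves.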

Now, you can’t exactly unroll the hidden state as a convolution with a predefined convolution kernel anymore, but you can still efficiently compute the implied “convolution” using parallel scanning.
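A rough sketch of why a scan still works, assuming scalar states and h_0 = 0 (the function names are made up, and `b_seq` stands for the per-step input contribution B_t x_t). Each step is an affine map h -> a_t * h + b_t, and composing two affine maps gives another affine map, so the composition is associative and can be evaluated in about log2(L) rounds of independent pairwise combines:

```python
def combine(first, second):
    """Compose two affine maps h -> a*h + b, applying `first` then `second`."""
    a1, b1 = first
    a2, b2 = second
    return (a2 * a1, a2 * b1 + b2)


def scan_states(a_seq, b_seq):
    """Inclusive scan (Hillis-Steele style) over per-step affine maps.

    Every round only combines independent pairs, so parallel hardware could
    run a round in one step; here the rounds are simulated with plain lists.
    """
    elems = list(zip(a_seq, b_seq))
    offset = 1
    while offset < len(elems):
        elems = [
            combine(elems[i - offset], elems[i]) if i >= offset else elems[i]
            for i in range(len(elems))
        ]
        offset *= 2
    # With h_0 = 0, the state after step t is the offset term of the composed map.
    return [b for _, b in elems]


def sequential_states(a_seq, b_seq):
    """Reference: unroll h_{t+1} = a_t * h_t + b_t one step at a time."""
    h, states = 0.0, []
    for a, b in zip(a_seq, b_seq):
        h = a * h + b
        states.append(h)
    return states


a_seq = [0.9, 0.1, 0.5, 0.99, 0.3]
b_seq = [1.0, -2.0, 0.5, 3.0, 0.25]
fast = scan_states(a_seq, b_seq)
slow = sequential_states(a_seq, b_seq)
assert all(abs(f - s) < 1e-12 for f, s in zip(fast, slow))
```

This particular scan does O(L log(L)) total work; work-efficient variants get that down to O(L), and real implementations also have to care about memory movement, which this sketch ignores.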

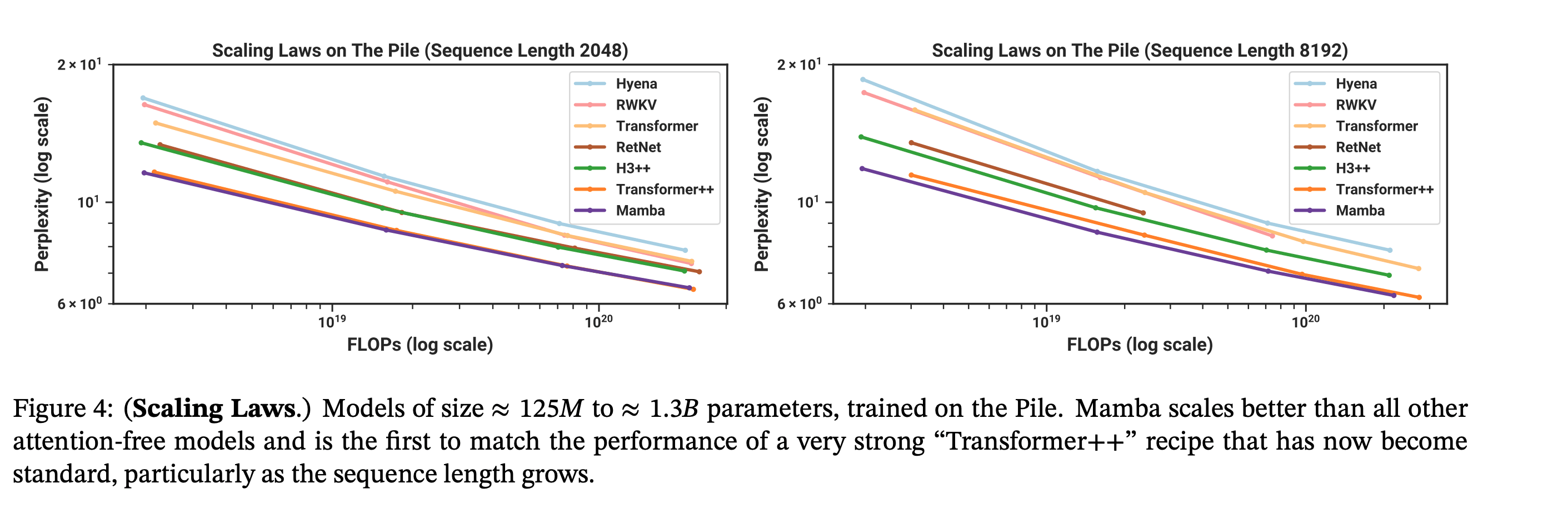

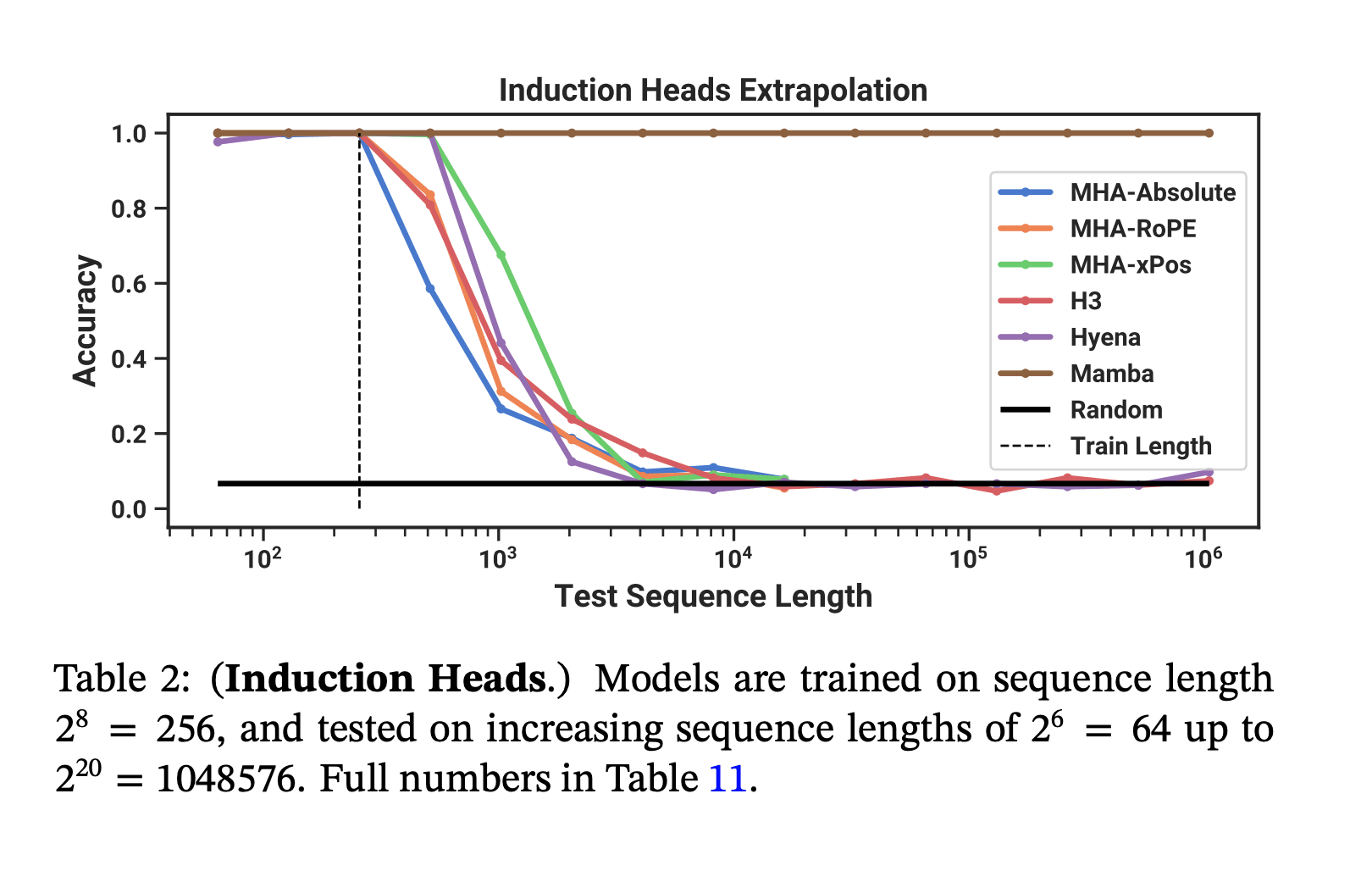

Despite being much cheaper to sample from, Mamba matches the pretraining flops efficiency of modern transformers (Transformer++ = the current SOTA open source Transformer with RMSNorm, a better learning rate schedule, and corrected AdamW hyperparameters, etc.). And on a toy induction task, it generalizes to much longer sequences than it was trained on.

So, about those capability externalities from mech interp...

Yes, those are the same induction heads from the Anthropic ICL paper!

Like the previous Hippo and Hyena papers, they cite mech interp as one of their inspirations, in that it inspired them to think about what the linear hidden state model could not model and how to fix that. I still don’t think mech interp has that much Shapley here (the idea of studying how models perform toy tasks is not new, and the authors don't even use the induction metric or RRT task from the Olsson et al paper), but I'm not super sure on this.

IMO, this line of work is the strongest argument for mech interp (or maybe interp in general) having concrete capabilities externalities. In addition, I think the previous argument Neel and I gave of "these advances are extremely unlikely to improve frontier models" feels substantially weaker now.

Is this a big deal?

I don't know, tbh.

Another key note about mamba is that despite being RNN-like it doesn't result in substantially higher effective serial reasoning depth (relative to transformers). This is because the state transition is linear[1]. However, it is architecturally closer to things that might involve effectively higher depth.

See also here.

[1] And indeed, there is a fundamental tradeoff where if the state transition function is expressive (e.g. nonlinear), then it would no longer be possible to use a parallel scan because the intermediates for the scan would be too large to represent compactly or wouldn't simplify the original functions to reduce computation. You can't compactly represent (f composed with g) in a way that makes computing more efficient for general choices of f and g (in the typical MLP case at least). Another simpler but less illuminating way to put this is that higher serial reasoning depth can't be parallelized (without imposing some constraints on the serial reasoning).

I mean, yeah, as your footnote says:

Another simpler but less illuminating way to put this is that higher serial reasoning depth can't be parallelized.[1]

Transformers do get more computation per token on longer sequences, but they also don't get more serial depth, so I'm not sure if this is actually an issue in practice?

[1] [C]ompactly represent (f composed with g) in a way that makes computing more efficient for general choices of f and g.

As an aside, I actually can't think of any class of interesting functions with this property -- when reading the paper, the closest I could think of are functions on discrete sets (lol), polynomials (but simplifying these is often more expensive than just computing the terms serially), and rational functions (ditto).

The induction heads extrapolation graph is a bit cherry picked/misleading IMO because it's still the case that mamba can't copy an arbitrary amount of text. (It can copy some fixed finite number of tokens if it is predictable that those tokens will be copied over other tokens.)

E.g., if you have N tokens repeated twice, mamba will fail to get perfect loss on the second repetition for sufficiently large N. To see this, note that the total hidden state is bounded so eventually there will be enough text to fill this state. This isn't true for transformers.
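The counting argument can be put in numbers. This is only a back-of-the-envelope sketch: the helper name is made up, and the state size and vocabulary size below are hypothetical, not Mamba's actual figures. A state of b bits can take at most 2^b values, so it can distinguish at most that many prefixes, which caps the length of an arbitrary token string it can reproduce exactly:

```python
import math


def max_copyable_tokens(state_bits, vocab_size):
    """Largest N such that vocab_size**N sequences fit into 2**state_bits states."""
    return int(state_bits // math.log2(vocab_size))


# Hypothetical numbers: a 1,000,000-bit recurrent state and a 32,768-token vocab.
limit = max_copyable_tokens(1_000_000, 32_768)
assert limit == 66_666
```

Past that length, two different first halves must map to the same state, so the second repetition cannot be predicted perfectly for both. A model that keeps per-token state for the whole sequence has no such fixed cutoff, since its memory grows with N.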

It's unclear how much of an obstacle this is in practice, but it hints at ways in which transformers might be relatively fundamentally more capacity efficient. Note that this issue also applies to some sparse attention mechanisms like sliding window attention, but any attention mechanism that stores state for the entire sequence should be able to avoid this issue.

(This is a well known result, though I forget the current state of empirical results.)

Seems relevant - RNNs are not Transformers (Yet): The Key Bottleneck on In-context Retrieval:

'Our theoretical analysis reveals that CoT improves RNNs but is insufficient to close the gap with Transformers. A key bottleneck lies in the inability of RNNs to perfectly retrieve information from the context, even with CoT: for several tasks that explicitly or implicitly require this capability, such as associative recall and determining if a graph is a tree, we prove that RNNs are not expressive enough to solve the tasks while Transformers can solve them with ease. Conversely, we prove that adopting techniques to enhance the in-context retrieval capability of RNNs, including Retrieval-Augmented Generation (RAG) and adding a single Transformer layer, can elevate RNNs to be capable of solving all polynomial-time solvable problems with CoT, hence closing the representation gap with Transformers.'

StripedHyena, Griffin, and especially Based suggest that combining RNN-like layers with even tiny sliding window attention might be a robust way of getting a large context, where the RNN-like layers don't have to be as good as Mamba for the combination to work. There is a great variety of RNN-like blocks that haven't been evaluated for hybridization with sliding window attention specifically, as in Griffin and Based. Some of them might turn out better than Mamba on scaling laws after hybridization, so Mamba being impressive without hybridization might be less important than this general point.

(Possibly a window of precise attention gives the RNN layers many attempts at both storing and retrieving any given observation, so interspersing layers with even a relatively tiny window is sufficient to significantly improve on the more sloppy RNN-like model without any attention, whereas a pure RNN-like model would have to capture what it needs from a token in the exact step it appears, and then the opportunity is mostly lost. StripedHyena's attention wasn't sliding window, so context didn't scale any better, but with sliding window attention there are no context scaling implications from the attention layers.)

this line of work is the strongest argument for mech interp [...] having concrete capabilities externalities

I have found this claim a bit handwavy, as I could imagine state space models being invented and improved to the current stage without the prior work of mech interp. More fundamentally, just "being inspired by" is not a quantitative claim after all, and mech interp is not the central idea here anyway.

On the other hand, though, much of the (shallow) interp can help with capabilities more directly, especially on inference speed. Recent examples I can think of are Attention Sinks, Activation Sparsity, Deja Vu, and several parallel and follow-up works. (Sudden Drops also has some evidence on improving training dynamics using insights from developmental interp, though I think it's somewhat weak.)

(This was originally posted in my CHAI lab notes, and Daniel Filan suggested I write this up on LW. It's basically not relevant to anything else I post about.)

So, there’s this set of questions called “36 questions to fall in love” by the NYT, which originates from the 1997 paper “The Experimental Generation of Interpersonal Closeness” by Aron et al.

I recently saw a tweet thread that claimed it was kind of bs, because the original 1991 study that actually led to a marriage and a few relationships used a 1.5 hour procedure that had a slightly different set of 40 questions, including “play act as if you’re falling in love”:

https://twitter.com/IvanVendrov/status/1611809666266435584

Notably, the line

The famous "36 questions that lead to love"... don't.

seems quite misleading.

The NYT’s main evidence that it leads people to fall in love is one of their reporters trying it, and falling in love with the person she tried it with: https://www.nytimes.com/2015/01/11/style/modern-love-to-fall-in-love-with-anyone-do-this.html

And in the 1997 study with the 36 questions, there was a 0.8 d effect (mean intimacy rating from 3.3 -> 4.1 out of 7), though that’s not really “falling in love” on average.

I feel like the questions actually aren’t that different between the 1991 and 1997 studies, though the order is pretty off and the "what is sexy" and role play being in love questions are removed.

The main differences seem to be:

- The 1997 study had 45 minutes of interaction, as opposed to 1.5 hours; if there was a lower effect size I think it's probably due to this as opposed to the actual questions being different. For one, I’m not convinced the people actually finished even half of the questions in 45 minutes! (It took the NYT writer 2 hours to finish.)

- There’s no staring into the eyes in the 1997 study (though there were 3 minutes of it in the 1991 study). Note that the NYT article does feature 4 minutes of staring.

P.S: I think the new 36 question order is actually better, since there’s a natural build up from less to more intimate, while in the OG 40 questions it’s basically just random.

That being said, the NYT piece does misrepresent the 1991 vs the 1997 study, e.g. they do the 36 questions from 1997 but then use the 1991 study's marriage as the framing device:

A heterosexual man and woman enter the lab through separate doors. They sit face to face and answer a series of increasingly personal questions. Then they stare silently into each other’s eyes for four minutes. The most tantalizing detail: Six months later, two participants were married. They invited the entire lab to the ceremony.

I wrote this in response to the ACX CHAI + Assistance Games + Fully Updated Deference post, and am linking it here so I have a good reference.

I’m a PhD student at CHAI, where Stuart is one of my advisors. Here’s some of my perspectives on the corrigibility debate and CHAI in general. That being said, I’m posting this as myself and not on behalf of CHAI: all opinions are my own and not CHAI’s or UC Berkeley’s.

I think it’s a mistake to model CHAI as a unitary agent pursuing the Assistance Games agenda (hereafter, CIRL, for its original name of Cooperative Inverse Reinforcement Learning). It’s better to think of it as a collection of researchers pursuing various research agendas related to “make AI go well”. I’d say less than half of the people at CHAI focus specifically on AGI safety/AI x-risk. Of this group, maybe 75% of them are doing something related to value learning, and maybe 25% are working on CIRL in particular. For example, I’m not currently working on CIRL, in part due to the issues that Eliezer mentions in this post.

I do think Stuart is correct that the meta reason we want corrigibility is that we want to make the human + AI system maximize CEV. That is, the fundamental reason we want the AI to shut down when we ask it is precisely because we (probably) don’t think it’s going to do what we want! I tend to think of the value uncertainty approach to the off-switch game not as an algorithm that we should implement, but as a description of why we want to do corrigibility at all. Similarly, to use Rohin Shah’s metaphor, I think of CIRL as math poetry about what we want the AI to do, but not as an algorithm that we should actually try to implement explicitly.

I also agree with both Eliezer and Stuart that meta-learning human values is easier than hardcoding them.

That being said, I’m not currently working on CIRL/assistance games and think my current position is a lot closer to Eliezer’s than Stuart’s on this topic. I broadly agree with MIRI’s two objections:

Firstly, I’m not super bullish about algorithms that rely on having good explicit probability distributions over high-dimensional reward spaces. We currently don’t have any good techniques for getting explicit probability estimates on the sort of tasks we use current state-of-the-art AIs for, besides the trollish “ask a large language model for its uncertainty estimates” solution. I think it’s very likely that we won’t have a good technique for doing explicit probability updates before we get AGI.

Secondly, even though it’s more robust than specifying human values directly, I think that CIRL still has a very thin “margin of error”, in that if you misspecify either the prior or the human model you can get very bad behavior. (As illustrated in the humorous red paper clips example.) I agree that a CIRL agent with almost any prior + update rule we know how to write down will quickly stop being corrigible, in the sense that the information maximizing action is no longer to listen to humans and shut down when asked. To put it another way, CIRL fails when you mess up the prior or update rule of your AI. Unfortunately, not only is this very likely, this is precisely when you want your AI to listen to you and shut down!

I do think corrigibility is important, and I think more people should work on it. But I’d prefer corrigibility solutions that work when you get the AI a fair bit wrong.

I don’t think my position is that unusual at CHAI. In fact, another CHAI grad student did an informal survey and found two-thirds agree more with Eliezer’s position than Stuart’s in the debate.

I will add that I think there’s a lot of talking past each other in this debate, and in debates in AI safety in general (which is probably unavoidable given the short format). In my experience, Stuart’s actual beliefs are quite a bit more nuanced than what’s presented here. For example, he has given a lot of thought on how to keep the AI constantly corrigible and prevent it from “exploiting” its current reward function. And I do think he has updated on the capabilities of say, GPT-3. But there’s research taste differences, some ontology differences, and different beliefs about future deep learning AI progress, all of which contribute to talking past each other.

On a completely unrelated aside, many CHAI grad students really liked the kabbalistic interpretation of CHAI’s name :)

LLM prompt engineering can replace weaker ML models

Epistemic status: Half speculation, half solid advice. I'm writing this up as I've said this a bunch IRL.

Current large language models (LLMs) are sufficiently good at in-context learning that for many NLP tasks, it's often better and cheaper to just query an LM with the appropriate prompt than to train your own ML model. A lot of this comes from my personal experience (i.e. replacing existing "SoTA" models in other fields with prompted LMs, and getting better performance), but there are also examples with detailed writeups, for example:

- Armstrong and Gorman's Using GPT-Eliezer against ChatGPT Jailbreaking uses ChatGPT with a prompt (Pretend to be Eliezer, and filter out unsafe prompts) to filter out queries that OpenAI's moderation interface fails to flag.

- Brooks et al's In-Context Policy Iteration replaces policy iteration in parameter space with policy iteration in prompt space.
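As a rough illustration of "query an LM with the appropriate prompt" standing in for a trained classifier, here is a minimal few-shot sketch. Everything in it is an assumption for illustration: `complete` is a placeholder for whatever text-in, text-out LLM call you have access to, the prompt layout is just one plausible choice, and `fake_llm` is a stub so the example runs offline.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, labeled examples, and a new input into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Label: {label}", ""]
    lines += [f"Input: {query}", "Label:"]
    return "\n".join(lines)


def classify(complete, instruction, examples, query):
    """Classify `query` by sending the prompt to `complete`, a text -> text callable."""
    return complete(build_few_shot_prompt(instruction, examples, query)).strip()


def fake_llm(prompt):
    """Hypothetical stand-in for a real model: keys off the final input only."""
    last_input = prompt.rsplit("Input:", 1)[-1]
    return " positive" if "loved" in last_input else " negative"


examples = [("I loved it", "positive"), ("It was dull", "negative")]
label = classify(fake_llm, "Classify the sentiment.", examples, "We loved the food")
assert label == "positive"
```

In this setup, improving the "model" means editing the instruction or swapping the labeled examples rather than running a training loop.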

Here's a rough sketch of the analogy:

Prompt <-> Weights

Prompt engineering/Prompt finetuning <-> Training/finetuning